IBM DS8900F Performance Best Practices and Monitoring

Total Pages: 16

File Type:pdf, Size:1020Kb


Recommended publications


High Performance Solid State Storage Under Linux

High Performance Solid State Storage Under Linux. Eric Seppanen, Matthew T. O’Keefe, David J. Lilja. Department of Electrical and Computer Engineering, University of Minnesota, Minneapolis, MN 55455. fsepp0019, mokeefe, [email protected] Abstract: Solid state drives (SSDs) allow single-drive performance that is far greater than disks can produce. Their low latency and potential for parallel operations mean that they are able to read and write data at speeds that strain operating system I/O interfaces. Additionally, their performance characteristics expose gaps in existing benchmarking methodologies. We discuss the impact on Linux system design of a prototype PCI Express SSD that operates at least an order of magnitude faster than most drives available today. We develop benchmarking strategies and focus on several areas where current Linux systems need improvement, and suggest methods of taking full advantage of such high-performance solid state storage. We demonstrate that an SSD can perform with high throughput, high operation rates, and low latency under the most difficult conditions. This suggests that high-performance SSDs can dramatically improve parallel I/O performance for future ... II. BACKGROUND. A. Solid State Drives. In many ways, SSD performance is far superior to that of disk drives. With random read performance nearly identical to sequential read performance, seek-heavy workloads can perform orders of magnitude faster with an SSD compared to a disk drive. SSDs have other benefits besides performance: they consume less power and are more resistant to physical abuse than disk drives. But SSDs bear the burden of NAND flash management. Large flash block erase sizes cause write amplification and delays, and drives must have large page-mapping tables to support small writes efficiently.

IBM FlashSystem A9000 Product Guide (Version 12.3)

IBM FlashSystem A9000 Product Guide (Updated for Version 12.3.2). Bert Dufrasne, Stephen Solewin, Francesco Anderloni, Roger Eriksson, Lisa Martinez. This IBM® Redbooks® Product Guide is an overview of the main characteristics, features, and technologies that are used in IBM FlashSystem® A9000 Models 425 and 25U, with IBM FlashSystem A9000 Software V12.3.2. IBM FlashSystem A9000 storage system uses the IBM FlashCore® technology to help realize higher capacity and improved response times over disk-based systems and other competing flash and solid-state drive (SSD)-based storage. FlashSystem A9000 offers world-class software features that are built with IBM Spectrum™ Accelerate. The extreme performance of IBM FlashCore technology with a grid architecture and comprehensive data reduction creates one powerful solution. Whether you are a service provider who requires highly efficient management or an enterprise that is implementing cloud on a budget, FlashSystem A9000 provides consistent and predictable microsecond response times and the simplicity that you need. As a cloud optimized solution, FlashSystem A9000 suits the requirements of public and private cloud providers who require features, such as inline data deduplication, multi-tenancy, and quality of service. It also uses powerful software-defined storage capabilities from IBM Spectrum Accelerate, such as Hyper-Scale technology, VMware, and storage container integration. FlashSystem A9000 is a modular system that consists of three grid controllers and a flash enclosure. An external view of the Model 425 is shown in Figure 1. Figure 1: IBM FlashSystem A9000 Model 425. © Copyright IBM Corp. 2017, 2018. All rights reserved.

SAS Enters the Mainstream

SAS enters the mainstream. By the InfoStor staff. http://www.infostor.com/articles/article_display.cfm?Section=ARTCL&C=Newst&ARTICLE_ID=295373&KEYWORDS=Adaptec&p=23 Although adoption of Serial Attached SCSI (SAS) is still in the infancy stages, the next 12 months bode well for proponents of the relatively new disk drive/array interface. For example, in a recent InfoStor QuickVote reader poll, 27% of the respondents said SAS will account for the majority of their disk drive purchases over the next year, although Serial ATA (SATA) topped the list with 37% of the respondents, followed by Fibre Channel with 32%. Only 4% of the poll respondents cited the aging parallel SCSI interface (see figure). However, surveys of InfoStor’s readers are skewed by the fact that almost half of our readers are in the channel (primarily VARs and systems/storage integrators), and the channel moves faster than end users in terms of adopting (or at least kicking the tires on) new technologies such as serial interfaces. To get a more accurate view of the pace of adoption of serial interfaces such as SAS, consider market research predictions from firms such as Gartner and International Data Corp. (IDC). Yet even in those firms’ predictions, SAS is coming on surprisingly strong, mostly at the expense of its parallel SCSI predecessor. For example, Gartner predicts SAS disk drives will account for 16.4% of all multi-user drive shipments this year and will garner almost 45% of the overall market in 2009 (see figure on p. 18).

Faster Oracle Performance with IBM FlashSystem

IBM Systems and Technology Group, May 2013. Thought Leadership White Paper. Faster Oracle performance with IBM FlashSystem. Executive summary: This whitepaper discusses methods for improving Oracle® database performance using flash storage to accelerate the most resource-intensive data that slows performance across the board. To this end, it discusses methods for identifying I/O performance bottlenecks, and it points out components that are the best candidates for migration to a flash storage appliance. An in-depth explanation of flash technology and possible implementations are also included. The problem of I/O wait time: Often, additional processing power alone will do little or nothing to improve Oracle performance. This is because the processor, no matter how fast, finds itself constantly waiting on mechanical storage devices for its data. While every other component in the “data chain” moves in terms of computation times and the raw speed of electricity through a circuit, hard drives move mechanically, relying on physical movement around a magnetic platter to access information. In the last 20 years, processor speeds have increased at a geometric rate. At the same time, however, conventional storage access times have only improved marginally (see Figure 1). The result is a massive performance gap, felt most painfully by database servers, which typically carry out far more I/O transactions than other systems. Super fast processors and massive amounts of bandwidth are often wasted as storage devices take several milliseconds just to access the requested data. Figure 1: Comparing processor and storage performance improvements. When servers wait on storage, users wait on servers.

IBM DS8880: Hybrid Cloud Integration with Transparent Cloud Tiering

Accelerate with IBM Storage: IBM DS8880: Hybrid cloud integration with Transparent Cloud Tiering. Craig Gordon, Consulting I/T Specialist, IBM Washington Systems Center. [email protected] © Copyright IBM Corporation 2018. Washington Systems Center - Storage. Accelerate with IBM Storage Webinars: The Free IBM Storage Technical Webinar Series Continues in 2018... Washington Systems Center – Storage experts cover a variety of technical topics. Audience: Clients who have or are considering acquiring IBM Storage solutions. Business Partners and IBMers are also welcome. To automatically receive announcements of upcoming Accelerate with IBM Storage webinars, Clients, Business Partners and IBMers are welcome to send an email request to [email protected]. Located in the Accelerate with IBM Storage Blog: https://www.ibm.com/developerworks/mydeveloperworks/blogs/accelerate/?lang=en Also, check out the WSC YouTube Channel here: https://www.youtube.com/playlist?list=PLSdmGMn4Aud-gKUBCR8K0kscCiF6E6ZYD&disable_polymer=true 2018 Webinars: January 9 – DS8880 Easy Tier; January 17 – Start 2018 Fast! What's New for Spectrum Scale V5 and ESS; February 8 – VersaStack - Solutions For Fast Deployments; February 16 – TS7700 R4.1 Phase 2 GUI with Live Demo; February 22 – DS8880 Transparent Cloud Tiering Live Demo; March 1 – Spectrum Scale/ESS Application Case Study (Register Here: https://ibm2.webex.com/ibm2/onstage/g.php?MTID=e25146b6c1207cb081f4392087fb6f73a); March 7 – Spectrum Storage Management, Control, Insights, Foundation; what’s the difference? (Register Here: https://ibm2.webex.com/ibm2/onstage/g.php?MTID=e3165dfc6c698c8fcb83132c95ae6dfe7); March 15 – IBM FlashSystem A9000/R and SVC Configuration Best Practices (Register Here: https://ibm2.webex.com/ibm2/onstage/g.php?MTID=e87d423c5cccbdefbbb61850d54f70f4b).

IBM Power® Systems for SAS® Empowers Advanced Analytics Harry Seifert, Laurent Montaron, IBM Corporation

Paper 4695-2020. IBM Power® Systems for SAS® Empowers Advanced Analytics. Harry Seifert, Laurent Montaron, IBM Corporation. ABSTRACT: For more than 40 years of partnership between IBM and SAS®, clients have been benefiting from the added value brought by IBM’s infrastructure platforms to deploy SAS analytics, and now SAS Viya’s evolution of modern analytics. IBM Power® Systems and IBM Storage empower SAS environments with infrastructure that does not make tradeoffs among performance, cost, and reliability. The unified solution stack, comprising server, storage, and services, reduces the compute time, controls costs, and maximizes resilience of the SAS environment with ultra-high bandwidth and highest availability. INTRODUCTION: We will explore how to deploy SAS on IBM Power Systems platforms and unleash the full potential of the infrastructure, to reduce deployment risk, maximize flexibility and accelerate insights. We will start by reviewing IBM and SAS’s technology relationship and the current state of SAS products on IBM Power Systems. Then we will look at some of the infrastructure options to deploy SAS 9.4 on IBM Power Systems and IBM Storage, while maximizing resiliency and throughput by leveraging best practices. Next, we will look at SAS Viya, which introduces changes to the underlying infrastructure requirements while remaining able to be deployed alongside a traditional SAS 9.4 operation. We’ll explore the various deployment modes available. Finally, we’ll look at tuning practices and reference materials available for a deeper dive in deploying SAS on IBM platforms. SAS: 40 YEARS OF PARTNERSHIP WITH IBM: IBM and SAS have been partners since the founding of SAS.

Best Practices for IBM DS8000 and IBM z/OS HyperSwap with IBM Copy Services Manager

Best Practices for IBM DS8000 and IBM z/OS HyperSwap with IBM Copy Services Manager. Thomas Luther, Alexander Warmuth, Marcelo Takakura. IBM Redbooks, May 2019, SG24-8431-00. Note: Before using this information and the product it supports, read the information in “Notices” on page vii. First Edition (May 2019). This edition applies to IBM Copy Services Manager (CSM) V6.2.3 with IBM DS8000 Version 8.5. © Copyright International Business Machines Corporation 2019. All rights reserved. Note to U.S. Government Users Restricted Rights -- Use, duplication or disclosure restricted by GSA ADP Schedule Contract with IBM Corp. Contents: Notices; Trademarks; Preface; Authors; Now you can become a published author, too!; Comments welcome; Stay connected to IBM Redbooks. Part 1. Introduction and planning. Chapter 1. Introduction: 1.1 IBM Copy Services Manager overview; 1.2 CSM licenses; 1.2.1 CSM licenses for z/OS platforms; 1.2.2 CSM licenses for distributed server platforms; 1.3 z/OS HyperSwap overview; 1.3.1 z/OS HyperSwap: Not so basic anymore; 1.3.2 CSM sessions that support HyperSwap; 1.3.3 z/OS HyperSwap functions; 1.3.4 HyperSwap sequence; 1.3.5 Planned and unplanned HyperSwap; 1.4 CSM and HyperSwap communication flow; 1.5 GDPS Metro solutions; 1.6 IBM Resiliency Orchestration and CSM. Chapter 2. IBM Copy Services Manager and IBM z/OS HyperSwap implementation topologies

IBM FlashSystem A9000 • Product Overview

IBM FlashSystem A9000 Version 12.2 Product Overview. IBM GC27-8583-07. Note: Before using this document and the product it supports, read the information in “Notices” on page 119. Edition notice: Publication number: GC27-8583-07. This publication applies to IBM FlashSystem A9000 version 12.2 and to all subsequent releases and modifications until otherwise indicated in a newer publication. © Copyright IBM Corporation 2016, 2018. US Government Users Restricted Rights – Use, duplication or disclosure restricted by GSA ADP Schedule Contract with IBM Corp. Contents: Figures; Tables; About this document; Intended audience; Document conventions; Related information and publications; IBM Publications Center; Sending or posting your comments; Getting information, help, and service. Chapter 1. Introduction: Architecture; Flash enclosure; Grid controllers; Back-end interconnect; Logical architecture; Functionality; Specifications; Performance

FWB's Guide to Storage

GtoS 2nd Ed. Book Page i Friday, March 27, 1998 12:05 PM. FWB’s Guide to Storage, Second Edition. Copyright Notice: This manual is copyrighted by FWB Software, LLC (FWB) with all rights reserved. Your rights with regard to this manual are subject to the restrictions and limitations imposed by the copyright laws of the United States of America. Under the copyright laws, this manual may not be copied, reproduced, translated, transmitted or reduced to any printed or electronic medium or to any machine-readable form, in whole or in part, without the written consent of FWB. © 1991, 1996 By FWB Software, LLC. December 1996. Part No.: 07-00841-201. Trademarks: Hard Disk ToolKit, RAID ToolKit, SpaceMaker ToolKit, and CD-ROM ToolKit are trademarks of FWB Software, LLC. FWB is a registered trademark of FWB Software, LLC. All brand and product names are trademarks or registered trademarks of their respective holders. Credits: This guide was written by Norman Fong with help from Joan Carter, Steve Dalton, Bruce Dundas, Eric Herzog, Al Pierce, Stuart Saraquse, and Fred Swan. It was designed, edited, and composed by Joan Carter with help from Allan Levite. Illustrations were produced by Deane Morris. The original version of this manual was written in 1991 by Leslie Feldman, Norman Fong, Kevin Kachadourian, Neil Strudwick, and Paul Worthington as part of FWB’s Hard Disk ToolKit 1.x manual. Dedication: This book is dedicated to my family and friends who put up with the long hours put into this effort.

Introducing IBM z/OS Data Set Encryption

Getting Started with z/OS Data Set Encryption. Bill White, Philippe Richard, Cecilia Carranza Lewis, Romoaldo Santos, Eysha Shirrine Powers, Isabel Arnold, David Rossi, Kasper Lindberg, Eric Rossman, Andy Coulson, Jacky Doll, Brad Habbershaw, Thomas Liu, Ryan McCarry. Version 7. Redbooks. Draft Document for Review January 30, 2021 7:14 pm 8410edno.fm. IBM Redbooks, December 2020, SG24-8410-01. Note: Before using this information and the product it supports, read the information in “Notices” on page ix. Second Edition (December 2020). This edition applies to the required and optional hardware and software components needed for z/OS data set encryption. This document was created or updated on January 30, 2021. © Copyright International Business Machines Corporation 2020. All rights reserved. Note to U.S. Government Users Restricted Rights -- Use, duplication or disclosure restricted by GSA ADP Schedule Contract with IBM Corp. Draft Document for Review April 28, 2021 12:46 pm 8410TOC.fm. Contents: Notices; Trademarks; Preface; Authors; Now you can become a published author, too!; Comments welcome; Stay connected to IBM Redbooks. Chapter 1. Protecting data in today’s IT environment: 1.1 Which data; 1.1.1 Data at-rest; 1.1.2 Data in-use; 1.1.3 Data in-flight; 1.1.4 Sensitive data; 1.2 Why protect data; 1.2.1 Accidental exposure; 1.2.2 Insider attacks.

IBM FlashSystem 9100

IBM FlashSystem Data Sheet. IBM FlashSystem 9100: Flexible NVMe storage powered by IBM Spectrum Virtualize and IBM FlashCore technologies. Highlights: • Accelerate business apps with NVMe and IBM FlashCore technologies • Increase storage cost-efficiency with compression and deduplication • Leverage AI to optimize storage operations and efficiency • Transform IT infrastructure with IBM Spectrum Virtualize • Increase ROI with IBM Spectrum Virtualize • Extend a rich set of data services across all your storage systems • Simplify data reuse and protection with multicloud solutions. Real-time and AI-based applications are where business is moving rapidly. In fact, within the next few years, industry analysts predict that half of all enterprise IT infrastructure will employ some form of machine learning or AI capabilities to improve enterprise productivity, manage business risks and drive cost reductions. Also, the majority of Fortune 2000 companies will have at least one mission-critical workload that leverages real-time big data analytics. For both AI and real-time applications, the amount of data that must be rapidly captured and processed will drive the need for the performance of both flash-based storage and solutions leveraging NVMe technologies.1 For decades, IBM has offered a range of enterprise class high-performance, ultra-low latency storage solutions.2 Now, IBM FlashSystem 9100 combines the performance of flash and end-to-end NVMe with the reliability and innovation of IBM FlashCore technology and the rich feature set and high availability of IBM Spectrum Virtualize. This powerful new storage platform provides: The option to use IBM FlashCore modules (FCMs) with inline-hardware compression, data protection and innovative flash management features provided by IBM FlashCore technology, or industry-standard NVMe flash drives.

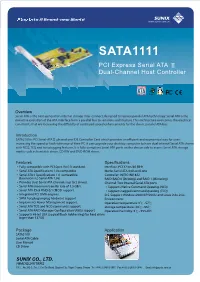

SATA1111 PCI Express Serial ATA Ⅱ Dual-Channel Host Controller

www.sunix.com.tw SATA1111 PCI Express Serial ATA Ⅱ Dual-Channel Host Controller. Overview: Serial ATA is the next-generation internal storage interconnect, designed to replace parallel ATA technology. Serial ATA is the proactive evolution of the ATA interface from a parallel bus to a serial bus architecture. This architecture overcomes the electrical constraints that are increasing the difficulty of continued speed enhancements for the classic parallel ATA bus. Introduction: SATA2100 is a PCI Serial ATA Ⅱ phase one IDE controller card that provides an efficient and economical way for users to increase the speed or fault tolerance of their PC. It can upgrade your desktop computer to have dual internal Serial ATA channels with NCQ, TCQ and hot plugging features. It provides fully compliant Serial ATA ports on the device side to access Serial ATA storage media such as hard disk drives, CD-RW and DVD-ROM drives. Features: ‧Fully compatible with PCI Spec. Ver 2.3 standard. ‧Serial ATA Specifications 1.0a compatible. ‧Serial ATA II Specifications 1.0 compatible (Extensions to Serial ATA 1.0a). ‧Provides dual Serial ATA channels (up to 2 drives). ‧Serial ATA maximum transfer rate of 1.5 Gb/s. ‧Serial ATA Disk RAID 0/1/JBOD support. ‧Integrated PCI DMA engines. ‧SATA hot plug/unplug hardware support. ‧Implements Power Management support. Specifications: Interface: PCI 32 bit/66 MHz. Mode: Serial ATA II phase one. Controller: INITIO INI1622. RAID: RAID 0 (Striping) and RAID 1 (Mirroring). Channel: Two internal Serial ATA ports. ‧Supports Native Command Queuing (NCQ). ‧Supports tagged command queuing (TCQ). O.S. Support: Windows 2000/XP/2003 and Linux 2.4x, 2.6x. Environment: