On the Smoothness of Value Functions and the Existence of Optimal Strategies in Diffusion Models

Total Pages: 16

File Type: pdf, Size: 1020Kb


Recommended publications


Ian R. Porteous 9 October 1930 - 30 January 2011

In Memoriam Ian R. Porteous, 9 October 1930 - 30 January 2011. A tribute by Peter Giblin (University of Liverpool), Będlewo, Poland, 16 May 2011. Caustics 1998: Bill Bruce, disguised as a mathematician; Terry Wall, ever a Pro-Vice-Chancellor. Watch out for Bruce@60, Wall@75, Liverpool, June 2012. With Christopher Longuet-Higgins at the Rank Prize Funds symposium on computer vision in Liverpool, summer 1987, jointly organized by Ian, Joachim Rieger and myself. After a first degree at Edinburgh and National Service, Ian worked at Trinity College, Cambridge, for a BA and then a PhD, at first under William Hodge. Hodge, however, was about to become Secretary of the Royal Society and Master of Pembroke College, Cambridge, so when Michael Atiyah returned from Princeton in January 1957 he took on Ian and also Rolph Schwarzenberger (6 years younger than Ian) as PhD students. Ian's PhD was in algebraic geometry, on the effect of blowing up on Chern classes, published in Proceedings of the Cambridge Philosophical Society in 1960: The behaviour of the Chern classes or of the canonical classes of an algebraic variety under a dilatation has been studied by several authors (Todd, Segre, van de Ven). This problem is of interest since a dilatation is the simplest form of birational transformation which does not preserve the underlying topological structure of the algebraic variety. A relation between the Chern classes of the variety obtained by dilatation of a subvariety and the Chern classes of the original variety has been conjectured by the authors cited above but a complete proof of this relation is not in the literature.

Evolutes of Curves in the Lorentz-Minkowski Plane

Evolutes of curves in the Lorentz-Minkowski plane. Authors: Izumiya Shuichi, Fuster M. C. Romero, Takahashi Masatomo. Journal or publication title: Advanced Studies in Pure Mathematics. Volume: 78. Page range: 313-330. Year: 2018-10-04. URL: http://hdl.handle.net/10258/00009702. doi: info:doi/10.2969/aspm/07810313. Evolutes of curves in the Lorentz-Minkowski plane. S. Izumiya, M. C. Romero Fuster, M. Takahashi. Abstract. We can use a moving frame, as in the case of regular plane curves in the Euclidean plane, in order to define the arc-length parameter and the Frenet formula for non-lightlike regular curves in the Lorentz-Minkowski plane. This leads naturally to a well defined evolute associated to non-lightlike regular curves without inflection points in the Lorentz-Minkowski plane. However, at a lightlike point the curve shifts between a spacelike and a timelike region and the evolute cannot be defined by using this moving frame. In this paper, we introduce an alternative frame, the lightcone frame, that will allow us to associate an evolute to regular curves without inflection points in the Lorentz-Minkowski plane. Moreover, under appropriate conditions, we shall also be able to obtain globally defined evolutes of regular curves with inflection points. We investigate here the geometric properties of the evolute at lightlike points and inflection points. §1. Introduction. The evolute of a regular plane curve is a classical subject of differential geometry on the Euclidean plane, defined to be the locus of the centres of the osculating circles of the curve (cf. [3, 7, 8]).

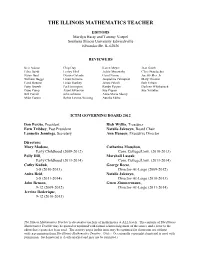

The Illinois Mathematics Teacher

THE ILLINOIS MATHEMATICS TEACHER. EDITORS: Marilyn Hasty and Tammy Voepel, Southern Illinois University Edwardsville, Edwardsville, IL 62026. REVIEWERS: Kris Adams; Chip Day; Karen Meyer; Jean Smith; Edna Bazik; Lesley Ebel; Jackie Murawska; Clare Staudacher; Susan Beal; Dianna Galante; Carol Nenne; Joe Stickles, Jr.; William Beggs; Linda Gilmore; Jacqueline Palmquist; Mary Thomas; Carol Benson; Linda Hankey; James Pelech; Bob Urbain; Patty Bruzek; Pat Herrington; Randy Pippen; Darlene Whitkanack; Dane Camp; Alan Holverson; Sue Pippen; Sue Younker; Bill Carroll; John Johnson; Anne Marie Sherry; Mike Carton; Robin Levine-Wissing; Aurelia Skiba. ICTM GOVERNING BOARD 2012: Don Porzio, President; Rich Wyllie, Treasurer; Fern Tribbey, Past President; Natalie Jakucyn, Board Chair; Lannette Jennings, Secretary; Ann Hanson, Executive Director. Directors: Mary Modene, Early Childhood (2009-2012); Polly Hill, Early Childhood (2011-2014); Cathy Kaduk, 5-8 (2010-2013); Anita Reid, 5-8 (2011-2014); John Benson, 9-12 (2009-2012); Jerrine Roderique, 9-12 (2010-2013); Catherine Moushon, Com. College/Univ. (2010-2013); Marshall Lassak, Com. College/Univ. (2011-2014); George Reese, Director-At-Large (2009-2012); Natalie Jakucyn, Director-At-Large (2010-2013); Gwen Zimmermann, Director-At-Large (2011-2014). The Illinois Mathematics Teacher is devoted to teachers of mathematics at ALL levels. The contents of The Illinois Mathematics Teacher may be quoted or reprinted with formal acknowledgement of the source and a letter to the editor that a quote has been used. The activity pages in this issue may be reprinted for classroom use without written permission from The Illinois Mathematics Teacher. (Note: Occasionally copyrighted material is used with permission. Such material is clearly marked and may not be reprinted.) THE ILLINOIS MATHEMATICS TEACHER, Volume 61, No.

Some Remarks on Duality in S3

GEOMETRY AND TOPOLOGY OF CAUSTICS - CAUSTICS '98. BANACH CENTER PUBLICATIONS, VOLUME 50. INSTITUTE OF MATHEMATICS, POLISH ACADEMY OF SCIENCES, WARSZAWA 1999. SOME REMARKS ON DUALITY IN $S^3$. IAN R. PORTEOUS, Department of Mathematical Sciences, University of Liverpool, Liverpool, L69 3BX, UK, e-mail: [email protected]. Abstract. In this paper we review some of the concepts and results of V. I. Arnol'd [1] for curves in $S^2$ and extend them to curves and surfaces in $S^3$. 1. Introduction. In [1] Arnol'd introduces the concepts of the dual curve and the derivative curve of a smooth ($= C^\infty$) embedded curve in $S^2$. In particular he shows that the evolute or caustic of such a curve is the dual of the derivative. The dual curve is just the unit normal curve, while the derivative is the unit tangent curve. The definitions extend in an obvious way to curves on $S^2$ with ordinary cusps. Then one proves easily that where a curve has an ordinary geodesic inflection the dual has an ordinary cusp and vice versa. Since the derivative of a curve without cusps clearly has no cusps it follows that the caustic of such a curve has no geodesic inflections. This prompts investigating what kind of singularity a curve must have for its caustic to have a geodesic inflection, by analogy with the classical construction of de l'Hôpital, see [2], pages 24-26. In the second and third parts of the paper the definitions of Arnol'd are extended to surfaces in $S^3$ and to curves in $S^3$. The notations are those of Porteous [2], differentiation being indicated by subscripts.

High Energy and Smoothness Asymptotic Expansion of the Scattering Amplitude

HIGH ENERGY AND SMOOTHNESS ASYMPTOTIC EXPANSION OF THE SCATTERING AMPLITUDE. D. Yafaev, Department of Mathematics, University Rennes-1, Campus Beaulieu, 35042, Rennes, France (e-mail: [email protected]). Abstract. We find an explicit expression for the kernel of the scattering matrix for the Schrödinger operator containing at high energies all terms of power order. It turns out that the same expression gives a complete description of the diagonal singularities of the kernel in the angular variables. The formula obtained is in some sense universal since it applies both to short- and long-range electric as well as magnetic potentials. 1. INTRODUCTION. 1. High energy asymptotics of the scattering matrix $S(\lambda) : L_2(\mathbb{S}^{d-1}) \to L_2(\mathbb{S}^{d-1})$ for the Schrödinger operator $H = -\Delta + V$ in the space $\mathcal{H} = L_2(\mathbb{R}^d)$, $d \ge 2$, with a real short-range potential (bounded and satisfying the condition $V(x) = O(|x|^{-\rho})$, $\rho > 1$, as $|x| \to \infty$) is given by the Born approximation. To describe it, let us introduce the operator $\Gamma_0(\lambda)$, $(\Gamma_0(\lambda) f)(\omega) = 2^{-1/2} k^{(d-2)/2} \hat{f}(k\omega)$, $k = \lambda^{1/2} \in \mathbb{R}_+ = (0, \infty)$, $\omega \in \mathbb{S}^{d-1}$, (1.1) of the restriction (up to the numerical factor) of the Fourier transform $\hat{f}$ of a function $f$ to the sphere of radius $k$. Set $R_0(z) = (-\Delta - z)^{-1}$, $R(z) = (H - z)^{-1}$. By the Sobolev trace theorem and the limiting absorption principle the operators $\Gamma_0(\lambda) \langle x \rangle^{-r} : \mathcal{H} \to L_2(\mathbb{S}^{d-1})$ and $\langle x \rangle^{-r} R(\lambda + i0) \langle x \rangle^{-r} : \mathcal{H} \to \mathcal{H}$ are correctly defined as bounded operators for any $r > 1/2$ and their norms are estimated by $\lambda^{-1/4}$ and $\lambda^{-1/2}$, respectively.

Bertini's Theorem on Generic Smoothness

U.F.R. Mathématiques et Informatique, Université Bordeaux 1, 351, Cours de la Libération. Master Thesis in Mathematics: BERTINI'S THEOREM ON GENERIC SMOOTHNESS. Academic year 2011/2012. Supervisor: Prof. Qing Liu. Candidate: Andrea Ricolfi. Introduction. Bertini was an Italian mathematician, who lived and worked in the second half of the nineteenth century. The present dissertation concerns his most celebrated theorem, which appeared for the first time in 1882 in the paper [5], and whose proof can also be found in Introduzione alla Geometria Proiettiva degli Iperspazi (E. Bertini, 1907, or 1923 for the latest edition). The present introduction aims to informally introduce Bertini's Theorem on generic smoothness, with special attention to its recent improvements and its relationships with other kinds of results. Just to set the following discussion in an historical perspective, recall that at Bertini's time the situation was more or less the following: (a) there were no schemes; (b) almost all varieties were defined over the complex numbers; (c) all varieties were embedded in some projective space, that is, they were not intrinsic. On the contrary, this dissertation will cope with Bertini's theorem by exploiting the powerful tools of modern algebraic geometry, by working with schemes defined over any field (mostly, but not necessarily, algebraically closed). In addition, our varieties will be thought of as abstract varieties (at least when over a field of characteristic zero). This fact does not mean that we are neglecting Bertini's original work, containing already all the relevant ideas: the proof we shall present in this exposition, over the complex numbers, is quite close to the one he gave.

Jia 84 (1958) 0125-0165

INSTITUTE OF ACTUARIES. A MEASURE OF SMOOTHNESS AND SOME REMARKS ON A NEW PRINCIPLE OF GRADUATION. BY M. T. L. BIZLEY, F.I.A., F.S.S., F.I.S. [Submitted to the Institute, 27 January 1958] INTRODUCTION. The achievement of smoothness is one of the main purposes of graduation. Smoothness, however, has never been defined except in terms of concepts which themselves defy definition, and there is no accepted way of measuring it. This paper presents an attempt to supply a definition by constructing a quantitative measure of smoothness, and suggests that such a measure may enable us in the future to graduate without prejudicing the process by an arbitrary choice of the form of the relationship between the variables. 2. The customary method of testing smoothness in the case of a series or of a function, by examining successive orders of differences (called hereafter the classical method) is generally recognized as unsatisfactory. Barnett (J.I.A. 77, 18-19) has summarized its shortcomings by pointing out that if we require successive differences to become small, the function must approximate to a polynomial, while if we require that they are to be smooth instead of small we have to judge their smoothness by that of their own differences, and so on ad infinitum. Barnett's own definition, which he recognizes as being rather vague, is as follows: a series is smooth if it displays a tendency to follow a course similar to that of a simple mathematical function. Although Barnett indicates broadly how the word 'simple' is to be interpreted, there is no way of judging decisively between two functions to ascertain which is the simpler, and hence no quantitative or qualitative measure of smoothness emerges; there is a further vagueness inherent in the term 'similar to' which would prevent the definition from being satisfactory even if we could decide whether any given function were simple or not.

Lecture 2 — February 25Th 2.1 Smooth Optimization

Statistical machine learning and convex optimization 2016. Lecture 2, February 25th. Lecturer: Francis Bach. Scribe: Guillaume Maillard, Nicolas Brosse. This lecture deals with classical methods for convex optimization. For a convex function, a local minimum is a global minimum and the uniqueness is assured in the case of strict convexity. In the sequel, $g$ is a convex function on $\mathbb{R}^d$. The aim is to find one (or the) minimum $\theta_* \in \mathbb{R}^d$ and the value of the function at this minimum $g(\theta_*)$. Some key additional properties will be assumed for $g$. Lipschitz continuity: $\forall \theta \in \mathbb{R}^d$, $\|\theta\|_2 \le D \Rightarrow \|g'(\theta)\|_2 \le B$. Smoothness: $\forall \theta_1, \theta_2 \in \mathbb{R}^d$, $\|g'(\theta_1) - g'(\theta_2)\|_2 \le L \|\theta_1 - \theta_2\|_2$. Strong convexity: $\forall \theta_1, \theta_2 \in \mathbb{R}^d$, $g(\theta_1) \ge g(\theta_2) + g'(\theta_2)^\top (\theta_1 - \theta_2) + \frac{\mu}{2} \|\theta_1 - \theta_2\|_2^2$. We refer to Lecture 1 of this course for additional information on these properties. We point out 2 key references: [1], [2]. 2.1 Smooth optimization. If $g : \mathbb{R}^d \to \mathbb{R}$ is a convex $L$-smooth function, we recall that for all $\theta, \eta \in \mathbb{R}^d$: $\|g'(\theta) - g'(\eta)\| \le L \|\theta - \eta\|$. Besides, if $g$ is twice differentiable, this is equivalent to: $0 \preccurlyeq g''(\theta) \preccurlyeq L I$. Proposition 2.1 (Properties of smooth convex functions). Let $g : \mathbb{R}^d \to \mathbb{R}$ be a convex $L$-smooth function. Then, we have the following inequalities: 1. …
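The smoothness and strong convexity conditions quoted in this excerpt can be checked numerically on a simple test function. The following is a minimal sketch, not taken from the lecture notes: it assumes a hypothetical diagonal quadratic $g(\theta) = \frac{1}{2} \sum_i a_i \theta_i^2$, for which $L$ is the largest and $\mu$ the smallest coefficient $a_i$. All names (`A_DIAG`, `is_smooth`, `is_strongly_convex`, `check`) are illustrative.

```python
import random

# Hypothetical test function: g(theta) = 0.5 * sum(a_i * theta_i^2).
# Its Hessian is diag(A_DIAG), so L = max(a_i) and mu = min(a_i).
A_DIAG = [0.5, 2.0, 4.0]
MU, L = min(A_DIAG), max(A_DIAG)


def g(theta):
    return 0.5 * sum(a * t * t for a, t in zip(A_DIAG, theta))


def grad_g(theta):
    return [a * t for a, t in zip(A_DIAG, theta)]


def norm2(v):
    return sum(x * x for x in v) ** 0.5


def is_smooth(t1, t2, tol=1e-9):
    """Check ||g'(t1) - g'(t2)||_2 <= L * ||t1 - t2||_2."""
    diff_grad = [a - b for a, b in zip(grad_g(t1), grad_g(t2))]
    diff = [a - b for a, b in zip(t1, t2)]
    return norm2(diff_grad) <= L * norm2(diff) + tol


def is_strongly_convex(t1, t2, tol=1e-9):
    """Check g(t1) >= g(t2) + g'(t2)^T (t1 - t2) + (mu/2) ||t1 - t2||_2^2."""
    diff = [a - b for a, b in zip(t1, t2)]
    linear = sum(gi * di for gi, di in zip(grad_g(t2), diff))
    return g(t1) >= g(t2) + linear + 0.5 * MU * norm2(diff) ** 2 - tol


def check(trials=1000, seed=0):
    """Test both inequalities on random pairs of points."""
    rng = random.Random(seed)
    for _ in range(trials):
        t1 = [rng.uniform(-3, 3) for _ in A_DIAG]
        t2 = [rng.uniform(-3, 3) for _ in A_DIAG]
        if not (is_smooth(t1, t2) and is_strongly_convex(t1, t2)):
            return False
    return True
```

A random check of this kind can only fail to find a counterexample; it does not prove the inequalities, which for this quadratic follow directly from $\mu I \preccurlyeq g''(\theta) \preccurlyeq L I$.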

Chapter 16 the Tangent Space and the Notion of Smoothness

Chapter 16. The tangent space and the notion of smoothness. We will always assume $K$ algebraically closed. In this chapter we follow the approach of Šafarevič [S]. We define the tangent space $T_{X,P}$ at a point $P$ of an affine variety $X \subset \mathbb{A}^n$ as the union of the lines passing through $P$ and "touching" $X$ at $P$. It results to be an affine subspace of $\mathbb{A}^n$. Then we will find a "local" characterization of $T_{X,P}$, this time interpreted as a vector space, the direction of $T_{X,P}$, only depending on the local ring $\mathcal{O}_{X,P}$: this will allow to define the tangent space at a point of any quasi-projective variety. 16.1 Tangent space to an affine variety. Assume first that $X \subset \mathbb{A}^n$ is closed and $P = O = (0, \ldots, 0)$. Let $L$ be a line through $P$: if $A(a_1, \ldots, a_n)$ is another point of $L$, then a general point of $L$ has coordinates $(ta_1, \ldots, ta_n)$, $t \in K$. If $I(X) = (F_1, \ldots, F_m)$, then the intersection $X \cap L$ is determined by the following system of equations in the indeterminate $t$: $F_1(ta_1, \ldots, ta_n) = \cdots = F_m(ta_1, \ldots, ta_n) = 0$. The solutions of this system of equations are the roots of the greatest common divisor $G(t)$ of the polynomials $F_1(ta_1, \ldots, ta_n), \ldots, F_m(ta_1, \ldots, ta_n)$ in $K[t]$, i.e. the generator of the ideal they generate. We may factorize $G(t)$ as $G(t) = c\, t^e (t - \alpha_1)^{e_1} \cdots (t - \alpha_s)^{e_s}$, where $c \in K$, $\alpha_1, \ldots, \alpha_s \ne 0$, $e, e_1, \ldots, e_s$ are non-negative, and $e > 0$ if and only if $P \in X \cap L$. The number $e$ is by definition the intersection multiplicity at $P$ of $X$ and $L$.
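As an illustration of the intersection multiplicity defined in this excerpt, here is a short worked example. It is not part of the original chapter: the parabola $X = V(y - x^2) \subset \mathbb{A}^2$ is an assumed example chosen for simplicity, with $P = O$ and a line $L$ through $O$ and another point $A(a_1, a_2)$.

```latex
% Assumed example: X = V(F) with F(x, y) = y - x^2, P = O = (0, 0),
% and L the line through O with direction (a_1, a_2) \neq (0, 0).
\[
  F(ta_1, ta_2) = ta_2 - t^2 a_1^2 = t\,(a_2 - t a_1^2).
\]
% Case a_2 \neq 0, a_1 \neq 0:
\[
  G(t) = -a_1^2\, t \left(t - \frac{a_2}{a_1^2}\right), \qquad e = 1 .
\]
% Case a_2 \neq 0, a_1 = 0:
\[
  G(t) = a_2\, t, \qquad e = 1 .
\]
% Case a_2 = 0 (so a_1 \neq 0), i.e. L is the line y = 0:
\[
  G(t) = -a_1^2\, t^2, \qquad e = 2 .
\]
```

In this example the only line through $O$ meeting $X$ with multiplicity greater than 1 is $y = 0$, which matches the usual tangent line to the parabola at the origin.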

Convergence Theorems for Gradient Descent

Convergence Theorems for Gradient Descent. Robert M. Gower. October 5, 2018. Abstract. Here you will find a growing collection of proofs of the convergence of gradient and stochastic gradient descent type methods on convex, strongly convex and/or smooth functions. Important disclaimer: These notes do not compare to a good book or well prepared lecture notes. You should only read these notes if you have sat through my lecture on the subject and would like to see detailed notes based on my lecture as a reminder. Under any other circumstances, I highly recommend reading instead the first few chapters of the books [3] and [1]. 1 Assumptions and Lemmas. 1.1 Convexity. We say that $f$ is convex if $f(tx + (1-t)y) \le t f(x) + (1-t) f(y)$, $\forall x, y \in \mathbb{R}^d$, $t \in [0, 1]$. (1) If $f$ is differentiable and convex then every tangent line to the graph of $f$ lower bounds the function values, that is $f(y) \ge f(x) + \langle \nabla f(x), y - x \rangle$, $\forall x, y \in \mathbb{R}^d$. (2) We can deduce (2) from (1) by dividing by $t$ and re-arranging: $\frac{f(y + t(x - y)) - f(y)}{t} \le f(x) - f(y)$. Now taking the limit $t \to 0$ gives $\langle \nabla f(y), x - y \rangle \le f(x) - f(y)$. If $f$ is twice differentiable, then taking a directional derivative in the $v$ direction on the point $x$ in (2) gives $0 \ge \langle \nabla f(x), v \rangle + \langle \nabla^2 f(x) v, y - x \rangle - \langle \nabla f(x), v \rangle = \langle \nabla^2 f(x) v, y - x \rangle$, $\forall x, y, v \in \mathbb{R}^d$. (3) Setting $y = x - v$ then gives $0 \le \langle \nabla^2 f(x) v, v \rangle$, $\forall x, v \in \mathbb{R}^d$. (4) The above is equivalent to saying that $\nabla^2 f(x) \succeq 0$, that is, $\nabla^2 f(x)$ is positive semi-definite for every $x \in \mathbb{R}^d$. An analogous property to (2) holds even when the function is not differentiable.
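Inequalities (1) and (2) from this excerpt can be tested numerically on a concrete convex function. The sketch below is an illustration only and is not from the notes: it assumes the log-sum-exp function as a hypothetical example of a differentiable convex $f$, whose gradient is the softmax. The helper names (`first_order_gap`, `check_convexity`) are made up for this example.

```python
import math
import random


def f(x):
    """Log-sum-exp, a standard smooth convex function on R^d."""
    m = max(x)  # subtract the max for numerical stability
    return m + math.log(sum(math.exp(xi - m) for xi in x))


def grad_f(x):
    """Gradient of log-sum-exp, which is the softmax of x."""
    m = max(x)
    w = [math.exp(xi - m) for xi in x]
    s = sum(w)
    return [wi / s for wi in w]


def dot(u, v):
    return sum(ui * vi for ui, vi in zip(u, v))


def first_order_gap(x, y):
    """f(y) - f(x) - <grad f(x), y - x>; non-negative when (2) holds."""
    diff = [yi - xi for xi, yi in zip(x, y)]
    return f(y) - f(x) - dot(grad_f(x), diff)


def check_convexity(d=3, trials=1000, seed=0, tol=1e-12):
    """Test inequalities (1) and (2) on random points in [-5, 5]^d."""
    rng = random.Random(seed)
    for _ in range(trials):
        x = [rng.uniform(-5, 5) for _ in range(d)]
        y = [rng.uniform(-5, 5) for _ in range(d)]
        t = rng.random()
        z = [t * xi + (1 - t) * yi for xi, yi in zip(x, y)]
        if f(z) > t * f(x) + (1 - t) * f(y) + tol:  # inequality (1)
            return False
        if first_order_gap(x, y) < -tol:  # inequality (2)
            return False
    return True
```

Calling `check_convexity()` samples random pairs and reports whether any of them violates (1) or (2) beyond a small floating-point tolerance; passing the check is evidence for convexity of the chosen example, not a proof.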

A Convex, Smooth and Invertible Contact Model for Trajectory Optimization

A convex, smooth and invertible contact model for trajectory optimization. Emanuel Todorov, Departments of Applied Mathematics and Computer Science and Engineering, University of Washington. Abstract: Trajectory optimization is done most efficiently when an inverse dynamics model is available. Here we develop the first model of contact dynamics defined in both the forward and inverse directions. The contact impulse is the solution to a convex optimization problem: minimize kinetic energy in contact space subject to non-penetration and friction-cone constraints. We use a custom interior-point method to make the optimization problem unconstrained; this is key to defining the forward and inverse dynamics in a consistent way. The resulting model has a parameter which sets the amount of contact smoothing, facilitating continuation methods for optimization. We implemented the proposed contact solver in our new physics engine (MuJoCo). A full Newton step of trajectory optimization for a 3D walking gait takes only 160 msec, on a 12-core PC. I. INTRODUCTION. Optimal control theory provides a powerful set of methods for planning and automatic control. There is however one additional requirement which we believe is essential and requires a qualitatively new approach: iv. The contact model should be defined for both forward and inverse dynamics. Here the forward dynamics $w_{+1} = a(q, w, u)$ (1) compute the next-step velocity $w_{+1}$ given the current generalized position $q$, velocity $w$ and applied force $u$, while the inverse dynamics $u = b(q, w, w_{+1})$ (2) compute the applied force that caused the observed change in velocity. Computing the total force that caused the observed change in velocity is of course straightforward. The hard part is decomposing this total force into a contact force and

Mathematics 1

MATHEMATICS. Math is the language of science and a vital part of both cutting-edge research and daily life. Contemporary mathematics investigates such basic concepts as space and number and also the formulation and analysis of mathematical models arising from applications. Mathematics has always had close relationships to the physical sciences and engineering. As the biological, social, and management sciences have become increasingly quantitative, the mathematical sciences have moved in new directions to support these fields. Mathematicians teach in high schools and colleges, do research and teach at universities, and apply mathematics in business, industry, and government. Outside of education, mathematicians usually work in research and analytical positions, although they have become increasingly involved in management. Firms in the aerospace, communications, computer, defense, electronics, energy, finance, and insurance industries employ many mathematicians. In such employment, a mathematician typically serves either in a consulting capacity, giving advice on mathematical problems to engineers and scientists, or as a member of a research team composed of specialists in several fields. Courses. MATH 1483 Mathematical Functions and Their Uses (A). Prerequisites: An acceptable placement score (see placement.okstate.edu). Description: Analysis of functions and their graphs from the viewpoint of rates of change. Linear, exponential, logarithmic and other functions. Applications to the natural sciences, agriculture, business and the social sciences. Credit hours: 3. Contact hours: Lecture: 3, Contact: 3. Levels: Undergraduate. Schedule types: Lecture. Department/School: Mathematics. General Education and other Course Attributes: Analytical & Quant Thought. MATH 1493 Applications of Modern Mathematics (A). Prerequisites: An acceptable placement score (see placement.okstate.edu). Description: Introduction to contemporary applications of discrete mathematics. Topics from management science, statistics, coding and