Computers and Systems Copyrighted Material

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


The PORTHOS Executive System for the IBM 7094

The Porthos Executive System for the IBM 7094 User's Manual
Table of Contents
Title Sheet
Preface
Table of Contents
1. The 7094-1401 Computer Installation
1.1. Description
1.1.1. General
1.1.2. Organization
1.1.3. Equipment
1.2. Programming Services
1.2.1. System Consulting
1.2.2. System Development
1.2.3. Associated Programmers
1.3. Library Services
1.3.1. Program Documentation
1.3.2. Subroutines
1.3.3. Reference Publications
1.3.4. The Mailing List
1.3.5. Information Bulletins
Date 5/1/65 Section Contents Page: 1 Change 2 Porthos Manual
Digitized by the Internet Archive in 2012 with funding from University of Illinois Urbana-Champaign http://archive.org/details/porthosexecutivesyOOuniv
2. Use of the Computing Facility
2.1. Conditions of Use
2.1.1. Policy for Charging for Computer Use
2.1.2. Refunding of IBM 7094 Time Due to System or Machine Failures
2.1.2.1. In-System Jobs
2.1.2.2. Relinquish Jobs
2.2. Application for Use of Equipment
2.2.1. The Form
2.2.2. Submission and Approval
2.2.3. Entries on the Problem Specification Form
2.2.4. Changes in the Problem Specification
2.2.5. Problem Abstracts
2.3. Logging and Control of Computer Time
2.3.1. Accounting for Computer Time
2.3.2. Notices to Users Concerning Lapsing Time
2.3.3. Department Reports
2.3.3.1. Internal Reports
2.3.3.2. The Technical Progress Report
2. -

Ibm Presentations: Black Template

Zorislav Sic, Power Systems SME, IBM CEE May 2009 IBM Dynamic Infrastructure FORUM Effective Workload Consolidation on Power © 2009 IBM Corporation Building a smarter planet

Globally, systems and infrastructure are reaching a breaking point. Proliferation of servers and networking devices. Excessive energy usage and heating problems. Inadequate power and cooling infrastructure. Data silos and data synchronization. Expectations that “everything” is connected. Linear staffing costs. Skyrocketing software costs. Unexplained outages. Meanwhile, customer expectations, competitive pressures, regulatory requirements and fiscal pressures are increasing.

IT Leaders Need to do More With Less. Operational issues can inhibit business growth and innovation: overburdened IT staff, complex service delivery, strained IT budgets.

CIO key spending initiatives (% of respondents rating issue as high priority): Server virtualization 53%; Server consolidation 44%; Cost cutting 42%; Application integration 37%; Data center consolidation 36%; Business intelligence 33%; Security 28%; Storage hardware 26%; Disaster recovery 25%; Storage virtualization 23%. Source: Goldman Sachs Group IT Spending Survey, July 2008

A dynamic infrastructure addresses today’s challenges… and tomorrow’s opportunities

REDUCE COST Not just containing operational cost and complexity, but achieving breakthrough productivity gains through virtualization, optimization, energy stewardship, and flexible sourcing.
IMPROVE SERVICE Not only ensuring high availability and quality of existing services, but also meeting customer expectations for real-time, dynamic access to innovative new services.
MANAGE RISK Not only addressing today’s security, resiliency, and compliance challenges, but also preparing for the new risks posed by an even more connected and collaborative world. -

History of Operating Systems

System Architecture History of Operating Systems Some slides from A. D. Joseph, University of Berkeley See also: www.osdata.com/kind/history.htm www.armory.com/~spectre/tech.html courses.cs.vt.edu/~cs1104/VirtualMachines/OS.1.html en.wikipedia.org/wiki/History_of_operating_systems © 2008 Universität Karlsruhe (TH), System Architecture Group

Moore’s Law Drives OS Change

| | 1981 | 2006 | Factor |
|---|---|---|---|
| CPU MHz | 10 | 3200x4 | 1,280 |
| Cycles/inst | 3-10 | 0.25-0.5 | 6-40 |
| DRAM capacity | 128KB | 4GB | 32,768 |
| Disk capacity | 10MB | 1TB | 100,000 |
| Net bandwidth | 9600 b/s | 1 Gb/s | 110,000 |
| # addr bits | 16 | 32 | 2 |
| # users/machine | 10 | 1 | 0.1 |
| Price | $25,000 | $4,000 | 0.2 |

Typical academic computer 1981 vs 2006

Moore’s Law Effects: Nothing like this in any other area of business. Transportation in over 200 years: only 2 orders of magnitude, from horseback @10mph to Concorde @1000mph. Computers do this every decade. What does this mean for us? Techniques have to vary over time to adapt to changing tradeoffs. Let’s place a lot more emphasis on principles: the key concepts underlying computer systems. Less emphasis on facts that are likely to change over the next few years… Let’s examine the way changes in $/MIP have radically changed how OS’s work.

Dawn of Time ENIAC: (1945-55) “The machine designed by Eckert and Mauchly was a monstrosity. When it was finished, the ENIAC filled an entire room, weighed 30 tons, and consumed 200 kilowatts of power.” http://ei.cs.vt.edu/~history/ENIAC.Richey.HTML

History Phase 1: 1948-70 Expensive Hardware, Cheap Humans

History of OS: Evolution Step 0 (diagram layers: APP, OS, Hardware) Simple OS: One program, one user, one machine: examples: early computers, early PCs, embedded controllers such as Nintendo, cars, elevators OS just a library of standard services, e.g. -
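The Factor column in the 1981 vs 2006 comparison above is simply the ratio of the 2006 value to the 1981 value. The following is a minimal Python sketch of that arithmetic, not part of the original slides; the dictionary keys, the `growth_factors` helper, and the unit interpretations (binary units for DRAM, decimal units for disk) are assumptions made for illustration.

```python
# Values as listed on the slide. Unit interpretations are assumptions:
# DRAM in binary units (KiB/GiB), disk in decimal units (MB/TB).
KIB, GIB = 2**10, 2**30
MB, TB = 10**6, 10**12

specs = {
    "CPU MHz": (10, 3200 * 4),                   # 4 cores at 3200 MHz
    "DRAM capacity (bytes)": (128 * KIB, 4 * GIB),
    "Disk capacity (bytes)": (10 * MB, 1 * TB),
    "Net bandwidth (b/s)": (9600, 10**9),
    "Address bits": (16, 32),
    "Users per machine": (10, 1),
    "Price (USD)": (25_000, 4_000),
}


def growth_factors(table):
    """Return new/old for every (old, new) pair in the table."""
    return {name: new / old for name, (old, new) in table.items()}


factors = growth_factors(specs)
for name, factor in factors.items():
    print(f"{name:24s} {factor:>12,.2f}")
```

Under these assumptions the CPU, DRAM, and disk ratios reproduce the slide's figures exactly, while the network and price ratios come out slightly below the slide's values, which suggests those two entries were rounded.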

The Discursive Coding of Software: a Study of the Relationship Between Stability and Change Jennifer Helene Maher Iowa State University

Iowa State University Capstones, Theses and Dissertations. Retrospective Theses and Dissertations. 2006. The discursive coding of software: a study of the relationship between stability and change. Jennifer Helene Maher, Iowa State University. Follow this and additional works at: https://lib.dr.iastate.edu/rtd Part of the Discourse and Text Linguistics Commons, Other Communication Commons, Programming Languages and Compilers Commons, and the Rhetoric and Composition Commons. Recommended Citation: Maher, Jennifer Helene, "The discursive coding of software: a study of the relationship between stability and change" (2006). Retrospective Theses and Dissertations. 13941. https://lib.dr.iastate.edu/rtd/13941 This Dissertation is brought to you for free and open access by the Iowa State University Capstones, Theses and Dissertations at Iowa State University Digital Repository. It has been accepted for inclusion in Retrospective Theses and Dissertations by an authorized administrator of Iowa State University Digital Repository. For more information, please contact [email protected]. The discursive coding of software: A study of the relationship between stability and change by Jennifer Helene Maher. A dissertation submitted to the graduate faculty in partial fulfillment of the requirements for the degree of DOCTOR OF PHILOSOPHY. Major: Rhetoric and Professional Communication. Program of Study Committee: David Russell, Major Professor; Carl Herndl; Dorothy Winsor; Diane Price Herndl; David Schweingruber. Iowa State University, Ames, Iowa, 2006. Copyright © Jennifer Helene Maher, 2006. All rights reserved. UMI Number: 3243534 UMI Microform 3243534 Copyright 2007 by ProQuest Information and Learning Company. -

Background Analysis and Design of ABOS, an Agent-Based Operating System

Background Analysis and Design of ABOS, an Agent-Based Operating System Author: Mikael Svahnberg, [email protected] A Software Engineering Master’s Thesis at the University of Karlskrona/Ronneby, 1998. Abstract Modern operating systems should be extensible and flexible. This means that the operating system should be able to accept new behaviour and change existing behaviour without too much trouble and that it should ideally also be able to do this without any, or very little, downtime. Furthermore, during the past years the importance of the network has increased drastically, creating a demand for operating systems to function in a distributed environment. To achieve this flexibility and distributedness, I have designed and evaluated ABOS, an Agent-Based Operating System. ABOS uses agents to solve all the tasks of the operating system kernel, thus moving away from traditional monolithic kernel structures. Early results show that I have gained in flexibility and modularity, creating a fault-tolerant distributed operating system that can adapt and be adapted to almost any situation with negligible decrease in performance. Within ABOS some tasks have been designed further, and there exists a demonstration of how the agent-based filesystem might work. Keywords: Operating systems, Practical application of multi-agent systems Advisors: Håkan Grahn, [email protected]; Paul Davidsson, [email protected] Table of Contents 1 Introduction 1.1 Overview 1.2 Current Praxis UNIX -

Mainframe History and the First User's Groups (SHARE) Dr. Steve Guendert

Mainframe History and the First User’s Groups (SHARE) Dr. Steve Guendert “Programming” (and programming support) was an old data processing concept that originally was broadly defined as the adaptation of general-purpose devices to specific tasks. Programming therefore goes back to Herman Hollerith wiring and rewiring (programming) his equipment to handle specific jobs. By the early 1930s IBM was distributing information about novel (for the time) plugboard wiring diagrams to customers via a publication called Pointers. Some of these diagrams were created by IBMers, but more importantly, many were created by customers who were willing to share their solutions with other customers. A culture of programming support and sharing was well in place among IBM and its customers long before the S/360. Actually, it was in place long before the first IBM 701 (the Defense Calculator) computer was installed. Adapting this culture to meet the evolving requirements of its customers proved crucial to IBM’s success. In August 1952, thirty representatives from the prospective customers for the IBM 701 were invited to the IBM facility at Poughkeepsie, NY for a week-long training class. This class also gave these pioneering customers their first opportunity to test some programs they had written by running them on an engineering model of the IBM 701. These customers also began sharing their programs amongst themselves. They and their installations took a great deal of professional pride in developing programs and subroutines that were effective and put in use by other installations. Several of the participants at this training class decided to continue discussing programming and mutual problems on an informal basis. -

(OSS/FS)? Look at the Numbers!

Why Open Source Software / Free Software (OSS/FS)? Look at the Numbers! David A. Wheeler [email protected] Table of Contents: Introduction; Market Share; Reliability; Performance; Scalability; Security; Total Cost of Ownership (TCO); Non-Quantitative Issues -

CYBERSECURITY When Will You Be Hacked?

SUFFOLK ACADEMY OF LAW The Educational Arm of the Suffolk County Bar Association 560 Wheeler Road, Hauppauge, NY 11788 (631) 234-5588 CYBERSECURITY When Will You Be Hacked? FACULTY Victor John Yannacone, Jr., Esq. April 26, 2017 Suffolk County Bar Center, NY Cybersecurity Part I 12 May 2017
COURSE MATERIALS
1. A cybersecurity primer
1.1. Cybersecurity practices for law firms
1.2. Cybersecurity and the future of law firms
2. Information Security
2.1. An information security policy
2.2. Data Privacy & Cloud Computing
2.3. Encryption
3. Computer security
3.1. NIST Cybersecurity Framework
4. Cybersecurity chain of trust; third party vendors
5. Ransomware
5.1. Exploit kits
6. Botnets
7. BIOS
7.1. Universal Extensible Firmware Interface (UEFI)
8. Operating Systems
8.1. Microsoft Windows
8.2. macOS
8.3. Open source operating system comparison
9. Firmware
10. Endpoint Security Buyers Guide
11. Glossaries & Acronym Dictionaries
11.1. Common Computer Abbreviations
11.2. BABEL
11.3. Information Technology Acronyms
11.4. Glossary of Operating System Terms
Cyber Security Primer Network outages, hacking, computer viruses, and similar incidents affect our lives in ways that range from inconvenient to life-threatening. As the number of mobile users, digital applications, and data networks increase, so do the opportunities for exploitation. Cyber security, also referred to as information technology security, focuses on protecting computers, networks, programs, and data from unintended or unauthorized access, change, or destruction. -

Operating System Roots

Operating System Roots By Robert L. Patrick Written: December, 2006 CHM Reference number: R0369.2017 Author Sketch: U of Nevada, engineering USAF 1951-1959: Convair, GM Research, CEIR, CSC 1959-1992: Independent consultant 1992: Retired Abstract: Robert L. Patrick describes the various computer systems he worked on in the early years of computing. His review covers the IBM Card Programmed Calculator through the IBM 701 with the Speedcode interpretive programming system through the IBM 704 with an early FORTRAN compiler. This was followed by the IBM 709 with the SOS operating system, developed jointly by SHARE and IBM, and then the IBM 7090 with the IBSYS operating system developed by IBM. Successful features from all of these operating systems were incorporated into the design of System/360 when it was released in the mid-1960s. Introduction To understand operating system software, it helps to go back to the beginning. In the late 1940s, some engineers at Northrop Aviation (now Northrop-Grumman Corp.) cable-connected an IBM tabulator to an IBM calculating punch and the Card Programmed Calculator was born. IBM delivered CPCs in kit form consisting of manuals, big boxes of hardware, blank plugboards, and about 50 pounds of wires of various lengths. In 1949, IBM sponsored a seminar to discuss using the CPC as a general purpose, externally programmed, engineering computer. About this same time a team under Ev Yowell (at the National Bureau of Standards center on the UCLA campus) devised a set of three-address, decimal plugboards to support engineering calculations on the CPC. -

A Tour of Open Source on the Mainframe

A Tour of Open Source on the Mainframe Elizabeth K. Joseph, IBM 20 October 2020
$ whoami Elizabeth K. Joseph, @pleia2 I did on-prem things, then cloud things, now I do mainframe things… which are also on-prem and cloud things!
● Author: Official Ubuntu Book & Common OpenStack Deployments
● Linux Systems Administrator
● Developer Advocate for IBM Z
What is a mainframe? Not x86. (it's s390x!) 190 5.2 GHz processor units (PUs), with 12 cores per chip But also...
• 40TB of RAM
• 60 PCIe control units across 12 PCIe I/O drawers
• 22 dedicated I/O offload processors (SAPs) pre-allocated per system
What runs on a mainframe?
• Linux!
○ SLES, RHEL, and Ubuntu, plus Debian, ClefOS, openSUSE, Fedora, & Alpine
○ Linux with KVM for virtualization (alternative to z/VM, below)
• Several proprietary mainframe operating systems, including:
○ z/OS
○ z/VSE
○ z/TPF
○ z/VM
Open Source Legacy: SHARE In 1955, the volunteer-run SHARE Inc was founded by users of the IBM 701. A key resource for this organization was the SHARE library of software that systems programmers would share among their peers, freely. In 1959, SHARE released the SHARE Operating System (SOS), one of the first true "operating systems" [1] and Wikipedia says of SOS: "SOS was one of the first instances of "commons-based peer production" now widely used in the development of free and open-source software such as Linux and the GNU project."
[1] https://en.wikipedia.org/wiki/SHARE_(computing)
Open Source Legacy: VM Collaboration between organizations, including companies, universities, and government entities has continued through the decades in communities like VM. -

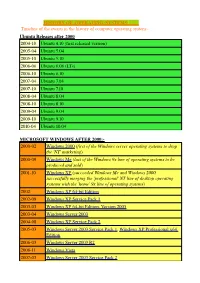

HISTORY of OPERATING SYSTEMS Timeline of The

HISTORY OF OPERATING SYSTEMS
Timeline of events in the history of computer operating systems

UBUNTU RELEASES AFTER 2000:
2004-10 Ubuntu 4.10 (first released version)
2005-04 Ubuntu 5.04
2005-10 Ubuntu 5.10
2006-06 Ubuntu 6.06 (LTS)
2006-10 Ubuntu 6.10
2007-04 Ubuntu 7.04
2007-10 Ubuntu 7.10
2008-04 Ubuntu 8.04
2008-10 Ubuntu 8.10
2009-04 Ubuntu 9.04
2009-10 Ubuntu 9.10
2010-04 Ubuntu 10.04

MICROSOFT WINDOWS AFTER 2000:
2000-02 Windows 2000 (first of the Windows server operating systems to drop the 'NT' marketing)
2000-09 Windows Me (last of the Windows 9x line of operating systems to be produced and sold)
2001-10 Windows XP (succeeded Windows Me and Windows 2000, successfully merging the 'professional' NT line of desktop operating systems with the 'home' 9x line of operating systems)
2002 Windows XP 64-bit Edition
2002-09 Windows XP Service Pack 1
2003-03 Windows XP 64-bit Edition, Version 2003
2003-04 Windows Server 2003
2004-08 Windows XP Service Pack 2
2005-03 Windows Server 2003 Service Pack 1, Windows XP Professional x64 Edition
2006-03 Windows Server 2003 R2
2006-11 Windows Vista
2007-03 Windows Server 2003 Service Pack 2
2007-11 Windows Home Server
2008-02 Windows Vista Service Pack 1, Windows Server 2008
2008-04 Windows XP Service Pack 3
2009-05 Windows Vista Service Pack 2
2009-10 Windows 7 (22 October 2009), Windows Server 2008 R2

EVENTS IN THE HISTORY OF OS SINCE 1954:
1950s
1954 MIT's operating system made for UNIVAC 1103
1955 General Motors Operating System made for IBM 701
1956 GM-NAA I/O for IBM 704, based on General Motors -

RM-3327-PR the RAND-SHARE Operating System Manual for The

MEMORANDUM RM-3327-PR SEPTEMBER 1962 THE RAND-SHARE OPERATING SYSTEM MANUAL FOR THE IBM 7090 COMPUTER G. E. Bryan, Editor PREPARED FOR: UNITED STATES AIR FORCE PROJECT RAND SANTA MONICA • CALIFORNIA

This research is sponsored by the United States Air Force under Project RAND, Contract No. AF 49(638)-700, monitored by the Directorate of Development Planning, Deputy Chief of Staff, Research and Technology, Hq USAF. Views or conclusions contained in this Memorandum should not be interpreted as representing the official opinion or policy of the United States Air Force. Permission to quote from or reproduce portions of this Memorandum must be obtained from The RAND Corporation. 1700 MAIN ST • SANTA MONICA • CALIFORNIA

PREFACE This manual is a workbook for the use of The RAND Corporation version of the SHARE Operating System (SOS) for the IBM 7090 computer. It describes the use of new features and tools which have been added to the system to aid programmers in the solution of problems on the computer, and to smooth the physical operations required in computer use. An operating system is a complex of computer routines which are used to get programs and data into and out of the machine, transform data (including program assembly and compilation), supervise job and task sequencing, and facilitate communication between the programmer and components of the operating system. The SHARE Operating System for the IBM 709/7090 Data Processing Systems was designed by a committee of SHARE, an organization of computer users formed to facilitate the exchange of computer programs, and was implemented by IBM and some of the users.