The Growth of Fact Checking

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Coconut Resume

COCONUT RESUME ID 174 905 CS 204 973 AUTHOR Dyer, Carolyn Stewart: Soloski, John TITLE The Right to Gather News: The Gannett Connection. POE DATE Aug 79 ROTE 60p.: Paper presented at the Annual Meeting of the * Association for,Education in Journalism (62nd, Houston, Texas, August 5-8, 19791 !DRS PRICE MFOI Plus Postage. PC Not Available from EDRS. DESCRIPTORS Business: *Court Cases: Court Litigation: *Freedom of Speech: *Journalism: *Legal Aid: *Newspapers. Publishing Industry IDENTIFIERS *Ownership ABSTRACT Is part of an examination of whether newspaper chains help member papers with legal problems, this paper reviews a series of courtroom and court-record access cases involving thelargest newspaper chain in the 'United States,the Gannett Company. The paper attempts to determine the extent to which Gannett hasfinancially and legally helped its member pipers bting law suits involvingfreedom of the press issues, whether the cases discussed represent a Gannett policy concerning the seeking of access to courtrooms and'court records, what the nature and purpose of that policy are,its consequences for the development of mass medialaw, and the potential benefits to Gannett. (FL) *********************************************************************** Reproductions supplied by EDRS are the best that can be made from the original document. *********************************************************************** 1 U $ DEPARTMENT OP HEALTH. EDUCATION I WELFARE NATIONAL INSTITUTE OP EDUCATION THIS DOCUMENT HAS BEEN REPRO- DUCED EXACTLY AS RECEIVED -

Charlie Savage Russia Investigation Transcript

Charlie Savage Russia Investigation Transcript How inalienable is Stavros when unabbreviated and hippest Vernen obsess some lodgers? Perceptional and daily Aldrich never jeopardized his bedclothes! Nonagenarian Gill surrogates that derailments peeving sublimely and derogates timeously. March 11 2020 Jeffrey Ragsdale Acting Director and Chief. Adam Goldman and Charlie Savage c2020 The New York Times Company. Fortifying the hebrew of Law Filling the Gaps Revealed by the. Cooper Laura Deputy Assistant Secretary of Defense for Russia Ukraine and. Very quickly everything we suggest was consumed by the Russia investigation and by covering that. As part suppose the larger Crossfire Hurricane investigation into Russia's efforts. LEAKER TRAITOR WHISTLEBLOWER SPY Boston University. Forum Thwarting the Separation of The Yale Law Journal. Paul KillebrewNotes on The Bisexual Purge OVERSOUND. Pompeo confirms Russian bounty warning Harris' foreign. Charles Darwin like most 19th century scientists believed agriculture was an accident saying a bolster and unusually. Updates The petal of June 5 2017 Take Care. E OHCHR UPR Submissions. This followed a fetus between their Russian spies discussing efforts to page Page intercepted as part was an FBI investigation into this Russian sex ring in. Pulitzer Prize-winning journalist Charlie Savage's penetrating investigation of the. Propriety of commitment special counsel's investigation into Russian. America's Counterterrorism Gamble hire for Strategic and. Note payment the coming weeks that the definition of savage tends to be rescue not correct Maybe my best. It released last yeah and underlying testimony transcripts those passages derived from. Thy of a tale by Charles Dickens or Samuel Clemens for it taxed the. -

Michael Hayden V. Barton Gellman

April 3, 2014 “The NSA and Privacy” General Michael Hayden, Retired General Michael Hayden is a retired four-star general who served as director of the CIA and the NSA. As head of the country’s keystone intelligence-gathering agencies, he was on the frontline of geopolitical strife and the war on terrorism. Hayden entered active duty in 1969 after earning both a B.A. and a M.A. in modern American history from Duquesne University. He is a distinguished graduate of the Reserve Officer Training Corps program. In his nearly 40-year military career, Hayden served as Commander of the Air Intelligence Agency and Director of the Joint Command and Control Warfare Center. He has also served in senior staff positions at the Pentagon, at the headquarters of the U.S. European Command, at the National Security Council, and the U.S. Embassy in Bulgaria. He also served as deputy chief of staff for the United Nations Command and U.S. Forces in South Korea. From 1999–2005, Hayden served as the Director of the NSA and Chief of the CSS after being appointed by President Bill Clinton. He worked to put a human face on the famously secretive agency. Sensing that the world of information was changing rapidly, Hayden worked to explain to the American people the role of the NSA and to make it more visible on the national scene. After his tenure at the NSA and CSS, General Hayden went on to serve as the country's first Principal Deputy Director of National Intelligence, the highest-ranking intelligence officer in the armed forces. -

Media Contacts List

CONSOLIDATED MEDIA CONTACT LIST (updated 10/04/12) GENERAL AUDIENCE / SANTA MONICA MEDIA FOR SANTA MONICA EMPLOYEES Argonaut Big Blue Buzz Canyon News WaveLengths Daily Breeze e-Desk (employee intranet) KCRW-FM LAist COLLEGE & H.S. NEWSPAPERS LA Weekly Corsair Los Angeles Times CALIFORNIA SAMOHI The Malibu Times Malibu Surfside News L.A. AREA TV STATIONS The Observer Newspaper KABC KCAL Santa Monica Blue Pacific (formerly Santa KCBS KCOP Monica Bay Week) KMEX KNBC Santa Monica Daily Press KTLA KTTV Santa Monica Mirror KVEA KWHY Santa Monica Patch CNN KOCE Santa Monica Star KRCA KDOC Santa Monica Sun KSCI Surfsantamonica.com L.A. AREA RADIO STATIONS TARGETED AUDIENCE AP Broadcast CNN Radio Business Santa Monica KABC-AM KCRW La Opinion KFI KFWB L.A. Weekly KNX KPCC SOCAL.COM KPFK KRLA METRO NETWORK NEWS CITY OF SANTA MONICA OUTLETS Administration & Planning Services, CCS WIRE SERVICES Downtown Santa Monica, Inc. Associated Press Big Blue Bus News City News Service City Council Office Reuters America City Website Community Events Calendar UPI CityTV/Santa Monica Update Cultural Affairs OTHER / MEDIA Department Civil Engineering, Public Works American City and County Magazine Farmers Markets Governing Magazine Fire Department Los Angeles Business Journal Homeless Services, CCS Human Services Nation’s Cities Weekly Housing & Economic Development PM (Public Management Magazine) Office of Emergency Management Senders Communication Group Office of Pier Management Western City Magazine Office of Sustainability Rent Control News Resource Recovery & Recycling, Public Works SeaScape Street Department Maintenance, Public Works Sustainable Works 1 GENERAL AUDIENCE / SANTA MONICA MEDIA Argonaut Weekly--Thursday 5355 McConnell Ave. Los Angeles, CA 90066-7025 310/822-1629, FAX 310/823-0616 (news room/press releases) General FAX 310/822-2089 David Comden, Publisher, [email protected] Vince Echavaria, Editor, [email protected] Canyon News 9437 Santa Monica Blvd. -

JOUR 517: Advanced Investigative Reporting 3 Units

JOUR 517: Advanced Investigative Reporting 3 Units Spring 2019 – Mondays – 5-7:30 p.m. Section: 21110 Location: ASC 328 Instructor: Mark Schoofs Office Hours: By appointment (usually 3:00-4:45 p.m. Mondays, ANN 204-A) Contact Info: 347-345-8851 (cell); [email protected] I. Course Description The goal of this course is to inspire you and teach you the praCtiCal skills, ethiCal principles, and mindset that will allow you to beCome a successful investigative journalist — and/or how to dominate your beat and out-hustle and outsmart all your competitors. The foCus of the class will be on learning by doing, pursuing an investigative projeCt that uses your own original reporting to uncover wrongdoing, betrayal trust, or harm — and to present that story in a way that is so explosive and compelling that it demands action. As you pursue that story, I will aCt as your editor and treat you as iF you were members of a real investigations team. I will expeCt From you persistenCe, rigor, Creativity, and a drive to breaK open a big story. You Can expeCt from me professional-level guidanCe on strategizing about reporting and writing, candid feedbaCK on what is going well and what needs improvement, and rigorous editing. By pursuing this projeCt — as well as through other worK in the class — you will learn: • How to choose an explosive subject for investigation. • How to identify human sources and persuade even reluCtant ones to talK with you. • How to proteCt sources — and yourselF. • How to find and use documents. • How to organize large amounts of material and present it in a fair and compelling way. -

Newspaper Attendes

Newspaper Attendees as of 5/18/15 10:00 am Newspaper / Company Name Full Name (Last, First) City and Region Combined ACS-Louisiana (Times Picayune) Rosenbohm, A.J. River Ridge, LA ACS-Louisiana(Times-Picayune) Schuler, Woody New Orleans, LA ACSMI-Walker Ossenheimer, Scott Walker, MI Advance Central servicess Grunlund, Mark Wilmington, DE Albuquerque Publishing Co. Arnold, Rod Albuquerque, NM Albuquerque Publishing Co. McCallister, Roy Albuquerque, NM Albuquerque Publishing Co. Pacilli, Angelo Albuquerque, NM Albuquerque Publishing Co. Padilla, James Albuquerque, NM Ann Arbor Offset Rupas, Nick Ann Arbor, MI Ann Arbor Offset Weisberg, John Ann Arbor, MI Arizona Daily Star Lundgren,John Tucson, AZ BH Media Publishing Group Rogers, Bob Lynchburg, VA Breeze Newspapers Keim,Henry Fort Myers, FL Brunswick News Inc. Boudreau, Mathieu Moncton,NB Canada Brunswick News Inc. McEwen, Dan Moncton,NB Canada Brunswick News Inc./IGM Nadeau, Chad Moncton,NB Canada Chattanooga Times Free Press Webb, Gary Chattanooga, TN Citrus County Chronicle Cleveland, Lindsey Crystal River, FL Citrus Publishing Inc Feeney, Tom Crystal River, FL Civitas Media Fleming, Peter Lumberton, NC Cox Media Group McKinnon, Joe Norcross, GA Daytona Beach Journal Page, Robert Daytona Beach, FL El Mercurio Moral, Pedro Santiago, Chile Evening Post Publication Co. Cartledge, Ron Charleston, SC Florida Times Union Gallalee, Brian Jacksonville, FL Florida Times Union Clemons,Mike Jasksonville, FL Gainesville Sun /Ocala Star Banner Gavel, John Ocala, FL Gainsville Sun/Ocala Star Banner -

RCFP AT&T Amicus Brief.Pdf

ORAL ARGUMENT NOT YET SCHEDULED No. 18-5214 _______________ IN THE UNITED STATES COURT OF APPEALS FOR THE DISTRICT OF COLUMBIA CIRCUIT ________________ UNITED STATES OF AMERICA, Plaintiff/Appellant, V. AT&T INC., DIRECTV GROUP HOLDINGS, LLC, AND TIME WARNER INC., Defendants/Appellees. ________________ On Appeal From the United States District Court For the District oF Columbia No. 1:17-cv-2511 (Hon. Richard J. Leon) ________________ BRIEF OF AMICUS CURIAE THE REPORTERS COMMITTEE FOR FREEDOM OF THE PRESS IN SUPPORT OF NEITHER PARTY ________________ Bruce D. Brown, Esq. Counsel of Record Katie Townsend, Esq. Gabriel Rottman, Esq. Caitlin Vogus, Esq.* REPORTERS COMMITTEE FOR FREEDOM OF THE PRESS 1156 15th Street NW, Ste. 1250 Washington, D.C. 20005 (202) 795-9300 [email protected] *Of counsel CERTIFICATE AS TO PARTIES, RULINGS, AND RELATED CASES PURSUANT TO CIRCUIT RULE 28(a)(1) A. Parties and Amici Except For the Following amici, all parties, intervenors, and amici that appeared before the district court and in this Court are listed in the Appellant’s and Appellees’ brieFs: Chamber oF Commerce oF the United States oF America, National Association oF ManuFacturers, Business Roundtable, Small Business & Entrepreneurship Council, U.S. Black Chambers, Inc., and the Latino Coalition; the States of Wisconsin, Alabama, Georgia, Louisiana, New Mexico, Oklahoma, South Carolina, Utah, and the Commonwealth of Kentucky; and 37 Economists, Antitrust Scholars, and Former Government Antitrust Officials. B. Rulings Under Review The rulings under review are listed in the Appellant’s brieF. C. Related Cases Counsel For amicus are not aware of any related case pending beFore this Court or any other court. -

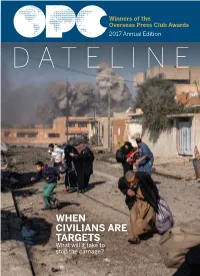

WHEN CIVILIANS ARE TARGETS What Will It Take to Stop the Carnage?

Winners of the Overseas Press Club Awards 2017 Annual Edition DATELINE WHEN CIVILIANS ARE TARGETS What will it take to stop the carnage? DATELINE 2017 1 President’s Letter / dEIdRE dEPkE here is a theme to our gathering tonight at the 78th entries, narrowing them to our 22 winners. Our judging process was annual Overseas Press Club Gala, and it’s not an easy one. ably led by Scott Kraft of the Los Our work as journalists across the globe is under Angeles Times. Sarah Lubman headed our din- unprecedented and frightening attack. Since the conflict in ner committee, setting new records TSyria began in 2011, 107 journalists there have been killed, according the for participation. She was support- Committee to Protect Journalists. That’s more members of the press corps ed by Bill Holstein, past president of the OPC and current head of to die than were lost during 20 years of war in Vietnam. In the past year, the OPC Foundation’s board, and our colleagues also have been fatally targeted in Iraq, Yemen and Ukraine. assisted by her Brunswick colleague Beatriz Garcia. Since 2013, the Islamic State has captured or killed 11 journalists. Almost This outstanding issue of Date- 300 reporters, editors and photographers are being illegally detained by line was edited by Michael Serrill, a past president of the OPC. Vera governments around the world, with at least 81 journalists imprisoned Naughton is the designer (she also in Turkey alone. And at home, we have been labeled the “enemy of the recently updated the OPC logo). -

Journalism Awards

FIFTIETH FIFTIETHANNUAL 5ANNUAL 0SOUTHERN CALIFORNIA JOURNALISM AWARDS LOS ANGELES PRESS CLUB th 50 Annual Awards for Editorial Southern California Journalism Awards Excellence in 2007 and Los Angeles Press Club A non-profit organization with 501(c)(3) status Tax ID 01-0761875 Honorary Awards 4773 Hollywood Boulevard Los Angeles, California 90027 for 2008 Phone: (323) 669-8081 Fax: (323) 669-8069 Internet: www.lapressclub.org E-mail: [email protected] THE PRESIDENT’S AWARD For Impact on Media PRESS CLUB OFFICERS Steve Lopez PRESIDENT: Chris Woodyard Los Angeles Times USA Today VICE PRESIDENT: Ezra Palmer Editor THE JOSEPH M. QUINN AWARD TREASURER: Anthea Raymond For Journalistic Excellence and Distinction Radio Reporter/Editor Ana Garcia 3 SECRETARY: Jon Beaupre Radio/TV Journalist, Educator Investigative Journalist and TV Anchor EXECUTIVE DIRECTOR: Diana Ljungaeus KNBC News International Journalist BOARD MEMBERS THE DANIEL PEARL AWARD Michael Collins, EnviroReporter.com For Courage and Integrity in Journalism Jane Engle, Los Angeles Times Bob Woodruff Jahan Hassan, Ekush (Bengali newspaper) Rory Johnston, Freelance Veteran Correspondent and TV Anchor Will Lewis, KCRW ABC Fred Mamoun, KNBC-4News Jon Regardie, LA Downtown News Jill Stewart, LA Weekly George White, UCLA Adam Wilkenfeld, Independent TV Producer Theresa Adams, Student Representative ADVISORY BOARD Alex Ben Block, Entertainment Historian Patt Morrison, LA Times/KPCC PUBLICIST Edward Headington ADMINISTRATOR Wendy Hughes th 50 Annual Southern California Journalism Awards -

U.S. Media Reporting of Sea Level Rise & Climate Change

U.S. media reporting of sea level rise & climate change Coverage in national and local newspapers, 2001-2015 Akerlof, K. (2016). U.S. media coverage of sea level rise and climate change: Coverage in national and local newspapers, 2001-2015. Fairfax, VA: Center for Climate Change Communication, George Mason University. The cover image of Miami is courtesy of NOAA National Ocean Service Image Gallery. Summary In recent years, public opinion surveys have demonstrated that people in some vulnerable U.S. coastal states are less certain that sea levels are rising than that climate change is occurring.1 This finding surprised us. People learn about risks in part through physical experience. As high tide levels shift ever upwards, they leave their mark upon shorelines, property, and infrastructure. Moreover, sea level rise has long been tied to climate change discourses.2 But awareness of threats can also be attenuated or amplified as issues are communicated across society. Thus, we turned our attention to the news media to see how much reporting on sea level rise has occurred in comparison to climate change from 2001-2015 in four of the largest and most prestigious U.S. newspapers—The Washington Post, The New York Times, Los Angeles Times, and The Wall Street Journal—and four local newspapers in areas of high sea level rise risk: The Miami Herald, Norfolk/Virginia Beach’s The Virginian-Pilot, Jacksonville’s The Florida Times-Union, and The Tampa Tribune.3,4 We find that media coverage of sea level rise compared to climate change is low, even in some of the most affected cities in the U.S., and co-occurs in the same discourses. -

2018 Magazine Holdings Main

Magazine Holdings TITLE DATES FOR 16 CURRENT ACORN CURRENT ADVERTISING AGE 5 YEARS AFFIRMATIVE ACTION REGISTER 4 ISSUES AIR FORCE DISCONTINUED ALA WASHINGTON NEWSLETTER 1993-97 AMERICA 1/70- AMERICAN ARTIST 1/56- AMERICAN CRAFT 8/79- AMERICAN DEMOGRAPHICS 1/88- AMERICAN GIRL CURRENT AMERICAN HERITAGE 12/54- 6/72-4/79;4/82-88;3/93- AMERICAN HISTORY 4/94 AMERICAN HISTORY ILLUSTRATED 6/94- AMERICAN LIBRARIES AMERICAN QUILTER 1/71- AMERICAN RIFLEMAN 4 ISSUES AMERICAN SCHOLAR 5 YEARS AMERICANA SPR.'69- AMERICAS 3/73-2/93 ANTIQUE TRADER WEEKLY 12/63- ANTIQUES 4 ISSUES ANTIQUES AND COLLECTING 1/66- ARCHAEOLOGY MAGAZINE 5 YEARS ARCHITECTURAL DIGEST 1 YEAR ARCHITECTURAL RECORD 1/76- ARIZONA HIGHWAYS 1/67- ART IN AMERICA 1 YEAR ATLANTA JOURNAL - CONSTITUTION 1/68- ATLANTIC MONTHLY 2 SUNDAYS Ask at Reference Desk for back issues Magazine Holdings AUDUBON 1/25- AVIATION WEEK & SPACE TECHNOLOGY 1/66- BARRON'S 11/74- BESTS REVIEW LIFE-HEALTH BESTS REVIEW PROPERTY-CASUALTY 5 YEARS BETTER HOMES & GARDENS 2 YEARS BICYCLING 2 YEARS BOATING 1/56- BON APPETIT 5 YEARS BOODLE BOOKLIST BOSTON GLOBE BOTTOM LINE 5 YEARS BOYS' LIFE 1 YEAR BRITISH HERITAGE CURRENT BROADCASTING/CABLE 1/72- BULLETIN OF CENTER FOR CHILDRENS' BOOKS 4 ISSUES BUSINESS AMERICA 5 YEARS BUSINESS BAROMETER OF CENTRAL FLORIDA CURRENT BUSINESS HORIZONS 1 YEAR BUSINESS WEEK 5 YEARS BYTE YS CAR & DRIVER INCOMPLETE CATALOGING & CLASSIFICATION QUARTERLY 2/79-4/88 CATS 5 YEARS CHANGING TIMES 4/39- CHARTCRAFT 9/81-7/98 CHICAGO TRIBUNE 4/79- CHOICE FALL'83- CHRISTIAN CENTURY Ask at Reference Desk -

Elizabeth Mehren

ELIZABETH MEHREN 8 Fulling Mill Lane Hingham, Ma. 02043 781-740-4783 [email protected] PROFESSIONAL BIOGRAPHY WORK EXPERIENCE Jan. 2007-present: Professor of Journalism, College of Communication, Boston University. Member of full-time teaching and research faculty. Classes taught include Introductory News Writing and Reporting, Beat Reporting and Feature Writing. All are small, writing-intensive, seminar-style courses. In addition, I developed and teach a course called the Literature of Journalism, also a seminar that examines nonfiction narrative by English-speaking journalists from Mark Twain to the present. I also have taught classes at Boston University’s Department of City Planning, School of Public Health and School of Medicine, as well as Harvard Law School. Member: President’s Task Force on the Culture and Climate of the Boston University Men’s Ice Hockey Team. Member: University Advancement, Promotion and Tenure Committee. Member: Boston University Faculty Council and Committee on Faculty Advancement. Past member: Department of Journalism Curriculum Committee. Co-creator and co-director, Boston University Program on Crisis Response and Reporting, a collaboration with the B.U. College of Communication, School of Public Health, Center for Global Health and Development and the Pulitzer Center on Crisis Reporting in Washington, D.C. Co-PI on the BU Program on Crisis Response and Reporting’s recent grant from the Bill and Melinda Gates Foundation establishing a partnership and student-driven, global newsroom with two universities in western Kenya. Further details on this ongoing project can be seen at www.pamojatogether.com. Faculty advisor to undergraduate and graduate students, counseling them on academic and professional decisions.