Adaptive Model-Driven User Interface Development Systems

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Automated Support for Software Development with Frameworks

Automated Support for Software Development with Frameworks

Albert Schappert and Peter Sommerlad, Siemens AG - Dept.: ZFE T SE, D-81730 Munich, Germany. Voice: ++49 89 636-48148 Fax: -45111 E-mail: {albert.schappert,peter.sommerlad}@zfe.siemens.de

Wolfgang Pree, C. Doppler Laboratory for Software Engineering, Johannes Kepler University, A-4040 Linz, Austria. Voice: ++43 70 2468-9431 Fax: -9430 E-mail: [email protected]

Abstract

This document presents some of the results of an industrial research project on automation of software development. The project's objective is to improve productivity and quality of software development. We see software development based on frameworks and libraries of prefabricated components as a step in this direction. An adequate development style consists of two complementary activities: the creation of frameworks and new components for functionality not available and the composition and configuration of existing components. Just providing adequate frameworks and components does not

However, the object-oriented concepts and frameworks introduce additional complexity to software development. A framework gains its functionality by the cooperation of different components. Thus, the use of a single component may be based on its interrelationships with others that must be understood and mastered by the application developer. Present work on software architecture also emphasizes the interrelationships between software components depending on each other to get control on this additional complexity. There the term design pattern is used to describe and specify component interaction mechanisms in an informal way [Pree, 1994a][Buschmann et al., 1994][Gamma et al., 1994].

Comprehensive Support for Developing Graphical Highly

AN ABSTRACT OF THE THESIS OF Huan-Chao Keh for the degree of Doctor of Philosophy in Computer Science presented on July 29, 1991. Title: Comprehensive Support for Developing Graphical, Highly Interactive User Interface Systems. Redacted for Privacy. Abstract approved: ed G. Lewis

The general problem of application development of interactive GUI applications has been addressed by toolkits, libraries, user interface management systems, and more recently domain-specific application frameworks. However, the most sophisticated solution offered by frameworks still lacks a number of features which are addressed by this research: 1) limited functionality -- the framework does little to help the developer implement the application's functionality. 2) weak model of the application -- the framework does not incorporate a strong model of the overall architecture of the application program. 3) representation of control sequences is difficult to understand, edit, and reuse -- higher-level, direct-manipulation tools are needed. We address these problems with a new framework design called Oregon Speedcode Universe version 3.0 (OSU v3.0) which is shown, by demonstration, to overcome the limitations above: 1) functionality is provided by a rich set of built-in functions organized as a class hierarchy, 2) a strong model is provided by OSU v3.0 in the form of a modified MVC paradigm, and a Petri net based sequencing language which together form the architectural structure of all applications produced by OSU v3.0. 3) representation of control sequences is easily constructed within OSU v3.0 using a Petri net editor, and other direct manipulation tools built on top of the framework.
In addition: 1) applications developed in OSU v3.0 are partially portable because the framework can be moved to another platform, and applications are dependent on the class hierarchy of OSU v3.0 rather than the operating system of a particular platform, 2) the functionality of OSU v3.0 is extendable through addition of classes, subclassing, and overriding of existing methods.

Evolution and Composition of Object-Oriented Frameworks

Evolution and Composition of Object-Oriented Frameworks. Michael Mattsson, University of Karlskrona/Ronneby, Department of Software Engineering and Computer Science. ISBN 91-628-3856-3 © Michael Mattsson, 2000. Cover background: Digital imagery® copyright 1999 PhotoDisc, Inc. Printed in Sweden, Kaserntryckeriet AB, Karlskrona, 2000. To Nisse, my father-in-law - who never had the opportunity to study as much as he would have liked to. This thesis is submitted to the Faculty of Technology, University of Karlskrona/Ronneby, in partial fulfillment of the requirements for the degree of Doctor of Philosophy in Engineering. Contact Information: Michael Mattsson, Department of Software Engineering and Computer Science, University of Karlskrona/Ronneby, Soft Center, SE-372 25 RONNEBY, SWEDEN. Tel.: +46 457 38 50 00 Fax.: +46 457 27 125 Email: [email protected] URL: http://www.ipd.hk-r.se/rise

Abstract

This thesis comprises studies of evolution and composition of object-oriented frameworks, a certain kind of reusable asset. An object-oriented framework is a set of classes that embodies an abstract design for solutions to a family of related problems. The work presented is based on and has its origin in industrial contexts where object-oriented frameworks have been developed, used, evolved and managed. Thus, the results are based on empirical observations. Both qualitative and quantitative approaches have been used in the studies performed which cover both technical and managerial aspects of object-oriented framework technology. Historically, object-oriented frameworks are large monolithic assets which require several design iterations and are therefore costly to develop. With the requirement of building larger applications, software engineers have started to compose multiple frameworks, thereby encountering a number of problems.

51. Graphical User Interface Programming

Brad A. Myers Graphical User Interface Programming - 1

51. Graphical User Interface Programming

Brad A. Myers*, Human Computer Interaction Institute, Carnegie Mellon University, 5000 Forbes Avenue, Pittsburgh, PA 15213. [email protected] http://www.cs.cmu.edu/~bam (412) 268-5150 FAX: (412) 268-1266

*This paper is revised from an earlier version that appeared as: Brad A. Myers. “User Interface Software Tools,” ACM Transactions on Computer-Human Interaction. vol. 2, no. 1, March, 1995. pp. 64-103.

Draft of: January 27, 2003. To appear in: CRC HANDBOOK OF COMPUTER SCIENCE AND ENGINEERING – 2nd Edition, 2003. Allen B. Tucker, Editor-in-chief

51.1. Introduction

Almost as long as there have been user interfaces, there have been special software systems and tools to help design and implement the user interface software. Many of these tools have demonstrated significant productivity gains for programmers, and have become important commercial products. Others have proven less successful at supporting the kinds of user interfaces people want to build. Virtually all applications today are built using some form of user interface tool [Myers 2000]. User interface (UI) software is often large, complex and difficult to implement, debug, and modify. As interfaces become easier to use, they become harder to create [Myers 1994]. Today, direct manipulation interfaces (also called “GUIs” for Graphical User Interfaces) are almost universal. These interfaces require that the programmer deal with elaborate graphics, multiple ways for giving the same command, multiple asynchronous input devices (usually a keyboard and a pointing device such as a mouse), a “mode free” interface where the user can give any command at virtually any time, and rapid “semantic feedback” where determining the appropriate response to user actions requires specialized information about the objects in the program.

Design and Implementation of ET++, a Seamless Object-Oriented Application Framework

Design and Implementation of ET++, a Seamless Object-Oriented Application Framework. André Weinand, Erich Gamma, Rudolf Marty

Abstract: ET++ is a homogeneous object-oriented class library integrating user interface building blocks, basic data structures, and support for object input/output with high level application framework components. The main goals in designing ET++ have been the desire to substantially ease the building of highly interactive applications with consistent user interfaces following the well known desktop metaphor, and to combine all ET++ classes into a seamless system structure. Experience has proven that writing a complex application based on ET++ can result in a reduction in source code size of 80% and more compared to the same software written on top of a conventional graphic toolbox. ET++ is implemented in C++ and runs under UNIX™ and either SunWindows™, NeWS™, or the X11 window system. This paper discusses the design and implementation of ET++. It also reports key experience from working with C++ and ET++. A description of code browsing and object inspection tools for ET++ is included as well. ET++ is available in the public domain.

Key Words: application framework, user interfaces, user interface toolkits, object-oriented programming, C++ programming language, programming environment

1 Introduction

Making computers easier to use is one of the reasons for the current interest in interactive and graphical user interfaces that present information as pictures instead of text and numbers. They are easy to learn and fun to use. Constructing such interfaces, on the other hand, often requires considerable effort because they must not only provide the functionality of conventional programs, but also have to show data as well as manipulation concepts in a pictorial way.

Rhapsody Developer's Guide

Jesse Feiler. AP PROFESSIONAL. AP Professional is a division of Academic Press. Boston San Diego New York London Sydney Tokyo Toronto. Find us on the Web! http://www.apnet.com This book is printed on acid-free paper. Copyright © 1997 by Academic Press. All rights reserved. No part of this publication may be reproduced or transmitted in any form or by any means, electronic or mechanical, including photocopy, recording, or any information storage and retrieval system, without permission in writing from the publisher. Excerpts from Chartsmith are copyright © 1997 by Blacksmith, Inc. All rights reserved. Excerpts from OpenBase are copyright © 1997 by OpenBase International, Ltd. All rights reserved. Excerpts from Create are copyright © 1997 by Stone Design, Inc. All rights reserved. Excerpts from OmniWeb are copyright © 1997 by Omni Development, Inc. All rights reserved. Excerpts from TIFFany are copyright © 1997 by Caffeine Software. All rights reserved. All brand names and product names mentioned in this book are trademarks or registered trademarks of their respective companies. Academic Press, 525 B Street, Suite 1900, San Diego, CA 92101-4495; 1300 Boylston Street, Chestnut Hill, MA 02167. United Kingdom Edition published by ACADEMIC PRESS LIMITED, 24-28 Oval Road, London NW1 7DX. ISBN 0-12-251334-7 Printed in the United States of America 97 98 99 00 CP 9 8 7 6 5 4 3 2 1

Table of Contents

[Figure: layered architecture diagram. Recoverable labels: Advanced Mac Look and Feel; Yellow Box; Mac OS; OPENSTEP based; Java; Core OS: Microkernel, I/O, File System...; Power Macintosh, PowerPC Platform Hardware]

Semantics Tables; Preface

Garnet Comprehensive Support for Graphical, Highly Interactive User Interfaces

Garnet: Comprehensive Support for Graphical, Highly Interactive User Interfaces. Brad A. Myers, Dario A. Giuse, Roger B. Dannenberg, Brad Vander Zanden, David S. Kosbie, Edward Pervin, Andrew Mickish, and Philippe Marchal, Carnegie Mellon University

Garnet helps create highly interactive user interfaces by emphasizing easy specification of object behavior, often by demonstration and without programming.

User interface software is difficult and expensive to implement. Highly interactive interfaces are among the hardest to create, since they must handle at least two asynchronous input devices (such as a mouse and keyboard), real-time feedback, multiple windows, and elaborate, dynamic graphics. The Garnet research project is creating a set of tools to aid the design and implementation of highly interactive, graphical, direct manipulation user interfaces. Garnet also helps designers rapidly prototype different interfaces and explore various user interface metaphors during early product design. Most graphical interfaces are created using toolkits, collections of interaction techniques (sometimes called “widgets” or “gadgets”) such as menus, scroll bars, and buttons.

[...] Interface Builder, Smethers Barnes’ Prototyper for the Macintosh, and UIMX for X Windows. These programs let a designer place only preprogrammed interaction techniques in windows, and they usually allow only a few parameters to be set. Often, a designer will type the name of a procedure to be called when the interaction technique is executed. Designers cannot modify or design the interaction techniques themselves, and the interface builders do not address application-specific graphics at all. A user interface management system helps a designer handle the dialogue, or sequencing, aspects of the user interface - that is, what happens after each user action. Such systems have generally not addressed the creation of toolkit components (menus, scroll bars, etc.) or the spec-

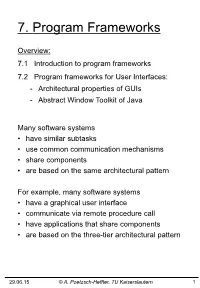

Program Frameworks

7. Program Frameworks

Overview:
7.1 Introduction to program frameworks
7.2 Program frameworks for User Interfaces:
- Architectural properties of GUIs
- Abstract Window Toolkit of Java

Many software systems
• have similar subtasks
• use common communication mechanisms
• share components
• are based on the same architectural pattern

For example, many software systems
• have a graphical user interface
• communicate via remote procedure call
• have applications that share components
• are based on the three-tier architectural pattern

29.06.15 © A. Poetzsch-Heffter, TU Kaiserslautern

If systems share properties, there is a chance to reuse
• architectural and design knowledge
• implementation techniques
• implementation parts
• communication infrastructure

An important method to support reuse is the construction of frameworks that capture the shared properties.

7.1 Introduction to Program Frameworks
• Program frameworks are an important technique for software reuse.
• They are usually developed for certain tasks or domains.
• From a static point of view, a program framework is an extensible and adaptable system of modules or classes that implement the core functionality and communication mechanisms of a general task or domain.

Program frameworks are written in some programming language (e.g. C, C++, or Java). Depending on the language, the elements of a program framework are
• procedures,
• modules,
• classes,
• interfaces,
• or similar programming elements.

In the following, we give answers to two questions:
1. What is the distinction between a program framework and an arbitrary set of library classes?
2. What is the distinction between program frameworks and other kinds of frameworks?

Both answers will help to understand the relation between architectural properties and program frameworks.
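The excerpt stops before the slides answer their first question, so the following is only a minimal sketch of one common reading of "an extensible and adaptable system of classes that implement the core functionality": the framework owns the control flow and calls back into application code, whereas library classes are simply called by the application. All names here (`ReportFramework`, `PriceReport`, `render_demo`) are hypothetical and do not come from the slides, and Python is used only for brevity where the slides mention C, C++, or Java.

```python
from abc import ABC, abstractmethod


class ReportFramework(ABC):
    """Hypothetical framework class: it owns the fixed control flow."""

    def run(self, records):
        # Core functionality lives in the framework and is reused as-is.
        lines = [self.format_record(r) for r in records if self.accept(r)]
        return "\n".join(lines)

    def accept(self, record):
        # Optional hook with a default; applications may override it.
        return True

    @abstractmethod
    def format_record(self, record):
        """Mandatory extension point supplied by the application."""


class PriceReport(ReportFramework):
    """Application code: it only fills in the extension points."""

    def accept(self, record):
        return record["price"] > 0

    def format_record(self, record):
        return f"{record['name']}: {record['price']:.2f}"


def render_demo():
    records = [{"name": "pen", "price": 1.5}, {"name": "void", "price": 0}]
    return PriceReport().run(records)
```

Calling `render_demo()` keeps only the record with a positive price. The application never writes the loop itself; it adapts the framework by overriding `accept` and extends it by implementing `format_record`.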

Carbon: an Essential Element of Mac OS X

Apple Developer Connection Direct, March 2001

Carbon: An Essential Element of Mac OS X

One of the things that makes Mac OS X unique is its integration of five separate application runtime environments: Carbon, Cocoa, Classic, Java, and BSD. Never before has an operating system offered developers so many options. This series of articles will give you the information you need to make an informed decision as to which of these environments is right for your application on Mac OS X. This month, I’m going to talk about Carbon and what it offers you as a Macintosh developer.

A Brief History of Carbon

Carbon represents the evolution of the Mac OS API, from a single-user, cooperatively scheduled system, to a modern multi-user system with memory protection and preemptive multitasking. Carbon sprang from Apple’s desire to provide a gentle migration path for developers, while at the same time delivering all the performance, features, and reliability that Mac OS X has to offer. About 70 percent of the classic Mac OS APIs are fully supported, and Carbon adds many new APIs specifically to take advantage of Mac OS X.

What Diamonds Are Made Of

Carbon offers a wealth of features to support and accelerate your Mac OS X development efforts. Whether you’re new to the platform, or an old-timer, here are some of the reasons why you’ll find Carbon a solid foundation for your software product:
• Broad Language Support. Apple provides interfaces, compilers, and tools for Carbon programming in C and C++, while other languages such as BASIC and FORTRAN are offered by other tool vendors.

Develop-17 9403 March 1994.Pdf

develop, The Apple Technical Journal, a quarterly publication of Apple Computer’s Developer Press group, is published in March, June, September, and December.

EDITORIAL STAFF
Editor-in-Cheek: Caroline Rose
Technical Buckstopper: Dave Johnson
Our Boss: Greg Joswiak
His Boss: Dennis Matthews
Review Board: Pete (“Luke”) Alexander, Jim Reekes, Bryan K. (“Beaker”) Ressler, Larry Rosenstein, Andy Shebanow, Gregg Williams, Dean Yu
Managing Editor: Cynthia Jasper
Contributing Editors: Lorraine Anderson, Toni Haskell, Judy Helfand, Elaine Meyer, Rebecca Pepper
Indexer: Marc Savage

ART & PRODUCTION
Production/Art Director: Diane Wilcox
Technical Illustration: Shawn Morningstar, John Ryan
Formatting: Forbes Mill Press
Film Services: Aptos Post, Inc.

This issue’s CD. The develop Bookmark CD (or the Developer CD Series disc, Reference Library edition) for March 1994 or later contains this issue and all back issues of develop along with the code that the articles describe. The develop issues and code are also available on AppleLink and via anonymous ftp on ftp.apple.com. Note that some software and documentation referred to as being on this issue’s CD may be located on the Tool Chest edition rather than the Reference Library edition of the Developer CD Series disc.

Macintosh Technical Notes. Where references to Macintosh Technical Notes in develop are followed by something like “(Memory 13),” this indicates the category and number of the Note on this issue’s CD.

E-mail addresses. Most e-mail addresses mentioned in develop are AppleLink addresses;

UI Programming

UI Programming (part of this content is based on previous classes from Anastasia, S. Huot, M. Beaudouin-Lafon, N. Roussel, O. Chapuis)

Assignment 1 is out! Design and implement an interactive tool for creating the layout of comic strips https://www.lri.fr/~fanis/teaching/ISI2014/assignments/ass1/

Graphical interfaces
GUIs: input is specified w.r.t. output. Input peripherals specify commands at specific locations on the screen (pointing), where specific objects are drawn by the system. Familiar behavior from physical world.

WIMP interfaces
WIMP: Window, Icons, Menus and Pointing
Presentation: windows, icons and other graphical objects
Interaction: menus, dialog boxes, text input fields, etc.
Input: pointing, selection, ink/path
Perception-action loop: feedback

Software layers
Application: Applications/Communication (MacApp)
Interface Tools & Toolkits: Builders, Java Swing, JavaFX, Qt (C++), GTK+, MFC, Cocoa
Graphics Library: GDI+, Quartz, GTK+/Xlib, OpenGL
Windowing System: X Windows (+KDE or GNU)
Input/Output
Operating System: Windows, Mac OS, Unix, Linux, Android, iOS, WindowsCE

Input/output peripherals
Input: where we give commands
Output: where the system shows information & reveals its state

Interactivity vs. computing
Closed systems (computation): read input, compute, produce result; final state (end of computation)
Open systems (interaction): events/changes caused by environment; infinite loop, non-deterministic
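The closed/open distinction at the end of this excerpt can be sketched in a few lines of Python. This is an illustrative toy, not code from the course: `closed_system`, `EventLoop`, `demo`, and the `"quit"` event are all hypothetical names, and a real toolkit would block waiting for events from the windowing system instead of draining an in-memory queue.

```python
from collections import deque


def closed_system(values):
    """Closed system: read input, compute, produce a result, then stop."""
    return sum(values)


class EventLoop:
    """Toy open system: reacts to events in their arrival order."""

    def __init__(self):
        self.queue = deque()
        self.handlers = {}
        self.log = []

    def on(self, kind, handler):
        self.handlers[kind] = handler

    def post(self, kind, payload=None):
        self.queue.append((kind, payload))

    def run(self):
        # A real toolkit loops forever, blocking until the next event;
        # this sketch stops on a hypothetical "quit" event so it terminates.
        while self.queue:
            kind, payload = self.queue.popleft()
            if kind == "quit":
                break
            handler = self.handlers.get(kind)
            if handler is not None:
                handler(payload)


def demo():
    loop = EventLoop()
    loop.on("click", lambda pos: loop.log.append(f"click at {pos}"))
    loop.on("key", lambda ch: loop.log.append(f"key {ch}"))
    loop.post("click", (10, 20))
    loop.post("key", "a")
    loop.post("quit")
    loop.post("key", "b")  # never handled: the loop has already stopped
    loop.run()
    return loop.log
```

`closed_system` has a final state (its return value), while the behavior of `EventLoop.run` depends entirely on which events the environment posts and in what order, which is the sense in which the excerpt calls open systems non-deterministic.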

FDK 10 Installation Guide

FDK Installation Guide VERSION 10 Frame Developer’s Kit, Adobe Systems Incorporated March 2012 Corporate Headquarters 345 Park Avenue San Jose, CA 95110-2704 (408) 536-6000 © 2012 Adobe Systems Incorporated and its licensors. All rights reserved. Adobe® FrameMaker® 10 for Windows® Frame Developer’s Kit for Windows This manual is protected under copyright law, furnished for informational use only, is subject to change without notice, and should not be construed as a commitment by Adobe Systems Incorporated. Adobe Systems Incorporated assumes no responsibility or liability for any errors or inaccuracies that may appear in the informational content contained in this manual. This manual is licensed for use under the terms of the Creative Commons Attribution Non-Commercial 3.0 License. This License allows users to copy, distribute, and transmit the manual for noncommercial purposes only so long as (1) proper attribution to Adobe is given as the owner of the manual; and (2) any reuse or distribution of the manual contains a notice that use of the manual is governed by these terms. The best way to provide notice is to include the following link. To view a copy of this license, visit http://creativecommons.org/licenses/by-nc/3.0/us/ Adobe, the Adobe logo, Adobe AIR, Adobe Captivate, Adobe Type Manager, Acrobat, AIR, Creative Suite, Distiller, Flash, FrameMaker, Illustrator, PageMaker, Photoshop, PostScript, Reader, RoboHelp, and RoboScreenCapture are trademarks of Adobe Systems Incorporated in the United States and/or other countries. Microsoft, Windows, and Windows Vista are either registered trademarks or trademarks of Microsoft Corporation in the United States and/or other countries.