Challenge and Retention in Games



Recommended publications


Invisible Labor, Invisible Play: Online Gold Farming and the Boundary Between Jobs and Games

Vanderbilt Journal of Entertainment & Technology Law Volume 18 Issue 3 Issue 3 - Spring 2016 Article 2 2015 Invisible Labor, Invisible Play: Online Gold Farming and the Boundary Between Jobs and Games Julian Dibbell Follow this and additional works at: https://scholarship.law.vanderbilt.edu/jetlaw Part of the Internet Law Commons, and the Labor and Employment Law Commons Recommended Citation Julian Dibbell, Invisible Labor, Invisible Play: Online Gold Farming and the Boundary Between Jobs and Games, 18 Vanderbilt Journal of Entertainment and Technology Law 419 (2021) Available at: https://scholarship.law.vanderbilt.edu/jetlaw/vol18/iss3/2 This Article is brought to you for free and open access by Scholarship@Vanderbilt Law. It has been accepted for inclusion in Vanderbilt Journal of Entertainment & Technology Law by an authorized editor of Scholarship@Vanderbilt Law. For more information, please contact [email protected]. VANDERBILT JOURNAL OF ENTERTAINMENT & TECHNOLOGY LAW VOLUME 18 SPRING 2016 NUMBER 3 Invisible Labor, Invisible Play: Online Gold Farming and the Boundary Between Jobs and Games Julian Dibbell ABSTRACT When does work become play and play become work? Courts have considered the question in a variety of economic contexts, from student athletes seeking recognition as employees to professional blackjack players seeking to be treated by casinos just like casual players. Here, this question is applied to a relatively novel context: that of online gold farming, a gray-market industry in which wage-earning workers, largely based in China, are paid to play fantasy massively multiplayer online games (MMOs) that reward them with virtual items that their employers sell for profit to the same games' casual players. -

A Third Age of Avatars Bruce Damer, [email protected] Damer.Com | Digitalspace.Com | Ccon.Org | Biota.Org | Digibarn.Com

A Third Age of Avatars
Bruce Damer, [email protected]
damer.com | digitalspace.com | ccon.org | biota.org | digibarn.com

• Started life on a PDP-11 fresh out of high school (1980), programmed graphics and videotext systems, dreamed of self-replicating robots on the moon, designed board games, built model space stations.
• Worked at IBM Research in 1984 (Toronto, New York), introduced to the Internet and optical computing.
• At Elixir Technologies 1987-94, wrote some of the first GUI/Windows-Icons publishing software on the IBM PC platform, used in 100 countries.
• Established the Contact Consortium in 1995, held the first conferences on avatars (Earth to Avatars, Oct 1996).
• Wrote “Avatars!” in 1997. Hosted and supported 9 conferences until 2003 on various aspects of virtual worlds (AVATARS Conferences, VLearn3D, Digital Biota).
• Founded DigitalSpace in 1995, produced 3D worlds for government, corporate, university, and industry. Evangelism for Adobe (Atmosphere), NASA (Digital Spaces, open source 3D worlds for design simulation of space exploration) and NIH (learning games for autism).
• Established the Digibarn Computer Museum (2002).
• Virtual Worlds Timeline project (2006-2008) to capture and represent the history of the medium.

Outline:
• The Virtual World, its Origins in Deep Time
• Text Worlds
• Graphical Worlds
• Internet-Connected Worlds
• The Avatars Cyberconferences
• Massive Multiplayer Online RPGs
• Virtual World Platforms
• Virtual Worlds Timeline Project and Other Research

History of Virtual Worlds
The Virtual World, its Origins in Deep Time
So what is a Virtual World? A place described by words or projected through pictures which creates a space in the imagination real enough that you can feel you are inside of it.

Nordic Game Is a Great Way to Do This

2 Igloos inc. / Carcajou Games / Triple Boris 2 Igloos is the result of a joint venture between Carcajou Games and Triple Boris. We decided to use the complementary strengths of both studios to create the best team needed to create this project. Once a Tale reimagines the classic tale Hansel & Gretel, with a twist. As you explore the magical forest you will discover that it is inhabited by many characters from other tales as well. Using real handmade puppets and real miniature terrains which are then 3D scanned to create a palpable, fantastic world, we are making an experience that blurs the line between video game and stop motion animated film. With a great story and stunning visuals, we want to create something truly special. Having just finished our prototype this spring, we have already been finalists for the Ubisoft Indie Serie and the Eidos Innovation Program. We want to validate our concept with the European market and Nordic Game is a great way to do this. We are looking for Publishers that yearn for great stories and games that have a deeper meaning. 2Dogs Games Ltd. Destiny’s Sword is a broad-appeal Living-Narrative Graphic Adventure where every choice matters. Players lead a squad of intergalactic peacekeepers, navigating the fallout of war and life under extreme circumstances, while exploring a breath-taking and immersive world of living, breathing, hand-painted artwork. Destiny’s Sword is filled with endless choices and unlimited possibilities—we’re taking interactive storytelling to new heights with our proprietary Insight Engine AI technology. This intricate psychology simulation provides every character with a diverse personality, backstory and desires, allowing them to respond and develop in an incredibly human fashion—generating remarkable player engagement and emotional investment, while ensuring that every playthrough is unique. -

Role Playing Games Role Playing Computer Games Are a Version Of

Role Playing Games

Role-playing computer games descend from earlier non-computer games that used the same mechanics as many computer role-playing games. The history of Role Playing Games (RPGs) begins with table-top games such as Dungeons and Dragons. These games used basic role-playing mechanics to create playable games for multiple players. When computers and computer games became feasible, role-playing games were among the first games (along with adventure games) to be implemented. The core features of role-playing games include the following:

• The game character has a set of features/skills/traits that can be changed through Experience Points (XP).
• XP values are typically increased by playing the game. As the player does things in the game (killing monsters, completing quests, etc.), the player earns XP.
• Player progression is defined by Levels, at which the player acquires or refines skills/traits.
• Game structure is typically open-world or has open-world features.
• Narrative and progress normally advance by completing Quests.
• Game narrative is typically presented through non-linear quests or exploration. Narrative can also be presented as environmental narrative (found tapes, etc.) or through other non-linear methods.
• In some cases, the initial game character can be defined by the player, and the initial mix of skills/traits/classes chosen by the player.
• Many RPGs require a number of hours to complete.
• Progression in the game is typically done through player quests, which can be done in different orders.
• In Action RPGs combat is real-time, and in turn-based RPGs the combat is turn-based.
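
The XP, level, and quest mechanics listed above can be illustrated with a minimal Python sketch. Everything named here (the `Character` class, the `LEVEL_THRESHOLDS` values, and the rule that each level gained grants one skill point) is a hypothetical assumption chosen for illustration, not a description of any particular game.

```python
from dataclasses import dataclass, field

# Hypothetical cumulative XP needed to reach levels 1..5 (assumed values).
LEVEL_THRESHOLDS = [0, 100, 300, 600, 1000]


@dataclass
class Character:
    name: str
    xp: int = 0
    skills: dict = field(default_factory=dict)

    @property
    def level(self) -> int:
        # The level is the number of thresholds the character has reached.
        return sum(1 for threshold in LEVEL_THRESHOLDS if self.xp >= threshold)

    def gain_xp(self, amount: int) -> int:
        """Add XP and return how many levels were gained."""
        if amount < 0:
            raise ValueError("XP rewards must be non-negative")
        before = self.level
        self.xp += amount
        return self.level - before

    def complete_quest(self, reward_xp: int, skill: str) -> int:
        """Award quest XP; each level gained adds one point to `skill`."""
        gained = self.gain_xp(reward_xp)
        self.skills[skill] = self.skills.get(skill, 0) + gained
        return gained


hero = Character("Hero")
first = hero.complete_quest(120, "sword")   # crosses the 100 XP threshold
second = hero.complete_quest(500, "sword")  # crosses 300 and 600
```

Because quests only call `complete_quest`, they can be finished in any order, which matches the non-linear progression described in the list.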

Can Game Companies Help America's Children?

CAN GAME COMPANIES HELP AMERICA’S CHILDREN? The Case for Engagement & VirtuallyGood4Kids™
By Wendy Lazarus, Founder and Co-President, with Aarti Jayaraman
September 2012

About The Children’s Partnership
The Children's Partnership (TCP) is a national, nonprofit organization working to ensure that all children, especially those at risk of being left behind, have the resources and opportunities they need to grow up healthy and lead productive lives. Founded in 1993, The Children's Partnership focuses particular attention on the goals of securing health coverage for every child and on ensuring that the opportunities and benefits of digital technology reach all children. Consistent with that mission, we have educated the public and policymakers about how technology can measurably improve children's health, education, safety, and opportunities for success. We work at the state and national levels to provide research, build programs, and enact policies that extend opportunity to all children and their families.

Santa Monica, CA Office: 1351 3rd St. Promenade, Suite 206, Santa Monica, CA 90401; t: 310.260.1220; f: 310.260.1921
Washington, DC Office: 2000 P Street, NW, Suite 330, Washington, DC 20036; t: 202.429.0033; f: 202.429.0974
E-Mail: [email protected] Web: www.childrenspartnership.org

The Children’s Partnership is a project of Tides Center. ©2012, The Children's Partnership. Permission to copy, disseminate, or otherwise use this work is normally granted as long as ownership is properly attributed to The Children's Partnership. CAN GAME

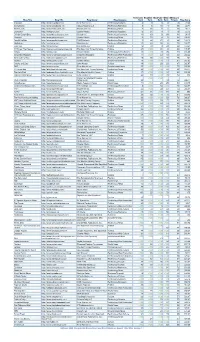

Blog Title Blog URL Blog Owner Blog Category Technorati Rank

| Blog Title | Blog URL | Blog Owner | Blog Category | Technorati Rank | Bloglines Rank | BlogPulse Rank | Wikio Rank | SEOmoz’s Trifecta | Blog Score |
|---|---|---|---|---|---|---|---|---|---|
| Engadget | http://www.engadget.com | Time Warner Inc. | Technology/Gadgets | 4 | 3 | 6 | 2 | 78 | 19.23 |
| Boing Boing | http://www.boingboing.net | Happy Mutants LLC | Technology/Marketing | 5 | 6 | 15 | 4 | 89 | 33.71 |
| TechCrunch | http://www.techcrunch.com | TechCrunch Inc. | Technology/News | 2 | 27 | 2 | 1 | 76 | 42.11 |
| Lifehacker | http://lifehacker.com | Gawker Media | Technology/Gadgets | 6 | 21 | 9 | 7 | 78 | 55.13 |
| Official Google Blog | http://googleblog.blogspot.com | Google Inc. | Technology/Corporate | 14 | 10 | 3 | 38 | 94 | 69.15 |
| Gizmodo | http://www.gizmodo.com/ | Gawker Media | Technology/News | 3 | 79 | 4 | 3 | 65 | 136.92 |
| ReadWriteWeb | http://www.readwriteweb.com | RWW Network | Technology/Marketing | 9 | 56 | 21 | 5 | 64 | 142.19 |
| Mashable | http://mashable.com | Mashable Inc. | Technology/Marketing | 10 | 65 | 36 | 6 | 73 | 160.27 |
| Daily Kos | http://dailykos.com/ | Kos Media, LLC | Politics | 12 | 59 | 8 | 24 | 63 | 163.49 |
| NYTimes: The Caucus | http://thecaucus.blogs.nytimes.com | The New York Times Company | Politics | 27 | >100 | 31 | 8 | 93 | 179.57 |
| Kotaku | http://kotaku.com | Gawker Media | Technology/Video Games | 19 | >100 | 19 | 28 | 77 | 216.88 |
| Smashing Magazine | http://www.smashingmagazine.com | Smashing Magazine | Technology/Web Production | 11 | >100 | 40 | 18 | 60 | 283.33 |
| Seth Godin's Blog | http://sethgodin.typepad.com | Seth Godin | Technology/Marketing | 15 | 68 | >100 | 29 | 75 | 284 |
| Gawker | http://www.gawker.com/ | Gawker Media | Entertainment News | 16 | >100 | >100 | 15 | 81 | 287.65 |
| Crooks and Liars | http://www.crooksandliars.com | John Amato | Politics | 49 | >100 | 33 | 22 | 67 | 305.97 |
| TMZ | http://www.tmz.com | Time Warner Inc. | | | | | | | |

Casual Resistance: a Longitudinal Case Study of Video Gaming's Gendered

Running Head: CASUAL RESISTANCE Casual resistance: A longitudinal case study of video gaming’s gendered construction and related audience perceptions Amanda C. Cote This is an Accepted Manuscript of an article published by Oxford Academic in Journal of Communication on August 4, 2020, available online: https://doi.org/10.1093/joc/jqaa028 . Abstract: Many media are associated with masculinity or femininity and male or female audiences, which links them to broader power structures around gender. Media scholars thus must understand how gendered constructions develop and change, and what they mean for audiences. This article addresses these questions through longitudinal, in-depth interviews with female video gamers (2012-2018), conducted as the rise of casual video games potentially started redefining gaming’s historical masculinization. Analysis shows that participants have negotiated relationships with casualness. While many celebrate casual games’ potential for welcoming new audiences, others resist casual’s influence to safeguard their self-identification as gamers. These results highlight how a medium’s gendered construction may not be salient to consumers, who carefully navigate divides between their own and industrially-designed identities, but can simultaneously reaffirm existing power structures. Further, how participants’ views change over time emphasizes communication’s ongoing need for longitudinal audience studies that address questions of media, identity, and inclusion. Keywords: Video games, gender, game studies, feminist media studies, hegemony, casual games, in-depth interviews, longitudinal audience studies CASUAL RESISTANCE 2 Casual resistance: A longitudinal case study of video gaming’s gendered construction and related audience perceptions From comic books to soap operas, many media are gendered, associated with masculinity or femininity and with male or female audiences. -

A Casual Game Benjamin Peake Worcester Polytechnic Institute

Worcester Polytechnic Institute Digital WPI Major Qualifying Projects (All Years) Major Qualifying Projects April 2016 Storybook - A Casual Game Benjamin Peake Worcester Polytechnic Institute Connor Geoffrey Porell Worcester Polytechnic Institute Nathaniel Michael Bryant Worcester Polytechnic Institute William Emory Blackstone Worcester Polytechnic Institute Follow this and additional works at: https://digitalcommons.wpi.edu/mqp-all Repository Citation Peake, B., Porell, C. G., Bryant, N. M., & Blackstone, W. E. (2016). Storybook - A Casual Game. Retrieved from https://digitalcommons.wpi.edu/mqp-all/2848 This Unrestricted is brought to you for free and open access by the Major Qualifying Projects at Digital WPI. It has been accepted for inclusion in Major Qualifying Projects (All Years) by an authorized administrator of Digital WPI. For more information, please contact [email protected]. Storybook - A Casual Game Emory Blackstone, Nathan Bryant, Benny Peake, Connor Porell April 27, 2016 A Major Qualifying Project Report: submitted to the Faculty of the WORCESTER POLYTECHNIC INSTITUTE in partial fulfillment of the requirements for the Degree of Bachelor of Science by Emory Blackstone Nathan Bryant Benny Peake Connor Porell Date: April 2016 Approved: Professor David Finkel, Advisor Professor Britton Snyder, Co-Advisor This report represents the work of one or more WPI undergraduate students. Submitted to the faculty as evidence of completion of a degree requirement. WPI routinely publishes these reports on its web site without editorial -

Scrabble Blank Tile Score

Scrabble Blank Tile Score

All letters played in a turn must be placed in one row across or down the board, to form one complete word. But just like most board games, Scrabble is governed by a set of rules, some of which are pretty straightforward, and others that may cause confusion among some players. There are ten power tiles. If you play a blank tile on a pink or red square, then the value of that word will be doubled or tripled, respectively. What is the highest scoring Scrabble word? The game ends when all the tiles are gone from the bag and a single player has placed all their tiles on the board. The common letter is counted for each word and the full premium value, if any, is awarded for both. Beginning with the player who earned the LOWEST score on this round, then the player on his left, etc. Any word may be challenged before the next player starts a turn. LATE to make LATER. Everyone who plays in an NSA sanctioned tournament earns a rating. Foreign words are not allowed on the board when playing the English version of the game. You race against time to rack up as many points as you can.

Gold Farming” HEEKS

Forum

Understanding “Gold Farming”: Developing-Country Production for Virtual Gameworlds

Richard Heeks
richard.heeks@manchester.ac.uk
Development Informatics Group, Institute for Development Policy and Management, School of Environment and Development, University of Manchester, United Kingdom

Gold farming is the production of virtual goods and services for players of online games. It consists of real-world sales of in-game currency and associated items, including “high-level” game characters. These are created by “playborers” (workers employed to play in-game) whose output is sold for real money through various Web sites in so-called “real-money trading.”

There is growing academic interest in online games, including aspects such as real-money trading and gold farming (see, for example, Terra Nova, where much of this work is reported and discussed). However, there appear to be few, if any, academic publications looking at gold farming from a developing-country angle, and development agencies seem to have completely ignored it.

That is problematic for three reasons. First, as described below, gold farming is already a significant social and economic activity in developing countries. Second, it represents the first example of a likely future development trend in outsourcing of online employment, what we might otherwise call “cybersourcing.” Third, it is one of a few emerging examples in developing countries of “liminal ICT work”: jobs associated with digital technologies that exist on the edge of, or just below the threshold of, that which is deemed socially acceptable and/or formally legal.

In basic terms, gold farming is a sizable developing-country phenomenon.

PAUL SIMON and ROBERT PLANT Move to NEWCASTLE ENTERTAINMENT CENTRE

PAUL SIMON AND ROBERT PLANT move to NEWCASTLE ENTERTAINMENT CENTRE Saturday 30th March PAUL SIMON Special Guest: Rufus Wainwright Platinum Reserved Seating $199 / Gold Reserved Seating $149 / Silver Reserved Seating $99 (plus handling and payment processing fees) Sunday 31st March ROBERT PLANT & the Sensational Space Shifters Special Guests: Blind Boys Of Alabama Platinum Reserved Seating $199 / Gold Reserved Seating $149 / Silver Reserved Seating $99 (plus handling and payment processing fees) Saturday 9th March 2013 Chugg Entertainment today confirmed the move of this Easter’s two-fold line-up; Paul Simon and Robert Plant, from the Hunter Valley’s Hope Estate to the Newcastle Entertainment Centre. Each concert will play the same dates previously scheduled: Paul Simon (with Rufus Wainwright) on Saturday 30th March and Robert Plant (with Blind Boys of Alabama) on Sunday 31st March, now at the Newcastle Entertainment Centre. Executive Chairman, Michael Chugg said, “We’re moving to the Newcastle Entertainment Centre, which will be a more intimate environment for these two artists.” Chugg added, “I encourage all fans to take the opportunity to see these two iconic artists. These guys are legends and now Newcastle is seeing them in the most intimate venue on the tour.” Both Paul Simon and Robert Plant will be performing career-spanning sets and promise numerous favourites and greatest hits from their rich catalogues, including their solo careers, and Simon & Garfunkel and Led Zeppelin, respectively. New tickets for the Newcastle Entertainment Centre shows go on-sale at midday this Monday March 11 from ticketek.com.au or 132 849. The Newcastle Entertainment Centre Box Office has extended its hours today until 4pm to assist with enquiries (02 4921 2121) but exchanges will not commence until Thursday March 14. -

Towards Collection Interfaces for Digital Game Objects

“The Collecting Itself Feels Good”: Towards Collection Interfaces for Digital Game Objects

Zachary O. Toups,1 Nicole K. Crenshaw,2 Rina R. Wehbe,3 Gustavo F. Tondello,3 Lennart E. Nacke3
1 Play & Interactive Experiences for Learning Lab, Dept. Computer Science, New Mexico State University
2 Donald Bren School of Information and Computer Sciences, University of California, Irvine
3 HCI Games Group, Games Institute, University of Waterloo
[email protected], [email protected], [email protected], [email protected], [email protected]

Figure 1. Sample favorite digital game objects collected by respondents, one for each code from the developed coding manual (except MISCELLANEOUS). While we did not collect media from participants, we identified representative images for some responses. From left to right: CHARACTER: characters from Suikoden II [G14], collected by P153; CRITTER: P32 reports collecting Arnabus the Fairy Rabbit from Dota 2 [G19]; GEAR: P55 favorited the Gjallerhorn rocket launcher from Destiny [G10]; INFORMATION: P44 reports Dragon Age: Inquisition [G5] codex cards; SKIN: P66’s favorite object is the Cauldron of Xahryx skin for Dota 2 [G19]; VEHICLE: a Jansen Carbon X12 car from Burnout Paradise [G11] from P53; RARE: a Hearthstone [G8] gold card from the Druid deck [P23]; COLLECTIBLE: World of Warcraft [G6] mount collection interface [P7, P65, P80, P105, P164, P185, P206].

ABSTRACT
Digital games offer a variety of collectible objects. We investigate players’ collecting behaviors in digital games to determine what digital game objects players enjoyed collecting and

INTRODUCTION
People collect objects for many reasons, such as filling a personal void, striving for a sense of completion, or creating a sense of order [8,22,29,34].