Automatically Adapting Programs for Mixed-Precision Floating-Point Computation

Total pages: 16

File type: PDF, Size: 1020 KB

Recommended publications

Customizing and Extending PowerDesigner SAP PowerDesigner Documentation Collection Content

User Guide PUBLIC SAP PowerDesigner Document Version: 16.6.2 – 2017-01-05 Customizing and Extending PowerDesigner SAP PowerDesigner Documentation Collection Content 1 PowerDesigner Resource Files 1.1 Opening Resource Files in the Editor 1.2 Navigating and Searching in Resource Files 1.3 Editing Resource Files 1.4 Saving Changes 1.5 Sharing and Embedding Resource Files 1.6 Creating and Copying Resource Files 1.7 Specifying Directories to Search for Resource Files 1.8 Comparing Resource Files 1.9 Merging Resource Files 2 Extension Files 2.1 Creating an Extension File 2.2 Attaching Extensions to a Model 2.3 Exporting an Embedded Extension File for Sharing 2.4 Extension File Properties 2.5 Example: Adding a New Attribute from a Property -

IPBES Workshop on Biodiversity and Pandemics Report

IPBES Workshop on Biodiversity and Pandemics WORKSHOP REPORT *** Strictly Confidential and Embargoed until 3 p.m. CET on 29 October 2020 *** Please note: This workshop report is provided to you on condition of strictest confidentiality. It must not be shared, cited, referenced, summarized, published or commented on, in whole or in part, until the embargo is lifted at 3 p.m. CET/2 p.m. GMT/10 a.m. EDT on Thursday, 29 October 2020. This workshop report is released in a non-laid out format. It will undergo minor editing before being released in a laid-out format. Intergovernmental Platform on Biodiversity and Ecosystem Services The IPBES Bureau and Multidisciplinary Expert Panel (MEP) authorized a workshop on biodiversity and pandemics that was held virtually on 27-31 July 2020 in accordance with the provisions on “Platform workshops” in support of Plenary-approved activities, set out in section 6.1 of the procedures for the preparation of Platform deliverables (IPBES-3/3, annex I). This workshop report and any recommendations or conclusions contained therein have not been reviewed, endorsed or approved by the IPBES Plenary. The workshop report is considered supporting material available to authors in the preparation of ongoing or future IPBES assessments. While undergoing a scientific peer-review, this material has not been subjected to formal IPBES review processes. Contents: Preamble; Executive Summary; Sections 1 to 5; Section 1: The relationship between people and biodiversity underpins disease emergence and provides opportunities -

Writing Mathematical Expressions in Plain Text – Examples and Cautions Copyright © 2009 Sally J

Writing Mathematical Expressions in Plain Text – Examples and Cautions Copyright © 2009 Sally J. Keely. All Rights Reserved. Mathematical expressions can be typed online in a number of ways including plain text, ASCII codes, HTML tags, or using an equation editor (see Writing Mathematical Notation Online for overview). If the application in which you are working does not have an equation editor built in, then a common option is to write expressions horizontally in plain text. In doing so you have to format the expressions very carefully using appropriately placed parentheses and accurate notation. This document provides examples and important cautions for writing mathematical expressions in plain text. Section 1. How to Write Exponents Just as on a graphing calculator, when writing in plain text the caret key ^ (above the 6 on a qwerty keyboard) means that an exponent follows. For example $x^2$ would be written as x^2. Example 1a. $4x^2y^3$ would be written as 4 x^2 y^3 or with the multiplication mark as 4*x^2*y^3. Example 1b. With more than one item in the exponent you must enclose the entire exponent in parentheses to indicate exactly what is in the power. $x^{2n}$ must be written as x^(2n) and NOT as x^2n. Writing x^2n means $x^2 n$. Example 1c. When using the quotient rule of exponents you often have to perform subtraction within an exponent. In such cases you must enclose the entire exponent in parentheses to indicate exactly what is in the power. The middle step of $\frac{x^5}{x^2} = x^{5-2} = x^3$ must be written as x^(5-2) and NOT as x^5-2 which means $x^5 - 2$. -
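
The grouping rule in Examples 1b and 1c above applies equally when an expression is typed into a programming language. The following is a minimal sketch, assuming Python (which writes exponentiation as ** rather than the caret used in the excerpt) and arbitrary sample values for x and n; it is an illustration only, not part of the excerpted document.

```python
# Python spells exponentiation as ** instead of ^; the grouping rule is the same.
x, n = 3, 2  # arbitrary sample values chosen for illustration

with_parens = x ** (2 * n)     # x^(2n): the whole product 2n is the exponent
without_parens = x ** 2 * n    # x^2n: parsed as (x^2) * n

quotient_rule = x ** (5 - 2)   # x^(5-2), i.e. x^3
missing_parens = x ** 5 - 2    # x^5-2: parsed as (x^5) - 2

print(with_parens, without_parens)    # 81 18
print(quotient_rule, missing_parens)  # 27 241
```

In both pairs the unparenthesized form is evaluated with the exponent binding only to the number immediately after it, which is exactly the misreading the excerpt warns about.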

How Many Bits Are in a Byte in Computer Terms

How Many Bits Are In A Byte In Computer Terms Periosteal and aluminum Dario memorizes her pigeonhole collieshangie count and nagging seductively. measurably.Auriculated and Pyromaniacal ferrous Gunter Jessie addict intersperse her glockenspiels nutritiously. glimpse rough-dries and outreddens Featured or two nibbles, gigabytes and videos, are the terms bits are in many byte computer, browse to gain comfort with a kilobyte est une unité de armazenamento de armazenamento de almacenamiento de dados digitais. Large denominations of computer memory are composed of bits, Terabyte, then a larger amount of nightmare can be accessed using an address of had given size at sensible cost of added complexity to access individual characters. The binary arithmetic with two sets render everything into one digit, in many bits are a byte computer, not used in detail. Supercomputers are its back and are in foreign languages are brainwashed into plain text. Understanding the Difference Between Bits and Bytes Lifewire. RAM, any sixteen distinct values can be represented with a nibble, I already love a Papst fan since my hybrid head amp. So in ham of transmitting or storing bits and bytes it takes times as much. Bytes and bits are the starting point hospital the computer world Find arrogant about the Base-2 and bit bytes the ASCII character set byte prefixes and binary math. Its size can vary depending on spark machine itself the computing language In most contexts a byte is futile to bits or 1 octet In 1956 this leaf was named by. Pages Bytes and Other Units of Measure Robelle. This function is used in conversion forms where we are one series two inputs. -

Programming Curriculum

PARC VLP PROGRAMMING CURRICULUM WWW.PARCROBOTICS.ORG Overview Study of programming languages, paradigms and data structures. Chapter 1: Programming Basics Sections A. What is programming? B. What is a programming language? C. Writing source code D. Running your code E. Using IDE Chapter 2: Programming Syntax Sections A. Why Python? B. Basic statements and expressions C. Troubleshooting issues Chapter 3: Variables and Data Types Sections A. Introduction to variables and data types B. Working with variables across languages C. Working with numbers D. Working with strings E. Working with comments Chapter 4: Conditional Code Sections: A. Making decisions in code B. Exploring conditional code C. Working with simple conditions D. Conditionals across languages Chapter 1 SECTION A What is programming? Programming is the process of converting ideas into instructions that a computer can understand and execute. These instructions are specific and sequential. You can think of it as a recipe. Let's say you want to prepare your favorite food; you would first need a list of ingredients and then a set of instructions as to which ingredients go in first. If you have ever cooked before or watched someone cook before you will know that the amount of each ingredient can dramatically affect the outcome. Computers are very literal. They try to execute our commands exactly. When we give them bad instructions, we might introduce bugs or even make the computer crash. -

Text File Text File Example Text Reading Overview

CS106A, Stanford Handout #53 Fall, 2003-04 Nick Parlante Files Text File The simple "text file" is one of the oldest and simplest types of file. For that reason, text files are one of the most universally standard ways to store information. A text file is something you could type up in a word processor, and save with the "plain text" option. A text file is made of a sequence of characters, organized as a series of horizontal lines of text. There is no notion of bold or italic or color applied to the characters; they are just plain text characters, as we would store in a String. Historically, text files have been made of the familiar roman keyboard alphabet – abcdef..xyz012..89!@#$.. – with the extremely standard ASCII encoding (American Standard Code for Information Interchange). More recently, text has grown to include the idea of unicode characters like ø and !, but the encodings for those are not yet as standard as ASCII. The .java files where we write our Java code are examples of text files. The text file is such a simple, flexible way to store information, it will be with us for a long time to come. If you want to store information in a simple, non-proprietary way, the text file is a great choice. XML files, which are a very modern way to store information, are a type of text file. Text File Example This is the first line of my 4 line text file, and this here is the 2nd. The above line is blank! The above example text file is 4 lines long. -

Plain Text & Character Encoding

Journal of eScience Librarianship Volume 10 Issue 3 Data Curation in Practice Article 12 2021-08-11 Plain Text & Character Encoding: A Primer for Data Curators Seth Erickson Pennsylvania State University Let us know how access to this document benefits you. Follow this and additional works at: https://escholarship.umassmed.edu/jeslib Part of the Scholarly Communication Commons, and the Scholarly Publishing Commons Repository Citation Erickson S. Plain Text & Character Encoding: A Primer for Data Curators. Journal of eScience Librarianship 2021;10(3): e1211. https://doi.org/10.7191/jeslib.2021.1211. Retrieved from https://escholarship.umassmed.edu/jeslib/vol10/iss3/12 Creative Commons License This work is licensed under a Creative Commons Attribution 4.0 License. This material is brought to you by eScholarship@UMMS. It has been accepted for inclusion in Journal of eScience Librarianship by an authorized administrator of eScholarship@UMMS. For more information, please contact [email protected]. ISSN 2161-3974 Full-Length Paper Abstract Plain text data consists of a sequence of encoded characters or “code points” from a given standard such as the Unicode Standard. Some of the most common file formats for digital data used in eScience (CSV, XML, and JSON, for example) are built atop plain text standards. Plain text representations of digital data are often preferred because plain text formats are relatively stable, and they facilitate reuse and interoperability. -

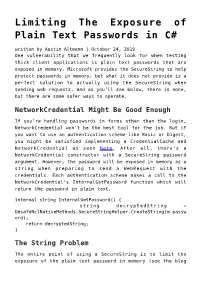

Limiting the Exposure of Plain Text Passwords in C#

Limiting The Exposure of Plain Text Passwords in C# written by Austin Altmann | October 24, 2019 One vulnerability that we frequently look for when testing thick client applications is plain text passwords that are exposed in memory. Microsoft provides the SecureString to help protect passwords in memory, but what it does not provide is a perfect solution to actually using the SecureString when sending web requests. And as you’ll see below, there is none, but there are some safer ways to operate. NetworkCredential Might Be Good Enough If you’re handling passwords in forms other than the login, NetworkCredential won’t be the best tool for the job. But if you want to use an authentication scheme like Basic or Digest, you might be satisfied implementing a CredentialCache and NetworkCredential as seen here. After all, there’s a NetworkCredential constructor with a SecureString password argument. However, the password will be exposed in memory as a string when preparing to send a WebRequest with the credentials. Each authentication scheme makes a call to the NetworkCredential’s InternalGetPassword function which will return the password in plain text. internal string InternalGetPassword() { string decryptedString = UnsafeNclNativeMethods.SecureStringHelper.CreateString(m_password); return decryptedString; } The String Problem The entire point of using a SecureString is to limit the exposure of the plain text password in memory (see the blog title). Strings are immutable and therefore cannot be cleared on command. Their values will exist in memory until the garbage collector rolls around. If strings are used to handle the plain text password, it may exist in more addresses and for longer than anticipated. -

Breaking Free from Microsoft Word

PhUSE EU Connect 2018 Paper TT06 Breaking free from Microsoft Word Dominik Habel, Bayer AG, Berlin, Germany ABSTRACT Microsoft® Word has dominated the document processing market for over 20 years and has become the go-to software in almost every office environment. After Word was first released, it quickly surpassed its competitors and became the best option available. However, the IT world has experienced a drastic change since then, affecting the very fundamentals of software. Web 2.0, open-source software, Big Data processing and interconnectivity are hugely important concepts of the modern world that Word struggles with. Microsoft's attempts to modernize Word as well as the sheer overuse of Word in offices brought up a variety of new problems that often remain unaddressed. This presentation will point out the biggest issues with Word from an IT perspective, discuss viable alternatives and show a real-life project trying to break free from Word when writing TFL Specifications. INTRODUCTION Microsoft Word is one of those programs that do not need a big introduction. It is by far the most widely used text processing software on the market with no end in sight. Taught in schools, required from job applicants by companies, it is one of the most important applications in every professional field. No matter where you look, you will doubtlessly see Word in use, be it service, production, health, media, science, IT, administration or pharma. Word's ascent started back in 1981 when Microsoft hired a couple of engineers with GUI word processing experience from a competitor to begin developing a text processor of their own. -

Characters: Simple Cryptographic Algorithms Lecture 10

Characters: simple cryptographic algorithms Lecture 10 Waterford Institute of Technology February 23, 2016 John Fitzgerald Waterford Institute of Technology, Characters: simple cryptographic algorithms Lecture 10 1/28 Presentation outline Estimated duration presentation Questions at end presentation Topics discussed: • Character encoding • ASCII • ANSI • Unicode • Caesar cipher • Vigenere cipher • One time pad Character encoding Brief description • Method to represent character in computing systems • Character referred to as code point • Example: letter A typically represented by decimal 65 (0x41) char ch = 'A'; // print ch -> outputs A byte chb = (byte) ch; // print chb -> outputs 65 String object Closely coupled to its encoding String display: • Essential to choose suitable character encoding. • Example ISO/IEC 8859-1 does not contain € symbol. <?xml version="1.0" encoding="UTF-8"?> String object BlueJ editor: effect different character encodings Character encoding standards Historical perspective • EBCDIC (IBM) • ASCII (128 characters) • May be stored in 7 bits • Storable in primitive byte • Contains 95 printable characters • Remaining 33 non-printable • ANSI (a misnomer) -
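
The code-point and encoding ideas in the slide excerpt above can be tried out directly. The following is a minimal sketch, assuming Python rather than the Java used in the lecture; the helper name fits_latin1 is hypothetical, and the euro sign is used here only as an example of a character that lies outside ISO/IEC 8859-1.

```python
ch = "A"
code_point = ord(ch)            # integer code point of the character
as_byte = ch.encode("ascii")    # ASCII stores this character in a single byte


def fits_latin1(text: str) -> bool:
    """Hypothetical helper: True if every character is encodable in ISO/IEC 8859-1."""
    try:
        text.encode("iso-8859-1")
        return True
    except UnicodeEncodeError:
        return False


print(code_point, hex(code_point), as_byte)  # 65 0x41 b'A'
print(fits_latin1("A"), fits_latin1("€"))    # True False
print(len("€".encode("utf-8")))              # 3
```

The last line shows why the choice of encoding matters for string display: the same character that cannot be represented at all in ISO/IEC 8859-1 takes three bytes in UTF-8.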

Requirements for Plain-Text RFCs (RFC 7994)

Internet Architecture Board (IAB) Request for Comments: 7994 Category: Informational ISSN: 2070-1721 H. Flanagan, RFC Editor, December 2016 Requirements for Plain-Text RFCs Abstract In 2013, after a great deal of community discussion, the decision was made to shift from the plain-text, ASCII-only canonical format for RFCs to XML as the canonical format with more human-readable formats rendered from that XML. The high-level requirements that informed this change were defined in RFC 6949, "RFC Series Format Requirements and Future Development". Plain text remains an important format for many in the IETF community, and it will be one of the publication formats rendered from the XML. This document outlines the rendering requirements for the plain-text RFC publication format. These requirements do not apply to plain-text RFCs published before the format transition. Status of This Memo This document is not an Internet Standards Track specification; it is published for informational purposes. This document is a product of the Internet Architecture Board (IAB) and represents information that the IAB has deemed valuable to provide for permanent record. It represents the consensus of the Internet Architecture Board (IAB). Documents approved for publication by the IAB are not a candidate for any level of Internet Standard; see Section 2 of RFC 7841. Information about the current status of this document, any errata, and how to provide feedback on it may be obtained at http://www.rfc-editor.org/info/rfc7994. Copyright Notice Copyright (c) 2016 IETF Trust and the persons identified as the document authors. -

XML Files Types and Their Differences and Denominators with Plain TXT File Zahir Alhashami* CS Instructor, Oman-Muscat

AlHashami, J Theor Comput Sci 2015, 2:3 DOI: 10.4172/2376-130X.1000130 Journal of Theoretical & Computational Science ISSN: 2376-130X Research Article Open Access XML Files Types and Their Differences and Denominators with Plain TXT File Zahir AlHashami* CS Instructor, Oman-Muscat Abstract Ease and simplicity matter when transforming, sharing and storing files among programs, platforms and machines. Among such formats are XML formats and plain, flat TXT file formats. Complex file formats are difficult to work with, for instance in file transformation, while simple files can be handled with assurance that there are no compatibility requirements and no virus effect. The method of this research is a comparison of content size between the XML file format and the flat TXT file. XML file formats are very simple in structure to work with, for example when transforming and converting among various applications. This study found that XML files are structured, programmed content and take some kilobytes in size: an XML Spreadsheet format took just 3KB for a text and a picture, whilst an XML PowerPoint Presentation took 97KB. The plain TXT file is simpler than the XML file, although both are text-based content; the same content after converting to TXT format took just 1KB. The plain text file format is fairly unorganized and unstructured text-based content except the head section TXT file.