Recent Trends on Research and Development of Quantum Computers and Standardization of Post-Quantum Cryptography



Recommended publications

-

A Quantitative Study of Advanced Encryption Standard Performance

United States Military Academy, USMA Digital Commons, West Point ETD, 12-2018. A Quantitative Study of Advanced Encryption Standard Performance as it Relates to Cryptographic Attack Feasibility. Daniel Hawthorne, United States Military Academy. Part of the Information Security Commons. Recommended Citation: Hawthorne, Daniel, "A Quantitative Study of Advanced Encryption Standard Performance as it Relates to Cryptographic Attack Feasibility" (2018). West Point ETD. 9. https://digitalcommons.usmalibrary.org/faculty_etd/9. A Dissertation Presented in Partial Fulfillment of the Requirements for the Degree of Doctor of Computer Science by Daniel Stephen Hawthorne, Colorado Technical University, December 2018. Committee: Dr. Richard Livingood, Ph.D., Chair; Dr. Kelly Hughes, DCS, Committee Member; Dr. James O. Webb, Ph.D., Committee Member. December 17, 2018. © Daniel Stephen Hawthorne, 2018. Abstract: The advanced encryption standard (AES) is the premier symmetric key cryptosystem in use today. Given its prevalence, the security provided by AES is of utmost importance. Technology is advancing at an incredible rate, in both capability and popularity, much faster than its rate of advancement in the late 1990s when AES was selected as the replacement standard for DES. Although the literature surrounding AES is robust, most studies fall into either theoretical or practical yet infeasible. -

Chapter 2 the Data Encryption Standard (DES)

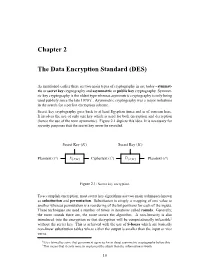

Chapter 2 The Data Encryption Standard (DES). As mentioned earlier there are two main types of cryptography in use today: symmetric or secret key cryptography and asymmetric or public key cryptography. Symmetric key cryptography is the oldest type, whereas asymmetric cryptography has only been used publicly since the late 1970s [1]. Asymmetric cryptography was a major milestone in the search for a perfect encryption scheme. Secret key cryptography goes back to at least Egyptian times and is of concern here. It involves the use of only one key which is used for both encryption and decryption (hence the use of the term symmetric). Figure 2.1 depicts this idea. It is necessary for security purposes that the secret key never be revealed. [Figure 2.1: Secret key encryption. Plaintext (P) → E{P,K} → Ciphertext (C) → D{C,K} → Plaintext (P), with the secret key (K) supplied to both E and D.] To accomplish encryption, most secret key algorithms use two main techniques known as substitution and permutation. Substitution is simply a mapping of one value to another, whereas permutation is a reordering of the bit positions for each of the inputs. These techniques are used a number of times in iterations called rounds. Generally, the more rounds there are, the more secure the algorithm. A non-linearity is also introduced into the encryption so that decryption will be computationally infeasible [2] without the secret key. This is achieved with the use of S-boxes, which are basically non-linear substitution tables where either the output is smaller than the input or vice versa. [1] It is claimed by some that government agencies knew about asymmetric cryptography before this. -

TS 135 202 V7.0.0 (2007-06) Technical Specification

ETSI TS 135 202 V7.0.0 (2007-06) Technical Specification. Universal Mobile Telecommunications System (UMTS); Specification of the 3GPP confidentiality and integrity algorithms; Document 2: Kasumi specification (3GPP TS 35.202 version 7.0.0 Release 7). Reference: RTS/TSGS-0335202v700. Keywords: SECURITY, UMTS. ETSI, 650 Route des Lucioles, F-06921 Sophia Antipolis Cedex, FRANCE. Tel.: +33 4 92 94 42 00, Fax: +33 4 93 65 47 16. Siret N° 348 623 562 00017 - NAF 742 C. Association à but non lucratif enregistrée à la Sous-Préfecture de Grasse (06) N° 7803/88. Important notice: Individual copies of the present document can be downloaded from: http://www.etsi.org The present document may be made available in more than one electronic version or in print. In any case of existing or perceived difference in contents between such versions, the reference version is the Portable Document Format (PDF). In case of dispute, the reference shall be the printing on ETSI printers of the PDF version kept on a specific network drive within ETSI Secretariat. Users of the present document should be aware that the document may be subject to revision or change of status. Information on the current status of this and other ETSI documents is available at http://portal.etsi.org/tb/status/status.asp If you find errors in the present document, please send your comment to one of the following services: http://portal.etsi.org/chaircor/ETSI_support.asp Copyright Notification: No part may be reproduced except as authorized by written permission. -

Meet-In-The-Middle Attacks on Reduced-Round XTEA*

Meet-in-the-Middle Attacks on Reduced-Round XTEA. Gautham Sekar, Nicky Mouha, Vesselin Velichkov, and Bart Preneel. 1 Department of Electrical Engineering ESAT/SCD-COSIC, Katholieke Universiteit Leuven, Kasteelpark Arenberg 10, B-3001 Heverlee, Belgium. 2 Interdisciplinary Institute for BroadBand Technology (IBBT), Belgium. {Gautham.Sekar,Nicky.Mouha,Vesselin.Velichkov,Bart.Preneel}@esat.kuleuven.be Abstract. The block cipher XTEA, designed by Needham and Wheeler, was published as a technical report in 1997. The cipher was a result of fixing some weaknesses in the cipher TEA (also designed by Wheeler and Needham), which was used in Microsoft’s Xbox gaming console. XTEA is a 64-round Feistel cipher with a block size of 64 bits and a key size of 128 bits. In this paper, we present meet-in-the-middle attacks on twelve variants of the XTEA block cipher, where each variant consists of 23 rounds. Two of these require only 18 known plaintexts and a computational effort equivalent to testing about $2^{117}$ keys, with a success probability of $1 - 2^{-1025}$. Under the standard (single-key) setting, there is no attack reported on 23 or more rounds of XTEA, that requires less time and fewer data than the above. This paper also discusses a variant of the classical meet-in-the-middle approach. All attacks in this paper are applicable to XETA as well, a block cipher that has not undergone public analysis yet. TEA, XTEA and XETA are implemented in the Linux kernel. Keywords: Cryptanalysis, block cipher, meet-in-the-middle attack, Feistel network, XTEA, XETA. -
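
To make the "64-round Feistel cipher with a block size of 64 bits and a key size of 128 bits" concrete, here is a minimal C sketch that follows the widely circulated public-domain XTEA reference routine. It is not taken from the paper above. The function names `xtea_encrypt` and `xtea_decrypt` and the `cycles` parameter are assumptions made for this illustration; one cycle performs two Feistel rounds, so `cycles = 32` corresponds to the full 64 rounds.

```c
#include <stdint.h>

#define XTEA_DELTA 0x9E3779B9u /* same additive constant as TEA */

/* Encrypt one 64-bit block v[0..1] in place with the 128-bit key key[0..3].
   Each loop iteration (one "cycle") performs two Feistel rounds. */
void xtea_encrypt(unsigned cycles, uint32_t v[2], const uint32_t key[4])
{
    uint32_t v0 = v[0], v1 = v[1], sum = 0;
    for (unsigned i = 0; i < cycles; i++) {
        v0 += (((v1 << 4) ^ (v1 >> 5)) + v1) ^ (sum + key[sum & 3]);
        sum += XTEA_DELTA;
        v1 += (((v0 << 4) ^ (v0 >> 5)) + v0) ^ (sum + key[(sum >> 11) & 3]);
    }
    v[0] = v0;
    v[1] = v1;
}

/* Inverse of xtea_encrypt: undo the rounds in reverse order. */
void xtea_decrypt(unsigned cycles, uint32_t v[2], const uint32_t key[4])
{
    uint32_t v0 = v[0], v1 = v[1];
    uint32_t sum = XTEA_DELTA * cycles; /* wraps modulo 2^32 */
    for (unsigned i = 0; i < cycles; i++) {
        v1 -= (((v0 << 4) ^ (v0 >> 5)) + v0) ^ (sum + key[(sum >> 11) & 3]);
        sum -= XTEA_DELTA;
        v0 -= (((v1 << 4) ^ (v1 >> 5)) + v1) ^ (sum + key[sum & 3]);
    }
    v[0] = v0;
    v[1] = v1;
}
```

Because the sketch counts whole cycles, it can only express an even number of rounds; a 23-round variant like those attacked in the paper would need a per-round loop, which is left out here for brevity.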

Species Composition of the Largest Shark Fin Retail-Market in Mainland

www.nature.com/scientificreports. Species composition of the largest shark fin retail-market in mainland China. Diego Cardeñosa1,2*, Andrew T. Fields1, Elizabeth A. Babcock3, Stanley K. H. Shea4, Kevin A. Feldheim5 & Demian D. Chapman6. Species-specific monitoring through large shark fin market surveys has been a valuable data source to estimate global catches and international shark fin trade dynamics. Hong Kong and Guangzhou, mainland China, are the largest shark fin markets and consumption centers in the world. We used molecular identification protocols on randomly collected processed fin trimmings (n = 2000) and non-parametric species estimators to investigate the species composition of the Guangzhou retail market and compare the species diversity between the Guangzhou and Hong Kong shark fin retail markets. Species diversity was similar between both trade hubs with a small subset of species dominating the composition. The blue shark (Prionace glauca) was the most common species overall followed by the CITES-listed silky shark (Carcharhinus falciformis), scalloped hammerhead shark (Sphyrna lewini), smooth hammerhead shark (S. zygaena) and shortfin mako shark (Isurus oxyrinchus). Our results support previous indications of high connectivity between the shark fin markets of Hong Kong and mainland China and suggest that systematic studies of other fin trade hubs within Mainland China and stronger law-enforcement protocols and capacity building are needed. Many shark populations have declined in the last four decades, mainly due to overexploitation to supply the demand for their fins in Asia and meat in many other countries [1–4]. Mainland China was historically the world’s second largest importer of shark fins and foremost consumer of shark fin soup, yet very little is known about the species composition of shark fins in this trade hub [2]. -

Design and Low Power VLSI Implementation of Triple -DES Algorithm

Design and Low Power VLSI Implementation of Triple-DES Algorithm. Alexandra Camacho, Isaac Sanchez, Eugene B. John and Ram Krishnan, Department of Electrical and Computer Engineering, The University of Texas at San Antonio, One UTSA Circle, San Antonio, TX 78249-0669. Abstract: Triple DES (Data Encryption Standard) is a widely used encryption algorithm known to achieve good performance and high security. In this paper, we describe the design and low power VLSI implementation of the well-known triple DES algorithm. The implementation includes two main parts: key generation and the encryption/decryption process. In the DES module, the key generation part takes the given key and produces 16 distinct keys to be used during the encryption stage. The DES process is then repeated three times for added security. The chip was implemented using TSMC 180nm process. The designed chip has an area of 766,359 µm² and the power dissipation is 32.38mW for a Vdd of 1.8V. Keywords: Encryption, DES, 3DES, Low Power, Cryptosystem. [...] we seek to improve encryption by building on the DES chip and developing a Triple-DES algorithm that is more secure and commonly used by financial institutions for secure transactions. II. BACKGROUND. A. Data Encryption Standard. The Data Encryption Standard (DES) is a block cipher that uses shared secret encryption. DES is the archetypal block cipher which is an algorithm that takes a fixed-length string of plaintext bits and transforms it through a series of complicated operations into another ciphertext bitstring of the same length. -

Data Encryption Standard (DES)

6 Data Encryption Standard (DES). Objectives: In this chapter, we discuss the Data Encryption Standard (DES), the modern symmetric-key block cipher. The following are our main objectives for this chapter: + To review a short history of DES + To define the basic structure of DES + To describe the details of building elements of DES + To describe the round keys generation process + To analyze DES. The emphasis is on how DES uses a Feistel cipher to achieve confusion and diffusion of bits from the plaintext to the ciphertext. 6.1 INTRODUCTION. The Data Encryption Standard (DES) is a symmetric-key block cipher published by the National Institute of Standards and Technology (NIST). 6.1.1 History. In 1973, NIST published a request for proposals for a national symmetric-key cryptosystem. A proposal from IBM, a modification of a project called Lucifer, was accepted as DES. DES was published in the Federal Register in March 1975 as a draft of the Federal Information Processing Standard (FIPS). After the publication, the draft was criticized severely for two reasons. First, critics questioned the small key length (only 56 bits), which could make the cipher vulnerable to brute-force attack. Second, critics were concerned about some hidden design behind the internal structure of DES. They were suspicious that some part of the structure (the S-boxes) may have some hidden trapdoor that would allow the National Security Agency (NSA) to decrypt the messages without the need for the key. Later IBM designers mentioned that the internal structure was designed to prevent differential cryptanalysis. -

TEA, a Tiny Encryption Algorithm

TEA, a Tiny Encryption Algorithm. David J. Wheeler, Roger M. Needham, Computer Laboratory, Cambridge University, England. Abstract. We give a short routine which is based on a Feistel iteration and uses a large number of rounds to get security with simplicity. Introduction. We design a short program which will run on most machines and encypher safely. It uses a large number of iterations rather than a complicated program. It is hoped that it can easily be translated into most languages in a compatible way. The first program is given below. It uses little set up time and does a weak non linear iteration enough rounds to make it secure. There are no preset tables or long set up times. It assumes 32 bit words. Encode Routine. Routine, written in the C language, for encoding with key k[0] - k[3]. Data in v[0] and v[1]. void code(long* v, long* k) { unsigned long y=v[0], z=v[1], sum=0, /* set up */ delta=0x9e3779b9, /* a key schedule constant */ n=32; while (n-->0) { /* basic cycle start */ sum += delta; y += ((z<<4)+k[0]) ^ (z+sum) ^ ((z>>5)+k[1]); z += ((y<<4)+k[2]) ^ (y+sum) ^ ((y>>5)+k[3]); } /* end cycle */ v[0]=y; v[1]=z; } Basics of the routine. It is a Feistel type routine although addition and subtraction are used as the reversible operators rather than XOR. The routine relies on the alternate use of XOR and ADD to provide nonlinearity. A dual shift causes all bits of the key and data to be mixed repeatedly. -
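
The encode routine quoted above "assumes 32 bit words", which `long` no longer guarantees on many 64-bit platforms. The following is a minimal sketch of the same cycle using fixed-width types, together with the matching inverse. The names `tea_encrypt`, `tea_decrypt`, `TEA_DELTA` and `TEA_CYCLES` are assumptions introduced here; the round expressions, the constant and the cycle count are those of the quoted routine, and the decrypt function simply runs them in reverse with subtraction.

```c
#include <stdint.h>

#define TEA_DELTA  0x9e3779b9u /* key schedule constant from the routine */
#define TEA_CYCLES 32u         /* n = 32 in the quoted code */

/* Encrypt one 64-bit block v[0..1] in place with the key k[0..3].
   uint32_t arithmetic wraps modulo 2^32, matching the 32-bit-word
   assumption of the original. */
void tea_encrypt(uint32_t v[2], const uint32_t k[4])
{
    uint32_t y = v[0], z = v[1], sum = 0;
    for (uint32_t n = 0; n < TEA_CYCLES; n++) {
        sum += TEA_DELTA;
        y += ((z << 4) + k[0]) ^ (z + sum) ^ ((z >> 5) + k[1]);
        z += ((y << 4) + k[2]) ^ (y + sum) ^ ((y >> 5) + k[3]);
    }
    v[0] = y;
    v[1] = z;
}

/* Decrypt: start from the final value of sum and undo each half-cycle
   in reverse order, replacing += with -=. */
void tea_decrypt(uint32_t v[2], const uint32_t k[4])
{
    uint32_t y = v[0], z = v[1];
    uint32_t sum = TEA_DELTA * TEA_CYCLES; /* wraps modulo 2^32 */
    for (uint32_t n = 0; n < TEA_CYCLES; n++) {
        z -= ((y << 4) + k[2]) ^ (y + sum) ^ ((y >> 5) + k[3]);
        y -= ((z << 4) + k[0]) ^ (z + sum) ^ ((z >> 5) + k[1]);
        sum -= TEA_DELTA;
    }
    v[0] = y;
    v[1] = z;
}
```

A caller would fill a two-word block and a four-word key, call `tea_encrypt`, and later recover the block with `tea_decrypt` under the same key. The sketch only illustrates the structure of the routine and is not a recommendation to use TEA in new designs.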

CRYPTREC Report 2001

CRYPTREC Report 2001. March 2002. Information-technology Promotion Agency, Japan; Telecommunications Advancement Organization of Japan. Contents:
Introduction
On the CRYPTREC Evaluation Committee Report
Note on the use of this report
1 Overview of Cryptographic Technique Evaluation
  1.1 Evaluation Organs and Schedule
  1.2 How cryptography evaluation was carried out
  1.3 Terminology
  1.4 Evaluation Committee Members
2 Evaluation of public key cryptographic techniques
  2.1 Target of Evaluation and Evaluation Method
    2.1.1 Evaluated Cryptographic Techniques
    2.1.2 Evaluation Policy
    2.1.3 Evaluation Method
  2.2 Evaluation result
    2.2.1 Outline of evaluation result
    2.2.2 General Evaluation of the Difficulty of Arithmetic Problems
    2.2.3 Overall Judgment of Cryptographic Techniques that were the Target of Detailed Evaluation
    2.2.4 Overall Judgment of Cryptographic Techniques under Observation
    2.2.5 Overall Judgment of Cryptosystems that were Targets of Screening Evaluations in 2001 -

FPGA Modeling and Optimization of a SIMON Lightweight Block Cipher

Sensors, Article. FPGA Modeling and Optimization of a SIMON Lightweight Block Cipher. Sa’ed Abed 1,*, Reem Jaffal 1, Bassam Jamil Mohd 2 and Mohammad Alshayeji 1. 1 Department of Computer Engineering, Kuwait University, Safat 13060, Kuwait. 2 Department of Computer Engineering, Hashemite University, Zarqa 13115, Jordan. * Correspondence: Tel.: +965-249-837-90. Received: 18 December 2018; Accepted: 18 February 2019; Published: 21 February 2019. Abstract: Security of sensitive data exchanged between devices is essential. Low-resource devices (LRDs), designed for constrained environments, are increasingly becoming ubiquitous. Lightweight block ciphers provide confidentiality for LRDs by balancing the required security with minimal resource overhead. SIMON is a lightweight block cipher targeted for hardware implementations. The objective of this research is to implement, optimize, and model SIMON cipher design for LRDs, with an emphasis on energy and power, which are critical metrics for LRDs. Various implementations use field-programmable gate array (FPGA) technology. Two types of design implementations are examined: scalar and pipelined. Results show that scalar implementations require 39% less resources and 45% less power consumption. The pipelined implementations demonstrate 12 times the throughput and consume 31% less energy. Moreover, the most energy-efficient and optimum design is a two-round pipelined implementation, which consumes 31% of the best scalar’s implementation energy. The scalar design that consumes the least energy is a four-round implementation. The scalar design that uses the least area and power is the one-round implementation. -

Secure Message Transfer Using Triple DES

International Journal of Computer Applications (0975 – 8887), Volume 165 – No.8, May 2017. Secure Message Transfer using Triple DES. Somya Garg, Computer Science Department, GCET, Greater Noida; Tarun Garg, Computer Science Department, GCET, Greater Noida; Bhawna Mallick, PhD, Professor & Head at GCET, Greater Noida. ABSTRACT: With the rapid growing of internet and networks applications, data security becomes more important than ever before. Encryption algorithms play a crucial role in information security systems. In this paper, we have a study of a popular encryption algorithm: Triple DES. We overviewed the base functions and analyzed the security for the algorithm. We have successfully sent mails from one user to other and if a suspicious word is encountered then the mail is being sent to the admin instead of that user. Keywords: Triple DES, Encryption, Security, Suspicious word, Cipher text, Decryption. 1. [...] into non readable message before the transmission of actual message. For example, “Ajd672#@91ukl8*^5%” is a Cipher Text produced for “Hello Friend how are you”. Encryption: A process of converting Plain Text into Cipher Text is called as Encryption. Cryptography uses the encryption technique to send confidential messages through an insecure channel. The process of encryption requires two things: an encryption algorithm and a key. An encryption algorithm means the technique that has been used in encryption. Encryption takes place at the sender side. Decryption: A reverse process of encryption is called as Decryption. It is a process of converting Cipher Text into Plain Text. Cryptography uses the decryption technique at the receiver side to obtain the original message from non readable -

The Impact of Quantum Computing on Present Cryptography

(IJACSA) International Journal of Advanced Computer Science and Applications, Vol. 9, No. 3, 2018. The Impact of Quantum Computing on Present Cryptography. Vasileios Mavroeidis, Kamer Vishi, Mateusz D. Zych, Audun Jøsang, Department of Informatics, University of Oslo, Norway. Email(s): {vasileim, kamerv, mateusdz, josang}@ifi.uio.no Abstract: The aim of this paper is to elucidate the implications of quantum computing in present cryptography and to introduce the reader to basic post-quantum algorithms. In particular the reader can delve into the following subjects: present cryptographic schemes (symmetric and asymmetric), differences between quantum and classical computing, challenges in quantum computing, quantum algorithms (Shor’s and Grover’s), public key encryption schemes affected, symmetric schemes affected, the impact on hash functions, and post quantum cryptography. Specifically, the section of Post-Quantum Cryptography deals with different quantum key distribution methods and mathematical-based solutions, such as the BB84 protocol, lattice-based cryptography, multivariate-based cryptography, hash-based signatures and code-based cryptography. Keywords: quantum computers; post-quantum cryptography; Shor’s algorithm; Grover’s algorithm; asymmetric cryptography; [...] challenge of building a true quantum computer. Furthermore, we introduce two important quantum algorithms that can have a huge impact in asymmetric cryptography and less in symmetric, namely Shor’s algorithm and Grover’s algorithm respectively. Finally, post-quantum cryptography is presented. Particularly, an emphasis is given on the analysis of quantum key distribution and some mathematical based solutions such as lattice-based cryptography, multivariate-based cryptography, hash-based signatures, and code-based cryptography. II. PRESENT CRYPTOGRAPHY. In this chapter we explain briefly the role of symmetric algorithms, asymmetric algorithms and hash functions in modern cryptography.
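
The remark that Grover's algorithm affects symmetric cryptography "less" can be made concrete with a short worked estimate. This is a standard textbook approximation, not a result quoted from the paper; the symbols $k$ (key length in bits), $N$ (number of candidate keys) and $T_{\mathrm{Grover}}$ (number of Grover iterations) are introduced here for illustration.

```latex
% Exhaustive key search over a k-bit key space
N = 2^{k}, \qquad T_{\mathrm{classical}} \approx 2^{k-1} \text{ trials on average}

% Grover search needs roughly a square-root number of iterations
T_{\mathrm{Grover}} \approx \frac{\pi}{4}\sqrt{N} = \frac{\pi}{4}\, 2^{k/2}

% Worked values
k = 128:\; T_{\mathrm{Grover}} \approx 2^{64}, \qquad
k = 256:\; T_{\mathrm{Grover}} \approx 2^{128}
```

Under this estimate, doubling the key length restores the pre-quantum margin against exhaustive search. Shor's algorithm, by contrast, solves factoring and discrete logarithms in polynomial time, so larger keys do not rescue the asymmetric schemes built on those problems.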