The Law of Large Numbers and the Central Limit Theorem (PDF)

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


5. The Student t Distribution

In this section we will study a distribution that has special importance in statistics. In particular, this distribution will arise in the study of a standardized version of the sample mean when the underlying distribution is normal.

The Probability Density Function

Suppose that $Z$ has the standard normal distribution, $V$ has the chi-squared distribution with $n$ degrees of freedom, and that $Z$ and $V$ are independent. Let

$$T = \frac{Z}{\sqrt{V/n}}$$

In the following exercise, you will show that $T$ has probability density function given by

$$f(t) = \frac{\Gamma((n+1)/2)}{\sqrt{n\pi}\,\Gamma(n/2)} \left(1 + \frac{t^2}{n}\right)^{-(n+1)/2}, \quad t \in \mathbb{R}$$

1. Show that $T$ has the given probability density function by using the following steps.
   a. Show first that the conditional distribution of $T$ given $V = v$ is normal with mean 0 and variance $n/v$.
   b. Use (a) to find the joint probability density function of $(T, V)$.
   c. Integrate the joint probability density function in (b) with respect to $v$ to find the probability density function of $T$.

The distribution of $T$ is known as the Student t distribution with $n$ degrees of freedom. The distribution is well defined for any $n > 0$, but in practice, only positive integer values of $n$ are of interest. This distribution was first studied by William Gosset, who published under the pseudonym Student. In addition to supplying the proof, Exercise 1 provides a good way of thinking of the t distribution: the t distribution arises when the variance of a mean 0 normal distribution is randomized in a certain way.
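The construction of T from Z and V can be checked numerically. The following is a minimal sketch, not part of the original exercise: it draws T = Z / sqrt(V/n) directly and compares the empirical probability of an interval with a numerical integral of the density f(t). The function names (`t_pdf`, `sample_t`, `empirical_prob`, `integrate_pdf`) are illustrative, and the sketch assumes the standard fact that a chi-squared variable with n degrees of freedom is a gamma variable with shape n/2 and scale 2.

```python
import math
import random

def t_pdf(t, n):
    """Density of the Student t distribution with n degrees of freedom."""
    coeff = math.gamma((n + 1) / 2) / (math.sqrt(n * math.pi) * math.gamma(n / 2))
    return coeff * (1 + t * t / n) ** (-(n + 1) / 2)

def sample_t(n, rng):
    """Draw one value of T = Z / sqrt(V / n) with Z and V independent."""
    z = rng.gauss(0.0, 1.0)            # standard normal
    v = rng.gammavariate(n / 2, 2.0)   # chi-squared(n) as Gamma(shape n/2, scale 2)
    return z / math.sqrt(v / n)

def empirical_prob(n, a, b, trials=100_000, seed=0):
    """Fraction of simulated T values that land in [a, b]."""
    rng = random.Random(seed)
    hits = sum(1 for _ in range(trials) if a <= sample_t(n, rng) <= b)
    return hits / trials

def integrate_pdf(n, a, b, steps=2_000):
    """Midpoint-rule approximation of the integral of t_pdf over [a, b]."""
    h = (b - a) / steps
    return h * sum(t_pdf(a + (i + 0.5) * h, n) for i in range(steps))

if __name__ == "__main__":
    n = 5
    print("simulated P(-1 <= T <= 1):", empirical_prob(n, -1.0, 1.0))
    print("integrated density       :", integrate_pdf(n, -1.0, 1.0))
```

The two printed values should agree to within simulation error, which is what one would expect if the density formula describes the simulated ratio.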

Chapter 8 Fundamental Sampling Distributions And

CHAPTER 8 FUNDAMENTAL SAMPLING DISTRIBUTIONS AND DATA DESCRIPTIONS

8.1 Random Sampling

The basic idea of statistical inference is that we are allowed to draw inferences or conclusions about a population based on the statistics computed from the sample data, so that we can infer something about the parameters and obtain more information about the population. Thus we must make sure that the samples are good representatives of the population, and pay attention to sampling bias and variability to ensure the validity of statistical inference.

[...] sampling procedure, it is desirable to choose a random sample in the sense that the observations are made independently and at random.

Random Sample

Let $X_1, X_2, \dots, X_n$ be $n$ independent random variables, each having the same probability distribution $f(x)$. Define $X_1, X_2, \dots, X_n$ to be a random sample of size $n$ from the population $f(x)$ and write its joint probability distribution as

$$f(x_1, x_2, \dots, x_n) = f(x_1)\, f(x_2) \cdots f(x_n).$$

8.2 Some Important Statistics

It is important to measure the center and the variability of the population. For the purpose of inference, we study the following measures regarding the center and the variability.

8.2.1 Location Measures of a Sample

The most commonly used statistics for measuring the center of a set of data, arranged in order of magnitude, are the sample mean, sample median, and sample mode. Let $X_1, X_2, \dots, X_n$ represent $n$ random variables.

Sample Mean

To calculate the average, or mean, add all values, then divide by the number of individuals.

Chapter 4: Central Tendency and Dispersion

In this chapter, you can learn

- how the values of the cases on a single variable can be summarized using measures of central tendency and measures of dispersion;
- how the central tendency can be described using statistics such as the mode, median, and mean;
- how the dispersion of scores on a variable can be described using statistics such as a percent distribution, minimum, maximum, range, and standard deviation along with a few others; and
- how a variable’s level of measurement determines what measures of central tendency and dispersion to use.

Schooling, Politics, and Life After Death

Once again, we will use some questions about 1980 GSS young adults as opportunities to explain and demonstrate the statistics introduced in the chapter:

- Among the 1980 GSS young adults, are there both believers and nonbelievers in a life after death? Which is the more common view?
- On a seven-attribute political party allegiance variable anchored at one end by “strong Democrat” and at the other by “strong Republican,” what was the most Democratic attribute used by any of the 1980 GSS young adults? The most Republican attribute? If we put all 1980 GSS young adults in order from the strongest Democrat to the strongest Republican, what is the political party affiliation of the person in the middle?
- What was the average number of years of schooling completed at the time of the survey by 1980 GSS young adults? Were most of these twentysomethings pretty close to the average on

Notes Mean, Median, Mode & Range

How Do You Use Mode, Median, Mean, and Range to Describe Data?

There are many ways to describe the characteristics of a set of data. The mode, median, and mean are all called measures of central tendency. These measures of central tendency and range are described in the table below.

| Measure | What it describes | How to use it |
| --- | --- | --- |
| Mode | The mode of a set of data describes which value occurs most frequently. If two or more numbers occur the same number of times and occur more often than all the other numbers in the set, those numbers are all modes for the data set. If each number in the set occurs the same number of times, the set of data has no mode. | Use the mode to show which value in a set of data occurs most often. For the set {1, 1, 2, 3, 5, 6, 10}, the mode is 1 because it occurs most frequently. |
| Median | The median of a set of data describes what value is in the middle if the set is ordered from greatest to least or from least to greatest. If there are an even number of values, the median is the average of the two middle values. Half of the values are greater than the median, and half of the values are less than the median. | Use the median to show which number in a set of data is in the middle when the numbers are listed in order. For the set {1, 1, 2, 3, 5, 6, 10}, the median is 3 because it is in the middle when the numbers are listed in order. |
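The rules in these notes can be sketched in a few lines of Python. This is only an illustration: the helper names `modes` and `data_range` are made up for this example, the "no mode" rule follows the wording of the notes (every value equally frequent), and the input is assumed to be a non-empty list of numbers.

```python
from collections import Counter
from statistics import mean, median

def modes(data):
    """Return all modes, or an empty list when every value is equally frequent."""
    counts = Counter(data)
    top = max(counts.values())
    # Per the notes: if each distinct number occurs equally often, there is no mode.
    if len(counts) > 1 and all(c == top for c in counts.values()):
        return []
    return sorted(value for value, c in counts.items() if c == top)

def data_range(data):
    """Difference between the greatest and least values."""
    return max(data) - min(data)

if __name__ == "__main__":
    sample = [1, 1, 2, 3, 5, 6, 10]   # the set used in the notes
    print("mode(s):", modes(sample))
    print("median :", median(sample))
    print("mean   :", mean(sample))
    print("range  :", data_range(sample))
```

For an even number of values, `statistics.median` averages the two middle values, which matches the rule stated for the median.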

Volatility Modeling Using the Student's T Distribution

Volatility Modeling Using the Student’s t Distribution. Maria S. Heracleous. Dissertation submitted to the Faculty of the Virginia Polytechnic Institute and State University in partial fulfillment of the requirements for the degree of Doctor of Philosophy in Economics. Aris Spanos, Chair; Richard Ashley; Raman Kumar; Anya McGuirk; Dennis Yang. August 29, 2003, Blacksburg, Virginia. Keywords: Student’s t Distribution, Multivariate GARCH, VAR, Exchange Rates. Copyright 2003, Maria S. Heracleous.

(ABSTRACT) Over the last twenty years or so the Dynamic Volatility literature has produced a wealth of univariate and multivariate GARCH type models. While the univariate models have been relatively successful in empirical studies, they suffer from a number of weaknesses, such as unverifiable parameter restrictions, existence of moment conditions and the retention of Normality. These problems are naturally more acute in the multivariate GARCH type models, which in addition have the problem of overparameterization. This dissertation uses the Student’s t distribution and follows the Probabilistic Reduction (PR) methodology to modify and extend the univariate and multivariate volatility models viewed as alternative to the GARCH models. Its most important advantage is that it gives rise to internally consistent statistical models that do not require ad hoc parameter restrictions unlike the GARCH formulations. Chapters 1 and 2 provide an overview of my dissertation and recent developments in the volatility literature. In Chapter 3 we provide an empirical illustration of the PR approach for modeling univariate volatility. Estimation results suggest that the Student’s t AR model is a parsimonious and statistically adequate representation of exchange rate returns and Dow Jones returns data.

The Value at the Mode in Multivariate t Distributions: A Curiosity or Not?

Christophe Ley and Anouk Neven. Université Libre de Bruxelles, ECARES and Département de Mathématique, Campus Plaine, boulevard du Triomphe, CP210, B-1050, Brussels, Belgium. Université du Luxembourg, UR en mathématiques, Campus Kirchberg, 6, rue Richard Coudenhove-Kalergi, L-1359, Luxembourg, Grand-Duchy of Luxembourg.

Abstract: It is a well-known fact that multivariate Student t distributions converge to multivariate Gaussian distributions as the number of degrees of freedom $\nu$ tends to infinity, irrespective of the dimension $k \ge 1$. In particular, the Student's value at the mode (that is, the normalizing constant obtained by evaluating the density at the center)

$$c_{\nu,k} = \frac{\Gamma\left(\frac{\nu+k}{2}\right)}{(\pi\nu)^{k/2}\,\Gamma\left(\frac{\nu}{2}\right)}$$

converges towards the Gaussian value at the mode $c_k = \frac{1}{(2\pi)^{k/2}}$. In this note, we prove a curious fact: $c_{\nu,k}$ tends monotonically to $c_k$ for each $k$, but the monotonicity changes from increasing in dimension $k = 1$ to decreasing in dimensions $k \ge 3$ whilst being constant in dimension $k = 2$. A brief discussion raises the question whether this a priori curious finding is a curiosity, in fine.

AMS 2000 subject classifications: Primary 62E10; secondary 60E05. Keywords and phrases: Gamma function, Gaussian distribution, value at the mode, Student t distribution, tail weight.

1. Foreword. One of the first things we learn about Student t distributions is the fact that, when the degrees of freedom $\nu$ tend to infinity, we retrieve the Gaussian distribution, which has the lightest tails in the Student family of distributions.
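The monotonicity pattern described in the abstract can be observed numerically. The sketch below is an illustration under stated assumptions, not the authors' proof: it evaluates the value at the mode through log-gamma functions for numerical stability, and the function names `student_mode_value` and `gaussian_mode_value` are hypothetical.

```python
import math

def student_mode_value(nu, k):
    """c_{nu,k} = Gamma((nu+k)/2) / ((pi*nu)^(k/2) * Gamma(nu/2)), via lgamma."""
    log_c = (math.lgamma((nu + k) / 2)
             - math.lgamma(nu / 2)
             - (k / 2) * math.log(math.pi * nu))
    return math.exp(log_c)

def gaussian_mode_value(k):
    """c_k = (2*pi)^(-k/2), the k-dimensional standard Gaussian density at 0."""
    return (2 * math.pi) ** (-k / 2)

if __name__ == "__main__":
    for k in (1, 2, 3):
        values = [student_mode_value(nu, k) for nu in (1, 5, 25, 125)]
        print(f"k={k}: Student values {values}, Gaussian limit {gaussian_mode_value(k)}")
```

Printing a few values per dimension shows the sequence rising for k = 1, staying flat for k = 2, and falling for k = 3, each towards the Gaussian value.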

“Reliable Reasoning”

Abstracta, Special Issue III, pp. 10–17, 2009. Comments on Harman and Kulkarni’s “Reliable Reasoning”. Glenn Shafer.

Gil Harman and Sanjeev Kulkarni have written an enjoyable and informative book that makes Vladimir Vapnik’s ideas accessible to a wide audience and explores their relevance to the philosophy of induction and reliable reasoning. The undertaking is important, and the execution is laudable. Vapnik’s work with Alexey Chervonenkis on statistical classification, carried out in the Soviet Union in the 1960s and 1970s, became popular in computer science in the 1990s, partly as the result of Vapnik’s books in English. Vapnik’s statistical learning theory and the statistical methods he calls support vector machines now dominate machine learning, the branch of computer science concerned with statistical prediction, and recently (largely after Harman and Kulkarni completed their book) these ideas have also become well known among mathematical statisticians. A century ago, when the academic world was smaller and less specialized, philosophers, mathematicians, and scientists interested in probability, induction, and scientific methodology talked with each other more than they do now. Keynes studied Bortkiewicz, Kolmogorov studied von Mises, Le Dantec debated Borel, and Fisher debated Jeffreys. Today, debate about probability and induction is mostly conducted within more homogeneous circles, intellectual communities that sometimes cross the boundaries of academic disciplines but overlap less in their discourse than in their membership. Philosophy of science, cognitive science, and machine learning are three of these communities. The greatest virtue of this book is that it makes ideas from these three communities confront each other. In particular, it looks at how Vapnik’s ideas in machine learning can answer or dissolve questions and puzzles that have been posed by philosophers.

18.600: Lecture 31 Strong Law of Large Numbers and Jensen's

18.600: Lecture 31. Strong law of large numbers and Jensen's inequality. Scott Sheffield, MIT.

Outline

- A story about Pedro
- Strong law of large numbers
- Jensen's inequality

Pedro's hopes and dreams

- Pedro is considering two ways to invest his life savings.
- One possibility: put the entire sum in government insured interest-bearing savings account. He considers this completely risk free. The (post-tax) interest rate equals the inflation rate, so the real value of his savings is guaranteed not to change.
- Riskier possibility: put sum in investment where every month real value goes up 15 percent with probability .53 and down 15 percent with probability .47 (independently of everything else).
- How much does Pedro make in expectation over 10 years with risky approach? 100 years?
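Pedro's question about expectations is a short calculation. The sketch below is an illustration rather than the lecture's own worked answer: it computes the expected growth factor of the risky investment over a given number of months, and also the expected monthly change in the logarithm of wealth, which is the quantity the strong law of large numbers controls along a single path. The constant and function names are hypothetical.

```python
import math

UP, DOWN = 1.15, 0.85        # monthly multipliers from the slide
P_UP, P_DOWN = 0.53, 0.47    # their probabilities

def expected_factor(months):
    """Expected growth factor after `months` independent monthly moves."""
    monthly_mean = P_UP * UP + P_DOWN * DOWN
    return monthly_mean ** months   # independence: expectation of a product factors

def mean_log_growth():
    """Expected monthly change in log wealth."""
    return P_UP * math.log(UP) + P_DOWN * math.log(DOWN)

if __name__ == "__main__":
    print("expected factor, 10 years :", expected_factor(120))
    print("expected factor, 100 years:", expected_factor(1200))
    print("mean monthly log growth   :", mean_log_growth())
```

The expected factor grows with the horizon because the monthly mean multiplier is slightly above 1, while the mean monthly log growth is negative. Applying the strong law to the log increments then suggests that a typical path shrinks over time even though the expectation grows, which is presumably why the story precedes the strong law and Jensen's inequality in the outline.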

Stochastic Models Laws of Large Numbers and Functional Central

Stochastic Models. Publication details, including instructions for authors and subscription information: http://www.informaworld.com/smpp/title~content=t713597301

Laws of Large Numbers and Functional Central Limit Theorems for Generalized Semi-Markov Processes. Peter W. Glynn (a); Peter J. Haas (b). (a) Department of Management Science and Engineering, Stanford University, Stanford, California, USA. (b) IBM Almaden Research Center, San Jose, California, USA.

To cite this article: Glynn, Peter W. and Haas, Peter J. (2006) 'Laws of Large Numbers and Functional Central Limit Theorems for Generalized Semi-Markov Processes', Stochastic Models, 22: 2, 201–231. DOI: 10.1080/15326340600648997. URL: http://dx.doi.org/10.1080/15326340600648997

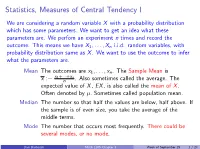

Statistics, Measures of Central Tendency I

Statistics, Measures of Central Tendency I

We are considering a random variable $X$ with a probability distribution which has some parameters. We want to get an idea what these parameters are. We perform an experiment $n$ times and record the outcome. This means we have $X_1, \dots, X_n$ i.i.d. random variables, with the same probability distribution as $X$. We want to use the outcome to infer what the parameters are.

Mean: The outcomes are $x_1, \dots, x_n$. The sample mean is $\bar{x} := \frac{x_1 + \cdots + x_n}{n}$, also sometimes called the average. The expected value of $X$, $EX$, is also called the mean of $X$. It is often denoted by $\mu$ and sometimes called the population mean.

Median: The number so that half the values are below, half above. If the sample is of even size, you take the average of the middle terms.

Mode: The number that occurs most frequently. There could be several modes, or no mode.

Dan Barbasch, Math 1105, Chapter 9, Week of September 25

Statistics, Measures of Central Tendency II

Example: You have a coin for which you know that $P(H) = p$ and $P(T) = 1 - p$. You would like to estimate $p$. You toss it $n$ times. You count the number of heads. The sample mean should be an estimate of $p$: $EX = p$, and $E(X_1 + \cdots + X_n) = np$. So

$$E\left(\frac{X_1 + \cdots + X_n}{n}\right) = p.$$

Descriptive Statistics I

Frequency Distribution: Divide into a number of equal disjoint intervals. For each interval count the number of elements in the sample occurring.
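The coin example can be tried out by simulation. The sketch below is illustrative only: it tosses a simulated coin with a chosen heads probability and returns the sample mean of the heads indicators as the estimate of p. The function name `estimate_p`, the fixed seed, and the assumption that n is at least 1 are choices made for this example.

```python
import random

def estimate_p(p, n, seed=0):
    """Toss a coin with P(H) = p a total of n times; return the fraction of heads."""
    rng = random.Random(seed)                            # fixed seed for repeatable runs
    heads = sum(1 for _ in range(n) if rng.random() < p)  # each toss is an indicator X_i
    return heads / n                                      # sample mean of X_1, ..., X_n

if __name__ == "__main__":
    for n in (10, 1_000, 100_000):
        print(n, estimate_p(0.3, n))
```

As n grows, the printed estimates should settle near the true value of p, in line with the sample mean having expectation p.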

Exploring the Mode – the Most Likely Value? Jerzy Letkowski Western New England University

Exploring the Mode – the Most Likely Value? Jerzy Letkowski, Western New England University.

Abstract: The mean, median and mode are typical summary measures, each representing some focal tendency in random data. Even though the focus of each of the measures is different, they usually are packaged together as measures of central tendency. Among these three measures, the mode is granted the least amount of attention and it is frequently misinterpreted in many introductory textbooks on statistics. Also its centrality is questionable in some situations. The purpose of the paper is to provide a formal definition of the mode and to show appropriate interpretation of this measure with respect to different random data types: qualitative and quantitative (discrete and continuous). Several cases are presented to exemplify this purpose. Detailed data processing steps are shown in Google spreadsheets.

Keywords: mode, statistics, summary measures, probability distribution, data types, spreadsheet.

WHAT IS THE MODE?

In general, in the context of a population, the concept of the mode of a random variable, $X$, having domain $D_X$, is quite simple. Formally speaking (Spiegel, 1975, p. 84, McClave, 2011, p. 58, Sharpie, 2010, p. 115), it is a value, $x^*$, of the variable that uniquely maximizes the probability-distribution function, $f(x)$:

$$x^* : f(x^*) = \max_{x \in D_X} \{ f(x) \} \ \text{or None} \qquad \text{(i)}$$

One could refer to such a definition of the mode as a probability-distribution driven definition. In a way, it is a universal definition. For example, the maximum point for the Normal distribution (density function) is $\mu$.

On the Law of the Iterated Logarithm for L-Statistics Without Variance

Bulletin of the Institute of Mathematics, Academia Sinica (New Series), Vol. 3 (2008), No. 3, pp. 417-432. On the Law of the Iterated Logarithm for L-Statistics Without Variance, by Deli Li, Dong Liu and Andrew Rosalsky.

Abstract: Let $\{X, X_n; n \ge 1\}$ be a sequence of i.i.d. random variables with distribution function $F(x)$. For each positive integer $n$, let $X_{1:n} \le X_{2:n} \le \cdots \le X_{n:n}$ be the order statistics of $X_1, X_2, \dots, X_n$. Let $H(\cdot)$ be a real Borel-measurable function defined on $\mathbb{R}$ such that $E|H(X)| < \infty$ and let $J(\cdot)$ be a Lipschitz function of order one defined on $[0, 1]$. Write

$$\mu = \mu(F, J, H) = E\left(J(U)\, H(F^{\leftarrow}(U))\right) \quad \text{and} \quad L_n(F, J, H) = \frac{1}{n} \sum_{i=1}^{n} J\left(\frac{i}{n}\right) H(X_{i:n}), \quad n \ge 1,$$

where $U$ is a random variable with the uniform $(0, 1)$ distribution and $F^{\leftarrow}(t) = \inf\{x; F(x) \ge t\}$, $0 < t < 1$. In this note, the Chung-Smirnov LIL for empirical processes and the Einmahl-Li LIL for partial sums of i.i.d. random variables without variance are used to establish necessary and sufficient conditions for having with probability 1:

$$0 < \limsup_{n \to \infty} \sqrt{n/\varphi(n)}\, \left| L_n(F, J, H) - \mu \right| < \infty,$$

where $\varphi(\cdot)$ is from a suitable subclass of the positive, nondecreasing, and slowly varying functions defined on $[0, \infty)$. The almost sure value of the limsup is identified under suitable conditions. Specializing our result to $\varphi(x) = 2(\log \log x)^p$, $p > 1$ and to $\varphi(x) = 2(\log x)^r$, $r > 0$, we obtain an analog of the Hartman-Wintner-Strassen LIL for L-statistics in the infinite variance case.