Scalable Tools for Non-Intrusive Performance Debugging of Parallel Linux Workloads

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Kernel Boot-Time Tracing

Kernel Boot-time Tracing Linux Plumbers Conference 2019 - Tracing Track Masami Hiramatsu <[email protected]> Linaro, Ltd. Speaker Masami Hiramatsu - Working for Linaro and Linaro members - Tech Lead for a Landing team - Maintainer of Kprobes and related tracing features/tools Why Kernel Boot-time Tracing? Debug and analyze boot time errors and performance issues - Measure performance statistics of kernel boot - Analyze driver init failure - Debug boot up process - Continuously tracing from boot time etc. What We Have There are already many ftrace options on kernel command line ● Setup options (trace_options=) ● Output to printk (tp_printk) ● Enable events (trace_event=) ● Enable tracers (ftrace=) ● Filtering (ftrace_filter=, ftrace_notrace=, ftrace_graph_filter=, ftrace_graph_notrace=) ● Add kprobe events (kprobe_event=) ● And other options (alloc_snapshot, traceoff_on_warning, ...) See Documentation/admin-guide/kernel-parameters.txt Example of Kernel Cmdline Parameters In grub.conf linux /boot/vmlinuz-5.1 root=UUID=5a026bbb-6a58-4c23-9814-5b1c99b82338 ro quiet splash tp_printk trace_options="sym-addr" trace_clock=global ftrace_dump_on_oops trace_buf_size=1M trace_event="initcall:*,irq:*,exceptions:*" kprobe_event="p:kprobes/myevent foofunction $arg1 $arg2;p:kprobes/myevent2 barfunction %ax" What Issues? Size limitation ● kernel cmdline size is small (< 256bytes) ● A half of the cmdline is used for normal boot Only partial features supported ● ftrace has too complex features for single command line ● per-event filters/actions, instances, histograms. Solutions? 1. Use initramfs - Too late for kernel boot time tracing 2. Expand kernel cmdline - It is not easy to write down complex tracing options on bootloader (Single line options is too simple) 3. Reuse structured boot time data (Devicetree) - Well documented, structured data -> V1 & V2 series based on this. Boot-time Trace: V1 and V2 series V1 and V2 series posted in June.

Hiding Process Memory Via Anti-Forensic Techniques

DIGITAL FORENSIC RESEARCH CONFERENCE Hiding Process Memory via Anti-Forensic Techniques By: Frank Block (Friedrich-Alexander Universität Erlangen-Nürnberg (FAU) and ERNW Research GmbH) and Ralph Palutke (Friedrich-Alexander Universität Erlangen-Nürnberg) From the proceedings of The Digital Forensic Research Conference DFRWS USA 2020 July 20 - 24, 2020 DFRWS is dedicated to the sharing of knowledge and ideas about digital forensics research. Ever since it organized the first open workshop devoted to digital forensics in 2001, DFRWS continues to bring academics and practitioners together in an informal environment. As a non-profit, volunteer organization, DFRWS sponsors technical working groups, annual conferences and challenges to help drive the direction of research and development. https://dfrws.org Forensic Science International: Digital Investigation 33 (2020) 301012 Contents lists available at ScienceDirect Forensic Science International: Digital Investigation journal homepage: www.elsevier.com/locate/fsidi DFRWS 2020 USA - Proceedings of the Twentieth Annual DFRWS USA Hiding Process Memory Via Anti-Forensic Techniques Ralph Palutke a, **, 1, Frank Block a, b, *, 1, Patrick Reichenberger a, Dominik Stripeika a a Friedrich-Alexander Universität Erlangen-Nürnberg (FAU), Germany b ERNW Research GmbH, Heidelberg, Germany Abstract: Nowadays, security practitioners typically use memory acquisition or live forensics to detect and analyze sophisticated malware samples. Subsequently, malware authors began to incorporate anti-forensic techniques that subvert the analysis process by hiding malicious memory areas. Those techniques typically modify characteristics, such as access permissions, or place malicious data near legitimate one, in order to prevent the memory from being identified by analysis tools while still remaining accessible. Keywords: Memory subversion

Improving the Performance of Hybrid Main Memory Through System Aware Management of Heterogeneous Resources

IMPROVING THE PERFORMANCE OF HYBRID MAIN MEMORY THROUGH SYSTEM AWARE MANAGEMENT OF HETEROGENEOUS RESOURCES by Juyoung Jung B.S. in Information Engineering, Korea University, 2000 Master in Computer Science, University of Pittsburgh, 2013 Submitted to the Graduate Faculty of the Kenneth P. Dietrich School of Arts and Sciences in partial fulfillment of the requirements for the degree of Doctor of Philosophy in Computer Science University of Pittsburgh 2016 UNIVERSITY OF PITTSBURGH KENNETH P. DIETRICH SCHOOL OF ARTS AND SCIENCES This dissertation was presented by Juyoung Jung It was defended on December 7, 2016 and approved by Rami Melhem, Ph.D., Professor at Department of Computer Science Bruce Childers, Ph.D., Professor at Department of Computer Science Daniel Mosse, Ph.D., Professor at Department of Computer Science Jun Yang, Ph.D., Associate Professor at Electrical and Computer Engineering Dissertation Director: Rami Melhem, Ph.D., Professor at Department of Computer Science ii IMPROVING THE PERFORMANCE OF HYBRID MAIN MEMORY THROUGH SYSTEM AWARE MANAGEMENT OF HETEROGENEOUS RESOURCES Juyoung Jung, PhD University of Pittsburgh, 2016 Modern computer systems feature memory hierarchies which typically include DRAM as the main memory and HDD as the secondary storage. DRAM and HDD have been extensively used for the past several decades because of their high performance and low cost per bit at their level of hierarchy. Unfortunately, DRAM is facing serious scaling and power consumption problems, while HDD has suffered from stagnant performance improvement and poor energy efficiency. After all, computer system architects have an implicit consensus that there is no hope to improve future system’s performance and power consumption unless something fundamentally changes. -

Review Der Linux Kernel Sourcen Von 4.9 Auf 4.10

Review der Linux Kernel Sourcen von 4.9 auf 4.10 Reviewed by: Tested by: stecan stecan Period of Review: Period of Test: From: Thursday, 11 January 2018 07:26:18 o'clock +01: From: Thursday, 11 January 2018 07:26:18 o'clock +01: To: Thursday, 11 January 2018 07:44:27 o'clock +01: To: Thursday, 11 January 2018 07:44:27 o'clock +01: Report automatically generated with: LxrDifferenceTable, V0.9.2.548 Provided by: Certified by: Approved by: Account: stecan Name / Department: Date: Friday, 4 May 2018 13:43:07 o'clock CEST Signature: Review_4.10_0_to_1000.pdf Page 1 of 793 May 04, 2018 Review der Linux Kernel Sourcen von 4.9 auf 4.10 Line Link NR. Descriptions 1 .mailmap#0140 Repo: 9ebf73b275f0 Stephen Tue Jan 10 16:57:57 2017 -0800 Description: mailmap: add codeaurora.org names for nameless email commits ----------- Some codeaurora.org emails have crept in but the names don't exist for them. Add the names for the emails so git can match everyone up. Link: http://lkml.kernel.org/r/[email protected] 2 .mailmap#0154 3 .mailmap#0160 4 CREDITS#2481 Repo: 0c59d28121b9 Arnaldo Mon Feb 13 14:15:44 2017 -0300 Description: MAINTAINERS: Remove old e-mail address ----------- The ghostprotocols.net domain is not working, remove it from CREDITS and MAINTAINERS, and change the status to "Odd fixes", and since I haven't been maintaining those, remove my address from there. CREDITS: Remove outdated address information ----------- This address hasn't been accurate for several years now. -

Thread Scheduling in Multi-Core Operating Systems Redha Gouicem

Thread Scheduling in Multi-core Operating Systems Redha Gouicem To cite this version: Redha Gouicem. Thread Scheduling in Multi-core Operating Systems. Computer Science [cs]. Sorbonne Université, 2020. English. tel-02977242 HAL Id: tel-02977242 https://hal.archives-ouvertes.fr/tel-02977242 Submitted on 24 Oct 2020 HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers. Ph.D thesis in Computer Science Thread Scheduling in Multi-core Operating Systems How to Understand, Improve and Fix your Scheduler Redha GOUICEM Sorbonne Université Laboratoire d’Informatique de Paris 6 Inria Whisper Team PH.D. DEFENSE: 23 October 2020, Paris, France JURY MEMBERS: Mr. Pascal Felber, Full Professor, Université de Neuchâtel Reviewer Mr. Vivien Quéma, Full Professor, Grenoble INP (ENSIMAG) Reviewer Mr. Rachid Guerraoui, Full Professor, École Polytechnique Fédérale de Lausanne Examiner Ms. Karine Heydemann, Associate Professor, Sorbonne Université Examiner Mr. Etienne Rivière, Full Professor, University of Louvain Examiner Mr. Gilles Muller, Senior Research Scientist, Inria Advisor Mr. Julien Sopena, Associate Professor, Sorbonne Université Advisor ABSTRACT In this thesis, we address the problem of schedulers for multi-core architectures from several perspectives: design (simplicity and correctness), performance improvement and the development of application-specific schedulers.

Embedded Linux Conference Europe 2019

Embedded Linux Conference Europe 2019 Linux kernel debugging: going beyond printk messages Embedded Labworks By Sergio Prado. São Paulo, October 2019 ® Copyright Embedded Labworks 2004-2019. All rights reserved. ABOUT THIS DOCUMENT ✗ This document is available under Creative Commons BY-SA 4.0. https://creativecommons.org/licenses/by-sa/4.0/ ✗ The source code of this document is available at: https://e-labworks.com/talks/elce2019 $ WHOAMI ✗ Embedded software developer for more than 20 years. ✗ Principal Engineer of Embedded Labworks, a company specialized in the development of software projects and BSPs for embedded systems. https://e-labworks.com/en/ ✗ Active in the embedded systems community in Brazil, creator of the website Embarcados and blogger (Portuguese language). https://sergioprado.org ✗ Contributor of several open source projects, including Buildroot, Yocto Project and the Linux kernel. THIS TALK IS NOT ABOUT... ✗ printk and all related functions and features (pr_ and dev_ family of functions, dynamic debug, etc). ✗ Static analysis tools and fuzzing (sparse, smatch, coccinelle, coverity, trinity, syzkaller, syzbot, etc). ✗ User space debugging. ✗ This is also not a tutorial! We will talk about a lot of tools and techniques and have fun with some demos! DEBUGGING STEP-BY-STEP 1. Understand the problem. 2. Reproduce the problem. 3. Identify the source of the problem. 4. Fix the problem. 5. Fixed? If so, celebrate! If not, go back to step 1. TYPES OF PROBLEMS ✗ We can consider as the top 5 types of problems in software: ✗ Crash. ✗ Lockup. ✗ Logic/implementation error. ✗ Resource leak. ✗ Performance.

Walking the Linux Kernel

Walking the Linux Kernel Stanislav Kozina Associate Manager April 2016 AGENDA Linux Kernel in general Debugging kernel issues without crash Prepare the system for crashing Crash it with systemtap See what we can get from the crash dump Linux Kernel in general – boring ● Just software… ● Written in C & asm ● List of expected features ● Boot and initialization process ● Memory and process management ● Hardware abstraction ● Files, directories, sockets, … ● Resources abstraction ● CPU, memory, … ● POSIX Linux Kernel in general – BUT! ● Quite big (20mil LOC) ● No libc (many other standards functions instead) ● Special environment ● Preemptive, shared memory space ● Early boot code is tricky ● No dynamic allocator, AP, scheduler, even locks! ● Special security requirements ● Kernel should not just die and/or leak anything Linux Kernel in general – development system ● Open source ● List of maintainers in MAINTAINERS ● Patches posted via email ● Documentation/SubmittingPatches ● LKML ● Usually companies care about support of their stuff ● Hardware vendors… ● “We don't break userland” DIGGING IN Observing kernel is not trivial ● Hard to get a consistent picture ● If we stop it, how do we observe it? ● Using VMs? ● printk() ● Statistics, /proc, perf, strace, ftrace, ... Debugging kernel issues ● Oops messages ● Message buffer, registers, stack/backtrace ● Oops leaves the system running, but unstable! ● Current task is killed ● printk() ● Systemtap, ftrace ● crash ● Sysrq triggers Kernel oops [ 32.580355] SysRq : Trigger a crash [ 32.581331] BUG: unable to handle kernel NULL pointer dereference at [ 32.582703] IP: [<ffffffff813b9716>] sysrq_handle_crash+0x16/0x20 [ 32.583781] PGD 3b503067 PUD 3b502067 PMD 0 [ 32.584609] Oops: 0002 [#1] SMP [ 32.585210] Modules linked in: ip6t_rpfilter (...

Red Hat Enterprise Linux for Real Time 7 Tuning Guide

Red Hat Enterprise Linux for Real Time 7 Tuning Guide Advanced tuning procedures for Red Hat Enterprise Linux for Real Time Radek Bíba David Ryan Cheryn Tan Lana Brindley Alison Young Red Hat Enterprise Linux for Real Time 7 Tuning Guide Advanced tuning procedures for Red Hat Enterprise Linux for Real Time Radek Bíba Red Hat Customer Content Services [email protected] David Ryan Red Hat Customer Content Services [email protected] Cheryn Tan Red Hat Customer Content Services Lana Brindley Red Hat Customer Content Services Alison Young Red Hat Customer Content Services Legal Notice Copyright © 2015 Red Hat, Inc. This document is licensed by Red Hat under the Creative Commons Attribution-ShareAlike 3.0 Unported License. If you distribute this document, or a modified version of it, you must provide attribution to Red Hat, Inc. and provide a link to the original. If the document is modified, all Red Hat trademarks must be removed. Red Hat, as the licensor of this document, waives the right to enforce, and agrees not to assert, Section 4d of CC-BY-SA to the fullest extent permitted by applicable law. Red Hat, Red Hat Enterprise Linux, the Shadowman logo, JBoss, MetaMatrix, Fedora, the Infinity Logo, and RHCE are trademarks of Red Hat, Inc., registered in the United States and other countries. Linux ® is the registered trademark of Linus Torvalds in the United States and other countries. Java ® is a registered trademark of Oracle and/or its affiliates. XFS ® is a trademark of Silicon Graphics International Corp. or its subsidiaries in the United States and/or other countries. -

Red Hat Enterprise Linux 7 Kernel Administration Guide

Red Hat Enterprise Linux 7 Kernel Administration Guide Examples of Tasks for Managing the Kernel Last Updated: 2018-05-21 Red Hat Enterprise Linux 7 Kernel Administration Guide Examples of Tasks for Managing the Kernel Marie Dolezelova Red Hat Customer Content Services [email protected] Mark Flitter Red Hat Customer Content Services Douglas Silas Red Hat Customer Content Services Eliska Slobodova Red Hat Customer Content Services Jaromir Hradilek Red Hat Customer Content Services Maxim Svistunov Red Hat Customer Content Services Robert Krátký Red Hat Customer Content Services Stephen Wadeley Red Hat Customer Content Services Florian Nadge Red Hat Customer Content Services Legal Notice Copyright © 2018 Red Hat, Inc. The text of and illustrations in this document are licensed by Red Hat under a Creative Commons Attribution–Share Alike 3.0 Unported license ("CC-BY-SA"). An explanation of CC-BY-SA is available at http://creativecommons.org/licenses/by-sa/3.0/ . In accordance with CC-BY-SA, if you distribute this document or an adaptation of it, you must provide the URL for the original version. Red Hat, as the licensor of this document, waives the right to enforce, and agrees not to assert, Section 4d of CC-BY-SA to the fullest extent permitted by applicable law. Red Hat, Red Hat Enterprise Linux, the Shadowman logo, JBoss, OpenShift, Fedora, the Infinity logo, and RHCE are trademarks of Red Hat, Inc., registered in the United States and other countries. Linux ® is the registered trademark of Linus Torvalds in the United States and other countries. Java ® is a registered trademark of Oracle and/or its affiliates. -

Linux Kernel User Documentation V4.20.0

Linux Kernel User Documentation v4.20.0 The kernel development community 1 16, 2019 Contents: 1 Linux kernel release 4.x <http://kernel.org/>; 2 The kernel’s command-line parameters; 3 Linux allocated devices (4.x+ version); 4 L1TF - L1 Terminal Fault; 5 Reporting bugs; 6 Security bugs; 7 Bug hunting; 8 Bisecting a bug; 9 Tainted kernels; 10 Ramoops oops/panic logger; 11 Dynamic debug; 12 Explaining the dreaded “No init found.” boot hang message; 13 Rules on how to access information in sysfs; 14 Using the initial RAM disk (initrd); 15 Control Group v2; 16 Linux Serial Console; 17 Linux Braille Console; 18 Parport; 19 RAID arrays; 20 Kernel module signing facility; 21 Linux Magic System Request Key Hacks; 22 Unicode support; 23 Software cursor for VGA; 24 Kernel Support for miscellaneous (your favourite) Binary Formats v1.1; 25 Mono(tm) Binary Kernel Support for Linux; 26 Java(tm) Binary Kernel Support for Linux v1.03; 27 Reliability, Availability and Serviceability; 28 A block layer cache (bcache); 29 ext4 General Information; 30 Power Management; 31 Thunderbolt; 32 Linux Security Module Usage; 33 Memory Management. Linux Kernel User Documentation, v4.20.0 The following is a collection of user-oriented documents that have been added to the kernel over time. There is, as yet, little overall order or organization here - this material was not written to be a single, coherent document! With luck things will improve quickly over time.

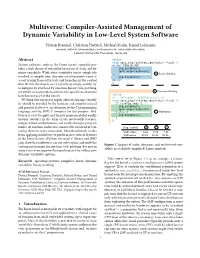

Multiverse: Compiler-Assisted Management of Dynamic Variability in Low-Level System Software

Multiverse: Compiler-Assisted Management of Dynamic Variability in Low-Level System Software Florian Rommel, Christian Dietrich, Michael Rodin, Daniel Lohmann {rommel, dietrich, lohmann}@sra.uni-hannover.de, [email protected] Leibniz Universität Hannover, Germany Abstract System software, such as the Linux kernel, typically provides a high degree of versatility by means of static and dynamic variability. While static variability can be completely resolved at compile time, dynamic variation points come at a cost arising from extra tests and branches in the control flow. Kernel developers use it (a) only sparingly and (b) try to mitigate its overhead by run-time binary code patching, for which several problem/architecture-specific mechanisms have become part of the kernel. We think that means for highly efficient dynamic variability should be provided by the language and compiler instead and present multiverse, an extension to the C programming language and the GNU C compiler for this purpose. Multiverse is easy to apply and targets program-global configuration switches in the form of (de-)activatable features, integer-valued configurations, and rarely-changing program modes. At run time, multiverse removes the overhead of evaluating them on every invocation. Microbenchmark results from applying multiverse to performance-critical features of the Linux kernel, cPython, the musl C-library and GNU grep show that multiverse can not only replace and unify the
Figure 1. [caption truncated] Three variants of inline void spin_irq_lock(raw_spinlock_t *lock): (A) Static Binding, selecting between irq_disable(); spin_acquire(&lock); and irq_disable(); with #ifdef CONFIG_SMP; (B) Dynamic Binding, selecting between the same two bodies with a run-time if (config_smp) test; (C) Multiverse, the function of (B) marked {+ __attribute__((multiverse)) +}. [avg. cycles] SMP=false: A 6.64, B 9.75, C 7.48; SMP=true: A 28.82, B 28.91, C 28.86.

Speed up Your Kernel Development Cycle with QEMU

Speed up your kernel development cycle with QEMU Stefan Hajnoczi <[email protected]> Kernel Recipes 2015 KERNEL RECIPES 2015 | STEFAN HAJNOCZI Agenda ● Kernel development cycle ● Introduction to QEMU ● Basics ● Testing kernels inside virtual machines ● Debugging virtual machines ● Advanced topics ● Cross-architecture testing ● Device bring-up ● Error injection About me QEMU contributor since 2010 ● Subsystem maintainer ● Google Summer of Code & Outreachy mentor/admin ● http://qemu-advent-calendar.org/ Occasional kernel patch contributor ● vsock, tcm_vhost, virtio_scsi, line6 staging driver Work in Red Hat's Virtualization team Kernel development cycle [diagram: Write code, Build kernel/modules, Deploy, Test; label: This presentation] If you are doing kernel development... [diagram: Device drivers (USB, PCI, etc); Tracing (ftrace, ebpf); Storage (LIO SCSI target, File systems, device-mapper targets); Networking (Network protocols, Netfilter, OpenVSwitch); Resource management & security (Cgroups, Linux Security Modules, Namespaces)] ...you might have these challenges In situ debugging mechanisms like kgdb or kdump ● Not 100% reliable since they share the environment ● Crashes interrupt your browser/text editor session [diagram: Development machine running Web browser, Text editor and test.ko; CRASH!; R.I.P. All My Work Gone 2015/09/01] Dedicated test machines Ex situ debugging requires an additional machine ● More cumbersome to deploy code and run tests ● May require special hardware (JTAG, etc) ● Less mobile, hard to travel with multiple machines [diagram: Dev Machine and Test Machine; PXE boot kernel/initramfs; Run tests; Debug or collect crash dump] Virtual machines: best of both worlds! ● Easy to start/stop ● Full access to memory & CPU state ● Cross-architecture support using emulation ● Programmable hardware (e.g.