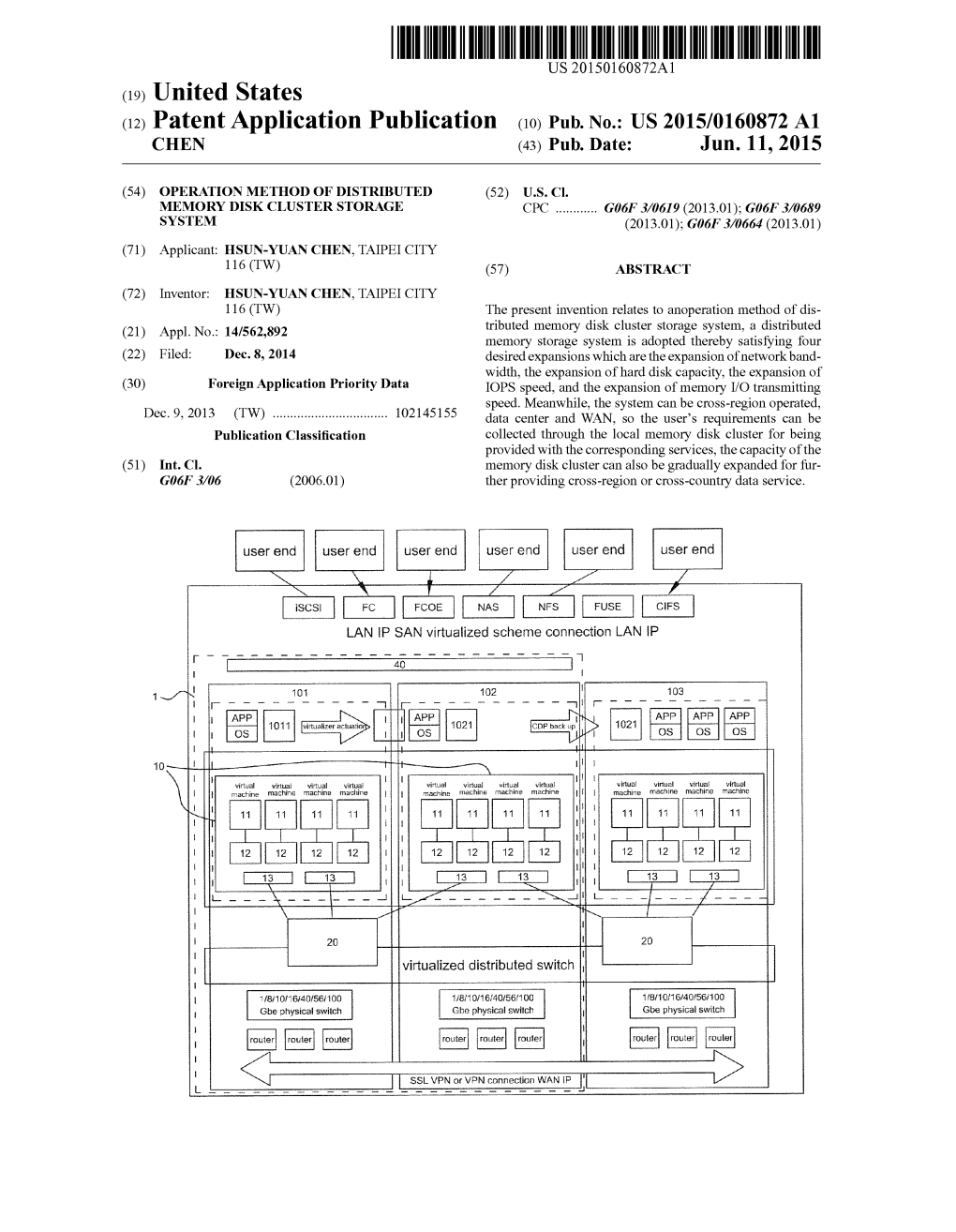

US 2015/0160872 A1 CHEN (43) Pub

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

GNU Linux Systems Administration Module 4 Administration

Local administration Josep Jorba Esteve PID_00238577 GNUFDL • PID_00238577 Local administration Permission is granted to copy, distribute and modify this document under the terms of the GNU Free Documentation License, Version 1.3 or any later version published by the Free Software Foundation, with no invariant sections and no front-cover or back-cover texts. The terms of the license are available at http://www.gnu.org/licenses/fdl-1.3.html. Contents: Introduction; 1. Basic tools for the administrator; 1.1. Graphical tools and command lines; 1.2. Standards documents; 1.3. Online system documentation; 1.4. Package management tools; 1.4.1. TGZ packages; 1.4.2. Fedora/Red Hat: RPM packages; 1.4.3. Debian: DEB packages; 1.4.4. New packaging formats: Snap and Flatpak; 1.5. Generic administration tools; 1.6. Other tools -

Storage Administration Guide SUSE Linux Enterprise Server 12 SP4

Storage Administration Guide SUSE Linux Enterprise Server 12 SP4 Provides information about how to manage storage devices on a SUSE Linux Enterprise Server. Publication Date: September 24, 2021 SUSE LLC 1800 South Novell Place Provo, UT 84606 USA https://documentation.suse.com Copyright © 2006–2021 SUSE LLC and contributors. All rights reserved. Permission is granted to copy, distribute and/or modify this document under the terms of the GNU Free Documentation License, Version 1.2 or (at your option) version 1.3; with the Invariant Section being this copyright notice and license. A copy of the license version 1.2 is included in the section entitled “GNU Free Documentation License”. For SUSE trademarks, see https://www.suse.com/company/legal/ . All other third-party trademarks are the property of their respective owners. Trademark symbols (®, ™ etc.) denote trademarks of SUSE and its affiliates. Asterisks (*) denote third-party trademarks. All information found in this book has been compiled with utmost attention to detail. However, this does not guarantee complete accuracy. Neither SUSE LLC, its affiliates, the authors nor the translators shall be held liable for possible errors or the consequences thereof. Contents: About This Guide (1 Available Documentation; 2 Giving Feedback; 3 Documentation Conventions; 4 Product Life Cycle and Support: Support Statement for SUSE Linux Enterprise Server, Technology Previews); I FILE SYSTEMS AND MOUNTING; 1 Overview -

Early Experiences with Storage Area Networks and CXFS John Lynch

Early Experiences with Storage Area Networks and CXFS John Lynch Aerojet 6304 Spine Road Boulder CO 80516 Abstract This paper looks at the design, integration and application issues involved in deploying an early access, very large, and highly available storage area network. Covered are topics from filesystem failover, issues regarding numbers of nodes in a cluster, and using leading edge solutions to solve complex issues in a real-time data processing network. 1 Introduction Aerojet designed and installed a highly available, large scale Storage Area Network over spring of 2000. This system, due to its size and diversity, is known to be one of a kind and is currently not offered by SGI, but would serve as a prototype system. The project’s goal was to evaluate Fibre Channel and SAN technology for its benefits and applicability in a second-generation, real-time data processing network. SAN technology seemed to be the technology of the future to replace the traditional SCSI solution. The approach was to conduct an evaluation of SAN technology as a [...] SAN technology can be categorized in two distinct approaches. Both approaches use the storage area network to provide access to multiple storage devices at the same time by one or multiple hosts. The difference is how the storage devices are accessed. The most common approach allows the hosts to access the storage devices across the storage area network but filesystems are not shared. This allows either a single host to stripe data across a greater number of storage controllers, or to share storage controllers among several systems. This essentially breaks up a large storage system into smaller distinct pieces, but allows for the cost-sharing of the most expensive component, the storage controller. -

Study of File System Evolution

Study of File System Evolution Swaminathan Sundararaman, Sriram Subramanian Department of Computer Science University of Wisconsin {swami, srirams} @cs.wisc.edu Abstract File systems have traditionally been a major area of research and development. This is evident from the existence of over 50 file systems of varying popularity in the current version of the Linux kernel. They represent a complex subsystem of the kernel, with each file system employing different strategies for tackling various issues. Although there are many file systems in Linux, there has been no prior work (to the best of our knowledge) on understanding how file systems evolve. We believe that such information would be useful to the file system community, allowing developers to learn from previous experiences. This paper looks at six file systems (Ext2, Ext3, Ext4, JFS, ReiserFS, and XFS) from a historical perspective (between kernel versions 1.0 to 2.6) to get an insight on [...] file systems are typically developed and maintained by several programmers across the globe. At any point in time, for a file system, there are three to six active developers, ten to fifteen patch contributors but a single maintainer. These people communicate through individual file system mailing lists [14, 16, 18], submitting proposals for new features and enhancements, reporting bugs, and submitting and reviewing patches for known bugs. The problem with the open source development approach is that all communication is buried in the mailing list archives and isn’t easily accessible to others. As a result, when new file systems are developed they do not leverage past experience and could end up re-inventing the wheel. To make things worse, people could typically end up making the same mistakes as made in other file systems. -

CXFS™ Client-Only Guide for SGI® InfiniteStorage

CXFS™ Client-Only Guide for SGI® InfiniteStorage 007–4507–016 COPYRIGHT © 2002–2008 SGI. All rights reserved; provided portions may be copyright in third parties, as indicated elsewhere herein. No permission is granted to copy, distribute, or create derivative works from the contents of this electronic documentation in any manner, in whole or in part, without the prior written permission of SGI. LIMITED RIGHTS LEGEND The software described in this document is "commercial computer software" provided with restricted rights (except as to included open/free source) as specified in the FAR 52.227-19 and/or the DFAR 227.7202, or successive sections. Use beyond license provisions is a violation of worldwide intellectual property laws, treaties and conventions. This document is provided with limited rights as defined in 52.227-14. TRADEMARKS AND ATTRIBUTIONS SGI, Altix, the SGI cube and the SGI logo are registered trademarks and CXFS, FailSafe, IRIS FailSafe, SGI ProPack, and Trusted IRIX are trademarks of SGI in the United States and/or other countries worldwide. Active Directory, Microsoft, Windows, and Windows NT are registered trademarks or trademarks of Microsoft Corporation in the United States and/or other countries. AIX and IBM are registered trademarks of IBM Corporation. Brocade and Silkworm are trademarks of Brocade Communication Systems, Inc. AMD, AMD Athlon, AMD Duron, and AMD Opteron are trademarks of Advanced Micro Devices, Inc. Apple, Mac, Mac OS, Power Mac, and Xserve are registered trademarks of Apple Computer, Inc. Disk Manager is a registered trademark of ONTRACK Data International, Inc. Engenio, LSI Logic, and SANshare are trademarks or registered trademarks of LSI Corporation. -

ECE 598 – Advanced Operating Systems Lecture 19

ECE 598 – Advanced Operating Systems Lecture 19 Vince Weaver http://web.eece.maine.edu/~vweaver [email protected] 7 April 2016 Announcements • Homework #7 was due • Homework #8 will be posted Why use FAT over ext2? • FAT simpler, easy to code • FAT supported on all major OSes • ext2 faster, more robust filename and permissions btrfs • B-tree fs (similar to a binary tree, but with pages full of leaves) • overwrite filesystem (overwrite on modify) vs CoW • Copy on write. When writing to a file, old data is not overwritten. Since old data is not overwritten, crash recovery is better. Eventually old data is garbage collected • Data in extents • Copy-on-write • Forest of trees: – sub-volumes – extent-allocation – checksum tree – chunk device – reloc • On-line defragmentation • On-line volume growth • Built-in RAID • Transparent compression • Snapshots • Checksums on data and meta-data • De-duplication • Cloning – can make an exact snapshot of a file, copy-on-write; different than a link, different inodes but same blocks Embedded • Designed to be small, simple, read-only? • romfs – 32 byte header (magic, size, checksum, name) – Repeating files (pointer to next [0 if none]), info, size, checksum, file name, file data • cramfs ZFS Advanced OS from Sun/Oracle. Similar in idea to btrfs; indirect still, not extent based? ReFS Resilient FS, Microsoft's answer to btrfs and zfs Networked File Systems • Allow a centralized file server to export a filesystem to multiple clients. • Provide file level access, not just raw blocks (NBD) • Clustered filesystems also exist, where multiple servers work in conjunction. -
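The copy-on-write and cloning bullets in the excerpt above can be illustrated with a toy model. This is only a sketch under stated assumptions: `BlockStore` and `CowFile` are hypothetical names invented for illustration, blocks are kept in an in-memory dictionary, and nothing here reflects how btrfs actually lays out data on disk.

```python
import itertools


class BlockStore:
    """Hypothetical shared pool of data blocks, keyed by block id."""

    def __init__(self):
        self.blocks = {}
        self._ids = itertools.count()  # ids are never reused

    def alloc(self, data: bytes) -> int:
        block_id = next(self._ids)
        self.blocks[block_id] = data
        return block_id

    def collect_garbage(self, files):
        # Drop every block that no live file references any more.
        live = {i for f in files for i in f.block_ids}
        for block_id in list(self.blocks):
            if block_id not in live:
                del self.blocks[block_id]


class CowFile:
    """Hypothetical file: an ordered list of block ids in a BlockStore."""

    def __init__(self, store, block_ids=None):
        self.store = store
        self.block_ids = list(block_ids or [])

    def append(self, data: bytes) -> None:
        self.block_ids.append(self.store.alloc(data))

    def write(self, index: int, data: bytes) -> None:
        # Copy-on-write: the old block is left untouched; the file is
        # simply pointed at a freshly allocated block.
        self.block_ids[index] = self.store.alloc(data)

    def clone(self) -> "CowFile":
        # A clone is a separate file object (its own "inode") that
        # initially shares all of its blocks with the original.
        return CowFile(self.store, self.block_ids)

    def read(self) -> bytes:
        return b"".join(self.store.blocks[i] for i in self.block_ids)
```

In this model, cloning a file and then writing to the original leaves the clone's contents unchanged, and the replaced block is reclaimed only once no file refers to it, which mirrors the "eventually old data is garbage collected" bullet.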

USB Composite Gadget Using CONFIG-FS on DRA7xx Devices

Application Report SPRACB5–September 2017 USB Composite Gadget Using CONFIG-FS on DRA7xx Devices RaviB ABSTRACT This application note explains how to create a USB composite gadget, network control model (NCM) and abstract control model (ACM) from the user space using Linux® CONFIG-FS on the DRA7xx platform. Contents: 1 Introduction; 2 USB Composite Gadget Using CONFIG-FS; 3 Creating Composite Gadget From User Space; 4 References. List of Figures: 1 Block Diagram of USB Composite Gadget; 2 Selection of CONFIGFS Through menuconfig; 3 Select USB Configuration Through menuconfig; 4 Composite Gadget Configuration Items as Files and Directories; 5 VID, PID, and Manufacturer String Configuration; 6 Kernel Logs Show Enumeration of USB Composite Gadget by Host; 7 Ping -

Load Management and Demand Response in Small and Medium Data Centers

Thiago Lara Vasques Load Management and Demand Response in Small and Medium Data Centers PhD Thesis in Sustainable Energy Systems, supervised by Professor Pedro Manuel Soares Moura, submitted to the Department of Mechanical Engineering, Faculty of Sciences and Technology of the University of Coimbra May 2018 Load Management and Demand Response in Small and Medium Data Centers by Thiago Lara Vasques PhD Thesis in Sustainable Energy Systems in the framework of the Energy for Sustainability Initiative of the University of Coimbra and MIT Portugal Program, submitted to the Department of Mechanical Engineering, Faculty of Sciences and Technology of the University of Coimbra Thesis Supervisor Professor Pedro Manuel Soares Moura Department of Electrical and Computers Engineering, University of Coimbra May 2018 This thesis has been developed under the Energy for Sustainability Initiative of the University of Coimbra and been supported by CAPES (Coordenação de Aperfeiçoamento de Pessoal de Nível Superior – Brazil). ACKNOWLEDGEMENTS First and foremost, I would like to thank God for coming to the conclusion of this work with health, courage, perseverance and above all, with a miraculous amount of love that surrounds and graces me. The work of this thesis has also the direct and indirect contribution of many people, who I feel honored to thank. I would like to express my gratitude to my supervisor, Professor Pedro Manuel Soares Moura, for his generosity in opening the doors of the University of Coimbra by giving me the possibility of his orientation when I was a stranger. Subsequently, by his teachings, guidance and support in difficult times. You, Professor, inspire me with your humbleness given the knowledge you possess. -

Silicon Graphics, Inc. Scalable Filesystems XFS & CXFS

Silicon Graphics, Inc. Scalable Filesystems XFS & CXFS Presented by: Yingping Lu January 31, 2007 Outline • XFS Overview • XFS Architecture • XFS Fundamental Data Structure – Extent list – B+Tree – Inode • XFS Filesystem On-Disk Layout • XFS Directory Structure • CXFS: shared file system XFS: A World-Class File System – Scalable • Full 64 bit support • Dynamic allocation of metadata space • Scalable structures and algorithms – Fast • Fast metadata speeds • High bandwidths • High transaction rates – Reliable • Field proven • Log/Journal Scalable – Full 64 bit support • Large Filesystem – 18,446,744,073,709,551,615 = 2^64 − 1 = 18 million TB (exabytes) • Large Files – 9,223,372,036,854,775,807 = 2^63 − 1 = 9 million TB (exabytes) – Dynamic allocation of metadata space • Inode size configurable, inode space allocated dynamically • Unlimited number of files (constrained by storage space) – Scalable structures and algorithms (B-Trees) • Performance is not an issue with large numbers of files and directories Fast – Fast metadata speeds • B-Trees everywhere (Nearly all lists of metadata information) – Directory contents – Metadata free lists – Extent lists within file – High bandwidths (Storage: RM6700) • 7.32 GB/s on one filesystem (32p Origin2000, 897 FC disks) • >4 GB/s in one file (same Origin, 704 FC disks) • Large extents (4 KB to 4 GB) • Request parallelism (multiple AGs) • Delayed allocation, Read ahead/Write behind – High transaction rates: 92,423 IOPS (Storage: TP9700) -
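The 64-bit limits quoted in the XFS slides above (2^64 − 1 and 2^63 − 1) can be checked with a few lines of arithmetic. This sketch assumes the limits are byte counts and that the slide's "TB" is the decimal terabyte (10^12 bytes); the helper name `millions_of_tb` is made up for illustration.

```python
# Limits as stated on the slide, assumed here to be byte counts.
MAX_FS_BYTES = 2**64 - 1    # largest filesystem size
MAX_FILE_BYTES = 2**63 - 1  # largest file size

TB = 10**12   # decimal terabyte (assumption about the slide's units)
EIB = 2**60   # binary exbibyte, shown for comparison


def millions_of_tb(n_bytes: int) -> float:
    """Express a byte count in millions of decimal terabytes."""
    return n_bytes / TB / 1_000_000


print(f"filesystem: {MAX_FS_BYTES:,} bytes")
print(f"  = {millions_of_tb(MAX_FS_BYTES):.2f} million TB")
print(f"  = {(MAX_FS_BYTES + 1) // EIB} EiB")
print(f"file:       {MAX_FILE_BYTES:,} bytes")
print(f"  = {millions_of_tb(MAX_FILE_BYTES):.2f} million TB")
print(f"  = {(MAX_FILE_BYTES + 1) // EIB} EiB")
```

Under these assumptions the slide's rounded figures of 18 and 9 million TB correspond to about 18.45 and 9.22 million decimal terabytes, or 16 EiB and 8 EiB in binary units.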

Hardware-Driven Evolution in Storage Software by Zev Weiss A

Hardware-Driven Evolution in Storage Software by Zev Weiss A dissertation submitted in partial fulfillment of the requirements for the degree of Doctor of Philosophy (Computer Sciences) at the UNIVERSITY OF WISCONSIN–MADISON 2018 Date of final oral examination: June 8, 2018 The dissertation is approved by the following members of the Final Oral Committee: Andrea C. Arpaci-Dusseau, Professor, Computer Sciences Remzi H. Arpaci-Dusseau, Professor, Computer Sciences Michael M. Swift, Professor, Computer Sciences Karthikeyan Sankaralingam, Professor, Computer Sciences Johannes Wallmann, Associate Professor, Mead Witter School of Music © Copyright by Zev Weiss 2018 All Rights Reserved To my parents, for their endless support, and my cousin Charlie, one of the kindest people I’ve ever known. Acknowledgments I have taken what might be politely called a “scenic route” of sorts through grad school. While Ph.D. students more focused on a rapid graduation turnaround time might find this regrettable, I am glad to have done so, in part because it has afforded me the opportunities to meet and work with so many excellent people along the way. I owe debts of gratitude to a large cast of characters: To my advisors, Andrea and Remzi Arpaci-Dusseau. It is one of the most common pieces of wisdom imparted on incoming grad students that one’s relationship with one’s advisor (or advisors) is perhaps the single most important factor in whether these years of your life will be pleasant or unpleasant, and I feel exceptionally fortunate to have ended up with the advisors that I’ve had. -

CXFS™ Administration Guide for SGI® InfiniteStorage

CXFS™ Administration Guide for SGI® InfiniteStorage 007–4016–025 CONTRIBUTORS Written by Lori Johnson Illustrated by Chrystie Danzer Engineering contributions to the book by Vladmir Apostolov, Rich Altmaier, Neil Bannister, François Barbou des Places, Ken Beck, Felix Blyakher, Laurie Costello, Mark Cruciani, Rupak Das, Alex Elder, Dave Ellis, Brian Gaffey, Philippe Gregoire, Gary Hagensen, Ryan Hankins, George Hyman, Dean Jansa, Erik Jacobson, John Keller, Dennis Kender, Bob Kierski, Chris Kirby, Ted Kline, Dan Knappe, Kent Koeninger, Linda Lait, Bob LaPreze, Jinglei Li, Yingping Lu, Steve Lord, Aaron Mantel, Troy McCorkell, LaNet Merrill, Terry Merth, Jim Nead, Nate Pearlstein, Bryce Petty, Dave Pulido, Alain Renaud, John Relph, Elaine Robinson, Dean Roehrich, Eric Sandeen, Yui Sakazume, Wesley Smith, Kerm Steffenhagen, Paddy Sreenivasan, Roger Strassburg, Andy Tran, Rebecca Underwood, Connie Woodward, Michelle Webster, Geoffrey Wehrman, Sammy Wilborn COPYRIGHT © 1999–2007 SGI. All rights reserved; provided portions may be copyright in third parties, as indicated elsewhere herein. No permission is granted to copy, distribute, or create derivative works from the contents of this electronic documentation in any manner, in whole or in part, without the prior written permission of SGI. LIMITED RIGHTS LEGEND The software described in this document is "commercial computer software" provided with restricted rights (except as to included open/free source) as specified in the FAR 52.227-19 and/or the DFAR 227.7202, or successive sections. Use beyond -

A Modern Primer on Processing in Memory

A Modern Primer on Processing in Memory Onur Mutlu (a,b), Saugata Ghose (b,c), Juan Gómez-Luna (a), Rachata Ausavarungnirun (d) SAFARI Research Group (a) ETH Zürich (b) Carnegie Mellon University (c) University of Illinois at Urbana-Champaign (d) King Mongkut’s University of Technology North Bangkok Abstract Modern computing systems are overwhelmingly designed to move data to computation. This design choice goes directly against at least three key trends in computing that cause performance, scalability and energy bottlenecks: (1) data access is a key bottleneck as many important applications are increasingly data-intensive, and memory bandwidth and energy do not scale well, (2) energy consumption is a key limiter in almost all computing platforms, especially server and mobile systems, (3) data movement, especially off-chip to on-chip, is very expensive in terms of bandwidth, energy and latency, much more so than computation. These trends are especially severely felt in the data-intensive server and energy-constrained mobile systems of today. At the same time, conventional memory technology is facing many technology scaling challenges in terms of reliability, energy, and performance. As a result, memory system architects are open to organizing memory in different ways and making it more intelligent, at the expense of higher cost. The emergence of 3D-stacked memory plus logic, the adoption of error correcting codes inside the latest DRAM chips, the proliferation of different main memory standards and chips specialized for different purposes (e.g., graphics, low-power, high bandwidth, low latency), and the necessity of designing new solutions to serious reliability and security issues, such as the RowHammer phenomenon, are evidence of this trend.