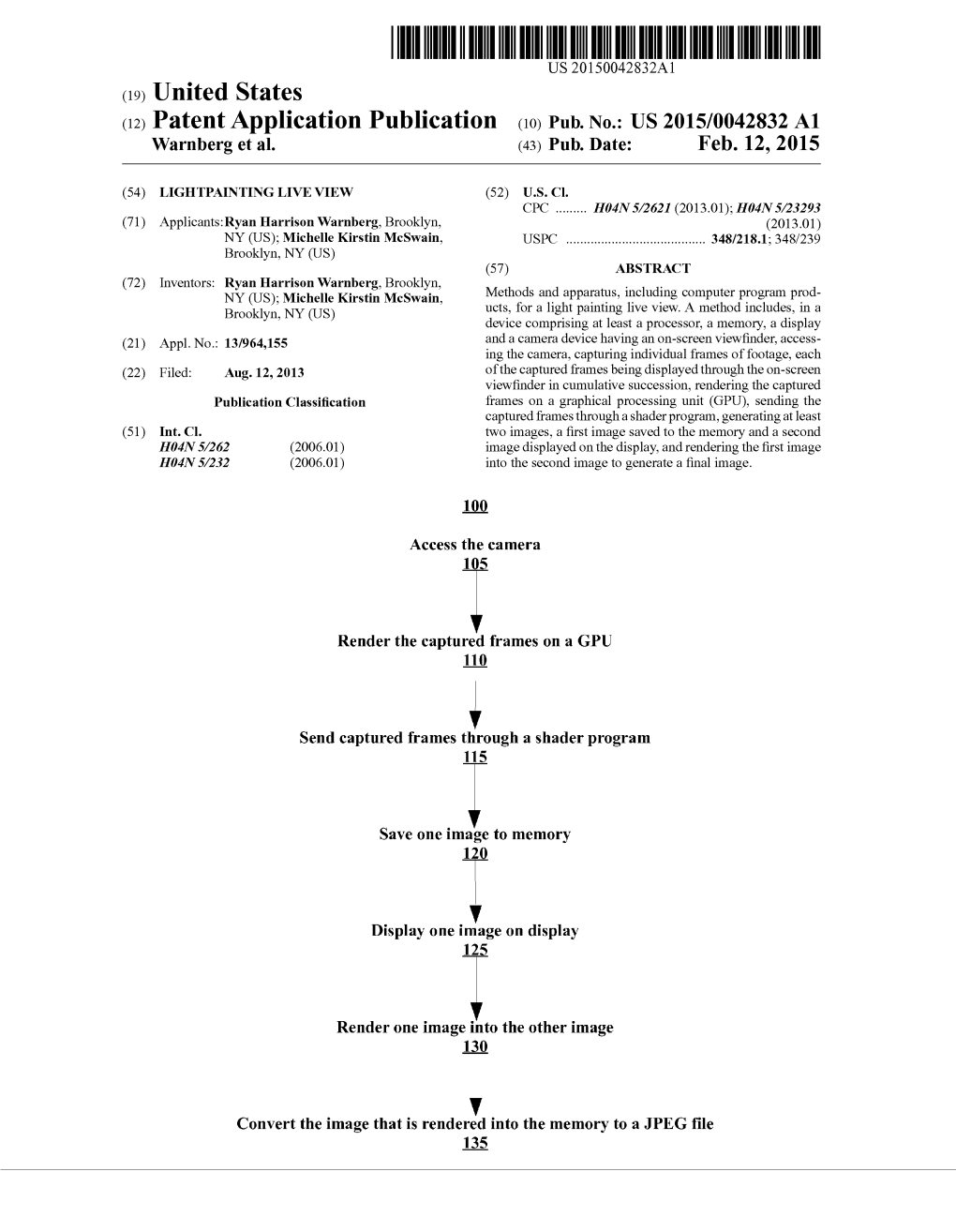

Methods, Apparatus, Including Computer Program Products, for a Light Painting Live View

Brooklyn, NY (US)

Total pages: 16

File type: PDF, size: 1020 Kb

Recommended publications

Light Painting (Slikanje svjetlom)

Light painting (slikanje svjetlom). Dr. sc. Maja Strgar Kurečić. Lecture contents: 1. Light painting: what is it? 2. A brief history 3. Commercial applications 4. Light painting techniques 5. Equipment 6. Examples. Application of Digital Photography in Reproduction Media, academic year 2014/15.

Light painting: what is it? Light painting is a photographic technique in which a light source (lamps, flashlights, sparklers, or similar) is used during a long exposure to draw shapes that produce interesting effects. It also goes by the names Light Painting, Freezelight, Light Graffiti, and Light Art.

A brief history. 1889, Étienne-Jules Marey and Georges Demeny: the first known photograph made with the light painting technique. As part of their research into movement, the two physiologists came up with the idea of recording motion by attaching light bulbs to a subject's joints and photographing them with a long exposure.

1914, Frank Gilbreth and Lillian Moller Gilbreth: aiming to increase their workers' productivity and simplify their tasks, they attached small lights to the workers' hands and photographed them with a long exposure that recorded every movement over a set period.

1935, Man Ray: the first photographer to use the technique for artistic purposes. He made a series of self-portraits with light painting (the "Space Writing" cycle).

1930s to 1940s, Gjon Mili: an Albanian photographer who lived and worked in America and contributed greatly to the development of light painting. Photoflash technique: the use of stroboscopic light to capture motion in a single exposure. A light source was attached to the skates of the ice skater Carol Lynne.

Troy Paiva's Lost (And Found) America

Late Delivery: One of Paiva’s workshop students brought this doll to a junkyard shoot, and Paiva says he “fell in love with it” and ended up carrying her around as a prop all night. This was shot just after 2 AM with a Canon 60D and a Tokina 12-24mm zoom (at 12mm). The exposure (at ISO 200, with the white balance set to 3800K) was 178 sec at f/8. The overall lighting came from the full moon, and the warm white, lime, and red set lighting came from a ProtoMachines flashlight.

[…] with the ecstasy of creation.” While the technique of light painting has been around since the beginnings of photography, Paiva uses a skillful, experimental combination of moonlight (he works exclusively by the light of the full moon) and handheld lighting tools to reimagine and expand the parameters of light painting and night photography. He has published two books of his work: Lost America: Night Photography of the Abandoned Roadside West (Motorbooks International, 2003) and Night Vision: The Art of Urban Exploration (Chronicle Books, 2008). His images have been used to illustrate countless magazine articles and dozens of book and CD covers, perhaps most notably the iconic images on the covers of Stephen King’s books Christine and From a Buick 8.

Boxy But Safe: A Volvo station wagon hangs in the jaws of a massive junkyard-shearing machine. Paiva shot the scene in a California auto graveyard with a Canon 60D and a Tokina 12-24mm zoom (at 12mm). He exposed the scene for 167 sec at f/8 (at ISO 200, with the white balance set at 3800K).

PHYSIOGRAM / Light Painting

Light painting, or light drawing, is a photographic technique in which exposures are made by moving a hand-held light source while taking a long-exposure photograph, either to illuminate a subject or to shine a point of light directly at the camera.

Light painting (also called light drawing) dates back to 1889, when Étienne-Jules Marey and Georges Demeny traced human motion in the first known light painting, “Pathological Walk From in Front”.

A variety of light sources can be used, ranging from simple flashlights to dedicated devices. Fiber-optic light pens, candles, fireworks, lighter flints, glow sticks, and poi are also popular.

A tripod is usually necessary because of the long exposure times involved. Alternatively, the camera may be placed on or braced against a table or other solid support. A shutter release cable or self-timer is generally used to minimize camera shake. Color gels can also be used to color the light sources.

OLYMPIA, MARC ROMEO LLORENTE, JONESSA MARIE: 85.8″, f/22, 160.

Physiograms are patterns created by a light source and a fixed swinging motion. You need:
1. A small light source (such as a Mini Maglite)
2. A length of string
3. An overhead support (I put a cup hook in my ceiling)

The setup: attach the string to the light source (the pattern depends largely on the length of the string), then attach the string to a hook on your support.
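The setup note says the pattern depends largely on the length of the string. Treating the rig as a simple pendulum (an assumption: small swings, a compact light, negligible air drag), the swing period is T = 2π√(L/g), so a longer string swings more slowly and traces fewer loops in a given exposure. The Python sketch below uses hypothetical helper names and borrows the 85.8″ exposure from the example above purely for illustration.

```python
import math

G = 9.81  # standard gravity, m/s^2


def pendulum_period(length_m: float) -> float:
    """Small-angle period of a simple pendulum, in seconds (idealized model)."""
    if length_m <= 0:
        raise ValueError("string length must be positive")
    return 2 * math.pi * math.sqrt(length_m / G)


def swings_per_exposure(length_m: float, exposure_s: float) -> float:
    """Approximate number of full swings recorded during one exposure."""
    return exposure_s / pendulum_period(length_m)


if __name__ == "__main__":
    for length in (0.5, 1.0, 2.0):  # hypothetical string lengths in metres
        print(f"{length:.1f} m string: period {pendulum_period(length):.2f} s, "
              f"about {swings_per_exposure(length, 85.8):.0f} swings in 85.8 s")
```

Because the period grows with the square root of the length, quadrupling the string length only doubles the period, so it takes a fairly large change in length to noticeably thin out the pattern.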

Photography Techniques Intermediate Skills

PDF generated using the open source mwlib toolkit. See http://code.pediapress.com/ for more information. PDF generated at: Wed, 21 Aug 2013 16:20:56 UTC.

Contents. Articles: Bokeh 1; Macro photography 5; Fill flash 12; Light painting 12; Panning (camera) 15; Star trail 17; Time-lapse photography 19; Panoramic photography 27; Cross processing 33; Tilted plane focus 34; Harris shutter 37. References: Article Sources and Contributors 38; Image Sources, Licenses and Contributors 39. Article Licenses: License 41.

Bokeh

In photography, bokeh (originally /ˈboʊkɛ/,[1] /ˈboʊkeɪ/ BOH-kay; also sometimes heard as /ˈboʊkə/ BOH-kə; Japanese: [boke]) is the blur,[2][3] or the aesthetic quality of the blur,[4][5] in out-of-focus areas of an image. Bokeh has been defined as "the way the lens renders out-of-focus points of light".[6] However, differences in lens aberrations and aperture shape cause some lens designs to blur the image in a way that is pleasing to the eye, while others produce blurring that is unpleasant or distracting: "good" and "bad" bokeh, respectively.[2] Bokeh occurs for parts of the scene that lie outside the depth of field. Photographers sometimes deliberately use a shallow focus technique to create images with prominent out-of-focus regions. Bokeh is often most visible around small background highlights, such as specular reflections and light sources, which is why it is often associated with such areas.[2] However, bokeh is not limited to highlights; blur occurs in all out-of-focus regions of the image.

[Figure: Coarse bokeh on a photo shot with an 85 mm lens and 70 mm entrance pupil diameter, which corresponds to f/1.2.]
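The f/1.2 in the bokeh caption follows from the definition of the f-number, N = f / D, where f is the focal length and D is the entrance-pupil diameter. A short Python sketch (function names are illustrative, not from the source) checks that arithmetic and, using the fact that the light collected scales as 1/N², estimates how many stops separate a wide aperture from a typical light painting aperture such as f/8.

```python
import math


def f_number(focal_length_mm: float, pupil_diameter_mm: float) -> float:
    """f-number N = focal length / entrance-pupil diameter."""
    return focal_length_mm / pupil_diameter_mm


def stops_between(n_wide: float, n_narrow: float) -> float:
    """Difference in stops between two f-numbers (light scales as 1/N^2)."""
    return 2 * math.log2(n_narrow / n_wide)


if __name__ == "__main__":
    n = f_number(85, 70)
    print(f"85 mm lens with a 70 mm entrance pupil: f/{n:.2f}")
    print(f"f/{n:.1f} to f/8: about {stops_between(n, 8):.1f} stops less light")
```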

How to Create Physiogram Long Exposure Photographs

A physiogram is a single long exposure of a flashlight spinning around on a string that is tied to a ceiling. You heard me: simply find a way to attach your flashlight to a string or shoelace or something similar, then put your camera on the ground with the widest angle you can get (focus on the light first, then switch autofocus off), turn off the room lights, turn on the flashlight, give it a good push, and take a long exposure of the physiogram. You can also achieve different effects by zooming in and out, or by changing focus, while the exposure is taking place. You can also use different colored lights and blend the shots later in Photoshop. When you take the photo, I recommend a 30-second exposure (unfortunately, the maximum shutter time on most DSLR cameras), or, if your camera has a BULB mode, you can use that.

[Examples of physiograms; the last image was manipulated afterward on a computer.]

You could theoretically create a physiogram with a sparkler too. Yep, a firework SPARKLER. How awesome would that be? I haven't done it before, but the idea would be to put your camera on the ground facing upward underneath some protective glass. Tie the sparkler to a tree branch, weight it down somehow, and take the shot the same way you would take a regular physiogram. You could also use a tripod pointing down at a mirror, with the light source above the mirror.
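To preview the kind of trail a swinging light leaves during a 30-second exposure, the Python sketch below uses a simplified harmonograph-style model rather than a true pendulum simulation. It assumes the light's x and y motions are independent damped oscillations with slightly different frequencies; the mismatch makes the relative phase drift, so the shape shrinks while slowly cycling between circle, tilted ellipse, and straight line. All parameter values (frequencies, damping, amplitude) are hypothetical, and the output is an SVG string with a white trail on black, mimicking a long exposure.

```python
import math


def physiogram_points(duration_s=30.0, steps=3000, fx=0.50, fy=0.51,
                      phase=math.pi / 2, damping=0.05, amplitude=100.0):
    """Sample an idealized light trail as (x, y) points.

    Model assumption: x and y are independent damped harmonic motions.
    The small fx/fy mismatch makes the phase between them drift over time.
    """
    points = []
    for i in range(steps + 1):
        t = duration_s * i / steps
        decay = math.exp(-damping * t)  # air drag slowly shrinks the swing
        x = amplitude * decay * math.cos(2 * math.pi * fx * t)
        y = amplitude * decay * math.cos(2 * math.pi * fy * t + phase)
        points.append((x, y))
    return points


def to_svg(points, size=220):
    """Render the trail as a thin white polyline on a black background."""
    half = size / 2  # centre the pattern in the image
    coords = " ".join(f"{x + half:.1f},{y + half:.1f}" for x, y in points)
    return (f'<svg xmlns="http://www.w3.org/2000/svg" width="{size}" height="{size}">'
            '<rect width="100%" height="100%" fill="black"/>'
            f'<polyline points="{coords}" fill="none" stroke="white" stroke-width="0.5"/>'
            "</svg>")


if __name__ == "__main__":
    svg = to_svg(physiogram_points())
    print(f"{len(svg)} characters of SVG")
```

Raising `damping` collapses the spiral faster, and widening the gap between `fx` and `fy` makes the shape morph more quickly, giving a denser pattern. Writing the returned string to a `.svg` file lets you open it in a browser.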

Light Painting

Best for ages: 6+
Workspace: any roomy, dark space
Is electricity required? Yes

Description
"Light Painting" is a photographic art form. Using a slow-exposure camera or a similar app on a phone or tablet, you will capture images of the things you draw in the air. You can add color by using different colored lights to make light painting art!

Materials
● Light sources: LEDs and coin cell batteries, holiday lights, flashlights, lights of different colors, a cell phone, etc.
● Light/photo capture device: an SLR camera with an f-stop high enough for a slow-exposure photograph, or a slow-exposure photography app on a phone or tablet.

What to Do
1. A DSLR camera is preferred, but you can use an SLR camera. Set your shutter speed between 11 and 30 seconds for a longer exposure.
2. Set the aperture between f/8 and f/32, and the ISO to 100 or 200.
3. When you use a digital camera, you can view pictures immediately and adjust settings as necessary.
4. If you don’t have a camera, simply download an app like “Light Trace Artist” and follow the instructions included in the app.
5. Now that you understand how to use the camera or the app, take your light sources to a dark space.
6. For practice, start your camera/app and move the light through the air in a circular motion. Observe the photo and make adjustments as necessary. Repeat.
7. Now that you understand the process and the camera/app, experiment with different lights, colors, speeds, and motions to create unique light paintings.

Concepts Explored
● Technology
● Design
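The worksheet gives ranges (11 to 30 seconds, f/8 to f/32, ISO 100 or 200) but not how they trade off. The standard exposure-value relation, EV = log₂(N²/t) at ISO 100, with one stop subtracted for each doubling of ISO, lets you compare combinations: settings with the same ISO-100-normalized value render the same scene equally bright. A minimal Python sketch, with a hypothetical function name:

```python
import math


def ev100(f_number: float, shutter_s: float, iso: float = 100) -> float:
    """Exposure value of a camera setting, normalized to ISO 100.

    Equal values render the same scene equally bright; a lower value
    gives a brighter image of the same scene.
    """
    return math.log2(f_number ** 2 / shutter_s) - math.log2(iso / 100)


if __name__ == "__main__":
    # Combinations drawn from the worksheet's ranges
    for n, t, iso in [(8, 30, 100), (8, 11, 200), (16, 30, 200), (32, 30, 200)]:
        print(f"f/{n}, {t} s, ISO {iso}: EV100 = {ev100(n, t, iso):.2f}")
```

For example, moving from f/8 to f/16 at the same time and ISO raises the value by 2 (two stops less light), which you could offset by quadrupling the exposure time or by raising the ISO two stops.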

Spring 2021 LIGHT PAINTING LESSON PLAN

Digital and Film Photography, Mr. Stiles, Spring 2021. LIGHT PAINTING LESSON PLAN

Resources: Samples of light painting online; Written tutorial 1; Written tutorial 2; Video tutorial; Sprint commercial 1; Sprint commercial 2; Light painting tools.

First, set your camera on a stable surface or on a tripod. If your camera has shutter speed controls, use Manual mode (or Bulb) or Shutter Priority and set a shutter speed of 5 to 15 seconds. Don’t forget to pre-focus and frame your shot beforehand with the lights on if you are indoors. Now turn the lights off and take a shot. Aim your light source at the camera lens to draw with the light, or aim it at the subject for less distinct light. Try both and see what you like! To do this on a camera without shutter speed controls (as on some point-and-shoots), try “Night mode”, “Fireworks mode”, “Indoor mode”, or “Slow Sync Flash mode”. Experiment!

Objective: To learn how to use very slow shutter speeds at night.
Assessment: Students will turn in 12 photos using this technique.
Due: Monday 3/15/21. Value: 100 pts. for Photo 1 & 2.

Lesson Plan Outline
Instructor: James Stiles
Course: Digital Photography
What Students Will Learn or Be Able to Do as a Result of This Lesson (main learning objectives for the lesson; identify the section in the course outline where each objective is located, for example, section S.A.I):
1. Students will learn how to use very slow shutter speeds at night and in a darkened room in order to create "light painting photographs".
2. Through this, they will understand the creative effects of subject motion and shutter speed and how the two relate to each other.
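Objective 2 asks students to relate subject motion to shutter speed. One way to make that concrete (an illustrative model, not part of the lesson plan) is to estimate how long a moving light's trail appears in the frame: the light travels v·t during the exposure, and for a subject much farther away than the focal length, that path shrinks on the sensor by roughly focal length / distance. The Python sketch below assumes motion parallel to the sensor and a 36 mm (full-frame) sensor width by default; the function name and example values are hypothetical.

```python
def streak_fraction_of_frame(speed_m_s: float, shutter_s: float, focal_mm: float,
                             distance_m: float, sensor_width_mm: float = 36.0) -> float:
    """Approximate length of a light trail as a fraction of the frame width.

    Assumes the light moves parallel to the sensor at a distance much larger
    than the focal length, so image size ≈ object size × focal length / distance.
    """
    if distance_m <= 0 or sensor_width_mm <= 0:
        raise ValueError("distance and sensor width must be positive")
    path_m = speed_m_s * shutter_s             # total distance the light travels
    image_mm = path_m * focal_mm / distance_m  # m × mm / m = mm on the sensor
    return image_mm / sensor_width_mm


if __name__ == "__main__":
    for shutter in (5, 10, 15):  # the lesson's 5 to 15 second range
        frac = streak_fraction_of_frame(0.5, shutter, 24, 3)
        print(f"{shutter:>2} s: trail ≈ {frac:.2f} frame widths")
```

With these example numbers, a light moved at 0.5 m/s from 3 m away with a 24 mm lens draws a trail about 0.56 frame widths long in 5 seconds; at 15 seconds the path is about 1.67 frame widths, so the light has to curve or loop back to stay in the picture.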

Night & Long Exposure Photography

Equipment & Basic Settings
Take only photos, leave only footprints.

Equipment
Camera
• Camera with manual exposure control (aperture, ISO, exposure time, white balance).
• Remove the strap or tie it down so the wind can't move it (movement blurs long-exposure images).
Tripod
• Sturdy tripod with individually adjustable legs; ball heads are more flexible than 3-way heads.
• Velcro strips are useful for holding the timer, camera strap, etc.
Lenses
• The best range is below 100 mm on full frame (70 mm on a crop sensor). Wide angles are often used.
• You will rarely zoom in, because any camera motion is amplified at longer focal lengths. Remove any filters (they cause reflections!).
• Important: bring your lens hoods! They prevent flare from street lights.
Intervalometer or Timer
• Many night exposures are much longer than 30 s, the limit of most cameras (and of their internal intervalometers).
• Intervalometers are more powerful than a remote on/off control. They are a must-have for star trails.
Flashlights (no headlights!)
• Bring a VERY DIM light (pen light or keychain light) for camera or lens adjustments.
• Brighter or colored flashlights are for light painting.
• Be considerate of others when using flashlights! Minimize their use, and do not blind yourself.
Other stuff
• Hiking boots; water and snacks; suitable clothing (it's cooler at night, and you are standing around, not moving much); a microfiber cloth; spare batteries (and charge the one in the camera!).

Camera Settings
• It's more difficult to see in the dark: learn to use your controls blindly (less flashlight use!).
  o Practice this on your living room couch! Turn off the lights and play with your camera.
• Shoot in RAW format! It gives much more latitude for recovering highlights and shadows.
• Learn to use Live View and to zoom in with the +/- controls.
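The handout notes that many night exposures run well past the 30 s limit and calls intervalometers a must-have for star trails. A common approach (an assumption here; the handout does not describe it) is to shoot many consecutive 30 s frames and stack them afterward. The Python sketch below estimates how far each star sweeps around the celestial pole during a session, using Earth's sidereal rotation period of about 86,164 s (roughly 15° per hour), and how many intervalometer shots cover that session. The one-second `gap_s` between frames is a hypothetical default; check your own camera's minimum interval.

```python
import math

SIDEREAL_DAY_S = 86164.09  # one rotation of Earth relative to the stars, in seconds


def star_trail_arc_deg(total_s: float) -> float:
    """Angle (degrees) each star sweeps around the celestial pole."""
    return 360.0 * total_s / SIDEREAL_DAY_S


def frames_needed(total_s: float, exposure_s: float, gap_s: float = 1.0) -> int:
    """Number of intervalometer shots needed to cover total_s."""
    if exposure_s <= 0 or gap_s < 0:
        raise ValueError("exposure must be positive and gap non-negative")
    return math.ceil(total_s / (exposure_s + gap_s))


if __name__ == "__main__":
    session_s = 60 * 60  # one hour of shooting
    print(f"Arc per star: {star_trail_arc_deg(session_s):.1f} degrees")
    print(f"Frames at 30 s + 1 s gap: {frames_needed(session_s, 30)}")
```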

The Photographic Application of Piet Mondrian's Neo-Plastic Principles

THE PHOTOGRAPHIC APPLICATION OF PIET MONDRIAN’S NEO-PLASTIC PRINCIPLES
by William Adam Sawalich
A thesis submitted in partial fulfillment of the requirements for the degree of Master of Science in Photography.
Brooks Institute of Photography, Santa Barbara, California, June 2001
© 2001 William Adam Sawalich. ALL RIGHTS RESERVED

TABLE OF CONTENTS
List of Plates iii
List of Figures vi
Acknowledgments vii
Abstract viii
Preface ix
Chapter One: The Need For Neo-Plasticism 1
Chapter Two: Mondrian And De Stijl 5
Chapter Three: Photography 19
Chapter Four: A Historical Perspective 31
Chapter Five: Contemporary Photography 42
Chapter Six: Application Of The Neo-Plastic Method 56
Chapter Seven: Interpreting Results 61
Chapter Eight: The New Plastic In Photography 78
Works Cited 85
Appendices 88

PLATES
1. Unknown. Piet Mondrian in a Theosophic meditational pose. 5, 100
2. Arnold Newman. Piet Mondrian in his studio. 5, 36, 101
3. Piet Mondrian. Composition With Red, Yellow And Blue (1921). 6, 102
4. Piet Mondrian. Composition. 6, 103
5. Piet Mondrian. Composition In A Square. 6, 104
6. Piet Mondrian. Composition With Red, Yellow And Blue (1932). 6, 105
7. Piet Mondrian. Trafalgar Square. 6, 106
8. Piet Mondrian. Mill At Evening. 7, 107
9. Piet Mondrian. Composition In Blue, Grey And Pink. 9, 108
10. Piet Mondrian. Composition Number 9 (Blue Façade). 9, 109
11. Edward Weston. Pepper. 32, 110
12. Edward Weston. Cabbage Leaf. 32, 111
13. Harry Callahan. Weeds Against Sky, Detroit. 33, 112
14. Harry Callahan. Chicago. 33, 113
15. Aaron Siskind. New York No. 6. 34, 114
16. …

Drawing and Photography a Resource for Teachers

Drawing and Photography: A Resource for Teachers
Suitable for Key Stage 2–5 Art & Design
Lynn Weddle; Juliette Buss; July 2017
photoworks.org.uk #photoworks @photoworks_uk

CONTENTS
[4] Introduction
[5] How to use this pack
[6] Activities
[13] Artists working with drawing and photography
[15] Share your students’ work with us
[16] Useful links

All images: Lynn Weddle

“Drawing as a form of communication has transcended history and cultures. In arts education it is viewed as central to students’ visual and creative thinking. Drawing is a key skill for pupils wishing to work in the sector.” (Making a mark: art, craft and design education, Ofsted, 2011)

INTRODUCTION
Drawing and photography both investigate representation and present themselves via the two-dimensional surface. Parallels exist within the grain of film, the texture of paper, the metallic surfaces of graphite, and the silver within emulsion.

Children draw as a way to record and respond to their experiences. In the early years, children are confident in imaginative drawing, play, and creative mark making. Drawing becomes more representational as they progress through the education system, and many teachers comment on confidence levels falling. However, at the same time, many children also gravitate to drawing, preferring it to any other activity.

Drawing and photography can take many forms and be integrated in many ways. This resource invites you to embrace, challenge, and explore the links between the two mediums and how they can be creatively …

Painting with Light: Generative Artworks Or “Setting in Motion” the Fourth Dimension

10th Generative Art Conference GA2007
Painting with light: generative artworks or “setting in motion” the fourth dimension
Dr Marcella Giulia Lorenzi, PhD, Laboratory for Scientific Communication, University of Calabria, Arcavacata (CS), Italy
Prof. Mauro Francaviglia, Department of Mathematics, University of Torino, Torino, Italy; Laboratory for Scientific Communication, University of Calabria, Arcavacata (CS), Italy

The Camera and the Visible
"I'm an eye. A mechanical eye. I, the machine, show you a world the way only I can see it. I free myself for today and forever from human immobility. I'm in constant movement. I approach and pull away from objects. I creep under them. I move alongside a running horse's mouth. I fall and rise with the falling and rising bodies. This is I, the machine, manoeuvring in the chaotic movements, recording one movement after another in the most complex combinations. Freed from the boundaries of time and space, I co-ordinate any and all points of the universe, wherever I want them to be. My way leads towards the creation of a fresh perception of the world. Thus I explain in a new way the world unknown to you." Dziga Vertov, 1923¹

Abstract
The word “Photography” comes from two Greek words, Photos (light) and Graphos (writing, painting), hence “drawing with light”. Taking pictures requires some devices and a particular process in Space and Time. According to Einstein, the basic structure of our world is SpaceTime, and things exist in a spacetime continuum, a world of four dimensions: height, width, depth, and time.

LIGHT PAINTING: A “How-To” for Getting Started

WHAT IS LIGHT PAINTING
Light painting, or light drawing, is a photographic technique in which a photo is created during a long exposure by moving a hand-held light source, using it either as a direct light source or to illuminate a subject. You can also keep the light source stationary and move the camera for light painting effects.

WHAT’S NEEDED TO LIGHT PAINT
• Camera.
• Wider-angle lens: 18mm-55mm, 24mm-70mm, etc.
• Remote trigger that works with bulb mode.
• Tripod.
• Light source: flashlight, candle, lantern, flame, sparklers, etc.
• Some darkness.
• Dark clothing.

CREATING THE SHOT
• Pick your site: something of interest.
• Walk through in your mind what you want to do and pick the appropriate tools. Give it some thought!
• Set up the camera and choose your settings. Camera in bulb mode. ISO: start at 100. Aperture: start at f/5.6 to f/7.1. Metering mode: Matrix or Evaluative.
• Shine light on your subject, frame your composition, and focus; autofocus is OK. Remember to leave enough room to light paint. You can always crop later.
• Turn off your autofocus or use back-button focus.

TOOLS
• Universal adaptor.
• Light swords.
• Light blades.
• Light pens, light writers.
• Fiber optics and fiber optic whips.
• Light sticks, light wands.
• Light hoops.
• Light strings, fairy lights.
• Plastic bottles, fluorescent bulb covers, colored gels.

FLASHLIGHT TYPES
• Can go as low as 100 lumens for direct light painting or close-up illumination.
• 700-1000 lumens with a Cree LED (square yellow chip) and an 18650 battery is recommended to illuminate subjects that are farther away.
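The flashlight guidance (about 100 lumens up close, 700 to 1000 lumens for distant subjects) is consistent with the inverse-square law: illuminance falls with the square of distance, so a subject twice as far away receives a quarter of the light. The Python sketch below idealizes a flashlight as sending all its lumens uniformly into a cone of a given beam angle; real beams have a hot spot and spill, and the 20° angle in the example is a hypothetical value, not a specification of any particular light.

```python
import math


def beam_intensity_cd(lumens: float, beam_angle_deg: float) -> float:
    """Average luminous intensity (candela) if all flux fills a uniform cone."""
    if not 0 < beam_angle_deg <= 360:
        raise ValueError("beam angle must be in (0, 360] degrees")
    half_angle = math.radians(beam_angle_deg) / 2
    solid_angle_sr = 2 * math.pi * (1 - math.cos(half_angle))  # cone solid angle
    return lumens / solid_angle_sr


def illuminance_lux(lumens: float, beam_angle_deg: float, distance_m: float) -> float:
    """Inverse-square illuminance on a surface facing the light, in lux."""
    return beam_intensity_cd(lumens, beam_angle_deg) / distance_m ** 2


if __name__ == "__main__":
    for lm, d in [(100, 1), (100, 5), (1000, 5)]:
        print(f"{lm} lm, 20° beam, {d} m: {illuminance_lux(lm, 20, d):.0f} lux")
```

Under this model, a 1000-lumen light illuminates a subject at about 3.2 m (√10 × 1 m) as brightly as a 100-lumen light does at 1 m, which matches the advice to step up in power for farther subjects.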