A Review of Current and Next Generation Spam Filtering Tools

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Trend Micro™ Hosted Email Security

Trend Micro™ Hosted Email Security Best Practice Guide. Trend Micro Incorporated reserves the right to make changes to this document and to the products described herein without notice. The names of companies, products, people, characters, and/or data mentioned herein are fictitious and are in no way intended to represent any real individual, company, product, or event, unless otherwise noted. Complying with all applicable copyright laws is the responsibility of the user. Copyright © 2016 Trend Micro Incorporated. All rights reserved. Trend Micro, the Trend Micro t-ball logo, and TrendLabs are trademarks or registered trademarks of Trend Micro, Incorporated. All other brand and product names may be trademarks or registered trademarks of their respective companies or organizations. No part of this publication may be reproduced, photocopied, stored in a retrieval system, or transmitted without the express prior written consent of Trend Micro Incorporated. Authors: Michael Mortiz, Jefferson Gonzaga. Editorial: Jason Zhang. Released: June 2016. Table of Contents: 1 Best Practice Configurations; 1.1 Activating a domain; 1.2 Adding Approved/Blocked Sender; 1.3 HES order -

Show Me the Money: Characterizing Spam-Advertised Revenue

Show Me the Money: Characterizing Spam-advertised Revenue. Chris Kanich∗, Nicholas Weaver†, Damon McCoy∗, Tristan Halvorson∗, Christian Kreibich†, Kirill Levchenko∗, Vern Paxson†‡, Geoffrey M. Voelker∗, Stefan Savage∗. ∗Department of Computer Science and Engineering, University of California, San Diego; †International Computer Science Institute, Berkeley, CA; ‡Computer Science Division, University of California, Berkeley.

Abstract: Modern spam is ultimately driven by product sales: goods purchased by customers online. However, while this model is easy to state in the abstract, our understanding of the concrete business environment (how many orders, of what kind, from which customers, for how much) is poor at best. This situation is unsurprising since such sellers typically operate under questionable legal footing, with “ground truth” data rarely available to the public. However, absent quantifiable empirical data, “guesstimates” operate unchecked and can distort both policy making and our choice of appropriate interventions. In this paper, we describe two inference techniques for peering inside the business operations of spam-advertised enterprises: purchase pair and [...]

[...] money at all [6]. This situation has the potential to distort policy and investment decisions that are otherwise driven by intuition rather than evidence. In this paper we make two contributions to improving this state of affairs using measurement-based methods to estimate:

• Order volume. We describe a general technique, purchase pair, for estimating the number of orders received (and hence revenue) via on-line store order numbering. We use this approach to establish rough, but well-founded, monthly order volume estimates for many of the leading “affiliate programs” selling counterfeit pharmaceuticals and software.
• Purchasing behavior. -
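
The excerpt above says only that purchase pair estimates order volume from on-line store order numbering. As a minimal sketch of that idea, and not the authors' actual procedure, assume a store assigns sequential order numbers that advance by one per order. Two test purchases placed some time apart then bound the number of orders received in between. The function name `estimate_daily_orders`, the `(timestamp, order_number)` input shape, and the sample figures are all hypothetical.

```python
from datetime import datetime


def estimate_daily_orders(first, second):
    """Estimate orders per day from two test purchases.

    Each argument is a hypothetical (timestamp, order_number) pair recorded
    when a test order was placed at the same store.
    """
    # Put the two purchases in chronological order.
    (t1, n1), (t2, n2) = sorted([first, second])
    elapsed_days = (t2 - t1).total_seconds() / 86400
    if elapsed_days <= 0:
        raise ValueError("purchases must be placed at different times")
    if n2 < n1:
        raise ValueError("order numbers are not increasing over time")
    # Assumption: the counter advances by one per order, so the gap is an
    # upper bound on the orders placed between the two purchases.
    return (n2 - n1) / elapsed_days


# Made-up example: two purchases ten days apart, 500 order numbers apart.
rate = estimate_daily_orders(
    (datetime(2011, 1, 1), 1000),
    (datetime(2011, 1, 11), 1500),
)
print(rate)       # estimated orders per day
print(rate * 30)  # rough 30-day order volume
```

If a store skips numbers or shares one counter across several storefronts, the gap overstates the true volume, so the result is best read as an upper bound.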

Zambia and Spam

ZAMNET COMMUNICATION SYSTEMS LTD (ZAMBIA). Spam – The Zambian Experience. Submission to ITU WSIS Thematic meeting on countering Spam. By: Annabel S Kangombe – Maseko, June 2004. Table of Contents: 1.0 Introduction; 1.1 What is spam?; 1.2 The nature of Spam; 1.3 Statistics; 2.0 Technical view; 2.1 Main Sources of Spam; 2.1.1 Harvesting; 2.1.2 Dictionary Attacks; 2.1.3 Open Relays; 2.1.4 Email databases; 2.1.5 Inadequacies in the SMTP protocol; 2.2 Effects of Spam; 2.3 The fight against spam; 2.3.1 Blacklists; 2.3.2 White lists; 2.3.3 Dial-up Lists (DUL); 2.3.4 Spam filtering programs; 2.4 Challenges of fighting spam; 3.0 Legal Framework; 3.1 Laws against spam in Zambia; 3.2 International Regulations or Laws; 3.2.1 US State Laws; 3.2.2 The USA’s CAN-SPAM Act; 4.0 The Way forward; 4.1 A global effort; 4.2 Collaboration between ISPs; 4.3 Strengthening Anti-spam regulation; 4.4 User education; 4.5 Source authentication; 4.6 Rewriting the Internet Mail Exchange protocol. 1.0 Introduction: I get to the office in the morning, walk to my desk and switch on the computer. One of the first things I do after checking the status of the network devices is to check my email. -

Email Phishing for IT Providers: How Phishing Emails Have Changed and How to Protect Your IT Clients

Email Phishing for IT Providers: How phishing emails have changed and how to protect your IT clients. © 2016 Calyptix Security Corporation. All rights reserved. | [email protected] | (800) 650-8930. Contents: Introduction; Phishing overview; Trends in phishing emails; Email phishing tactics; Steps for MSP & VARS; Advice for your clients; Sources. Introduction: There are only so many ways to break into a bank. You can march through the door. You can climb through a window. You can tunnel through the floor. There is the service entrance, the employee entrance, and access on the roof. Criminals who want to rob a bank will probably use an open route – such as a side door. It’s easier than breaking down a wall. Criminals who want to break into your network face a similar challenge. They need to enter. They can look for a weakness in your -

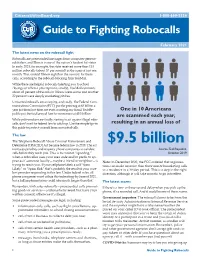

CUB Guide to Fighting Robocalls

CitizensUtilityBoard.org, 1-800-669-5556. Guide to Fighting Robocalls, February 2021. The latest news on the robocall fight: Robocalls are prerecorded messages from computer-generated dialers, and Illinois is one of the nation’s hardest hit states. In early 2021, for example, the state received more than 153 million robocalls (about 57 per second) in the span of just one month. That ranked Illinois eighth in the country for these calls, according to the robocall-blocking firm YouMail. While there are helpful robocalls (alerting you to school closings or when a prescription is ready), YouMail estimates about 42 percent of the calls in Illinois were scams and another 22 percent were simply marketing pitches. Unwanted robocalls are annoying, and costly. The Federal Communications Commission (FCC) put the price tag at $3 billion a year just from lost time, not even counting any fraud. TechRepublic put the total annual loss for consumers at $9.5 billion. While policymakers are finally starting to act against illegal robocalls, don’t wait for federal law to catch up. Use the simple tips in this guide to protect yourself from unwanted calls. The law: The Telephone Robocall Abuse Criminal Enforcement and Deterrence (TRACED) Act became federal law in 2019. The act increases penalties and requires phone companies to validate calls before they reach you. This is to combat “spoofing,” when a robocaller uses your area code and/or prefix to appear as if someone locally, maybe a friend or neighbor, is trying to reach you. (Sidebar: One in 10 Americans are scammed each year, resulting in an annual loss of $9.5 billion. Source: TechRepublic, October 2019.) Note: In December 2020, the FCC ordered that organiza- -

Locating Spambots on the Internet

BOTMAGNIFIER: Locating Spambots on the Internet. Gianluca Stringhini§, Thorsten Holz‡, Brett Stone-Gross§, Christopher Kruegel§, and Giovanni Vigna§. §University of California, Santa Barbara; ‡Ruhr-University Bochum. fgianluca,bstone,chris,[email protected] [email protected]

Abstract: Unsolicited bulk email (spam) is used by cyber-criminals to lure users into scams and to spread malware infections. Most of these unwanted messages are sent by spam botnets, which are networks of compromised machines under the control of a single (malicious) entity. Often, these botnets are rented out to particular groups to carry out spam campaigns, in which similar mail messages are sent to a large group of Internet users in a short amount of time. Tracking the bot-infected hosts that participate in spam campaigns, and attributing these hosts to spam botnets that are active on the Internet, are challenging but important tasks. In particular, this infor- [...]

[...] the world-wide email traffic [20], and a lucrative business has emerged around them [12]. The content of spam emails lures users into scams, promises to sell cheap goods and pharmaceutical products, and spreads malicious software by distributing links to websites that perform drive-by download attacks [24]. Recent studies indicate that, nowadays, about 85% of the overall spam traffic on the Internet is sent with the help of spamming botnets [20,36]. Botnets are networks of compromised machines under the direction of a single entity, the so-called botmaster. While different botnets serve different, nefarious goals, one important purpose of botnets is the distribution of spam emails. -

The History of Spam: Timeline of Events and Notable Occurrences in the Advance of Spam

The History of Spam: Timeline of events and notable occurrences in the advance of spam. July 2014. The growth of unsolicited e-mail imposes increasing costs on networks and causes considerable aggravation on the part of e-mail recipients. The history of spam is one that is closely tied to the history and evolution of the Internet itself.
1971: RFC 733: Mail Specifications
1978: First email spam was sent out to users of ARPANET; it was an ad for a presentation by Digital Equipment Corporation (DEC)
1984: Domain Name System (DNS) introduced
1986: Eric Thomas develops first commercial mailing list program, called LISTSERV
1988: First known email chain letter sent
1988: “Spamming” starts as a prank by participants in multi-user dungeon (MUD) games to fill rivals' accounts with unwanted electronic junk mail.
1990: ARPANET terminates
1993: First use of the term spam was for a post from USENET by Richard Depew to news.admin.policy, which was the result of a bug in a software program that caused 200 messages to go out to the news group. The term “spam” itself was thought to have come from the spam skit by Monty Python's Flying Circus. In the sketch, a restaurant serves all its food with lots of spam, and the waitress repeats the word several times in describing how much spam is in the items. When she does this, a group of Vikings in the corner start a song, "Spam, spam, spam, spam, spam, spam, spam, spam, lovely spam! Wonderful spam!", until told to shut up. -

A Survey of Learning-Based Techniques of Email Spam Filtering

A Survey of Learning-Based Techniques of Email Spam Filtering. Enrico Blanzieri, University of Trento, Italy, and Anton Bryl, University of Trento, Italy, Create-Net, Italy. [email protected] January 11, 2008 (Updated version). Technical Report # DIT-06-056.

Abstract: Email spam is one of the major problems of the today’s Internet, bringing financial damage to companies and annoying individual users. Among the approaches developed to stop spam, filtering is an important and popular one. In this paper we give an overview of the state of the art of machine learning applications for spam filtering, and of the ways of evaluation and comparison of different filtering methods. We also provide a brief description of other branches of anti-spam protection and discuss the use of various approaches in commercial and non- [...]

[...] vertising pornography, pyramid schemes, etc. [68]. The total worldwide financial losses caused by spam in 2005 were estimated by Ferris Research Analyzer Information Service at $50 billion [31]. Lately, Goodman et al. [39] presented an overview of the field of anti-spam protection, giving a brief history of spam and anti-spam and describing major directions of development. They are quite optimistic in their conclusions, indicating learning-based spam recognition, together with anti-spoofing technologies and economic approaches, as one of the measures which together will probably lead to the final victory over email spammers in the near future. -

Detecting Phishing in Emails Srikanth Palla and Ram Dantu, University of North Texas, Denton, TX

Detecting Phishing in Emails. Srikanth Palla and Ram Dantu, University of North Texas, Denton, TX.

Abstract: Phishing attackers misrepresent the true sender and steal consumers' personal identity data and financial account credentials. Though phishers try to counterfeit the websites in the content, they do not have access to all the fields in the email header. Our classification method is based on the information provided in the email header (rather than the content of the email). We believe the phisher cannot modify the complete header, though he can forge certain fields. We based our classification on three kinds of analyses on the header: DNS-based header analysis, Social Network analysis and Wantedness analysis. In the DNS-based header analysis, we classified the corpus into 8 buckets and used social network analysis to further reduce the false positives. We introduced a concept of wantedness and credibility, and derived equations to calculate the wantedness values of the email senders.

[...] announcements. The third category of spammers is called phishers. Phishing attackers misrepresent the true sender and steal the consumers' personal identity data and financial account credentials. These spammers send spoofed emails and lead consumers to counterfeit websites designed to trick recipients into divulging financial data such as credit card numbers, account usernames, passwords and social security numbers. By hijacking brand names of banks, e-retailers and credit card companies, phishers often convince the recipients to respond. Legislation can not help since a majority number of phishers do not belong to the United States. In this paper we present a new method for recognizing phishing attacks so that the consumers can be vigilant and not fall prey to these counterfeit websites. Based on the relation between credibility and phishing frequency, we classify the phishers into i) Prospective Phishers ii) Suspects iii) Recent Phishers iv) Serial -

Efficient Spam Filtering System Based on Smart Cooperative Subjective and Objective Methods*

Int. J. Communications, Network and System Sciences, 2013, 6, 88-99. http://dx.doi.org/10.4236/ijcns.2013.62011. Published Online February 2013 (http://www.scirp.org/journal/ijcns). Efficient Spam Filtering System Based on Smart Cooperative Subjective and Objective Methods*. Samir A. Elsagheer Mohamed (1, 2). (1) College of Computer, Qassim University, Qassim, KSA; (2) Electrical Engineering Department, Faculty of Engineering, Aswan University, Aswan, Egypt. Email: [email protected], [email protected]. Received September 17, 2012; revised January 16, 2013; accepted January 25, 2013.

ABSTRACT: Most of the spam filtering techniques are based on objective methods such as the content filtering and DNS/reverse DNS checks. Recently, some cooperative subjective spam filtering techniques are proposed. Objective methods suffer from the false positive and false negative classification. Objective methods based on the content filtering are time consuming and resource demanding. They are inaccurate and require continuous update to cope with newly invented spammer’s tricks. On the other side, the existing subjective proposals have some drawbacks like the attacks from malicious users that make them unreliable and the privacy. In this paper, we propose an efficient spam filtering system that is based on a smart cooperative subjective technique for content filtering in addition to the fastest and the most reliable non-content-based objective methods. The system combines several applications. The first is a web-based system that we have developed based on the proposed technique. A server application having extra features suitable for the enterprises and closed work groups is a second part of the system. Another part is a set of standard web services that allow any existing email server or email client to interact with the system. -

Zerohack Zer0pwn Youranonnews Yevgeniy Anikin Yes Men

Zerohack Zer0Pwn YourAnonNews Yevgeniy Anikin Yes Men YamaTough Xtreme x-Leader xenu xen0nymous www.oem.com.mx www.nytimes.com/pages/world/asia/index.html www.informador.com.mx www.futuregov.asia www.cronica.com.mx www.asiapacificsecuritymagazine.com Worm Wolfy Withdrawal* WillyFoReal Wikileaks IRC 88.80.16.13/9999 IRC Channel WikiLeaks WiiSpellWhy whitekidney Wells Fargo weed WallRoad w0rmware Vulnerability Vladislav Khorokhorin Visa Inc. Virus Virgin Islands "Viewpointe Archive Services, LLC" Versability Verizon Venezuela Vegas Vatican City USB US Trust US Bankcorp Uruguay Uran0n unusedcrayon United Kingdom UnicormCr3w unfittoprint unelected.org UndisclosedAnon Ukraine UGNazi ua_musti_1905 U.S. Bankcorp TYLER Turkey trosec113 Trojan Horse Trojan Trivette TriCk Tribalzer0 Transnistria transaction Traitor traffic court Tradecraft Trade Secrets "Total System Services, Inc." Topiary Top Secret Tom Stracener TibitXimer Thumb Drive Thomson Reuters TheWikiBoat thepeoplescause the_infecti0n The Unknowns The UnderTaker The Syrian electronic army The Jokerhack Thailand ThaCosmo th3j35t3r testeux1 TEST Telecomix TehWongZ Teddy Bigglesworth TeaMp0isoN TeamHav0k Team Ghost Shell Team Digi7al tdl4 taxes TARP tango down Tampa Tammy Shapiro Taiwan Tabu T0x1c t0wN T.A.R.P. Syrian Electronic Army syndiv Symantec Corporation Switzerland Swingers Club SWIFT Sweden Swan SwaggSec Swagg Security "SunGard Data Systems, Inc." Stuxnet Stringer Streamroller Stole* Sterlok SteelAnne st0rm SQLi Spyware Spying Spydevilz Spy Camera Sposed Spook Spoofing Splendide -

Account Administrator's Guide

ePrism Email Security Account Administrator’s Guide - V10.4. 4225 Executive Sq, Ste 1600, La Jolla, CA 92037-1487. Give us a call: 1-800-782-3762. Send us an email: [email protected]. For more info, visit us at: www.edgewave.com. © 2001-2016 EdgeWave. All rights reserved. The EdgeWave logo is a trademark of EdgeWave Inc. All other trademarks and registered trademarks are hereby acknowledged. Microsoft and Windows are either registered trademarks or trademarks of Microsoft Corporation in the United States and/or other countries. Other product and company names mentioned herein may be the trademarks of their respective owners. The Email Security software and its documentation are copyrighted materials. Law prohibits making unauthorized copies. No part of this software or documentation may be reproduced, transmitted, transcribed, stored in a retrieval system, or translated into another language without prior permission of EdgeWave.

Contents. Chapter 1 Overview: Overview of Services; Email Filtering (EMF); Archive; Continuity; Encryption; Data Loss Protection (DLP); Personal Health Information; Personal Financial Information; Document Conventions; Other Conventions; Supported Browsers; Reporting Spam to EdgeWave; Contacting Us; Additional Resources. Chapter 2 Portal Overview: Navigation Tree; Work Area; Navigation Icons; Getting Started; Logging into the portal for the first time; Logging into the portal after registration; Changing Your Personal Information; Configuring Accounts. Chapter 3 EdgeWave Administrator