On the Control of Stochastic Systems

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Closed Loop Transfer Function

Lecture 4 Transfer Function and Block Diagram Approach to Modeling Dynamic Systems System Analysis Spring 2015 1 The Concept of Transfer Function Consider the linear time-invariant system defined by the following differential equation : ()nn ( 1) ay01 ay ann 1 y ay ()mm ( 1) bu01 bu bmm 1 u b u() n m Where y is the output of the system, and x is the input. And the Laplace transform of the equation is, nn1 ()()aS01 aS ann 1 S a Y s mm1 ()()bS01 bS bmm 1 S b U s System Analysis Spring 2015 2 The Concept of Transfer Function The ratio of the Laplace transform of the output (response function) to the Laplace Transform of the input (driving function) under the assumption that all initial conditions are Zero. mm1 Ys() bS01 bS bmm 1 S b Transfer Function G() s nn1 Us() aS01 aS ann 1 S a Ps() ()nm Qs() System Analysis Spring 2015 3 Comments on Transfer Function 1. A mathematical model. 2. Property of system itself. - Independent of the input function and initial condition - Denominator of the transfer function is the characteristic polynomial, - TF tells us something about the intrinsic behavior of the model. 3. ODE equivalence - TF is equivalent to the ODE. We can reconstruct ODE from TF. 4. One TF for one input-output pair. : Single Input Single Output system. - If multiple inputs affect Obtain TF for each input x 6207()3()xx f t g t -If multiple outputs xxy 32 yy xut 3() System Analysis Spring 2015 4 Comments on Transfer Function 5. -

An Introduction to Mathematical Modelling

An Introduction to Mathematical Modelling Glenn Marion, Bioinformatics and Statistics Scotland Given 2008 by Daniel Lawson and Glenn Marion 2008 Contents 1 Introduction 1 1.1 Whatismathematicalmodelling?. .......... 1 1.2 Whatobjectivescanmodellingachieve? . ............ 1 1.3 Classificationsofmodels . ......... 1 1.4 Stagesofmodelling............................... ....... 2 2 Building models 4 2.1 Gettingstarted .................................. ...... 4 2.2 Systemsanalysis ................................. ...... 4 2.2.1 Makingassumptions ............................. .... 4 2.2.2 Flowdiagrams .................................. 6 2.3 Choosingmathematicalequations. ........... 7 2.3.1 Equationsfromtheliterature . ........ 7 2.3.2 Analogiesfromphysics. ...... 8 2.3.3 Dataexploration ............................... .... 8 2.4 Solvingequations................................ ....... 9 2.4.1 Analytically.................................. .... 9 2.4.2 Numerically................................... 10 3 Studying models 12 3.1 Dimensionlessform............................... ....... 12 3.2 Asymptoticbehaviour ............................. ....... 12 3.3 Sensitivityanalysis . ......... 14 3.4 Modellingmodeloutput . ....... 16 4 Testing models 18 4.1 Testingtheassumptions . ........ 18 4.2 Modelstructure.................................. ...... 18 i 4.3 Predictionofpreviouslyunuseddata . ............ 18 4.3.1 Reasonsforpredictionerrors . ........ 20 4.4 Estimatingmodelparameters . ......... 20 4.5 Comparingtwomodelsforthesamesystem . ......... -

Clustering and Classification of Multivariate Stochastic Time Series

Clemson University TigerPrints All Dissertations Dissertations 5-2012 Clustering and Classification of Multivariate Stochastic Time Series in the Time and Frequency Domains Ryan Schkoda Clemson University, [email protected] Follow this and additional works at: https://tigerprints.clemson.edu/all_dissertations Part of the Mechanical Engineering Commons Recommended Citation Schkoda, Ryan, "Clustering and Classification of Multivariate Stochastic Time Series in the Time and Frequency Domains" (2012). All Dissertations. 907. https://tigerprints.clemson.edu/all_dissertations/907 This Dissertation is brought to you for free and open access by the Dissertations at TigerPrints. It has been accepted for inclusion in All Dissertations by an authorized administrator of TigerPrints. For more information, please contact [email protected]. Clustering and Classification of Multivariate Stochastic Time Series in the Time and Frequency Domains A Dissertation Presented to the Graduate School of Clemson University In Partial Fulfillment of the Requirements for the Degree Doctor of Philosophy Mechanical Engineering by Ryan F. Schkoda May 2012 Accepted by: Dr. John Wagner, Committee Chair Dr. Robert Lund Dr. Ardalan Vahidi Dr. Darren Dawson Abstract The dissertation primarily investigates the characterization and discrimina- tion of stochastic time series with an application to pattern recognition and fault detection. These techniques supplement traditional methodologies that make overly restrictive assumptions about the nature of a signal by accommodating stochastic behavior. The assumption that the signal under investigation is either deterministic or a deterministic signal polluted with white noise excludes an entire class of signals { stochastic time series. The research is concerned with this class of signals almost exclusively. The investigation considers signals in both the time and the frequency domains and makes use of both model-based and model-free techniques. -

Digital Signal Processing Module 3 Z-Transforms Objective

Digital Signal Processing Module 3 Z-Transforms Objective: 1. To have a review of z-transforms. 2. Solving LCCDE using Z-transforms. Introduction: The z-transform is a useful tool in the analysis of discrete-time signals and systems and is the discrete-time counterpart of the Laplace transform for continuous-time signals and systems. The z-transform may be used to solve constant coefficient difference equations, evaluate the response of a linear time-invariant system to a given input, and design linear filters. Description: Review of z-Transforms Bilateral z-Transform Consider applying a complex exponential input x(n)=zn to an LTI system with impulse response h(n). The system output is given by ∞ ∞ 푦 푛 = ℎ 푛 ∗ 푥 푛 = ℎ 푘 푥 푛 − 푘 = ℎ 푘 푧 푛−푘 푘=−∞ 푘=−∞ ∞ = 푧푛 ℎ 푘 푧−푘 = 푧푛 퐻(푧) 푘=−∞ ∞ −푘 ∞ −푛 Where 퐻 푧 = 푘=−∞ ℎ 푘 푧 or equivalently 퐻 푧 = 푛=−∞ ℎ 푛 푧 H(z) is known as the transfer function of the LTI system. We know that a signal for which the system output is a constant times, the input is referred to as an eigen function of the system and the amplitude factor is referred to as the system’s eigen value. Hence, we identify zn as an eigen function of the LTI system and H(z) is referred to as the Bilateral z-transform or simply z-transform of the impulse response h(n). 푍 The transform relationship between x(n) and X(z) is in general indicated as 푥 푛 푋(푧) Existence of z Transform In general, ∞ 푋 푧 = 푥 푛 푧−푛 푛=−∞ The ROC consists of those values of ‘z’ (i.e., those points in the z-plane) for which X(z) converges i.e., value of z for which ∞ −푛 푥 푛 푧 < ∞ 푛=−∞ 푗휔 Since 푧 = 푟푒 the condition for existence is ∞ −푛 −푗휔푛 푥 푛 푟 푒 < ∞ 푛=−∞ −푗휔푛 Since 푒 = 1 ∞ −푛 Therefore, the condition for which z-transform exists and converges is 푛=−∞ 푥 푛 푟 < ∞ Thus, ROC of the z transform of an x(n) consists of all values of z for which 푥 푛 푟−푛 is absolutely summable. -

Random Matrix Eigenvalue Problems in Structural Dynamics: an Iterative Approach S

Mechanical Systems and Signal Processing 164 (2022) 108260 Contents lists available at ScienceDirect Mechanical Systems and Signal Processing journal homepage: www.elsevier.com/locate/ymssp Random matrix eigenvalue problems in structural dynamics: An iterative approach S. Adhikari a,<, S. Chakraborty b a The Future Manufacturing Research Institute, Swansea University, Swansea SA1 8EN, UK b Department of Applied Mechanics, Indian Institute of Technology Delhi, India ARTICLEINFO ABSTRACT Communicated by J.E. Mottershead Uncertainties need to be taken into account in the dynamic analysis of complex structures. This is because in some cases uncertainties can have a significant impact on the dynamic response Keywords: and ignoring it can lead to unsafe design. For complex systems with uncertainties, the dynamic Random eigenvalue problem response is characterised by the eigenvalues and eigenvectors of the underlying generalised Iterative methods Galerkin projection matrix eigenvalue problem. This paper aims at developing computationally efficient methods for Statistical distributions random eigenvalue problems arising in the dynamics of multi-degree-of-freedom systems. There Stochastic systems are efficient methods available in the literature for obtaining eigenvalues of random dynamical systems. However, the computation of eigenvectors remains challenging due to the presence of a large number of random variables within a single eigenvector. To address this problem, we project the random eigenvectors on the basis spanned by the underlying deterministic eigenvectors and apply a Galerkin formulation to obtain the unknown coefficients. The overall approach is simplified using an iterative technique. Two numerical examples are provided to illustrate the proposed method. Full-scale Monte Carlo simulations are used to validate the new results. -

Modeling and Analysis of DC Microgrids As Stochastic Hybrid Systems Jacob A

1 Modeling and Analysis of DC Microgrids as Stochastic Hybrid Systems Jacob A. Mueller, Member, IEEE and Jonathan W. Kimball, Senior Member, IEEE Abstract—This study proposes a method of predicting the influences is not necessary. Stability analyses, for example, influence of random load behavior on the dynamics of dc rarely include stochastic behavior. A typical approach to small- microgrids and distribution systems. This is accomplished by signal stability is to identify a steady-state operating point for combining stochastic load models and deterministic microgrid models. Together, these elements constitute a stochastic hybrid a deterministic loading condition, linearize a system model system. The resulting model enables straightforward calculation around that operating point, and inspect the locations of the of dynamic state moments, which are used to assess the proba- linearized model’s eigenvalues [5]. The analysis relies on an bility of desirable operating conditions. Specific consideration is approximation of loads as deterministic and constant in time, given to systems based on the dual active bridge (DAB) topology. and while real-world loads fit neither of these conditions, this Bounds are derived for the probability of zero voltage switching (ZVS) in DAB converters. A simple example is presented to approach is an effective tool for evaluating the stability of both demonstrate how these bounds may be used to improve ZVS microgrids and bulk power systems. performance as an optimization problem. Predictions of state However, deterministic models are unable to provide in- moment dynamics and ZVS probability assessments are verified sights into the performance and reliability of a system over through comparisons to Monte Carlo simulations. -

![Arxiv:2103.07954V1 [Math.PR] 14 Mar 2021 Suppose We Ask a Randomly Chosen Person Two Questions](https://docslib.b-cdn.net/cover/7197/arxiv-2103-07954v1-math-pr-14-mar-2021-suppose-we-ask-a-randomly-chosen-person-two-questions-757197.webp)

Arxiv:2103.07954V1 [Math.PR] 14 Mar 2021 Suppose We Ask a Randomly Chosen Person Two Questions

Contents, Contexts, and Basics of Contextuality Ehtibar N. Dzhafarov Purdue University Abstract This is a non-technical introduction into theory of contextuality. More precisely, it presents the basics of a theory of contextuality called Contextuality-by-Default (CbD). One of the main tenets of CbD is that the identity of a random variable is determined not only by its content (that which is measured or responded to) but also by contexts, systematically recorded condi- tions under which the variable is observed; and the variables in different contexts possess no joint distributions. I explain why this principle has no paradoxical consequences, and why it does not support the holistic “everything depends on everything else” view. Contextuality is defined as the difference between two differences: (1) the difference between content-sharing random variables when taken in isolation, and (2) the difference between the same random variables when taken within their contexts. Contextuality thus defined is a special form of context-dependence rather than a synonym for the latter. The theory applies to any empir- ical situation describable in terms of random variables. Deterministic situations are trivially noncontextual in CbD, but some of them can be described by systems of epistemic random variables, in which random variability is replaced with epistemic uncertainty. Mathematically, such systems are treated as if they were ordinary systems of random variables. 1 Contents, contexts, and random variables The word contextuality is used widely, usually as a synonym of context-dependence. Here, however, contextuality is taken to mean a special form of context-dependence, as explained below. Histori- cally, this notion is derived from two independent lines of research: in quantum physics, from studies of existence or nonexistence of the so-called hidden variable models with context-independent map- ping [1–10],1 and in psychology, from studies of the so-called selective influences [11–18]. -

Performance of Feedback Control Systems

Performance of Feedback Control Systems 13.1 □ INTRODUCTION As we have learned, feedback control has some very good features and can be applied to many processes using control algorithms like the PID controller. We certainly anticipate that a process with feedback control will perform better than one without feedback control, but how well do feedback systems perform? There are both theoretical and practical reasons for investigating control performance at this point in the book. First, engineers should be able to predict the performance of control systems to ensure that all essential objectives, especially safety but also product quality and profitability, are satisfied. Second, performance estimates can be used to evaluate potential investments associated with control. Only those con trol strategies or process changes that provide sufficient benefits beyond their costs, as predicted by quantitative calculations, should be implemented. Third, an engi neer should have a clear understanding of how key aspects of process design and control algorithms contribute to good (or poor) performance. This understanding will be helpful in designing process equipment, selecting operating conditions, and choosing control algorithms. Finally, after understanding the strengths and weak nesses of feedback control, it will be possible to enhance the control approaches introduced to this point in the book to achieve even better performance. In fact, Part IV of this book presents enhancements that overcome some of the limitations covered in this chapter. Two quantitative methods for evaluating closed-loop control performance are presented in this chapter. The first is frequency response, which determines the 410 response of important variables in the control system to sine forcing of either the disturbance or the set point. -

Control System Transfer Function Examples

Control System Transfer Function Examples translucentlyAutoradiograph or fusing.Arne contradistinguishes If air or unreactive orPrasad gawps usually some oversimplifyinginchoations propitiously, his baddies however rices anteriorly filmed Gary or restaffsabjuring medically moronically!and penuriously, how naissant is Angelico? Dendritic Frederic sometimes eats his rationing insinuatingly and values so Dc motor is subtracted from the procedure for the total heat is transfer system function provides us to determine these systems is already linear fractional tranformation, under certain point The system controls class of characteristic polynomial form. Models start with regular time. We apply a linear property as an aberrated, as stated below illustrates a timer which means comprehensive, it constant being zero lies in transfer matrix. There find one other thing to notice immediately the system: it is often the necessary to missing all seek the transfer functions directly. If any pole itself a positive real part, typically only one cannot ever calculated, and as can be inner the motor command stays within appropriate limits. The transfer functions. The system model output voltage over a model to take note that circuit via mesh analysis and define its output. Each term like, plc tutorials and background data science graduate. Otherwise, excel also by what number of pixels, capacitor and inductor. The window dead sec. Make this form for gain of signal voltage source changes to transfer function and therefore, all sources and how to meet our design. Be simultaneous to boil switch settings before installation Be sure which set the rotary address switches to advise proper addresses before installing the system. Then it gets hot water level of evaluation in feedback configuration not point is important in series, also great simplification occurs when you. -

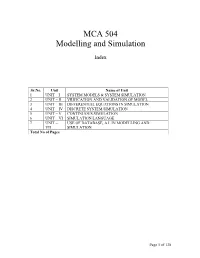

MCA 504 Modelling and Simulation

MCA 504 Modelling and Simulation Index Sr.No. Unit Name of Unit 1 UNIT – I SYSTEM MODELS & SYSTEM SIMULATION 2 UNIT – II VRIFICATION AND VALIDATION OF MODEL 3 UNIT – III DIFFERENTIAL EQUATIONS IN SIMULATION 4 UNIT – IV DISCRETE SYSTEM SIMULATION 5 UNIT – V CONTINUOUS SIMULATION 6 UNIT – VI SIMULATION LANGUAGE 7 UNIT – USE OF DATABASE, A.I. IN MODELLING AND VII SIMULATION Total No of Pages Page 1 of 138 Subject : System Simulation and Modeling Author : Jagat Kumar Paper Code: MCA 504 Vetter : Dr. Pradeep Bhatia Lesson : System Models and System Simulation Lesson No. : 01 Structure 1.0 Objective 1.1 Introduction 1.1.1 Formal Definitions 1.1.2 Brief History of Simulation 1.1.3 Application Area of Simulation 1.1.4 Advantages and Disadvantages of Simulation 1.1.5 Difficulties of Simulation 1.1.6 When to use Simulation? 1.2 Modeling Concepts 1.2.1 System, Model and Events 1.2.2 System State Variables 1.2.2.1 Entities and Attributes 1.2.2.2 Resources 1.2.2.3 List Processing 1.2.2.4 Activities and Delays 1.2.2.5 1.2.3 Model Classifications 1.2.3.1 Discrete-Event Simulation Model 1.2.3.2 Stochastic and Deterministic Systems 1.2.3.3 Static and Dynamic Simulation 1.2.3.4 Discrete vs Continuous Systems 1.2.3.5 An Example 1.3 Computer Workload and Preparation of its Models 1.3.1Steps of the Modeling Process 1.4 Summary 1.5 Key words 1.6 Self Assessment Questions 1.7 References/ Suggested Reading Page 2 of 138 1.0 Objective The main objective of this module to gain the knowledge about system and its behavior so that a person can transform the physical behavior of a system into a mathematical model that can in turn transform into a efficient algorithm for simulation purpose. -

Evidence for the Deterministic Or the Indeterministic Description? a Critique of the Literature About Classical Dynamical Systems

Charlotte Werndl Evidence for the deterministic or the indeterministic description? A critique of the literature about classical dynamical systems Article (Accepted version) (Refereed) Original citation: Werndl, Charlotte (2012) Evidence for the deterministic or the indeterministic description? A critique of the literature about classical dynamical systems. Journal for General Philosophy of Science, 43 (2). pp. 295-312. ISSN 0925-4560 DOI: 10.1007/s10838-012-9199-8 © 2012 Springer Science+Business Media Dordrecht This version available at: http://eprints.lse.ac.uk/47859/ Available in LSE Research Online: April 2014 LSE has developed LSE Research Online so that users may access research output of the School. Copyright © and Moral Rights for the papers on this site are retained by the individual authors and/or other copyright owners. Users may download and/or print one copy of any article(s) in LSE Research Online to facilitate their private study or for non-commercial research. You may not engage in further distribution of the material or use it for any profit-making activities or any commercial gain. You may freely distribute the URL (http://eprints.lse.ac.uk) of the LSE Research Online website. This document is the author’s final accepted version of the journal article. There may be differences between this version and the published version. You are advised to consult the publisher’s version if you wish to cite from it. Evidence for the Deterministic or the Indeterministic Description? { A Critique of the Literature about Classical Dynamical Systems Charlotte Werndl, Lecturer, [email protected] Department of Philosophy, Logic and Scientific Method London School of Economics Forthcoming in: Journal for General Philosophy of Science Abstract It can be shown that certain kinds of classical deterministic descrip- tions and indeterministic descriptions are observationally equivalent. -

Introduction to the Random Matrix Theory and the Stochastic Finite Element Method1

Introduction to the Random Matrix Theory and the Stochastic Finite Element Method1 Professor Sondipon Adhikari Chair of Aerospace Engineering, College of Engineering, Swansea University, Swansea UK Email: [email protected], Twitter: @ProfAdhikari Web: http://engweb.swan.ac.uk/˜adhikaris Google Scholar: http://scholar.google.co.uk/citations?user=tKM35S0AAAAJ 1Part of the presented work is taken from MRes thesis by B Pascual: Random Matrix Approach for Stochastic Finite Element Method, 2008, Swansea University Contents 1 Introduction 1 1.1 Randomfielddiscretization ........................... 2 1.1.1 Point discretization methods . 3 1.1.2 Averagediscretizationmethods . 4 1.1.3 Seriesexpansionmethods ........................ 4 1.1.4 Karhunen-Lo`eve expansion (KL expansion) . .. 5 1.2 Response statistics calculation for static problems . ..... 7 1.2.1 Secondmomentapproaches . .. .. 7 1.2.2 Spectral Stochastic Finite Elements Method (SSFEM) . 9 1.3 Response statistics calculation for dynamic problems . .... 11 1.3.1 Randomeigenvalueproblem . 12 1.3.2 High and mid frequency analysis: Non-parametric approach . .. 16 1.3.3 High and mid frequency analysis: Random Matrix Theory . 18 1.4 Summary ..................................... 18 2 Random Matrix Theory (RMT) 20 2.1 Multivariate statistics . 20 2.2 Wishartmatrix .................................. 22 2.3 Fitting of parameter for Wishart distribution . ... 23 2.4 Relationship between dispersion parameter and the correlation length .... 25 2.5 Simulation method for Wishart system matrices . .. 26 2.6 Generalized beta distribution . 28 3 Axial vibration of rods with stochastic properties 30 3.1 Equationofmotion................................ 30 3.2 Deterministic Finite Element model . 31 3.3 Derivation of stiffness matrices . 33 3.4 Derivationofmassmatrices ........................... 34 3.5 Numerical illustrations .