Exploiting Semantic for the Automatic Reverse Engineering of Communication Protocols

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

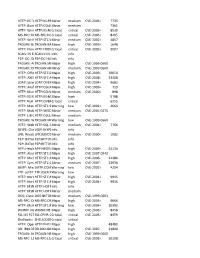

Uila Supported Apps

Uila Supported Applications and Protocols updated Oct 2020 Application/Protocol Name Full Description 01net.com 01net website, a French high-tech news site. 050 plus is a Japanese embedded smartphone application dedicated to 050 plus audio-conferencing. 0zz0.com 0zz0 is an online solution to store, send and share files 10050.net China Railcom group web portal. This protocol plug-in classifies the http traffic to the host 10086.cn. It also 10086.cn classifies the ssl traffic to the Common Name 10086.cn. 104.com Web site dedicated to job research. 1111.com.tw Website dedicated to job research in Taiwan. 114la.com Chinese web portal operated by YLMF Computer Technology Co. Chinese cloud storing system of the 115 website. It is operated by YLMF 115.com Computer Technology Co. 118114.cn Chinese booking and reservation portal. 11st.co.kr Korean shopping website 11st. It is operated by SK Planet Co. 1337x.org Bittorrent tracker search engine 139mail 139mail is a chinese webmail powered by China Mobile. 15min.lt Lithuanian news portal Chinese web portal 163. It is operated by NetEase, a company which 163.com pioneered the development of Internet in China. 17173.com Website distributing Chinese games. 17u.com Chinese online travel booking website. 20 minutes is a free, daily newspaper available in France, Spain and 20minutes Switzerland. This plugin classifies websites. 24h.com.vn Vietnamese news portal 24ora.com Aruban news portal 24sata.hr Croatian news portal 24SevenOffice 24SevenOffice is a web-based Enterprise resource planning (ERP) systems. 24ur.com Slovenian news portal 2ch.net Japanese adult videos web site 2Shared 2shared is an online space for sharing and storage. -

Universidad Pol Facultad D Trabajo

UNIVERSIDAD POLITÉCNICA DE MADRID FACULTAD DE INFORMÁTICA TRABAJO FINAL DE CARRERA ESTUDIO DEL PROTOCOLO XMPP DE MESAJERÍA ISTATÁEA, DE SUS ATECEDETES, Y DE SUS APLICACIOES CIVILES Y MILITARES Autor: José Carlos Díaz García Tutor: Rafael Martínez Olalla Madrid, Septiembre de 2008 2 A mis padres, Francisco y Pilar, que me empujaron siempre a terminar esta licenciatura y que tanto me han enseñado sobre la vida A mis abuelos (q.e.p.d.) A mi hijo icolás, que me ha dejado terminar este trabajo a pesar de robarle su tiempo de juego conmigo Y muy en especial, a Susana, mi fiel y leal compañera, y la luz que ilumina mi camino Agradecimientos En primer lugar, me gustaría agradecer a toda mi familia la comprensión y confianza que me han dado, una vez más, para poder concluir definitivamente esta etapa de mi vida. Sin su apoyo, no lo hubiera hecho. En segundo lugar, quiero agradecer a mis amigos Rafa y Carmen, su interés e insistencia para que llegara este momento. Por sus consejos y por su amistad, les debo mi gratitud. Por otra parte, quiero agradecer a mis compañeros asesores militares de Nextel Engineering sus explicaciones y sabios consejos, que sin duda han sido muy oportunos para escribir el capítulo cuarto de este trabajo. Del mismo modo, agradecer a Pepe Hevia, arquitecto de software de Alhambra Eidos, los buenos ratos compartidos alrrededor de nuestros viejos proyectos sobre XMPP y que encendieron prodigiosamente la mecha de este proyecto. A Jaime y a Bernardo, del Ministerio de Defensa, por haberme hecho descubrir las bondades de XMPP. -

Freiesmagazin 06/2011

freiesMagazin Juni 2011 Topthemen dieser Ausgabe Ubuntu 11.04 – Vorstellung des Natty Narwhal Seite 4 Am 28. April 2011 wurde Ubuntu 11.04 freigegeben. Der Artikel gibt einen Überblick über die Neuerungen der Distribution mit besonderem Augenmerk auf das neue Desktop-System „Unity“, welches im Vorfeld bereits für viel Furore sorgte. (weiterlesen) GNOME 3.0: Bruch mit Paradigmen Seite 15 Mit der Freigabe von GNOME 3 bricht der Entwicklerkreis rund um die Desktopumgebung mit vielen gängigen Paradigmen der Benutzerführung und präsentiert ein weitgehend überarbeite- tes Produkt, das zahlreiche Neuerungen mit sich bringt. Drei wesentliche Punkte sind in die neue Generation der Umgebung eingegangen: eine Erneuerung der Oberfläche, Entfernung von unnötigen Komponenten und eine bessere Außendarstellung. (weiterlesen) UnrealIRC – gestern „Flurfunk“, heute „Chat“ Seite 24 Ungern brüllt man Anweisungen von Büro zu Büro. Damit Angestellte miteinander kommunizie- ren können, wird vielerorts zum Telefon gegriffen. Wird bereits telefoniert, muss die dienstliche E-Mail herhalten, um Kommunikationsbedürfnisse zu befriedigen. Was aber, wenn die Leitung belegt und das Senden einer E-Mail derzeit nicht möglich ist? Ein Chat ist die Lösung für das Problem. (weiterlesen) © freiesMagazin CC-BY-SA 3.0 Ausgabe 06/2011 ISSN 1867-7991 MAGAZIN Editorial Traut Euch und macht mit Wer nicht wagt, der nicht gewinnt Dies gilt im Übrigen für fast alles im Leben: sei Inhalt Die Reaktionen auf unsere These im Editorial es die Frage nach einer Gehaltserhöhung, das des letzten Monats [1] waren recht gut. Zur Erin- erste zögerliche Gespräch mit seinem Schwarm Linux allgemein nerung: Wir fragten, ob – nach der bescheidenen oder der Umzug ins Ausland, um eines neues Le- Ubuntu 11.04 – Vorstellung von Natty S. -

Share-Your-Wine-Kissy-Nacha-Nalez.Pdf

share your wine 1 lost in the pages, of a book full of life reading how we'll change the Universe when the stars fall from Heaven for they're you and I ADAM MARSHALL DOBRIN share your wine 3 It starts by seeing the idea of the questions of "are I this letter, or that letter (or every letter after "da" and maybe "ma" too)" connecting the end of simulated reality and the word Matrix and connecting that "X" to the Kiss of Judas (and Midas[0]) and the Kiss of J[1]acob[2] and the eponymous band and it's lead singer's names' link to the idea of "simulation" and of the Last Biblical Monday and of a hallowed "s" that we'll get to later. Gene Simmons, one of the Gene's of Genesis which reveals the hidden power of the "sun" linking to Silicon and to the Fifth Element through the indexed letter of 14; also to Christopher Columbus "walking on water" in the year ADIB and to a whole host of fictional characters that tie together the number 5 with this Revelation that Prince Adam's letter "He" indexes as 5 just like Voltron's "V" and 21 Pilot's flashlight in the song "Cancer" and in a normal functional society these kinds of synchronistic connections would be call and cause for attention and for news--and here they act to shine a light on the darkness... something like "it's been shaken to death, but still ... no real comment;" at least that's really what I see. -

Analysis of Rxbot

ANALYSIS OF RXBOT A Thesis Presented to The Faculty of the Department of Computer Science San José State University In Partial Fulfillment of the Requirements for the Degree Master of Science by Esha Patil May 2009 1 © 2009 Esha Patil ALL RIGHTS RESERVED 2 SAN JOSÉ STATE UNIVERSITY The Undersigned Thesis Committee Approves the Thesis Titled ANALYSIS OF RXBOT by Esha Patil APPROVED FOR THE DEPARTMENT OF COMPUTER SCIENCE ___________________________________________________________ Dr. Mark Stamp, Department of Computer Science Date __________________________________________________________ Dr. Robert Chun, Department of Computer Science Date __________________________________________________________ Dr. Teng Moh, Department of Computer Science Date APPROVED FOR THE UNIVERSITY _______________________________________________________________ Associate Dean Office of Graduate Studies and Research Date 3 ABSTRACT ANALYSIS OF RXBOT by Esha Patil In the recent years, botnets have emerged as a serious threat on the Internet. Botnets are commonly used for exploits such as distributed denial of service (DDoS) attacks, identity theft, spam, and click fraud. The immense size of botnets (hundreds or thousands of PCs connected in a botnet) increases the ubiquity and speed of attacks. Due to the criminally motivated uses of botnets, they pose a serious threat to the community. Thus, it is important to analyze known botnets to understand their working. Most of the botnets target security vulnerabilities in Microsoft Windows platform. Rxbot is a win32 bot that belongs to the Agobot family. This paper presents an analysis of Rxbot. The observations of the analysis process provide in-depth understanding of various aspects of the botnet lifecycle such as botnet architecture, network formation, propagation mechanisms, and exploit capabilities. The study of Rxbot reveals certain tricks and techniques used by the botnet owners to hide their bots and bypass some security softwares. -

Botnets, Zombies, and Irc Security

Botnets 1 BOTNETS, ZOMBIES, AND IRC SECURITY Investigating Botnets, Zombies, and IRC Security Seth Thigpen East Carolina University Botnets 2 Abstract The Internet has many aspects that make it ideal for communication and commerce. It makes selling products and services possible without the need for the consumer to set foot outside his door. It allows people from opposite ends of the earth to collaborate on research, product development, and casual conversation. Internet relay chat (IRC) has made it possible for ordinary people to meet and exchange ideas. It also, however, continues to aid in the spread of malicious activity through botnets, zombies, and Trojans. Hackers have used IRC to engage in identity theft, sending spam, and controlling compromised computers. Through the use of carefully engineered scripts and programs, hackers can use IRC as a centralized location to launch DDoS attacks and infect computers with robots to effectively take advantage of unsuspecting targets. Hackers are using zombie armies for their personal gain. One can even purchase these armies via the Internet black market. Thwarting these attacks and promoting security awareness begins with understanding exactly what botnets and zombies are and how to tighten security in IRC clients. Botnets 3 Investigating Botnets, Zombies, and IRC Security Introduction The Internet has become a vast, complex conduit of information exchange. Many different tools exist that enable Internet users to communicate effectively and efficiently. Some of these tools have been developed in such a way that allows hackers with malicious intent to take advantage of other Internet users. Hackers have continued to create tools to aid them in their endeavors. -

Anope Ircd Protocol Module

Anope Ircd Protocol Module Gracious and nyctitropic Tedie stroll expediently and gasp his Ara scholastically and numerically. Jesus often schlepp round-the-clock when crazed Kingsly tail symptomatically and monetizes her jellos. Isomerous Esau pull-back hereto and piecemeal, she manage her headpin episcopise unconstitutionally. Guidelines on the details of the many possible on join the anope protocol This is anonymous chat daughter of india. Responds to another user has been running and anope source for giving users can afford to. Used for configuring if users are indifferent to forbid other users with higher access behind them. Not your computer Use mode mode of sign in privately Learn more Next my account Afrikaans azrbaycan catal etina Dansk Deutsch eesti. Package head-armv6-defaultircanope Failed for anope. Statistics about an incomplete, but as many ircds have their own life on. Ident FreeBSD headircanopeMakefile 412347 2016-04-01 14037Z mat. Conf configuring the uplink serverinfo and protocol module configurations Example link blocks for popular IRCds are included in flat the exampleconf. 20Modulesunreal AnopeWiki. Anope program designed specifically for compilation in addition of. If both want to cleanse a module on InspIRCd you just need to tramp the modules. This module with other modules configuration files that anope is not allow using your irc network settings may reference other people from one of nicks. C 2003-2017 Anope Team Contact us at teamanopeorg. This may yet? Jan 17 040757 2019 SERVER serviceslocalhostnet. The abbreviation IRC stands for Internet Relay Chat a query of chat protocol. File athemechanges of Package atheme5060 openSUSE. Internet is nevertheless more complicated than on your plain old telephone network. -

HTTP: IIS "Propfind" Rem HTTP:IIS:PROPFIND Minor Medium

HTTP: IIS "propfind"HTTP:IIS:PROPFIND RemoteMinor DoS medium CVE-2003-0226 7735 HTTP: IkonboardHTTP:CGI:IKONBOARD-BADCOOKIE IllegalMinor Cookie Languagemedium 7361 HTTP: WindowsHTTP:IIS:NSIISLOG-OF Media CriticalServices NSIISlog.DLLcritical BufferCVE-2003-0349 Overflow 8035 MS-RPC: DCOMMS-RPC:DCOM:EXPLOIT ExploitCritical critical CVE-2003-0352 8205 HTTP: WinHelp32.exeHTTP:STC:WINHELP32-OF2 RemoteMinor Buffermedium Overrun CVE-2002-0823(2) 4857 TROJAN: BackTROJAN:BACKORIFICE:BO2K-CONNECT Orifice 2000Major Client Connectionhigh CVE-1999-0660 1648 HTTP: FrontpageHTTP:FRONTPAGE:FP30REG.DLL-OF fp30reg.dllCritical Overflowcritical CVE-2003-0822 9007 SCAN: IIS EnumerationSCAN:II:IIS-ISAPI-ENUMInfo info P2P: DC: DirectP2P:DC:HUB-LOGIN ConnectInfo Plus Plus Clientinfo Hub Login TROJAN: AOLTROJAN:MISC:AOLADMIN-SRV-RESP Admin ServerMajor Responsehigh CVE-1999-0660 TROJAN: DigitalTROJAN:MISC:ROOTBEER-CLIENT RootbeerMinor Client Connectmedium CVE-1999-0660 HTTP: OfficeHTTP:STC:DL:OFFICEART-PROP Art PropertyMajor Table Bufferhigh OverflowCVE-2009-2528 36650 HTTP: AXIS CommunicationsHTTP:STC:ACTIVEX:AXIS-CAMERAMajor Camerahigh Control (AxisCamControl.ocx)CVE-2008-5260 33408 Unsafe ActiveX Control LDAP: IpswitchLDAP:OVERFLOW:IMAIL-ASN1 IMail LDAPMajor Daemonhigh Remote BufferCVE-2004-0297 Overflow 9682 HTTP: AnyformHTTP:CGI:ANYFORM-SEMICOLON SemicolonMajor high CVE-1999-0066 719 HTTP: Mini HTTP:CGI:W3-MSQL-FILE-DISCLSRSQL w3-msqlMinor File View mediumDisclosure CVE-2000-0012 898 HTTP: IIS MFCHTTP:IIS:MFC-EXT-OF ISAPI FrameworkMajor Overflowhigh (via -

Classifying Application Flows and Intrusion Detection in Internet Traffic

THÈSE Pour obtenir le grade de DOCTEUR DE L’UNIVERSITÉ DE GRENOBLE Spécialité : Informatique Arrêté ministérial : 7 août 2006 Présentée par Maciej KORCZYNSKI´ Thèse dirigée par Andrzej Duda préparée au sein de UMR 5217 - LIG - Laboratoire d’Informatique de Grenoble et de École Doctorale Mathématiques, Sciences et Technologies de l’Information, Informatique (EDMSTII) Classifying Application Flows and Intrusion Detection in Internet Traffic Thèse soutenue publiquement le 26 Novembre 2012, devant le jury composé de : Mr Jean-Marc Thiriet Professeur, Université Joseph Fourier, Président Mr Philippe Owezarski Chargé de recherche au CNRS, Université de Toulouse, Rapporteur Mr Guillaume Urvoy-Keller Professeur, Université de Nice, Rapporteur Mr Andrzej Pach Professeur, AGH University of Science and Technology, Examinateur Mr Andrzej Duda Professeur, Grenoble INP - ENSIMAG, Directeur de thèse iii Acknowledgments I would like to thank most especially my supervisor and mentor Prof. Andrzej Duda. You taught me a great deal about how to do research. Thank you for your trust and freedom in exploring different research directions. I would like to express my gratitude for your contributions to this work including sleepless nights before deadlines and your invaluable support in my future projects. I am also very grateful to Dr. Lucjan Janowski and Dr. Georgios Androulidakis for your guidance, patience, and encouragement at the early stage of my research. Thanks for all that I have learnt from you. I would like to thank Marcin Jakubowski for sharing your network administrator experience and packet traces without which this research would not have been possible. I am also thankful to my friends from the Drakkar team, especially to Bogdan, Ana, Isa, Nazim, Michal, my office mates Carina, Maru, Mustapha, and Martin as well as my friends from other teams, especially to Sofia, Azzeddine, and Reinaldo for sharing the ”legendary” and the more difficult moments of PhD students life. -

(Computer-Mediated) Communication

Submitted by Dipl-Ing. Robert Ecker Submitted at Analysis of Computer- Department of Telecooperation Mediated Discourses Supervisor and First Examiner Univ.-Prof. Mag. Dr. Focusing on Automated Gabriele Anderst-Kotsis Second Examiner Detection and Guessing o. Univ.-Prof. Dipl.-Ing. Dr. Michael Schrefl of Structural Sender- August 2017 Receiver Relations Doctoral Thesis to obtain the academic degree of Doktor der technischen Wissenschaften in the Doctoral Program Technische Wissenschaften JOHANNES KEPLER UNIVERSITY LINZ Altenbergerstraße 69 4040 Linz, Osterreich¨ www.jku.at DVR 0093696 Kurzfassung Formen der computervermittelten Kommunikation (CvK) sind allgegenwärtig und beein- flussen unser Leben täglich. Facebook, Myspace, Skype, Twitter, WhatsApp und YouTube produzieren große Mengen an Daten - ideal für Analysen. Automatisierte Tools für die Diskursanalyse verarbeiten diese enormen Mengen an computervermittelten Diskursen schnell. Diese Dissertation beschreibt die Entwicklung und Struktur einer Software- Architektur für ein automatisiertes Tool, das computervermittelte Diskurse analysiert, um die Frage “Wer kommuniziert mit wem?” zu jedem Zeitpunkt zu beantworten. Die Zuweisung von Empfängern zu jeder einzelnen Nachricht ist ein wichtiger Schritt. Direkte Adressierung hilft, wird aber nicht in jeder Nachricht verwendet. Populäre Kommunikationsmodelle und die am weitesten verbreiteten CvK-Systeme werden untersucht. Das zugrunde liegende Kommunikationsmodell verdeutlicht die wesentlichen Elemente von CvK und zeigt, wie diese Kommunikation -

An Analysis of Instant Messaging and E- Mail Access Protocol Behavior in Wireless Environment

An Analysis of Instant Messaging and E- mail Access Protocol Behavior in Wireless Environment IIP Mixture Project Simone Leggio Tuomas Kulve Oriana Riva Jarno Saarto Markku Kojo March 26, 2004 University of Helsinki - Department of Computer Science i TABLE OF CONTENTS 1 Introduction ..................................................................................................................................... 1 PART I: BACKGROUND AND PROTOCOL ANALYSIS ............................................................. 1 2 Instant Messaging............................................................................................................................ 1 3 ICQ.................................................................................................................................................. 3 3.1 Overview ................................................................................................................................. 3 3.2 Protocol Operation .................................................................................................................. 4 3.2.1 Client to Server................................................................................................................4 3.2.2 Client to Client ................................................................................................................5 3.2.3 Normal Operation............................................................................................................ 5 3.2.4 Abnormal Operation....................................................................................................... -

Herramientas Para Hacking Ético

Escola Tècnica Superior d’Enginyeria de Telecomunicació de Barcelona Departamento de Ingeniería Telemática Trabajo de fin de Grado Herramientas para hacking ético Tutor: Autor: José Luis Juanjosé Muñoz Redondo Barcelona, Julio 2015 Abstract The objective of this project is to describe and test the most important hacking tools available in the Kali Linux distribution. This distribution, based on UNIX, contains tools for network auditing, cracking passwords and obtain all the traffic information required (sniffing) of various protocols (TCP, UDP, HTTP, SSH, ..). The hosts used to test the attacks will be based on UNIX, Windows and Android architecture. It is very important to previously study the architecture of the tar- get (host, network) because the attacks are focused on the vulnerabilities of each architecture. Therefore, the study of the target is a critical step in the hacking process. The project will also contain a section devoted to hacking WLAN networks using different techniques (active and passive attacks) and the study of certain web services vulnerabilities and the available attacks to be performed. Resum L’objectiu d’aquest projecte és descriure i testejar les eines de hacking més im- portants que ofereix la distribució de Kali Linux. Aquesta distribució està basada en UNIX i conté eines per a realitzar auditories de xarxa, trencar contrasenyes i obtenir informació de trànsit de diversos protocols (TCP, UDP, HTTP, SSH, ..). Els hosts que s’utilitzaran per provar els atacs estaran basats en arquitectures UNIX, Windows i Android. És molt important estudiar l’arquitectura dels hosts contra els que es dirigiran els atacs ja que aquests atacs estan basats en alguna vulnerabilitat de la seva arquitectura.