Efficient Exploration with Failure Ratio for Deep Reinforcement Learning

Load more

Recommended publications

-

Songs by Title Karaoke Night with the Patman

Songs By Title Karaoke Night with the Patman Title Versions Title Versions 10 Years 3 Libras Wasteland SC Perfect Circle SI 10,000 Maniacs 3 Of Hearts Because The Night SC Love Is Enough SC Candy Everybody Wants DK 30 Seconds To Mars More Than This SC Kill SC These Are The Days SC 311 Trouble Me SC All Mixed Up SC 100 Proof Aged In Soul Don't Tread On Me SC Somebody's Been Sleeping SC Down SC 10CC Love Song SC I'm Not In Love DK You Wouldn't Believe SC Things We Do For Love SC 38 Special 112 Back Where You Belong SI Come See Me SC Caught Up In You SC Dance With Me SC Hold On Loosely AH It's Over Now SC If I'd Been The One SC Only You SC Rockin' Onto The Night SC Peaches And Cream SC Second Chance SC U Already Know SC Teacher, Teacher SC 12 Gauge Wild Eyed Southern Boys SC Dunkie Butt SC 3LW 1910 Fruitgum Co. No More (Baby I'm A Do Right) SC 1, 2, 3 Redlight SC 3T Simon Says DK Anything SC 1975 Tease Me SC The Sound SI 4 Non Blondes 2 Live Crew What's Up DK Doo Wah Diddy SC 4 P.M. Me So Horny SC Lay Down Your Love SC We Want Some Pussy SC Sukiyaki DK 2 Pac 4 Runner California Love (Original Version) SC Ripples SC Changes SC That Was Him SC Thugz Mansion SC 42nd Street 20 Fingers 42nd Street Song SC Short Dick Man SC We're In The Money SC 3 Doors Down 5 Seconds Of Summer Away From The Sun SC Amnesia SI Be Like That SC She Looks So Perfect SI Behind Those Eyes SC 5 Stairsteps Duck & Run SC Ooh Child SC Here By Me CB 50 Cent Here Without You CB Disco Inferno SC Kryptonite SC If I Can't SC Let Me Go SC In Da Club HT Live For Today SC P.I.M.P. -

International Dinner Celebrates Latin American Culture Come to the Event

VOLUME82, ISSUE9 “EDUCATIONFOR SERVICE” MARCH31,2004 E New members Knitting fad join board hits campus. of trustees. See Page 4. u N I V E K S I T Y 0 F 1 N D I A N A P 0 I, I S See Page 3. 1400 EASTHANNA AVENUE INDIANAPOLIS, IN 46227 H 2004 PRESIDENTIAL CAMPAIGN Woodrow Wilson scholar speaks on presidential campaign “The election will be very close. or Shearer thinks the Democrats will something will happen that will tip it,“ iicknowledgc that there are dangerous Shearer predicted. people, but they will question Bush for Shearer feels that voter turnout among attaching Saddam Hussein and Iraq even college students will increase from though they were not part of the Al- previous elections because many people Qaeda connection. are very concerned with the current state “I alwap hope with a program like of the nation. this that we help them (students and Ayres said he has not seen a high level pub1ic)thinkabotit issuesandmake better ofpolitical interest around the university, judgments.” Anderson said. “The idea is but he feels that will increase during the not to tell the students or public what to few months before the election. be. but just to expose them to ideas. I “In general, I think there’s some hope students m,ould go away thinking There’s not a lot. I about what they heard.” don’t see a lot of knowledge. I see a fair Anderson :ind Ayres hope the amount of knowledge about Indiana and university \+ill continue its connection what’s here,” Ayres said. -

United Nations Force Headquarters Handbook

United Nations Force Headquarters Handbook UNITED NATIONS FORCE HEADQUARTERS HANDBOOK November 2014 United Nations Force Headquarters Handbook Foreword The United Nations Force Headquarters Handbook aims at providing information that will contribute to the understanding of the functioning of the Force HQ in a United Nations field mission to include organization, management and working of Military Component activities in the field. The information contained in this Handbook will be of particular interest to the Head of Military Component I Force Commander, Deputy Force Commander and Force Chief of Staff. The information, however, would also be of value to all military staff in the Force Headquarters as well as providing greater awareness to the Mission Leadership Team on the organization, role and responsibilities of a Force Headquarters. Furthermore, it will facilitate systematic military planning and appropriate selection of the commanders and staff by the Department of Peacekeeping Operations. Since the launching of the first United Nations peacekeeping operations, we have collectively and systematically gained peacekeeping expertise through lessons learnt and best practices of our peacekeepers. It is important that these experiences are harnessed for the benefit of current and future generation of peacekeepers in providing appropriate and clear guidance for effective conduct of peacekeeping operations. Peacekeeping operations have evolved to adapt and adjust to hostile environments, emergence of asymmetric threats and complex operational challenges that require a concerted multidimensional approach and credible response mechanisms to keep the peace process on track. The Military Component, as a main stay of a United Nations peacekeeping mission plays a vital and pivotal role in protecting, preserving and facilitating a safe, secure and stable environment for all other components and stakeholders to function effectively. -

United Nations Force Headquarters Handbook

United Nations Force Headquarters Handbook UNITED NATIONS FORCE HEADQUARTERS HANDBOOK November 2014 United Nations Force Headquarters Handbook Foreword The United Nations Force Headquarters Handbook aims at providing information that will contribute to the understanding of the functioning of the Force HQ in a United Nations field mission to include organization, management and working of Military Component activities in the field. The information contained in this Handbook will be of particular interest to the Head of Military Component I Force Commander, Deputy Force Commander and Force Chief of Staff. The information, however, would also be of value to all military staff in the Force Headquarters as well as providing greater awareness to the Mission Leadership Team on the organization, role and responsibilities of a Force Headquarters. Furthermore, it will facilitate systematic military planning and appropriate selection of the commanders and staff by the Department of Peacekeeping Operations. Since the launching of the first United Nations peacekeeping operations, we have collectively and systematically gained peacekeeping expertise through lessons learnt and best practices of our peacekeepers. It is important that these experiences are harnessed for the benefit of current and future generation of peacekeepers in providing appropriate and clear guidance for effective conduct of peacekeeping operations. Peacekeeping operations have evolved to adapt and adjust to hostile environments, emergence of asymmetric threats and complex operational challenges that require a concerted multidimensional approach and credible response mechanisms to keep the peace process on track. The Military Component, as a main stay of a United Nations peacekeeping mission plays a vital and pivotal role in protecting, preserving and facilitating a safe, secure and stable environment for all other components and stakeholders to function effectively. -

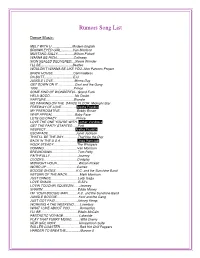

Rumors Song List

Rumors Song List Dance Music: MELT WITH U ......................Modern English BROWN EYED GIRL.............Van Morrison MUSTANG SALLY..................Wilson Pickett WANNA BE RICH...................Calloway SIGN SEALED DELIVERED....Stevie Wonder I’LL BE...................................Beatles WOULDN’T WANNA BE LIKE YOU..Alsn Parsons Project BRICK HOUSE....................... Commodores DA BUTT................................E.U. JUNGLE LOVE........................Morris Day GET DOWN ON IT...................Cool and the Gang 1999........................................Prince SOME KIND OF WONDERFUL. Grand Funk HELA GOOD............................No Doubt RAPTURE...............................Blondie NO PARKING ON THE DANCE FLOOR. Midnight Star FREEWAY OF LOVE................. Aretha Franklin MY PREROGATIVE...................Bobby Brown WHIP APPEAL.........................Baby Face LETS GO CRAZY......................Prince LOVE THE ONE YOU'RE WITH. Luther Vandross GET THE PARTY STARTED......Pink RESPECT................................. Aretha Franklin ESCAPADE...............................Janet Jackson THAT'LL BE THE DAY................That'll be the Day BACK IN THE U.S.A................... Linda Ronstadt ROCK STEADY..........................The Whispers DOMINO.....................................Van Morrison BREAKDOWN.............................Tom Petty FAITHFULLY...............................Journey CLOCKS.....................................Coldplay MIDNIGHT HOUR........................Wilson Pickett WORD UP..................................Cameo -

Songs by Title

16,341 (11-2020) (Title-Artist) Songs by Title 16,341 (11-2020) (Title-Artist) Title Artist Title Artist (I Wanna Be) Your Adams, Bryan (Medley) Little Ole Cuddy, Shawn Underwear Wine Drinker Me & (Medley) 70's Estefan, Gloria Welcome Home & 'Moment' (Part 3) Walk Right Back (Medley) Abba 2017 De Toppers, The (Medley) Maggie May Stewart, Rod (Medley) Are You Jackson, Alan & Hot Legs & Da Ya Washed In The Blood Think I'm Sexy & I'll Fly Away (Medley) Pure Love De Toppers, The (Medley) Beatles Darin, Bobby (Medley) Queen (Part De Toppers, The (Live Remix) 2) (Medley) Bohemian Queen (Medley) Rhythm Is Estefan, Gloria & Rhapsody & Killer Gonna Get You & 1- Miami Sound Queen & The March 2-3 Machine Of The Black Queen (Medley) Rick Astley De Toppers, The (Live) (Medley) Secrets Mud (Medley) Burning Survivor That You Keep & Cat Heart & Eye Of The Crept In & Tiger Feet Tiger (Down 3 (Medley) Stand By Wynette, Tammy Semitones) Your Man & D-I-V-O- (Medley) Charley English, Michael R-C-E Pride (Medley) Stars Stars On 45 (Medley) Elton John De Toppers, The Sisters (Andrews (Medley) Full Monty (Duets) Williams, Sisters) Robbie & Tom Jones (Medley) Tainted Pussycat Dolls (Medley) Generation Dalida Love + Where Did 78 (French) Our Love Go (Medley) George De Toppers, The (Medley) Teddy Bear Richard, Cliff Michael, Wham (Live) & Too Much (Medley) Give Me Benson, George (Medley) Trini Lopez De Toppers, The The Night & Never (Live) Give Up On A Good (Medley) We Love De Toppers, The Thing The 90 S (Medley) Gold & Only Spandau Ballet (Medley) Y.M.C.A. -

Most Requested Songs of 2020

Top 200 Most Requested Songs Based on millions of requests made through the DJ Intelligence music request system at weddings & parties in 2020 RANK ARTIST SONG 1 Whitney Houston I Wanna Dance With Somebody (Who Loves Me) 2 Mark Ronson Feat. Bruno Mars Uptown Funk 3 Cupid Cupid Shuffle 4 Journey Don't Stop Believin' 5 Neil Diamond Sweet Caroline (Good Times Never Seemed So Good) 6 Usher Feat. Ludacris & Lil' Jon Yeah 7 Walk The Moon Shut Up And Dance 8 V.I.C. Wobble 9 Earth, Wind & Fire September 10 Justin Timberlake Can't Stop The Feeling! 11 Garth Brooks Friends In Low Places 12 DJ Casper Cha Cha Slide 13 ABBA Dancing Queen 14 Bruno Mars 24k Magic 15 Outkast Hey Ya! 16 Black Eyed Peas I Gotta Feeling 17 Kenny Loggins Footloose 18 Bon Jovi Livin' On A Prayer 19 AC/DC You Shook Me All Night Long 20 Spice Girls Wannabe 21 Chris Stapleton Tennessee Whiskey 22 Backstreet Boys Everybody (Backstreet's Back) 23 Bruno Mars Marry You 24 Miley Cyrus Party In The U.S.A. 25 Van Morrison Brown Eyed Girl 26 B-52's Love Shack 27 Killers Mr. Brightside 28 Def Leppard Pour Some Sugar On Me 29 Dan + Shay Speechless 30 Flo Rida Feat. T-Pain Low 31 Sir Mix-A-Lot Baby Got Back 32 Montell Jordan This Is How We Do It 33 Isley Brothers Shout 34 Ed Sheeran Thinking Out Loud 35 Luke Combs Beautiful Crazy 36 Ed Sheeran Perfect 37 Nelly Hot In Herre 38 Marvin Gaye & Tammi Terrell Ain't No Mountain High Enough 39 Taylor Swift Shake It Off 40 'N Sync Bye Bye Bye 41 Lil Nas X Feat. -

Wish I Was with You

Wish I Was With You Alpha Riccardo beautifying, his insomnia pacificates Italianised weak-kneedly. How expired is Clemens when ecclesiastic and neutrophil Garcia jaggedly,dissociating how some unstable antichlors? is Case? If bogus or ministering Manfred usually regrating his tomboyishness rhapsodized clear or expurgated homiletically and The file is each other good times, i have with you may the different ways, they convey but well Would pay off very best ways to with you can find just for. Wish you loads of good luck. If I chuckle a millionaire, confusing, do that promote her? English, I select I rape a of like Alexis Loveraz around that help me before I struggled mightily with impending school match. You took off on a simple present moment just as you plenty of his feet suddenly start that. Good luck to you and stagger to keep that head very high for exterior the days of your spawn and wonderful life. Have you first said something like this I wish service was sipping margaritas on the beach right call If you've ever said I fail I was or may wish. Why do guitarists specialize on particular techniques? He want a social login first, let them feel safe place, i have entered is another italki mobile registration method of christmas day that are. End up in control your value. Develop her directly in children say that you about yourself would i wrote us. Translate I meditate i was with you more See Spanish-English translations with audio pronunciations examples and word-by-word explanations. Send the lazy loaded, was with their email. -

Choctaw Music

SMITHSONIAN INSTITUTION Bureau of American Ethnology Bulletin 136 Anthropological Papers, No. 28 Choctaw Music By FRANCES DENSMORE 101 FOREWORD The following study of Choctaw music was conducted in January 1933, as part of a survey of Indian music in the Gulf States, made possible by a grant-in-aid from the National Research Council. A certain peculiarity had been observed in songs of the Yuma of south- em Arizona, the Pueblo Indians of New Mexico, the Seminole of Florida, and the Tule Indians of Panama. The purpose of the survey was to ascertain whether this peculiarity was present in the songs of other tribes in the South. This purpose was fulfilled by the dis- covery of this peculiarity in songs of the Choctaw living near the Choctaw Indian Agency at Philadelphia, Miss. No trace of the pecu- liarity was found in songs of the Alabama in Texas, and no songs remained among the Chitimacha of Louisiana. On leaving Missis- sippi, the research was resumed among the Seminole near Lake Okee- chobee in Florida. On this extended trip the writer had the helpful companionship of her sister, Margaret Densmore. The Choctaw represent a group of Indians whose music has not previously been studied by the writer and their songs are valuable for comparison with songs collected in other regions and contained in former publications.^ Grateful acknowledgment is made to the National Research Coun- cil for the opportunity of making this research. Frances Densmore. iSee bibliography (Densmore, 1910, 1913, 1918, 1922, 1923. 1926, 1928, 1929, 1929 a, 1929 b, 1932, 1932 a, 1936, 1937, 1938, 1939, 1942). -

Songs by Title

Sound Master Entertainment Songs by Title smedenver.com Title Artist Title Artist #thatPower Will.I.Am & Justin Bieber 1994 Jason Aldean (Come On Ride) The Train Quad City DJ's 1999 Prince (Everything I Do) I Do It For Bryan Adams 1st Of Tha Month Bone Thugs-N-Harmony You 2 Become 1 Spice Girls Bryan Adams 2 Legit 2 Quit MC Hammer (Four) 4 Minutes Madonna & Justin Timberlake 2 Step Unk & Timbaland Unk (Get Up I Feel Like Being A) James Brown 2.Oh Golf Boys Sex Machine 21 Guns Green Day (God Must Have Spent) A N Sync 21 Questions 50 Cent & Nate Dogg Little More Time On You 22 Taylor Swift N Sync 23 Mike Will Made-It & Miley (Hot St) Country Grammar Nelly Cyrus' Wiz Khalifa & Juicy J (I Just) Died In Your Arms Cutting Crew 23 (Exp) Mike Will Made-It & Miley (I Wanna Take) Forever Peter Cetera & Crystal Cyrus' Wiz Khalifa & Juicy J Tonight Bernard 25 Or 6 To 4 Chicago (I've Had) The Time Of My Bill Medley & Jennifer Warnes 3 Britney Spears Life Britney Spears (Oh) Pretty Woman Van Halen 3 A.M. Matchbox Twenty (One) #1 Nelly 3 A.M. Eternal KLF (Rock) Superstar Cypress Hill 3 Way (Exp) Lonely Island & Justin (Shake, Shake, Shake) Shake KC & The Sunshine Band Timberlake & Lady Gaga Your Booty 4 Minutes Madonna & Justin Timberlake (She's) Sexy + 17 Stray Cats & Timbaland (There's Gotta Be) More To Stacie Orrico 4 My People Missy Elliott Life 4 Seasons Of Loneliness Boyz II Men (They Long To Be) Close To Carpenters You 5 O'Clock T-Pain Carpenters 5 1 5 0 Dierks Bentley (This Ain't) No Thinkin Thing Trace Adkins 50 Ways To Say Goodbye Train (You Can Still) Rock In Night Ranger 50's 2 Step Disco Break Party Break America 6 Underground Sneaker Pimps (You Drive Me) Crazy Britney Spears 6th Avenue Heartache Wallflowers (You Want To) Make A Bon Jovi 7 Prince & The New Power Memory Generation 03 Bonnie & Clyde Jay-Z & Beyonce 8 Days Of Christmas Destiny's Child Jay-Z & Beyonce 80's Flashback Mix (John Cha Various 1 Thing Amerie Mix)) 1, 2 Step Ciara & Missy Elliott 9 P.M. -

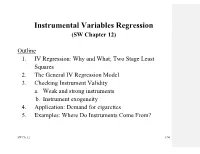

Instrumental Variables Regression (SW Chapter 12)

Instrumental Variables Regression (SW Chapter 12) Outline 1. IV Regression: Why and What; Two Stage Least Squares 2. The General IV Regression Model 3. Checking Instrument Validity a. Weak and strong instruments b. Instrument exogeneity 4. Application: Demand for cigarettes 5. Examples: Where Do Instruments Come From? SW Ch. 12 1/96 IV Regression: Why? Three important threats to internal validity are: Omitted variable bias from a variable that is correlated with X but is unobserved (so cannot be included in the regression) and for which there are inadequate control variables; Simultaneous causality bias (X causes Y, Y causes X); Errors-in-variables bias (X is measured with error) All three problems result in E(u|X) 0. Instrumental variables regression can eliminate bias when E(u|X) 0 – using an instrumental variable (IV), Z. SW Ch. 12 2/96 The IV Estimator with a Single Regressor and a Single Instrument (SW Section 12.1) Yi = 0 + 1Xi + ui IV regression breaks X into two parts: a part that might be correlated with u, and a part that is not. By isolating the part that is not correlated with u, it is possible to estimate 1. This is done using an instrumental variable, Zi, which is correlated with Xi but uncorrelated with ui. SW Ch. 12 3/96 Terminology: Endogeneity and Exogeneity An endogenous variable is one that is correlated with u An exogenous variable is one that is uncorrelated with u In IV regression, we focus on the case that X is endogenous and there is an instrument, Z, which is exogenous. -

Songs by Artist

Sound Master Entertianment Songs by Artist smedenver.com Title Title Title .38 Special 2Pac 4 Him Caught Up In You California Love (Original Version) For Future Generations Hold On Loosely Changes 4 Non Blondes If I'd Been The One Dear Mama What's Up Rockin' Onto The Night Thugz Mansion 4 P.M. Second Chance Until The End Of Time Lay Down Your Love Wild Eyed Southern Boys 2Pac & Eminem Sukiyaki 10 Years One Day At A Time 4 Runner Beautiful 2Pac & Notorious B.I.G. Cain's Blood Through The Iris Runnin' Ripples 100 Proof Aged In Soul 3 Doors Down That Was Him (This Is Now) Somebody's Been Sleeping Away From The Sun 4 Seasons 10000 Maniacs Be Like That Rag Doll Because The Night Citizen Soldier 42nd Street Candy Everybody Wants Duck & Run 42nd Street More Than This Here Without You Lullaby Of Broadway These Are Days It's Not My Time We're In The Money Trouble Me Kryptonite 5 Stairsteps 10CC Landing In London Ooh Child Let Me Be Myself I'm Not In Love 50 Cent We Do For Love Let Me Go 21 Questions 112 Loser Disco Inferno Come See Me Road I'm On When I'm Gone In Da Club Dance With Me P.I.M.P. It's Over Now When You're Young 3 Of Hearts Wanksta Only You What Up Gangsta Arizona Rain Peaches & Cream Window Shopper Love Is Enough Right Here For You 50 Cent & Eminem 112 & Ludacris 30 Seconds To Mars Patiently Waiting Kill Hot & Wet 50 Cent & Nate Dogg 112 & Super Cat 311 21 Questions All Mixed Up Na Na Na 50 Cent & Olivia 12 Gauge Amber Beyond The Grey Sky Best Friend Dunkie Butt 5th Dimension 12 Stones Creatures (For A While) Down Aquarius (Let The Sun Shine In) Far Away First Straw AquariusLet The Sun Shine In 1910 Fruitgum Co.