Intro to Neural Networks

Recommended publications

-

1919 Golden Globe Nominees 1

1919 Golden Globe Nominees 1. BEST TELEVISION SERIES – DRAMA a. THE AMERICANS FX NETWORKS Fox 21 Television Studios / FX Productions b. BODYGUARD NETFLIX World Productions / an ITV Studios company c. HOMECOMING PRIME VIDEO Universal Cable Productions LLA / Amazon Studios d. KILLING EVE BBC AMERICA BBC AMERICA / Sid Gentle Films Ltd e. POSE FX NETWORKS Fox 21 Television Studios / FX Productions 2. BEST PERFORMANCE BY AN ACTRESS IN A TELEVISION SERIES – DRAMA a. CAITRIONA BALFE OUTLANDER b. ELISABETH MOSS THE HANDMAID'S TALE c. SANDRA OH KILLING EVE d. JULIA ROBERTS HOMECOMING e. KERI RUSSELL THE AMERICANS 3. BEST PERFORMANCE BY AN ACTOR IN A TELEVISION SERIES – DRAMA a. JASON BATEMAN OZARK b. STEPHAN JAMES HOMECOMING c. RICHARD MADDEN BODYGUARD d. BILLY PORTER POSE e. MATTHEW RHYS THE AMERICANS 4. BEST TELEVISION SERIES – MUSICAL OR COMEDY a. BARRY HBO HBO Entertainment / Alec Berg / Hanarply b. THE GOOD PLACE NBC Universal Television / Fremulon / 3 Arts Entertainment c. KIDDING SHOWTIME SHOWTIME / SOME KIND OF GARDEN / Aggregate Films / Broadlawn Films d. THE KOMINSKY METHOD NETFLIX Warner Bros. Television e. THE MARVELOUS MRS. MAISEL PRIME VIDEO Amazon Studios 5. BEST PERFORMANCE BY AN ACTRESS IN A TELEVISION SERIES – MUSICAL OR COMEDY a. KRISTEN BELL THE GOOD PLACE b. CANDICE BERGEN MURPHY BROWN c. ALISON BRIE GLOW d. RACHEL BROSNAHAN THE MARVELOUS MRS. MAISEL e. DEBRA MESSING WILL & GRACE 6. BEST PERFORMANCE BY AN ACTOR IN A TELEVISION SERIES – MUSICAL OR COMEDY a. SACHA BARON COHEN WHO IS AMERICA b. JIM CARREY KIDDING c. MICHAEL DOUGLAS THE KOMINSKY METHOD d. DONALD GLOVER ATLANTA e. BILL HADER BARRY 7. -

Dads, Daughters Dance the Night Away

SATURDAY, FEBRUARY 3, 2018 Barely time to breathe Lynn mayor marks rst 30 days in of ce By Thor Jourgensen ITEM NEWS EDITOR LYNN — Floods, re and city nance worries — Mayor Thomas M. McGee has packed much into his rst 30 days as the city’s chief executive. Lynn’s 58th mayor, surrounded by fami- ly and friends, savored the moment when he was sworn into of ce during his Jan. 2 inaugural. But McGee barely had a day Lynn Mayor Thom- to spare before the demands of his new as M. McGee looks job became reality. He was surrounded by out on the city from police, re and Inspectional Services De- his of ce. partment representatives on Jan. 4 as the ITEM PHOTO | SPENSER HASAK McGEE, A7 Homecoming Dads, daughters for new Peabody dance the Chamber night away director By Adam Swift ITEM STAFF PEABODY — Malden’s loss is Peabody’s gain. The Peabody Area Chamber of Com- merce has hired Jenna Coccimiglio as their new executive director. She has led the Malden Chamber since 2013, and will By Daniel Kane dance. start her new position next month. FOR THE ITEM “It’s our rst time, we’re having a blast,” they said. “We’re pleased to welcome Jenna to MARBLEHEAD — Amelie Benner the Peabody Area Chamber of Com- DJ Kathy Zerkle led the crowd and her father, Greg, went all out on through a variety of popular dances, merce team,” said Christopher Feazel, the Greg Ben- the dance oor Friday night as he games like the limbo, and changed board’s chairman and an A ac sales coor- ner lifts his swung her around by her arms at the pace with a slow dance several times dinator. -

Sxsw Film Festival Announces 2018 Features and Opening Night Film a Quiet Place

SXSW FILM FESTIVAL ANNOUNCES 2018 FEATURES AND OPENING NIGHT FILM A QUIET PLACE Film Festival Celebrates 25th Edition Austin, Texas, January 31, 2018 – The South by Southwest® (SXSW®) Conference and Festivals announced the features lineup and opening night film for the 25th edition of the Film Festival, running March 9-18, 2018 in Austin, Texas. The acclaimed program draws thousands of fans, filmmakers, press, and industry leaders every year to immerse themselves in the most innovative, smart and entertaining new films of the year. During the nine days of SXSW 132 features will be shown, with additional titles yet to be announced. The full lineup will include 44 films from first-time filmmakers, 86 World Premieres, 11 North American Premieres and 5 U.S. Premieres. These films were selected from 2,458 feature-length film submissions, with a total of 8,160 films submitted this year. “2018 marks the 25th edition of the SXSW Film Festival and my tenth year at the helm. As we look back on the body of work of talent discovered, careers launched and wonderful films we’ve enjoyed, we couldn’t be more excited about the future,” said Janet Pierson, Director of Film. “This year’s slate, while peppered with works from many of our alumni, remains focused on new voices, new directors and a range of films that entertain and enlighten.” “We are particularly pleased to present John Krasinski’s A Quiet Place as our Opening Night Film,” Pierson added.“Not only do we love its originality, suspense and amazing cast, we love seeing artists stretch and explore. -

7-26-18 Transcript Bulletin

Soto will lead sports programs TOOELE at Excelsior See A10 TRANSCRIPT S T C BULLETIN S THURSDAY July 26, 2018 www.TooeleOnline.com Vol. 125 No. 16 $1.00 Rezone referendum now off election ballot County attorney: Investigation revealed ‘pattern’ of Water Wheel Harvest improper signature verification during petition process Stansbury Park Rezone Dispute Area Wheatridge TIM GILLIE the petition sponsors. “It is STAFF WRITER with heavy heart that I am Mavrerik It looks like Tooele County unable to certify the petition, residents won’t get to vote on however. When I was sworn Stansbury Parkway repealing a rezone of property into office I took an oath to Millpond in Stansbury Park that paves support, obey, and defend the the way for a high-density law and discharge the duties of Gateway Dr development. my office with fidelity.” Tooele County Clerk/ Referendum sponsors hoped Crystal Bay Dr Auditor Marilyn Gillette to give voters the opportunity Clubhouse informed referendum spon- to over turn the Tooele County sors Wednesday that she Commission’s decision to was unable to certify the rezone 5.38 acres of property Subject Property referendum on the rezone in on the southwest corner of N Clubhouse Dr Stansbury Park because too Clubhouse Drive and Country Marilyn Gillette many signatures were not veri- Club Drive from commercial Tooele County Clerk/Auditor fied by the signature gatherer shopping and single-family The “subject property” labeled in red is 36 in the manner prescribed by residential to R-M-15. The son who gathers signatures on the 5.38 acres of ground referendum state law. -

2019 Olden Lobes Ballot

CD 2019 �olden �lobes Ballot BEST MOTION PICTURE / BEST PERFORMANCE BY AN ACTOR IN A BEST PERFORMANCE BY AN ACTRESS MUSICAL OR COMEDY TELEVISION SERIES / MUSICAL OR COMEDY IN A SUPPORTING ROLE IN A SERIES, ¨ Crazy Rich Asians ¨ Sasha Baron Cohen Who Is America? LIMITED SERIES OR MOTION PICTURE MADE ¨ The Favourite ¨ Jim Carrey Kidding FOR TELEVISION ¨ Green Book ¨ Michael Douglas The Kominsky Method ¨ Alex Bornstein The Marvelous Mrs. Maisel ¨ Mary Poppins Returns ¨ Donald Glover Atlanta ¨ Patricia Clarkson Sharp Objects ¨ Vice ¨ Bill Hader Barry ¨ Penelope Cruz The Assassination of Gianni Versace: American Crime Story ¨ Thandie Newton Westworld BEST MOTION PICTURE / DRAMA BEST PERFORMANCE BY AN ACTRESS ¨ Yvonne Strahovski The Handmaid’s Tale ¨ Black Panther IN A TELEVISION SERIES / DRAMA ¨ BlacKkKlansman ¨ Caitriona Balfe Outlander BEST PERFORMANCE BY AN ACTOR ¨ Bohemian Rhapsody ¨ Elisabeth Moss Handmaid’s Tale IN A SUPPORTING ROLE IN A SERIES, ¨ Sandra Oh Killing Eve ¨ If Beale Streat Could Talk LIMITED SERIES OR MOTION PICTURE MADE ¨ A Star Is Born ¨ Julia Roberts Homecoming FOR TELEVISION ¨ Keri Russell The Americans ¨ Alan Arkin The Kominsky Method BEST TELEVISION SERIES / ¨ ¨ Kieran Culkin Succession MUSICAL OR COMEDY BEST PERFORMANCE BY AN ACTOR ¨ Edgar Ramirez The Assassination ¨ Barry HBO IN A TELEVISION SERIES / DRAMA of Gianni Versace: American Crime Story ¨ The Good Place NBC ¨ Jason Bateman Ozark ¨ Ben Wishaw A Very English Scandal ¨ Kidding Showtime ¨ Stephan James Homecoming ¨ Henry Winkler Barry ¨ The Kominsky Method -

70Th Emmy Awards Nominations Announcements July 12, 2018 (A Complete List of Nominations, Supplemental Facts and Figures May Be Found at Emmys.Com)

70th Emmy Awards Nominations Announcements July 12, 2018 (A complete list of nominations, supplemental facts and figures may be found at Emmys.com) Emmy Nominations Previous Wins 70th Emmy Nominee Program Network to date to date Nominations Total (across all categories) (across all categories) LEAD ACTRESS IN A DRAMA SERIES Claire Foy The Crown Netflix 1 2 0 Tatiana Maslany Orphan Black BBC America 1 3 1 Elisabeth Moss The Handmaid's Tale Hulu 1 10 2 Sandra Oh Killing Eve BBC America 1 6 0 Keri Russell The Americans FX Networks 1 3 0 Evan Rachel Wood Westworld HBO 1 3 0 LEAD ACTOR IN A DRAMA SERIES Jason Bateman Ozark Netflix 2* 4 0 Sterling K. Brown This Is Us NBC 2* 4 2 Ed Harris Westworld HBO 1 3 0 Matthew Rhys The Americans FX Networks 1 4 0 Milo Ventimiglia This Is Us NBC 1 2 0 Jeffrey Wright Westworld HBO 1 3 1 * NOTE: Jason Bateman also nominated for Directing for Ozark * NOTE: Sterling K. Brown also nominated for Guest Actor in a Comedy Series for Brooklyn Nine-Nine LEAD ACTRESS IN A COMEDY SERIES Pamela Adlon Better Things FX Networks 1 7 1 Rachel Brosnahan The Marvelous Mrs. Maisel Prime Video 1 2 0 Allison Janney Mom CBS 1 14 7 Issa Rae Insecure HBO 1 1 NA Tracee Ellis Ross black-ish ABC 1 3 0 Lily Tomlin Grace And Frankie Netflix 1 25 6 LEAD ACTOR IN A COMEDY SERIES Anthony Anderson black-ish ABC 1 6 0 Ted Danson The Good Place NBC 1 16 2 Larry David Curb Your Enthusiasm HBO 1 26 2 Donald Glover Atlanta FX Networks 4* 8 2 Bill Hader Barry HBO 4* 14 1 William H. -

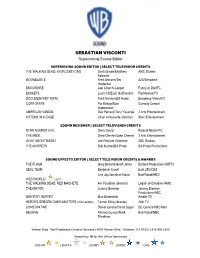

SEBASTIAN VISCONTI Supervising Sound Editor

SEBASTIAN VISCONTI Supervising Sound Editor SUPERVISING SOUND EDITOR | SELECT TELEVISION CREDITS THE WALKING DEAD: WORLD BEYOND Scott Gimple/Matthew AMC Studios Negrette MOONBASE 8 Fred Armisen/Tim A24/Showtime Heidecker BROCKMIRE Joel Church-Cooper Funny or Die/IFC BASKETS Louis CK/Zach Galifianakis Pig Newton/FX DOCUMENTARY NOW! Fred Armisen/Bill Hader Broadway Video/IFC CORPORATE Pat Bishop/Matt Comedy Central Ingebretson AMERICAN VANDAL Dan Perrault/Tony Yacenda 3 Arts Entertainment KITTENS IN A CAGE Jillian Armenante (director) Stoic Entertainment SOUND DESIGNER | SELECT TELEVISION CREDITS STAN AGAINST EVIL Dana Gould Radical Media/IFC THE MICK Dave Chernin/John Chernin 3 Arts Entertainment WHAT ABOUT BARB? Joe Port/Joe Wiseman ABC Studios THE MUPPETS Bob Kushell/Bill Prady Bill Prady Productions SOUND EFFECTS EDITOR | SELECT TELEVISION CREDITS & AWARDS THE FLASH Greg Berlanti/Geoff Johns Berlanti Productions/WBTV SEAL TEAM Benjamin Cavell East 25C/CBS Lisa Joy/Jonathan Nolan Bad Robot/HBO WESTWORLD (2017) THE WALKING DEAD: RED MACHETE Avi Youabian (director) Legion of Creatives/AMC THE MAYOR Jeremy Bronson Jeremy Bronson Productions/ABC MINORITY REPORT Max Borenstein Amblin TV HEROES REBORN: DARK MATTERS (mini-series) Tanner Kling (director) 20th TV CONSTANTINE Daniel Cerone/David Goyer DC Comics/HBO Max BELIEVE Alfonso Cuaron/Mark Bab Robot/NBC Friedman Warner Bros. Post Production Creative Services | 4000 Warner Blvd. | Burbank, CA 91522 | 818.954.5305 Award Key: W for Win | N for Nominated OSCAR | BAFTA | EMMY | MPSE | CAS . -

Emmy Award Winners

CATEGORY 2035 2034 2033 2032 Outstanding Drama Title Title Title Title Lead Actor Drama Name, Title Name, Title Name, Title Name, Title Lead Actress—Drama Name, Title Name, Title Name, Title Name, Title Supp. Actor—Drama Name, Title Name, Title Name, Title Name, Title Supp. Actress—Drama Name, Title Name, Title Name, Title Name, Title Outstanding Comedy Title Title Title Title Lead Actor—Comedy Name, Title Name, Title Name, Title Name, Title Lead Actress—Comedy Name, Title Name, Title Name, Title Name, Title Supp. Actor—Comedy Name, Title Name, Title Name, Title Name, Title Supp. Actress—Comedy Name, Title Name, Title Name, Title Name, Title Outstanding Limited Series Title Title Title Title Outstanding TV Movie Name, Title Name, Title Name, Title Name, Title Lead Actor—L.Ser./Movie Name, Title Name, Title Name, Title Name, Title Lead Actress—L.Ser./Movie Name, Title Name, Title Name, Title Name, Title Supp. Actor—L.Ser./Movie Name, Title Name, Title Name, Title Name, Title Supp. Actress—L.Ser./Movie Name, Title Name, Title Name, Title Name, Title CATEGORY 2031 2030 2029 2028 Outstanding Drama Title Title Title Title Lead Actor—Drama Name, Title Name, Title Name, Title Name, Title Lead Actress—Drama Name, Title Name, Title Name, Title Name, Title Supp. Actor—Drama Name, Title Name, Title Name, Title Name, Title Supp. Actress—Drama Name, Title Name, Title Name, Title Name, Title Outstanding Comedy Title Title Title Title Lead Actor—Comedy Name, Title Name, Title Name, Title Name, Title Lead Actress—Comedy Name, Title Name, Title Name, Title Name, Title Supp. Actor—Comedy Name, Title Name, Title Name, Title Name, Title Supp. -

Henry Winkler

BILL ROSENDAHL PUBLIC SERVICE AWARD 2019 for Contributions to the Public Good HENRY WINKLER’S COOLEST ROLE IS HELPING KIDS THE AWARD-WINNING STAR OF ‘HAPPY DAYS’ AND ‘BARRY’ RECEIVES THE ROSENDAHL PUBLIC SERVICE fy AWARD FOR HIS CHILDREN’S BOOKS BY ALEX BEN BLOCK ifty years ago, Henry Winkler exploded as the hottest star on American TV by Shawn Miller Fplaying the super cool Arthur “Fonzie” Fonzarelli on “Happy Days”—for which he won two Golden Globes. This past September, he won his first Emmy, for his role on the HBO show “Barry.” At age 73, Winkler is enjoying a career renais- sance, with critics applauding his perfor- mance as the complicated, tortured acting teacher Gene Cousineau. Starting with his frst screen appearances in 1974’s Crazy Joe and The Lords of Flatbush, Winkler has been part of America’s cultural wife Stacey for more than 40 years, having consciousness. He appeared in three Adam three children and fve grandkids—is being Sandler flms including playing Coach Klein the co-author of 34 children’s books, with in the ever popular The Waterboy and he more on the way. directed Billy Crystal in Memories of Me. He This commitment to books and children starred opposite Michael Keaton in his frst has prompted the Los Angeles Press Club to calls, “a direct line to the reluctant reader.” “I’m not a professional,” he explains. “But Top, reading at a book festival in flm role in Ron Howard’s cult classic Night present Winkler tonight with the Bill Rosen- He works with a partner on the books, Lin in my way I support the child who learns 2016; Henry Winkler stands with the Shift. -

Love Is Strange. 04

Love is strange. 04 14. BILL HADER 18. GUEST STAR SIMON RICH 14. EXECUTIVE PRODUCER | CREATOR MICHAEL HOGAN GUEST STAR 19. 14. LORNE MICHAELS VANESSA BAYER EXECUTIVE PRODUCER GUEST STAR 20. 07 14. ANDREW SINGER MINKA KELLY EXECUTIVE PRODUCER GUEST STAR 20. 15. JONATHAN KRISEL FRED ARMISEN EXECUTIVE PRODUCER GUEST STAR | DIRECTOR 15. 21. RAEANNA GUITARD IAN MAXTONE - GRAHAM GUEST STAR CO - EXECUTIVE PRODUCER | WRITER 08 15. OKSANA BAIUL 21. GUEST STAR ROBERT PADNICK CO - PRODUCER 15. | WRITER MATT LUCAS GUEST STAR 22. DAN MIRK STAFF WRITER 22. SOFIA ALVAREZ 11 STAFF WRITER 22. BEN BERMAN DIRECTOR 23. TIM KIRKBY DIRECTOR 23. HARTLEY GORENSTEIN 12 LINE PRODUCER A SWEET AND SURREAL LOOK AT THE (Unforgettable) as “Liz,” Josh’s intimidating older life-and-death stakes of dating, Man Seeking sister; and Maya Erskine (Betas) as “Maggie,” Woman follows naïve twenty-something “Josh the ex-girlfriend Josh can never quite forget. Greenberg” (Jay Baruchel, How to Train Your Man Seeking Woman is based on Simon Rich’s Dragon) on his unrelenting quest for love. Josh book of short stories, The Last Girlfriend on soldiers through one-night stands, painful break- Earth. Rich created the 10-episode scripted ups, a blind date with a troll, time travel, sex comedy and also serves as Executive Producer/ aliens, many deaths and a Japanese penis Showrunner. Jonathan Krisel, Andrew Singer monster named “Tanaka” on his fantastical and Lorne Michaels, and Broadway Video also journey to find love. serve as Executive Producers. Man Seeking Starring alongside Baruchel are Eric Andre Woman is produced by FX Productions. -

This Is Comedy.Pdf

le inématographe nantes THIS IS COMEDY THIS IS COMEDY la nouvelle comédie américaine en 7 films du 10 au 22 janvier 2018 au Cinématographe Tout commence par une émission à sketchs de la télévision américaine : Le Saturday Night Live (SNL pour les intimes), institution télévisuelle toujours en activité dont nous avons du mal en France à percevoir la popularité. Elle a révélé de nombreux talents depuis 1975 : Bill Murray, John Belushi, Eddy Murphy, Ben Stiller, Will Ferrell, Tina Fey, Amy Poehler, Kristen Wiig… Puis nous arrivent deux frères, Peter et Bobby Farrelly, accompagnés d’un acteur, Jim Carrey. Ensem- ble, ils réalisent Dumb and Dumber en 1994, véritable pierre angulaire de ce renouveau comi- que. Avec un humour trash, transgressif mais également attachant, ils nous font rire des losers, des geeks, des freaks et mettent en lumière tous ceux que l’Amérique ne souhaite pas voir. Cette détonation change le visage de la comédie américaine, de Ben Stiller au tandem totale- ment loufoque et absurde d’Adam McKay/Will Ferrell, et jusqu’à la veine plus personnelle de Judd Apatow et son équipe. Une nouvelle comédie américaine qui se décline au gré des incon- gruités de ce monde. Cette programmation vous permettra d’en apprécier une partie sur grand écran : soyez prêt·e·s à rire, this is comedy ! Rencontre avec le journaliste et réalisateur Jacky Goldberg à l’issue de la projection de son documentaire This Is Comedy Jacky Goldberg s’entretient avec Apatow à propos des scènes clés de ses émissions de télévision et ses films, ainsi qu’avec les comédien·ne·s et les cinéastes de son gang. -

Nomination Press Release

Outstanding Comedy Series 30 Rock • NBC • Broadway Video, Little Stranger, Inc. in association with Universal Television The Big Bang Theory • CBS • Chuck Lorre Productions, Inc. in association with Warner Tina Fey as Liz Lemon Bros. Television Veep • HBO • Dundee Productions in Curb Your Enthusiasm • HBO • HBO association with HBO Entertainment Entertainment Julia Louis-Dreyfus as Selina Meyer Girls • HBO • Apatow Productions and I am Jenni Konner Productions in association with HBO Entertainment Outstanding Lead Actor In A Modern Family • ABC • Levitan-Lloyd Comedy Series Productions in association with Twentieth The Big Bang Theory • CBS • Chuck Lorre Century Fox Television Productions, Inc. in association with Warner 30 Rock • NBC • Broadway Video, Little Bros. Television Stranger, Inc. in association with Universal Jim Parsons as Sheldon Cooper Television Curb Your Enthusiasm • HBO • HBO Veep • HBO • Dundee Productions in Entertainment association with HBO Entertainment Larry David as Himself House Of Lies • Showtime • Showtime Presents, Crescendo Productions, Totally Outstanding Lead Actress In A Commercial Films, Refugee Productions, Comedy Series Matthew Carnahan Circus Products Don Cheadle as Marty Kaan Girls • HBO • Apatow Productions and I am Jenni Konner Productions in association with Louie • FX Networks • Pig Newton, Inc. in HBO Entertainment association with FX Productions Lena Dunham as Hannah Horvath Louis C.K. as Louie Mike & Molly • CBS • Bonanza Productions, 30 Rock • NBC • Broadway Video, Little Inc. in association with Chuck Lorre Stranger, Inc. in association with Universal Productions, Inc. and Warner Bros. Television Television Alec Baldwin as Jack Donaghy Melissa McCarthy as Molly Flynn Two And A Half Men • CBS • Chuck Lorre New Girl • FOX • Chernin Entertainment in Productions Inc., The Tannenbaum Company association with Twentieth Century Fox in association with Warner Bros.