Counterpoints: Advanced Defensive Metrics for NBA Basketball

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

2008-09 Review

2008-09 REVIEW 2008-09 SEASON RESULTS Overall Home Away Neutral All Games 26-9 18-1 6-4 2-4 Pac-10 Conference 14-4 8-1 6-3 0-0 Non-Conference 12-5 10-0 0-1 2-4 Date Opponent W / L Score Attend High Scorer High Rebounder HUSKY ATHLETICS 11/15/08 at Portland L 74-80 2617 (30)Brockman, Jon (14)Brockman, Jon 11/18/08 # CLEVELAND STATE W 78-63 7316 (23)Brockman, Jon (13)Brockman, Jon 11/20/08 # FLORIDA INT'L W 74-51 7532 (21)Dentmon, Justin (7)Brockman, Jon (7)Holiday, Justin 11/24/08 ^ vs Kansas L 54-73 14720 (17)Thomas, Isaiah (18)Brockman, Jon 11/25/08 ^ vs Florida L 84-86 16988 (22)Brockman, Jon (11)Brockman, Jon 11/29/08 PACIFIC W 72-54 7527 (16)Pondexter, Quincy (12)Pondexter, Quincy 12/04/08 + OKLAHOMA STATE W 83-65 7789 (18)Thomas, Isaiah (11)Brockman, Jon 12/06/08 TEXAS SOUTHERN W 88-52 7241 (18)Bryan-Amaning, Matt (11)Brockman, Jon 12/14/08 PORTLAND STATE W 84-83 7280 (23)Bryan-Amaning, Matt (12)Bryan-Amaning, Matt 12/20/08 EASTERN WASHINGTON W 83-50 7401 (17)Brockman, Jon (7)Bryan-Amaning, Matt 12/28/08 MONTANA W 75-53 9045 (13)Brockman, Jon (15)Bryan-Amaning, Matt THIS IS HUSKY BASKETBALL (13)Thomas, Isaiah 12/30/08 MORGAN STATE W 81-67 8260 (27)Thomas, Isaiah (6)Pondexter, Quincy 01/03/09 * at Washington State W 68-48 8107 (19)Thomas, Isaiah (7)Pondexter, Quincy 01/08/09 * STANFORD W 84-83 9291 (19)Brockman, Jon (18)Brockman, Jon 01/10/09 * CALIFORNIA L 3ot 85-88 9946 (24)Dentmon, Justin (18)Brockman, Jon 01/15/09 * at Oregon W 84-67 8237 (23)Thomas, Isaiah (10)Brockman, Jon OUTLOOK 01/17/09 * at Oregon State W 85-59 6648 (16)Brockman, -

2019-20 Game Notes

@WKUBasketball WKUBasketball @wkubasketball WKUSports 2019-20 GAME NOTES WKUSports.com MEDIA CONTACT: Zach Greenwell, Associate AD of Communications • [email protected] • (270) 668-4716 LOUISIANA TECH (17-5, 8-2 C-USA) SECONDARY CONTACT: Dana Brown, Media Relations Assistant • [email protected] • (317) 752-6161 AT WKU (14-8, 7-3 C-USA) KEY PAGES Thursday, Feb. 6 | 8 p.m. (CT) | Bowling Green, Ky. E.A. Diddle Arena (7,523) Page 2 | Streaks/Standings Page 10 | Chasing Record Book Pgs. 16-27 | Player Bios Page 3 | Roster/Coaching Staff Page 13 | Last Time It Happened Page 43 | 2019-20 Stats LISTEN Hilltopper Sports Network, TuneIn Page 4 | Last Series Meeting Page 14-15 | Rick Stansbury Page 45 | Radio/TV Roster • Randy Lee (pxp), Hal Schmitt (analysis) WATCH CBS Sports Network THE TIP-OFF • Michael Grady (pxp), Chris Walker (analysis) WKU Hilltopper Basketball hosts a key game in the stand- PROJECTED STARTING LINEUP ings against Louisiana Tech at 8 p.m. CT Thursday at E.A. NOVEMBER (6-2) Diddle Arena. The game will air on CBS Sports Network. 2 Kentucky State (EXH) W, 85-45 5 Tennessee Tech W, 76-64 The Hilltoppers lost two games last week at Florida Atlan- 9 Austin Peay W, 97-75 tic and FIU. They’re 7-3 in C-USA and one game out of first G | Hollingsworth G | Rawls G | Anderson 15 at Eastern Kentucky W, 79-71 place, trailing both Louisiana Tech and North Texas at 8-2. 18 Campbellsville W, 109-66 Lineup 22 (1) vs. -

Fastest 40 Minutes in Basketball, 2012-2013

University of Arkansas, Fayetteville ScholarWorks@UARK Arkansas Men’s Basketball Athletics 2013 Media Guide: Fastest 40 Minutes in Basketball, 2012-2013 University of Arkansas, Fayetteville. Athletics Media Relations Follow this and additional works at: https://scholarworks.uark.edu/basketball-men Citation University of Arkansas, Fayetteville. Athletics Media Relations. (2013). Media Guide: Fastest 40 Minutes in Basketball, 2012-2013. Arkansas Men’s Basketball. Retrieved from https://scholarworks.uark.edu/ basketball-men/10 This Periodical is brought to you for free and open access by the Athletics at ScholarWorks@UARK. It has been accepted for inclusion in Arkansas Men’s Basketball by an authorized administrator of ScholarWorks@UARK. For more information, please contact [email protected]. TABLE OF CONTENTS This is Arkansas Basketball 2012-13 Razorbacks Razorback Records Quick Facts ........................................3 Kikko Haydar .............................48-50 1,000-Point Scorers ................124-127 Television Roster ...............................4 Rashad Madden ..........................51-53 Scoring Average Records ............... 128 Roster ................................................5 Hunter Mickelson ......................54-56 Points Records ...............................129 Bud Walton Arena ..........................6-7 Marshawn Powell .......................57-59 30-Point Games ............................. 130 Razorback Nation ...........................8-9 Rickey Scott ................................60-62 -

2018-19 Phoenix Suns Media Guide 2018-19 Suns Schedule

2018-19 PHOENIX SUNS MEDIA GUIDE 2018-19 SUNS SCHEDULE OCTOBER 2018 JANUARY 2019 SUN MON TUE WED THU FRI SAT SUN MON TUE WED THU FRI SAT 1 SAC 2 3 NZB 4 5 POR 6 1 2 PHI 3 4 LAC 5 7:00 PM 7:00 PM 7:00 PM 7:00 PM 7:00 PM PRESEASON PRESEASON PRESEASON 7 8 GSW 9 10 POR 11 12 13 6 CHA 7 8 SAC 9 DAL 10 11 12 DEN 7:00 PM 7:00 PM 6:00 PM 7:00 PM 6:30 PM 7:00 PM PRESEASON PRESEASON 14 15 16 17 DAL 18 19 20 DEN 13 14 15 IND 16 17 TOR 18 19 CHA 7:30 PM 6:00 PM 5:00 PM 5:30 PM 3:00 PM ESPN 21 22 GSW 23 24 LAL 25 26 27 MEM 20 MIN 21 22 MIN 23 24 POR 25 DEN 26 7:30 PM 7:00 PM 5:00 PM 5:00 PM 7:00 PM 7:00 PM 7:00 PM 28 OKC 29 30 31 SAS 27 LAL 28 29 SAS 30 31 4:00 PM 7:30 PM 7:00 PM 5:00 PM 7:30 PM 6:30 PM ESPN FSAZ 3:00 PM 7:30 PM FSAZ FSAZ NOVEMBER 2018 FEBRUARY 2019 SUN MON TUE WED THU FRI SAT SUN MON TUE WED THU FRI SAT 1 2 TOR 3 1 2 ATL 7:00 PM 7:00 PM 4 MEM 5 6 BKN 7 8 BOS 9 10 NOP 3 4 HOU 5 6 UTA 7 8 GSW 9 6:00 PM 7:00 PM 7:00 PM 5:00 PM 7:00 PM 7:00 PM 7:00 PM 11 12 OKC 13 14 SAS 15 16 17 OKC 10 SAC 11 12 13 LAC 14 15 16 6:00 PM 7:00 PM 7:00 PM 4:00 PM 8:30 PM 18 19 PHI 20 21 CHI 22 23 MIL 24 17 18 19 20 21 CLE 22 23 ATL 5:00 PM 6:00 PM 6:30 PM 5:00 PM 5:00 PM 25 DET 26 27 IND 28 LAC 29 30 ORL 24 25 MIA 26 27 28 2:00 PM 7:00 PM 8:30 PM 7:00 PM 5:30 PM DECEMBER 2018 MARCH 2019 SUN MON TUE WED THU FRI SAT SUN MON TUE WED THU FRI SAT 1 1 2 NOP LAL 7:00 PM 7:00 PM 2 LAL 3 4 SAC 5 6 POR 7 MIA 8 3 4 MIL 5 6 NYK 7 8 9 POR 1:30 PM 7:00 PM 8:00 PM 7:00 PM 7:00 PM 7:00 PM 8:00 PM 9 10 LAC 11 SAS 12 13 DAL 14 15 MIN 10 GSW 11 12 13 UTA 14 15 HOU 16 NOP 7:00 -

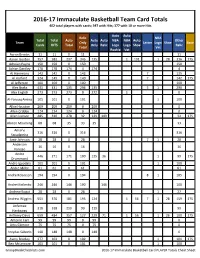

2016-17 Immaculate Basketball Player Checklist;

2016-17 Immaculate Basketball Team Card Totals 402 total players with cards; 397 with Hits; 377 with 10 or more Hits Auto Auto Relic NBA Total Total Auto Auto Auto NBA NBA Auto Other Team Only Letter Logo Shoe Base Cards HITS Total Only Relic Logo Logo Shoe Relic Total Vet Rookie Vet Aaron Brooks 11 11 0 11 11 Aaron Gordon 757 582 237 345 135 1 101 1 28 316 175 Adreian Payne 150 150 0 150 150 Adrian Dantley 178 178 174 4 174 4 AJ Hammons 142 142 0 142 7 135 Al Horford 324 149 0 149 7 142 175 Al Jefferson 100 100 0 100 100 Alec Burks 431 431 135 296 135 5 1 290 Alex English 273 273 273 0 272 1 0 Al-Farouq Aminu 101 101 0 101 1 100 Allan Houston 209 209 209 0 209 0 Allen Crabbe 124 124 124 0 124 0 Allen Iverson 485 310 278 32 129 149 32 175 Alonzo Mourning 68 68 35 33 35 33 Amar'e 316 316 0 316 316 Stoudemire Amir Johnson 28 28 0 28 7 1 20 Anderson 16 16 0 16 16 Varejao Andre 446 271 171 100 135 36 1 99 175 Drummond Andre Iguodala 101 101 0 101 1 100 Andre Miller 61 61 0 61 61 Andre Roberson 194 194 0 194 8 1 185 Andrei Kirilenko 246 246 146 100 146 100 Andrew Bogut 28 28 0 28 1 27 Andrew Wiggins 551 376 181 195 124 1 56 7 1 28 159 175 Anfernee 318 318 219 99 219 99 Hardaway Anthony Davis 659 484 357 127 229 71 1 56 1 26 100 175 Antoine Carr 99 99 99 0 99 0 Artis Gilmore 75 75 75 0 75 0 Arvydas Sabonis 148 148 148 0 148 0 Avery Bradley 277 102 0 102 1 101 175 Ben McLemore 101 101 0 101 1 100 GroupBreakChecklists.com 2016-17 Immaculate Basketball Card PLAYER Totals Cheat Sheet Auto Auto Relic NBA Total Total Auto Auto Auto NBA NBA Auto Other -

Open Andrew Bryant SHC Thesis.Pdf

THE PENNSYLVANIA STATE UNIVERSITY SCHREYER HONORS COLLEGE DEPARTMENT OF ECONOMICS REVISITING THE SUPERSTAR EXTERNALITY: LEBRON’S ‘DECISION’ AND THE EFFECT OF HOME MARKET SIZE ON EXTERNAL VALUE ANDREW DAVID BRYANT SPRING 2013 A thesis submitted in partial fulfillment of the requirements for baccalaureate degrees in Mathematics and Economics with honors in Economics Reviewed and approved* by the following: Edward Coulson Professor of Economics Thesis Supervisor David Shapiro Professor of Economics Honors Adviser * Signatures are on file in the Schreyer Honors College. ABSTRACT The movement of superstar players in the National Basketball Association from small-market teams to big-market teams has become a prominent issue. This was evident during the recent lockout, which resulted in new league policies designed to hinder this flow of talent. The most notable example of this superstar migration was LeBron James’ move from the Cleveland Cavaliers to the Miami Heat. There has been much discussion about the impact on the two franchises directly involved in this transaction. However, the indirect impact on the other 28 teams in the league has not been discussed much. This paper attempts to examine this impact by analyzing the effect that home market size has on the superstar externality that Hausman & Leonard discovered in their 1997 paper. A road attendance model is constructed for the 2008-09 to 2011-12 seasons to compare LeBron’s “superstar effect” in Cleveland versus his effect in Miami. An increase of almost 15 percent was discovered in the LeBron superstar variable, suggesting that the move to a bigger market positively affected LeBron’s fan appeal. -

P18 3.E$S 4 Layout 1

THURSDAY, MAY 11, 2017 SPORTS India can be the next China for NBA: Top official NEW DELHI: While China is the National said on Tuesday. “With the availability of down to the D-League where he now games on their platform. We have an why they picked the Asian neighbors, Basketball Association’s biggest market these games and these competitions represents Texas Legends. India website now with localized con- also the world’s two most populous outside the United States, NBA Deputy from around the world, I think more and “There is a strong culture and history tent and we have extensive grassroots nations, Tatum said: “We have 113 play- Commissioner Mark Tatum has told more the sporting culture is starting to of playing the game of basketball in development programs which has ers from 41 different countries and terri- Reuters that India is ripe to grow the change. “Now is the time for a sport like China. What we are doing now is we’re reached six million kids and trained tories and not one from China, not one game as its changing sporting culture the NBA, a league like the NBA to contin- establishing that here in India. We really 5,000 coaches.” from India. It’s not that passion for the begins to offer its 1.3 billion people ue to grow our business here.” do believe that India will be the next All India needs now is its own Yao game is not there. In China 300 million alternatives to cricket. Speaking in an Basketball goes back 100 years in China for the NBA,” Tatum said. -

Graphical Model for Baskeball Match Simulation

Graphical Model for Baskeball Match Simulation Min-hwan Oh, Suraj Keshri, Garud Iyengar Columbia University New York, NY, USA, 10027 [email protected], [email protected] [email protected] Abstract Conventional approaches to simulate matches have ignored that in basketball the dynamics of ball movement is very sensitive to the lineups on the court and unique identities of players on both offense and defense sides. In this paper, we propose the simulation infrastructure that can bridge the gap between player identity and team level network. We model the progression of a basketball match using a probabilistic graphical model. We model every touch and event in a game as a sequence of transitions between discrete states. We treat the progression of a match as a graph, where each node is a network structure of players on the court, their actions, events, etc., and edges denote possible moves in the game flow. Our results show that either changes in the team lineup or changes in the opponent team lineup significantly affects the dynamics of a match progression. Evaluation on the match data for the 2013-14 NBA season suggests that the graphical model approach is appropriate for modeling a basketball match. 1 Introduction Predicting the outcomes of professional sports events is one of the most popular practices in the sports media, fan communities and, of course, sport betting related industries. Predictions range from human prediction to statistical analysis of historical data. In recent years, basketball, specifically the NBA, has received much attention as a domain of analytics with the advent of player tracking data. -

National Basketball Association

NATIONAL BASKETBALL ASSOCIATION OFFICIAL SCORER'S REPORT FINAL BOX Wednesday, October 25, 2017 AmericanAirlines Arena, Miami, FL Officials: #13 Monty McCutchen, #3 Nick Buchert, #68 Jacyn Goble Game Duration: 2:15 Attendance: 19600 (Sellout) VISITOR: San Antonio Spurs (4-0) POS MIN FG FGA 3P 3PA FT FTA OR DR TOT A PF ST TO BS +/- PTS 1 Kyle Anderson F 27:16 4 8 0 0 4 6 1 9 10 2 1 1 0 0 5 12 12 LaMarcus Aldridge F 38:09 12 20 1 1 6 7 1 6 7 1 4 2 2 1 16 31 16 Pau Gasol C 19:00 5 8 0 1 3 4 1 8 9 0 2 1 3 1 1 13 14 Danny Green G 34:40 6 7 3 4 0 0 1 6 7 3 3 0 1 1 20 15 5 Dejounte Murray G 24:19 0 6 0 0 0 0 1 2 3 3 3 0 1 0 9 0 22 Rudy Gay 26:29 6 8 1 2 9 11 2 1 3 4 0 2 3 0 16 22 8 Patty Mills 25:55 1 4 1 2 0 0 0 1 1 4 1 0 3 0 3 3 20 Manu Ginobili 21:48 6 12 2 5 0 0 0 3 3 1 2 0 0 0 9 14 11 Bryn Forbes 03:06 0 0 0 0 0 0 0 0 0 0 1 0 0 0 -6 0 3 Brandon Paul 19:18 2 3 2 2 1 2 1 0 1 0 3 0 0 0 12 7 42 Davis Bertans DNP - Coach's decision 77 Joffrey Lauvergne NWT - Injury/Illness - Sprained Right Ankle 4 Derrick White DNP - Coach's decision 240:00 42 76 10 17 23 30 8 36 44 18 20 6 13 3 17 117 55.3% 58.8% 76.7% TM REB: 7 TOT TO: 13 (17 PTS) HOME: MIAMI HEAT (2-2) POS MIN FG FGA 3P 3PA FT FTA OR DR TOT A PF ST TO BS +/- PTS 0 Josh Richardson F 33:00 1 8 0 4 4 4 2 3 5 3 6 3 3 0 -12 6 16 James Johnson F 36:01 8 14 1 3 4 4 0 9 9 4 4 1 4 0 -20 21 13 Bam Adebayo C 19:35 2 6 0 0 0 0 1 7 8 0 3 0 0 1 -12 4 11 Dion Waiters G 39:38 6 15 2 7 3 4 0 2 2 5 1 0 0 0 -10 17 7 Goran Dragic G 37:23 9 16 2 4 0 0 1 3 4 1 2 1 1 0 -12 20 8 Tyler Johnson 33:16 7 13 3 6 6 6 0 0 0 3 2 1 0 1 -7 23 9 Kelly Olynyk 12:07 2 3 1 1 0 0 0 2 2 1 2 0 1 0 2 5 20 Justise Winslow 22:24 2 5 0 1 0 0 0 1 1 1 3 0 0 0 -11 4 2 Wayne Ellington 06:18 0 0 0 0 0 0 0 1 1 1 2 0 0 0 -1 0 15 Okaro White 00:18 0 0 0 0 0 0 0 0 0 0 0 0 0 0 -2 0 40 Udonis Haslem DNP - Coach's decision 25 Jordan Mickey DNP - Coach's decision 12 Matt Williams Jr. -

SOUTHERN at ARKANSAS 28 Sat NORTH TEXAS SECN Plus W 69 54 Fayetteville, Ark

2020-21 SCHEDULE / RESULTS NOVEMBER 2-0 25 Wed MISSISSIPPI VALLEY STATE SECN Plus W 142 62 Fayetteville, Ark. • Bud Walton Arena/Nolan Richardson Court GAME 5: SOUTHERN AT ARKANSAS 28 Sat NORTH TEXAS SECN Plus W 69 54 Fayetteville, Ark. • Bud Walton Arena/Nolan Richardson Court Dec. 9, 2020 • Wednesday • 7:00 pm • Fayetteville, Ark. • Bud Walton Arena (19,200)/Nolan Richardson Court DECEMBER (All TIMES CT) ARKANSAS RAZORBACKS OVERALL 4-0 SEC 0-0 SERIES INFO: 2 Wed UT ARLINGTON SEC Network W 72 60 Eric Musselman (San Diego ‘87) at UA: 24-12 (2nd) Collegiate: 134-46 (6th) OVERALL .......ARK Leads .............2-0 Fayetteville, Ark. • Bud Walton Arena/Nolan Richardson Court Home: ............ARK Leads ................... 1-0 Away: .............ARK Leads .....................-- 5 Sat LIPSCOMB SECN Plus W 86 50 Neutral: .........ARK Leads ................... 1-0 Fayetteville, Ark. • Bud Walton Arena/Nolan Richardson Court SOUTHERN JAGUARS OVERALL 0-2 SWAC 0-0 Sean Woods (Kentucky ‘92) at SU: 17-41 (3rd) Overall: 151-192 (12th) 8 Tues at Tulsa ESPN+ Postponed SERIES HISTORY: Tulsa, Okla. • Donald W. Reynolds Center 9 Wed SOUTHERN SECN Plus 7:00 pm SEC NETWORK 11/29/85 W 76-75 (PB) N Fayetteville, Ark. • Bud Walton Arena/Nolan Richardson Court Brett Dolan (PxP) 11/13/15 W 86-68 H 12 Sat CENTRAL ARKANSAS SECN Plus 7:00 pm Manuale Watkins (Analyst) Fayetteville, Ark. • Bud Walton Arena/Nolan Richardson Court 20 Sun ORAL ROBERTS SEC Network 2:00 pm RAZORBACK SPORTS NETWORK SATELLITE RADIO Fayetteville, Ark. • Bud Walton Arena/Nolan Richardson Court Chuck Barrett (PxP) XM: TBA • Sirius: -- 22 Tues ABILENE CHRISTIAN SECN Plus 7:00 pm Matt Zimmerman (Analyst) Online Channel: TBA Fayetteville, Ark. -

2010-11 Louisiana Men's Basketball Record Book

2010-11 Louisiana Men’s Basketball Record Book 2010-11 Louisiana Basketball Record Book year in review 2009-10 2009-10 SEASON AT A GLANCE >>> RECORD ALL HOME AWAY NEUT >> UL posted double-digit SBC on-court wins for the 12th combined 112 games missed to injury. ALL GAMES 13-17 10-4 3-12 0-1 time in 19 seasons. >> Of Louisiana’s 17 losses, 12 were by single digits. During CONFERENCE 10-8 9-0 1-8 0-0 >> For only the second time since joining the league (1991- a key stretch in the Sun Belt schedule, the Cajuns lost three NON-CONFERENCE 3-9 3-4 0-5 0-1 92), Louisiana finished undefeated at the Cajundome in games by a combined eight points. >> Louisiana completed the sixth season under the Sun Belt play with a 9-0 mark. Only once before had UL >> The Cajuns defense improved, holding opponents to direction of former head coach Robert Lee, who was not finished perfect at the ‘Dome in Sun Belt play (7-0 in 2001- 67.5 points per game – three points lower than the 2008- retained at the end of the season. 02). 09 average. >> For the first time since the 1993-94 season, a member >> After the turn of the calendar year UL limited 12 of 17 >> Four seniors ended their career in 2009-10. Corey of the Ragin’ Cajuns squad was named Sun Belt Player of opponents below 70 points (five under 60). Opponents Bloom, Tyren Johnson, Willie Lago and Lamar Roberson the Year. Tyren Johnson collected the award. -

2019-20 Horizon League Men's Basketball

2019-20 Horizon League Men’s Basketball Horizon League Players of the Week Final Standings November 11 .....................................Daniel Oladapo, Oakland November 18 .................................................Marcus Burk, IUPUI Horizon League Overall November 25 .................Dantez Walton, Northern Kentucky Team W L Pct. PPG OPP W L Pct. PPG OPP December 2 ....................Dantez Walton, Northern Kentucky Wright State$ 15 3 .833 81.9 71.8 25 7 .781 80.6 70.8 December 9 ....................Dantez Walton, Northern Kentucky Northern Kentucky* 13 5 .722 70.7 65.3 23 9 .719 72.4 65.3 December 16 ......................Tyler Sharpe, Northern Kentucky Green Bay 11 7 .611 81.8 80.3 17 16 .515 81.6 80.1 December 23 ............................JayQuan McCloud, Green Bay December 31 ..................................Loudon Love, Wright State UIC 10 8 .556 70.0 67.4 18 17 .514 68.9 68.8 January 6 ...................................Torrey Patton, Cleveland State Youngstown State 10 8 .556 75.3 74.9 18 15 .545 72.8 71.2 January 13 ........................................... Te’Jon Lucas, Milwaukee Oakland 8 10 .444 71.3 73.4 14 19 .424 67.9 69.7 January 20 ...........................Tyler Sharpe, Northern Kentucky Cleveland State 7 11 .389 66.9 70.4 11 21 .344 64.2 71.8 January 27 ......................................................Marcus Burk, IUPUI Milwaukee 7 11 .389 71.5 73.9 12 19 .387 71.5 72.7 February 3 ......................................... Rashad Williams, Oakland February 10 ........................................