Introduction to Reproducible Science in R

Recommended publications

Linux Fundamentals (GL120) U8583S

Course data sheet: Linux Fundamentals (GL120) U8583S

This is a challenging course that focuses on the fundamental tools and concepts of Linux and Unix. Students gain proficiency using the command line. Beginners develop a solid foundation in Unix, while advanced users discover patterns and fill in gaps in their knowledge. The course material is designed to provide extensive hands-on experience. Topics include basic file manipulation; basic and advanced filesystem features; I/O redirection and pipes; text manipulation and regular expressions; managing jobs and processes; vi, the standard Unix editor; automating tasks with shell scripts; managing software; secure remote administration; and more.

HPE course number: U8583S
Course length: 5 days
Delivery mode: ILT, vILT

Prerequisites
Students should be comfortable with computers. No familiarity with Linux or other Unix operating systems is required.

Supported distributions
• Red Hat Enterprise Linux 7
• SUSE Linux Enterprise 12
• Ubuntu 16.04 LTS

Why HPE Education Services?
• IDC MarketScape leader 5 years running for IT education and training*
• Recognized by IDC for leading with global coverage, unmatched technical expertise, and targeted education consulting services*
• Key partnerships with industry leaders OpenStack®, VMware®, Linux®, Microsoft®, ITIL, PMI, CSA, and SUSE
• Complete continuum of training delivery options: self-paced eLearning, custom education consulting, traditional classroom, video on-demand -

Research Computing and Cyberinfrastructure Team

Research Computing and CyberInfrastructure team
Working with the Linux Shell on the CWRU HPC
31 January 2019, 7 February 2019
Presenter: Emily Dragowsky
KSL Data Center
RCCI Team: Roger Bielefeld, Mike Warfe, Hadrian Djohari, Brian Christian, Emily Dragowsky, Jeremy Fondran, Cal Frye, Sanjaya Gajurel, Matt Garvey, Theresa Griegger, Cindy Martin, Sean Maxwell, Jeno Mozes, Nasir Yilmaz, Lee Zickel

Preface: Prepare your environment
• User account

    # .bashrc
    ## cli essentials
    if [ -t 1 ]
    then
        bind '"\e[A": history-search-backward'
        bind '"\e[B": history-search-forward'
        bind '"\eOA": history-search-backward'
        bind '"\eOB": history-search-forward'
    fi

This is too useful to pass up!

Working with Linux
• Preamble
• Intro Session Linux Review: Finding our way
• Files & Directories: Sample work flow
• Shell Commands
• Pipes & Redirection
• Scripting Foundations
• Shell & environment variables
• Control Structures
• Regular expressions & text manipulation
• Recap & Look Ahead

Rider Cluster Components
[Diagram: rider.case.edu and ondemand.case.edu behind the University Firewall; admin nodes, head nodes, SLURM master, resource manager, Science DMZ, data transfer nodes, disk storage, batch nodes, GPU nodes, SMP nodes]

Running a job: where is it? (slide from Hadrian Djohari)

Storing Data on the HPC (table from Nasir Yilmaz)

How data moves across campus
• Buildings on campus are each assigned to a zone. Data connections go from every building to the distribution switch at the center of the zone and from there to the data centers at Kelvin Smith Library and Crawford Hall.
slide -

Bash Guide for Beginners

Bash Guide for Beginners
Machtelt Garrels, Garrels BVBA <tille wants no spam _at_ garrels dot be>
Version 1.11, last updated 20081227 Edition

Table of Contents
Introduction
    1. Why this guide?
    2. Who should read this book?
    3. New versions, translations and availability
    4. Revision History
    5. Contributions
    6. Feedback
    7. Copyright information
    8. What do you need?
    9. Conventions used in this -

Linux Cheat Sheet

Name: Bash CheatSheet
A little overview of the Bash basics
Usage: A Helpful Guide
Author: J. Le Coupanec
Date: 2014/11/04
Edited: 2015/8/18 – Michael Stobb

0. Shortcuts.

    CTRL+A  # moves to beginning of line
    CTRL+B  # moves backward one character
    CTRL+C  # halts the current command
    CTRL+D  # deletes the character under the cursor, or logs out of the current session if the line is empty
    CTRL+E  # moves to end of line
    CTRL+F  # moves forward one character
    CTRL+G  # aborts the current editing command and rings the terminal bell

1.1. File Commands.

    ls                           # lists your files
    ls -l                        # lists your files in 'long format'
    ls -a                        # lists all files, including hidden files
    ln -s <filename> <link>      # creates symbolic link to file
    touch <filename>             # creates or updates your file
    cat > <filename>             # places standard input into file
    more <filename>              # shows the first part of a file (q to quit)
    head <filename>              # outputs the first 10 lines of file
    tail <filename>              # outputs the last 10 lines of file (-f too)
    emacs <filename>             # lets you create and edit a file
    mv <filename1> <filename2>   # moves a file
    cp <filename1> <filename2>   # copies a file
    rm <filename>                # removes a file
    diff <filename1> <filename2> # compares files, and shows where they differ
    wc <filename>                # tells you how many lines and words there are
    chmod -options <filename>    # lets you change the permissions on files
    gzip <filename>              # compresses files
    gunzip <filename>            # uncompresses files compressed by gzip
    gzcat <filename>             # -

Cooper (2007) Advanced Bash Scripting Guide

Advanced Bash-Scripting Guide
An in-depth exploration of the art of shell scripting
Mendel Cooper <[email protected]>
5.1, 10 November 2007

Revision History
Revision 4.3, 29 Apr 2007, revised by: mc. 'INKBERRY' release: Minor Update.
Revision 5.0, 24 Jun 2007, revised by: mc. 'SERVICEBERRY' release: Major Update.
Revision 5.1, 10 Nov 2007, revised by: mc. 'LINGONBERRY' release: Minor Update.

This tutorial assumes no previous knowledge of scripting or programming, but progresses rapidly toward an intermediate/advanced level of instruction ... all the while sneaking in little snippets of UNIX® wisdom and lore. It serves as a textbook, a manual for self-study, and a reference and source of knowledge on shell scripting techniques. The exercises and heavily-commented examples invite active reader participation, under the premise that the only way to really learn scripting is to write scripts. This book is suitable for classroom use as a general introduction to programming concepts.

The latest update of this document, as an archived, bzip2-ed "tarball" including both the SGML source and rendered HTML, may be downloaded from the author's home site. A pdf version is also available (pdf mirror site). See the change log for a revision history.

Dedication
For Anita, the source of all the magic

Table of Contents
Chapter 1. Why Shell Programming?
Chapter 2. Starting Off With a Sha-Bang -

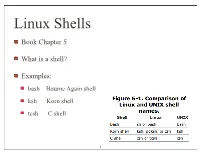

Linux Shells Book Chapter 5

Linux Shells Book Chapter 5

What is a shell?
Examples:
    bash   Bourne Again shell
    ksh    Korn shell
    tcsh   C shell

Linux Shells
Linux default shell: /bin/bash
How do I know what shell I am running?
    echo $SHELL
    env

Shell Operations
Shell invocation sequence:
1. read special startup file containing initialization info
2. display prompt and wait for user command
3. execute command: if “end of file”, exit shell; otherwise execute command entered

Shell Operations
Shell command examples:
    ls
    ps -ef | sort | ul -tdumb | lp
“\” serves as line extension character, e.g.:
    echo this is a long command that does \
    not fit in one line

Commands - executables
Where are the shell commands?
Most commands invoke utility programs: the shell executes an executable stored in the file system, e.g., ls (/bin/ls).

Commands - built-in
Where are the shell commands?
Other commands are built-in, e.g., echo [option]... [string], which displays a line of text, and cd.
bash built-ins: man cd outputs
    bash, :, ., [, alias, bg, bind, break, builtin, cd, command, compgen, complete, continue, declare, dirs, disown, echo, enable, eval, exec, exit, export, fc, fg, getopts, hash, help, history, jobs, kill, let, local, logout, popd, printf, pushd, pwd, read, readonly, return, set, shift, shopt, source, suspend, test, times, trap, type, typeset, ulimit, umask, unalias, unset, wait - bash built-in commands, see bash(1)

Shell Metacharacters

Redirection
Output Redirection: store output of process in file
    echo hello world > file      writes string into file
    echo more words >> file      appends string to file
Input Redirection -

Kamiak Cheat Sheet

Kamiak Cheat Sheet

Logging in to Kamiak
    ssh [email protected]
    ssh -X [email protected]                       X11 graphics

Transferring Files to and from Kamiak
From your laptop, not logged into Kamiak
    scp -r myFile [email protected]:~              Copy to Kamiak
    scp -r [email protected]:~/myFile .            Copy from Kamiak
    rsync -ravx myFile/ [email protected]:~/myFile Synchronize

Linux Commands
    cd                     Go to home directory
    cd ..                  Go up one level (.. is parent, . is current)
    cd ~/myPath            Go to path relative to home (~ is home)
    ls                     List members of current directory
    pwd                    Show path of current directory
    mkdir -pv myFolder     Create a directory (folder is synonymous with directory)
    cp -r -i myFrom myTo   Copy file, -r for entire folder, -i prompt before overwrite
    mv -i myFrom myTo      Move file or folder (-i to prompt before overwrite)
    rm -i myFile           Delete file (-i to prompt before deleting)
    rm -r -I myFolder      Delete folder (-I prompt if deleting more than 3 files)
    rm -r -f myFolder      Delete entire folder, without asking
    rmdir myFolder         Delete folder only if empty
    more myFile            Display text file, one page at a time
    cat myFile             Display entire file
    cat myFile*            Matches all files beginning with myFile
    du -hd 1 .             See disk space on folder
    df -h .                See disk space on volume
    man cp                 Manual page for command
    Ctl-c                  Kill current command
    Ctl-z                  Suspend current command
    bg                     Run suspended command in background
    fg                     Run suspended command in foreground
    disown -h              Disconnect from terminal

hpc.wsu.edu/cheat-sheet, July 8, 2021
hpc.wsu.edu/training/slides
hpc.wsu.edu/training/follow-along

Startup and -

What's a Script?

Linux shell scripting – “Getting started”*
David Morgan
*based on chapter by the same name in Classic Shell Scripting by Robbins and Beebe

What’s a script?
Text file containing commands executed as a unit. “Command” means a body of code from somewhere. From where?
– the alias list, in the shell’s memory
– the keywords, embedded in the shell
– the functions that are in shell memory
– the builtins, embedded in the shell code itself
– a “binary” file, outboard to the shell

Precedence of selection for execution
1. aliases
2. keywords (a.k.a. reserved words)
3. functions
4. builtins
5. files (binary executable and script)
   – hash table
   – PATH

Keywords (a.k.a. reserved words)
RESERVED WORDS: Reserved words are words that have a special meaning to the shell. The following words are recognized as reserved when unquoted and either the first word of a simple command … or the third word of a case or for command:
    ! case do done elif else esac fi for function if in select then until while { } time [[ ]]
(bash man page)

bash builtin executables
    source alias bg bind break builtin cd caller command compgen complete continue declare typeset dirs disown echo enable eval exec exit export fc fg getopts hash help history jobs kill let local logout popd printf pushd pwd read readonly return set shift shopt suspend test times trap type ulimit umask unalias unset wait
* Code for a bash builtin resides in file /bin/bash, along with the rest of the builtins plus the shell program as a whole. Code for a utility like ls resides in its own dedicated file /bin/ls, which holds nothing else. -
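The precedence list in this excerpt (functions are selected before builtins) can be seen with a small experiment. This is only an illustrative sketch, not taken from the slides: it assumes a POSIX-style shell, and the variable names `first` and `second` are made up for the example.

```shell
# Define a function with the same name as the builtin `pwd`.
# Functions are looked up before builtins, so the function wins.
pwd() { echo "pwd function called"; }

first=$(pwd)            # runs the function, not the builtin
second=$(command pwd)   # `command` skips function lookup and runs the real pwd

echo "plain pwd:    $first"
echo "command pwd:  $second"

# Remove the function so that `pwd` resolves to the builtin again.
unset -f pwd
```

The first line printed shows the function's message; the second shows the current directory, because `command` bypasses shell functions during lookup.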

Bash Guide for Beginners

Bash Guide for Beginners
Machtelt Garrels, Xalasys.com <tille wants no spam _at_ xalasys dot com>
Version 1.8, last updated 20060315 Edition

Table of Contents
Introduction
    1. Why this guide?
    2. Who should read this book?
    3. New versions, translations and availability
    4. Revision History
    5. Contributions
    6. Feedback
    7. Copyright information
    8. What do you need?
    9. Conventions used in this -

Learning the bash Shell, 3rd Edition

Learning the bash Shell, 3rd Edition

Table of Contents
Preface
    bash Versions
    Summary of bash Features
    Intended Audience
    Code Examples
    Chapter Summary
    Conventions Used in This Handbook
    We'd Like to Hear from You
    Using Code Examples
    Safari Enabled
    Acknowledgments for the First Edition
    Acknowledgments for the Second Edition
    Acknowledgments for the Third Edition
1. bash Basics
    1.1. What Is a Shell?
    1.2. Scope of This Book
    1.3. History of UNIX Shells
        1.3.1. The Bourne Again Shell
        1.3.2. Features of bash
    1.4. Getting bash
    1.5. Interactive Shell Use
        1.5.1. Commands, Arguments, and Options
    1.6. Files
        1.6.1. Directories
        1.6.2. Filenames, Wildcards, and Pathname Expansion
        1.6.3. Brace Expansion
    1.7. Input and Output
        1.7.1. Standard I/O
        1.7.2. I/O Redirection
        1.7.3. Pipelines
    1.8. Background Jobs
        1.8.1. Background I/O
        1.8.2. Background Jobs and Priorities
    1.9. Special Characters and Quoting
        1.9.1. Quoting
        1.9.2. Backslash-Escaping
        1.9.3. Quoting Quotation Marks
        1.9.4. Continuing Lines
        1.9.5. Control Keys
    1.10. Help
2. Command-Line Editing
    2.1. Enabling Command-Line Editing
    2.2. The History List
    2.3. emacs Editing Mode
        2.3.1. Basic Commands
        2.3.2. Word Commands
        2.3.3. Line Commands
        2.3.4. Moving Around in the History List
        2.3.5. Textual Completion
        2.3.6. Miscellaneous Commands
    2.4. vi Editing Mode
        2.4.1. -

1.5 Declaring Pre-Tasks

Invoke
Release Jul 09, 2021

Contents
1 Getting started
    1.1 Getting started
2 The invoke CLI tool
    2.1 inv[oke] core usage
3 Concepts
    3.1 Configuration
    3.2 Invoking tasks
    3.3 Using Invoke as a library
    3.4 Loading collections
    3.5 Constructing namespaces
    3.6 Testing Invoke-using codebases
    3.7 Automatically responding to program output
4 API
    4.1 __init__
    4.2 collection
    4.3 config
    4.4 context
    4.5 exceptions
    4.6 executor
    4.7 loader
    4.8 parser
    4.9 program
    4.10 runners
    4.11 tasks
    4.12 terminals
    4.13 util -

Bash Reference Manual Reference Documentation for Bash Edition 2.5, for Bash Version 2.05

Bash Reference Manual
Reference Documentation for Bash
Edition 2.5, for Bash Version 2.05. Mar 2001
Chet Ramey, Case Western Reserve University
Brian Fox, Free Software Foundation

Copyright © 1991-1999 Free Software Foundation, Inc. Permission is granted to make and distribute verbatim copies of this manual provided the copyright notice and this permission notice are preserved on all copies. Permission is granted to copy and distribute modified versions of this manual under the conditions for verbatim copying, provided that the entire resulting derived work is distributed under the terms of a permission notice identical to this one. Permission is granted to copy and distribute translations of this manual into another language, under the above conditions for modified versions, except that this permission notice may be stated in a translation approved by the Free Software Foundation.

1 Introduction

1.1 What is Bash?

Bash is the shell, or command language interpreter, for the GNU operating system. The name is an acronym for the 'Bourne-Again SHell', a pun on Stephen Bourne, the author of the direct ancestor of the current Unix shell /bin/sh, which appeared in the Seventh Edition Bell Labs Research version of Unix. Bash is largely compatible with sh and incorporates useful features from the Korn shell ksh and the C shell csh. It is intended to be a conformant implementation of the IEEE POSIX Shell and Tools specification (IEEE Working Group 1003.2). It offers functional improvements over sh for both interactive and programming use. While the GNU operating system provides other shells, including a version of csh, Bash is the default shell.