Prediction of a Typhoon Track Using a Generative Adversarial Network And

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Member Report

MEMBER REPORT ESCAP/WMO Typhoon Committee 10th Integrated Workshop REPUBLIC OF KOREA 26-29 October 2015 Kuala Lumpur, Malaysia CONTENTS I. Overview of tropical cyclones which have affected/impacted Member’s area since the last Typhoon Committee Session (as of 10 October) II. Summary of progress in Key Result Areas (1) Starting the Tropical Depression Forecast Service (2) Typhoon Post-analysis procedure in KMA (3) Capacity Building on the Typhoon Analysis and Forecast (4) Co-Hosting the 8th China-Korea Joint Workshop on Tropical Cyclones (5) The web-based portal to provide the products of seasonal typhoon activity outlook for the TC Members (POP5) (6) Implementation of Typhoon Analysis and Prediction System (TAPS) in the Thai Meteorological Department and Lao PDR Department of Meteorology and Hydrology (POP4) (7) Development and application of multi-model ensemble technique for improving tropical cyclone track and intensity forecast (8) Improvement in TC analysis using automated ADT and SDT operationally by COMS data and GPM microwave data in NMSC/KMA (9) Typhoon monitoring using ocean drifting buoys around the Korea (10) Case study of typhoon CHAN-HOM using Yong-In Test-bed dual-polarization radar in Korea (11) Achievements according to Extreme Flood Forecasting System (AOP2) (12) Technical Report on Assessment System of Flood Control Measures (ASFCM) (13) Progress on Extreme Flood Management Guideline (AOP6) (14) Flood Information Mobile Application (15) The 4th Meeting and Workshop of TC WGH and WGH Homepage (16) 2015 Northern Mindanao Project in Philippines by NDMI and PAGASA (17) Upgrade of the functions in Typhoon Committee Disaster Information System (TCDIS) (18) The 9th WGDRR Annual Workshop (19) 2015 Feasibility Studies to disseminate Disaster Prevention Technology in Vietnam and Lao PDR I. -

P1.24 a Typhoon Loss Estimation Model for China

P1.24 A TYPHOON LOSS ESTIMATION MODEL FOR CHINA Peter J. Sousounis*, H. He, M. L. Healy, V. K. Jain, G. Ljung, Y. Qu, and B. Shen-Tu AIR Worldwide Corporation, Boston, MA 1. INTRODUCTION Nowhere else in the world do tropical cyclones (TCs) develop more frequently than in the Northwest Pacific Basin. Nearly thirty TCs are spawned each year, 20 of which reach hurricane or typhoon status (cf. Fig. 1). Five of these reach super typhoon status, with windspeeds over 130 kts. In contrast, the North Atlantic typically generates only ten TCs, seven of which reach hurricane status. Additionally, there is no other country in the world where TCs strike with more frequency than in China. Nearly ten landfalling TCs occur in a typical year, with one to two additional by-passing storms coming close enough to the coast to [...] the two. Because of its wind intensity (135 mph maximum sustained winds), it has been compared to Hurricane Katrina 2005. But Saomai was short lived, and although it made landfall as a strong Category 4 storm and generated heavy precipitation, it weakened quickly. Still, economic losses were ~12 B RMB (~1.5 B USD). In contrast, Bilis, which made landfall a month earlier just south of where Saomai hit, was actually only tropical storm strength at landfall with max sustained winds of 70 mph. Bilis weakened further still upon landfall but turned southwest and traveled slowly over a period of five days across Hunan, Guangdong, Guangxi and Yunnan Provinces. It generated copious amounts of precipitation, with large areas receiving more than 300 mm. -

Typhoon Neoguri Disaster Risk Reduction Situation Report DRR Sitrep 2014‐001 ‐ Updated July 8, 2014, 10:00 CET

Typhoon Neoguri Disaster Risk Reduction Situation Report DRR sitrep 2014‐001 ‐ updated July 8, 2014, 10:00 CET Summary Report Ongoing typhoon situation The storm had lost strength early Tuesday July 8, going from the equivalent of a Category 5 hurricane to a Category 3 on the Saffir‐Simpson Hurricane Wind Scale, which means devastating damage is expected to occur, with major damage to well‐built framed homes, snapped or uprooted trees and power outages. It is approaching Okinawa, Japan, and is moving northwest towards South Korea and the Philippines, bringing strong winds, flooding rainfall and inundating storm surge. Typhoon Neoguri is a once‐in‐a‐decade storm and Japanese authorities have extended their highest storm alert to Okinawa's main island. The Global Assessment Report (GAR) 2013 ranked Japan as first among countries in the world for both annual and maximum potential losses due to cyclones. It is calculated that Japan loses on average up to $45.9 Billion due to cyclonic winds every year and that it can lose a probable maximum loss of $547 Billion. What are the most devastating cyclones to hit Okinawa in recent memory? There have been 12 damaging cyclones to hit Okinawa since 1945. Sustaining winds of 81.6 knots (151 kph), Typhoon "Winnie" caused damages of $5.8 million in August 1997. Typhoon "Bart", which hit Okinawa in October 1999, caused damages of $5.7 million. It sustained winds of 126 knots (233 kph). The most damaging cyclone to hit Japan was Super Typhoon Nida (reaching a peak intensity of 260 kph), which struck Japan in 2004, killing 287, affecting 329,556 people, injuring 1,483, and causing damages amounting to $15 Billion. -

TIROS V VIEWS FINAL STAGES in the LIFE of TYPHOON SARAH AUGUST 1962 CAPT.ROBERT W

MONTHLY WEATHER REVIEW 367 TIROS V VIEWS FINAL STAGES IN THE LIFE OF TYPHOON SARAH AUGUST 1962 CAPT. ROBERT W. FETT, AWS MEMBER National Weather Satellite Center, Washington, D.C. [Manuscript Received April 18, 1963; Revised May 28, 1963] ABSTRACT Four TIROS V mosaics showing typhoon Sarah on consecutive days during the period of its decline are described. The initial development of what later became typhoon Vera is also shown. It is found that marked changes in storm intensity are reflected in corresponding changes of appearance in the cloud patterns viewed from the satellite. 1. INTRODUCTION The 3-week period from the middle of August through the first week of September 1962, was one of unusual activity for the western Pacific. No fewer than 6 typhoons developed, ran their devastating courses, and finally dissipated in mid-latitudes during this short span of time. The TIROS V meteorological satellite was in position to view many of these developments during various stages of growth from formation to final decay. This provided an unparalleled opportunity to obtain a visual record for extensive research into many of the still unresolved [...] read-out station shortly after the pictures were taken is also shown. Through cross reference from the mosaic to the nephanalysis, locations of cloud features can conveniently be determined. Photographic distortions of the pictures and mosaic presentation are also rectified on the nephanalysis. The pictures begin in the Southern Hemisphere and extend northeastward past the Philippines, over Formosa, Korea, and Japan, to the southern tip of the Kamchatka Peninsula. The predominant cloud features in the southern portion of the mosaic consist mainly of clusters of cumulonimbus with anvil tops sheared toward the west-southwest by strong upper-level east-northeasterly winds. -

Typhoon Goni

Emergency appeal Philippines: Typhoon Goni Revised Appeal n° MDRPH041; Glide n°: TC-2020-000214-PHL. To be assisted: 100,000 people. Appeal launched: 02/11/2020. Revision n° 1; issued: 13/11/2020. Appeal ends: 30/11/2022 (24 months). DREF allocated: 750,000 Swiss francs. Federation-wide funding requirements: 16 million Swiss francs for 24 months. IFRC funding requirement: 8.5 million Swiss francs. Funding gap: 8.4 million Swiss francs for secretariat component. This revised Emergency Appeal seeks a total of some 8.5 million Swiss francs (revised from CHF 3.5 million) to enable the IFRC to support the Philippine Red Cross (PRC) to deliver assistance to and support the immediate and early recovery needs of 100,000 people for 24 months, with a focus on the following areas of focus and strategies of implementation: shelter and settlements, livelihoods and basic needs, health, water, sanitation and hygiene promotion (WASH), disaster risk reduction, community engagement and accountability (CEA) as well as protection, gender and inclusion (PGI). Funding raised through the Emergency Appeal will contribute to the overall PRC response operation of CHF 16 million. The revision is based on the results of rapid assessment and other information available at this time and will be adjusted based on detailed assessments. The economy of the Philippines has been negatively impacted by the COVID-19 pandemic, with millions of people losing livelihoods due to socio-economic impacts of the pandemic. As COVID-19 continues to spread, the Philippines have kept preventive measures, including community quarantine and restriction to travel, in place. -

Chapter 7. Building a Safe and Comfortable Society

Chapter 7 Building a Safe and Comfortable Society. Section 1 Realizing a Universal Society. 1 Realizing Accessibility through a Universal Design Concept. The “Act on Promotion of Smooth Transportation, etc. of Elderly Persons, Disabled Persons, etc.” embodies the universal design concept of “freedom and convenience for anywhere and anyone”, making it mandatory to comply with “Accessibility Standards” when newly establishing various facilities (passenger facilities, various vehicles, roads, off-street parking facilities, city parks, buildings, etc.), mandatory best effort for existing facilities as well as defining a development target for the end of FY2020 under the “Basic Policy on Accessibility” to promote accessibility. Also, in accordance with the local accessibility plan created by municipalities, focused and integrated promotion of accessibility is carried out in priority development district; to increase “caring for accessibility”, by deepening the national public’s understanding and seek cooperation for the promotion of accessibility, “accessibility workshops” are hosted in which you learn to assist as well as virtually experience being elderly, disabled, etc.; these efforts serve to accelerate accessibility measures (sustained development in stages). (1) Accessibility of Public Transportation. [Figure II-7-1-1 Current Accessibility of Public Transportation (as of March 31, 2014)] In accordance with the “Act on Promotion of Smooth Transportation, etc. of Elderly Persons, Disabled -

Summary of 2014 NW Pacific Typhoon Season and Verification of Authors’ Seasonal Forecasts

Summary of 2014 NW Pacific Typhoon Season and Verification of Authors’ Seasonal Forecasts Issued: 27th January 2015 by Dr Adam Lea and Professor Mark Saunders Dept. of Space and Climate Physics, UCL (University College London), UK. Summary The 2014 NW Pacific typhoon season was characterised by activity slightly below the long-term (1965-2013) norm. The TSR forecasts over-predicted activity but mostly to within the forecast error. The Tropical Storm Risk (TSR) consortium presents a validation of their seasonal forecasts for the NW Pacific basin ACE index, numbers of intense typhoons, numbers of typhoons and numbers of tropical storms in 2014. These forecasts were issued on the 6th May, 3rd July and the 5th August 2014. The 2014 NW Pacific typhoon season ran from 1st January to 31st December. Features of the 2014 NW Pacific Season • Featured 23 tropical storms, 12 typhoons, 8 intense typhoons and a total ACE index of 273. These numbers were respectively 12%, 25%, 0% and 7% below their corresponding long-term norms. Seven out of the last eight years have now had a NW Pacific ACE index below the 1965-2013 climate norm value of 295. • The peak months of August and September were unusually quiet, with only one typhoon forming within the basin (Genevieve formed in the NE Pacific and crossed into the NW Pacific basin as a hurricane). Since 1965 no NW Pacific typhoon season has seen less than two typhoons develop within the NW Pacific basin during August and September. This lack of activity in 2014 was in part caused by an unusually strong and persistent suppressing phase of the Madden-Julian Oscillation. -
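The "percent below norm" figures quoted in this summary can be checked with a short calculation. The sketch below is only an illustration: the function name `percent_below_norm` is hypothetical, and it uses only the two ACE values stated in the excerpt (273 for 2014, 295 for the 1965-2013 norm), since the norms for storm and typhoon counts are not given here.

```python
def percent_below_norm(observed: float, norm: float) -> float:
    """Return how far `observed` falls below `norm`, as a percentage of `norm`."""
    return (norm - observed) / norm * 100.0


# Values taken from the excerpt above; the other norms are not stated there.
ace_2014 = 273   # total ACE index for the 2014 NW Pacific season
ace_norm = 295   # 1965-2013 climate norm for the ACE index

ace_deficit = percent_below_norm(ace_2014, ace_norm)
print(f"ACE index deficit: {ace_deficit:.1f}% below norm")
```

Rounded to a whole number, the result agrees with the 7% quoted for the ACE index.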

Observational Analysis of Tropical Cyclone Formation Associated with Monsoon Gyres

APRIL 2013 WU ET AL. 1023 Observational Analysis of Tropical Cyclone Formation Associated with Monsoon Gyres LIGUANG WU, HUIJUN ZONG, AND JIA LIANG Key Laboratory of Meteorological Disaster of Ministry of Education, Nanjing University of Information Science and Technology, Nanjing, and State Key Laboratory of Severe Weather, Chinese Academy of Meteorological Sciences, Beijing, China (Manuscript received 6 April 2012, in final form 31 October 2012) ABSTRACT Large-scale monsoon gyres and the involved tropical cyclone formation over the western North Pacific have been documented in previous studies. The aim of this study is to understand how monsoon gyres affect tropical cyclone formation. An observational study is conducted on monsoon gyres during the period 2000–10, with a focus on their structures and the associated tropical cyclone formation. A total of 37 monsoon gyres are identified in May–October during 2000–10, among which 31 monsoon gyres are accompanied with the formation of 42 tropical cyclones, accounting for 19.8% of the total tropical cyclone formation. Monsoon gyres are generally located on the poleward side of the composited monsoon trough with a peak occurrence in August–October. Extending about 1000 km outward from the center at lower levels, the cyclonic circulation of the composited monsoon gyre shrinks with height and is replaced with negative relative vorticity above 200 hPa. The maximum winds of the composited monsoon gyre appear 500–800 km away from the gyre center with a magnitude of 6–10 m s⁻¹ at 850 hPa. In agreement with previous studies, the composited monsoon gyre shows enhanced southwesterly flow and convection on the south-southeastern side. -

Global Catastrophe Review – 2015

GC BRIEFING An Update from GC Analytics© March 2016 GLOBAL CATASTROPHE REVIEW – 2015 The year 2015 was a quiet one in terms of global significant insured losses, which totaled around USD 30.5 billion. Insured losses were below the 10-year and 5-year moving averages of around USD 49.7 billion and USD 62.6 billion, respectively (see Figures 1 and 2). Last year marked the lowest total insured catastrophe losses since 2009 and well below the USD 126 billion seen in 2011. The most impactful event of 2015 was the Port of Tianjin, China explosions in August, rendering estimated insured losses between USD 1.6 and USD 3.3 billion, according to the Guy Carpenter report following the event, with a December estimate from Swiss Re of at least USD 2 billion. The series of winter storms and record cold of the eastern United States resulted in an estimated USD 2.1 billion of insured losses, whereas in Europe, storms Desmond, Eva and Frank in December 2015 are expected to render losses exceeding USD 1.6 billion. Other impactful events were the damaging wildfires in the western United States, severe flood events in the Southern Plains and Carolinas and Typhoon Goni affecting Japan, the Philippines and the Korea Peninsula, all with estimated insured losses exceeding USD 1 billion. The year 2015 marked one of the strongest El Niño periods on record, characterized by warm waters in the east Pacific tropics. This was associated with record-setting tropical cyclone activity in the North Pacific basin, but relative quiet in the North Atlantic. -

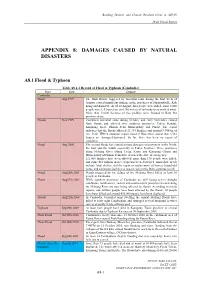

Appendix 8: Damages Caused by Natural Disasters

Building Disaster and Climate Resilient Cities in ASEAN Draft Final Report APPENDIX 8: DAMAGES CAUSED BY NATURAL DISASTERS A8.1 Flood & Typhoon

Table A8.1.1 Record of Flood & Typhoon (Cambodia)

| Place | Date | Damage |
|---|---|---|
| Cambodia | Flood, Aug 1999 | The flash floods, triggered by torrential rains during the first week of August, caused significant damage in the provinces of Sihanoukville, Koh Kong and Kam Pot. As of 10 August, four people were killed, some 8,000 people were left homeless, and 200 meters of railroads were washed away. More than 12,000 hectares of rice paddies were flooded in Kam Pot province alone. |
| | Floods, Nov 1999 | Continued torrential rains during October and early November caused flash floods and affected five southern provinces: Takeo, Kandal, Kampong Speu, Phnom Penh Municipality and Pursat. The report indicates that the floods affected 21,334 families and around 9,900 ha of rice field. IFRC's situation report dated 9 November stated that 3,561 houses are damaged/destroyed. So far, there has been no report of casualties. |
| | Flood, Aug 2000 | The second floods has caused serious damages on provinces in the North, the East and the South, especially in Takeo Province. Three provinces along Mekong River (Stung Treng, Kratie and Kompong Cham) and Municipality of Phnom Penh have declared the state of emergency. 121,000 families have been affected, more than 170 people were killed, and some $10 million in rice crops has been destroyed. Immediate needs include food, shelter, and the repair or replacement of homes, household items, and sanitation facilities as water levels in the Delta continue to fall. |

-

An Incoherent Subseasonal Pattern of Tropical Convective and Zonal Wind

An incoherent subseasonal pattern of tropical convective and zonal wind anomalies contributed to weak signals on both the Wheeler-Hendon (RMM) and CPC MJO indices during the past week. The spatial pattern of upper-level velocity potential anomalies exhibited influences from higher frequency modes, such as tropical cyclone activity over the eastern Pacific and anomalous divergence over Africa. During the past several days, however, these conflicting signals have weakened. Additionally, constructive interference of a strong equatorial Rossby Wave (ERW) and a Kelvin Wave over the eastern Indian Ocean and Maritime Continent contributed to an ongoing large area of enhanced convection. The RMM Index has responded to this evolution and is currently projecting a signal in Phase-4. Dynamical model MJO index forecasts are consistent with a rapid propagation of this signal eastward over the Maritime Continent during the upcoming week, likely due to influence from the Kelvin Wave. Beyond this period, however, the GFS weakens the MJO signal while the CFS, UKMET, and ECMWF models continue a slower eastward propagation over the western Pacific. There is uncertainty whether the enhanced convection over the Maritime Continent associated with the interactions between a more transient Kelvin Wave and the ERW will evolve into a more robust subseasonal signal on the MJO time scale. Due to the consensus among numerous dynamical models, however, impacts of MJO propagation from the Maritime Continent to the West Pacific were considered for this outlook. Tropical cyclones developed over both the eastern and western Pacific basins during the past week. Super Typhoon Neoguri, the third typhoon of 2014 and the first major cyclone, developed east of the Philippines on 3 July. -

North Pacific, on August 31

Marine Weather Review MARINE WEATHER REVIEW – NORTH PACIFIC AREA May to August 2002 George Bancroft Meteorologist Marine Prediction Center Introduction Low-pressure systems often tracked from southwest to northeast during the period, while high pressure prevailed off the west coast of the U.S. Occasionally the high pressure extended into the Bering Sea and Gulf of Alaska, forcing cyclonic systems coming off Japan or eastern Russia to turn more north or northwest or even stall. Several non-tropical lows developed storm-force winds, mainly in May and June. [...] near 18N 139E at 1200 UTC May 18. Maximum sustained winds increased from 65 kt to 120 kt in the 24-hour period ending at 0000 UTC May 19, when the center reached 17.7N 140.5E. The system was briefly a super-typhoon (maximum sustained winds of 130 kt or higher) from 0600 to 1800 UTC May 19. At 1800 UTC May 19 Hagibis attained a maximum strength of 140-kt (sustained winds), with gusts to 170 kt near 20.7N 143.2E before beginning to weaken. [...] Typhoon Chataan: Chataan appeared on MPC’s oceanic chart area just south of Japan at 0600 UTC July 10 with maximum sustained winds of 65 kt with gusts to 80 kt. Six hours later, the Tenaga Dua (9MSM) near 34N 140E reported south winds of 65 kt. By 1800 UTC July 10, Chataan weakened to a tropical storm near 35.7N 140.9E. The CSX Defender (KGJB) at that time encountered southwest winds of 55 kt and 17-meter seas (56 feet). The system