Pellet-Size Estimation of a Ferrochrome Pelletizer Circuit Using Computer Vision Techniques

Total pages: 16

File type: PDF, size: 1020 KB


Recommended publications


Ferrochrome Waste Management - Addressing Current Gaps

Ferrochrome waste management - addressing current gaps
SP du Preez (orcid.org/0000-0001-5214-3693)
Thesis submitted for the degree Doctor of Philosophy in Chemistry at the North-West University. Promoter: Prof JP Beukes. Co-promoter: Prof PG van Zyl. Graduation: May 2018.
SOLEMN DECLARATION
I, Stephanus Petrus du Preez, declare herewith that the thesis entitled "Ferrochrome waste management - addressing current gaps", which I herewith submit to the North-West University (NWU) in completion of the requirements set for the Doctor in Philosophiae in Chemistry degree, is my own work unless specifically indicated otherwise, has been text edited as required, and has not been submitted to any tertiary institution other than the NWU.
Signature of the candidate:
University number: 21220212
Signed at Potchefstroom on 20 November 2017
ACKNOWLEDGMENTS
"A sluggard's appetite is never filled, but the desires of the diligent are fully satisfied" (Proverbs 13:4)
God Almighty, thank you for the strength and perseverance to undertake each task that came across my path. Without your grace and love, I am nothing. I would sincerely like to thank and convey my most genuine gratitude to the following people for their support, assistance and guidance during the past three years. They played a vital role in the completion of my thesis and helped me to grow both academically and as a person. My supervisor Prof Paul Beukes, and co-supervisor Dr Pieter van Zyl: I am endlessly thankful for your excellent guidance, your patience, and the critical roles that both of you played in my personal and academic growth.

South African Ferroalloys Handbook 2013

Handbook H1/2013: South African Ferroalloys Handbook 2013. Directorate: Mineral Economics.
Compiled by: Ms K Ratshomo. Email: [email protected]
Picture on front cover: The Boshoek Smelter, North West Province (source: www.meraferesources.co.za)
Issued by and obtainable from: The Director: Mineral Economics, Trevenna Campus, 70 Meintjies Street, Arcadia, Pretoria 0001; Private Bag X59, Arcadia 0001. Telephone (012) 444-3531, Telefax (012) 444-3134. Website: http://www.dmr.gov.za
Department of Mineral Resources: Director-General: Dr. T Ramontja. Mineral Policy and Promotion Branch: Deputy Director-General: Mr. M Mabuza. Mineral Promotion Chief Directorate: Chief Director: Ms. S Mohale. Directorate Mineral Economics: Director: Mineral Economics: Mr. TR Masetlana; Deputy Director: Precious Metals and Ferrous Minerals: Ms. L Malebo.
This, the first edition, published in 2013. ISBN: 978-0-621-42052-4. Copyright reserved.
Disclaimer: Whereas the greatest care has been taken in the compilation of the contents of this publication, the Department of Mineral Resources does not hold itself responsible for any errors or omissions.
Table of contents: 1. Introduction; 2. South Africa's ferrous alloys overview; 3. Ferrochrome; …

Ferrochrome (FeCr)

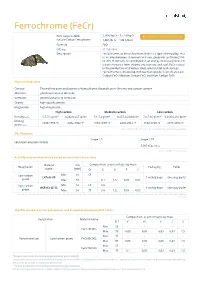

Price range in 2020 for low-carbon ferrochrome: 2.78–3.77 €/kg Cr (1.49–1.90 $/lb Cr)
Formula: FeCr. CAS no. 11114-46-8
Description: Ferrochrome, or ferrochromium (FeCr), is a type of ferroalloy, that is, an alloy of chromium and iron, generally containing 50% to 70% chromium. It is produced in an energy-intensive process in electric furnaces from chrome ore, iron ore and coal. FeCr is used in the production of stainless steel, special steel and castings. Ferrochrome is divided into three main products: low-carbon FeCr, medium-carbon FeCr and high-carbon FeCr.
Physical properties (general): The melting point and density of ferrochrome depend on its chromium and carbon content. Abrasion: good resistance to abrasion. Corrosion: good resistance to corrosion. Gravity: high specific gravity. Magnetism: high magnetism.
Density (FeCr70): high carbon 6.7–7.1 g/cm³ (0.242–0.257 lb/in³); medium carbon 7.1–7.3 g/cm³ (0.257–0.264 lb/in³); low carbon 7.3–7.35 g/cm³ (0.264–0.266 lb/in³)
Melting point (FeCr70): high carbon 1350–1650 °C (2462–3002 °F); medium carbon 1360–1600 °C (2480–2912 °F); low carbon 1580–1690 °C (2876–3074 °F)
Source: Volkert, G. & Frank, K.-D.: Die Metallurgie der Ferrolegierungen (The Metallurgy of Ferroalloys)
CO2 footprint, upstream emission factors: Scope 2*: –; Scope 3**: 5.987 t CO2/t FeCr. Source: worldsteel association
Materials actually requested, based on Metalshub transactions (size in mm; composition as percentages by mass of Cr, C, Si, P, S): low-carbon grade, size min. 10, Cr min. 65, designation LCFeCr 65, packaging 1 mt big bags, one-way pallet; max. …
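The imperial figures in the density and melting-point rows can be cross-checked against the metric ones with the exact definitions 1 in = 2.54 cm and 1 lb = 453.59237 g. The following is a minimal Python sketch. The function and constant names are illustrative and do not come from the source.

```python
# Cross-check the FeCr70 density and melting-point conversions listed above.
# Exact definitions: 1 in = 2.54 cm (so 1 in³ = 16.387064 cm³), 1 lb = 453.59237 g.
CM3_PER_IN3 = 2.54 ** 3
G_PER_LB = 453.59237


def g_cm3_to_lb_in3(density_g_cm3: float) -> float:
    """Convert a density from g/cm³ to lb/in³."""
    return density_g_cm3 * CM3_PER_IN3 / G_PER_LB


def celsius_to_fahrenheit(t_c: float) -> float:
    """Convert a temperature from °C to °F."""
    return t_c * 9 / 5 + 32


# (grade, density range in g/cm³, melting range in °C) as listed for FeCr70
FECR70 = [
    ("high carbon", (6.7, 7.1), (1350, 1650)),
    ("medium carbon", (7.1, 7.3), (1360, 1600)),
    ("low carbon", (7.3, 7.35), (1580, 1690)),
]

for grade, (d_lo, d_hi), (t_lo, t_hi) in FECR70:
    print(
        f"{grade}: {g_cm3_to_lb_in3(d_lo):.3f}-{g_cm3_to_lb_in3(d_hi):.3f} lb/in³, "
        f"{celsius_to_fahrenheit(t_lo):.0f}-{celsius_to_fahrenheit(t_hi):.0f} °F"
    )
```

Rounded to three decimals for lb/in³ and to whole degrees for °F, each printed line should reproduce the imperial values quoted for that grade.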

Influences of Alkali Fluxes on Direct Reduction of Chromite for Ferrochrome Production by D. Paktunc et al.

http://dx.doi.org/10.17159/2411-9717/2018/v118n12a9
Influences of alkali fluxes on direct reduction of chromite for ferrochrome production, by D. Paktunc*, Y. Thibault*, S. Sokhanvaran*, and D. Yu*
Synopsis
Prereduction and flux-aided direct reduction of chromite provide significant advantages in reducing energy consumption and greenhouse gas emissions during ferrochrome production. In this investigation, a comparative evaluation of the influences of several alkali fluxes was carried out based on experimental observations supplemented by advanced material characterization and thermodynamic predictions. Direct reduction of a chromite ore with alkali fluxes at 1300°C for 1 hour produced (Cr,Fe)7C3 type alloys with Cr/Fe mass ratios from 0.7 to 2.3. Among the alkali fluxes, reduction aided by NaOH resulted in a high degree (85%) of Cr metallization, with the ferrochrome alloy being Cr4.2–4.6Fe2.4–2.8C3. The formation of liquid slag, which facilitated Cr metallization, was limited by the formation of NaAlO2 between 800 and 1300°C. This, in turn, restricted the collection and transport of the charged ionic Cr species (i.e. …
… process. In comparison to the conventional smelting processes, this prereduction process lowers the overall energy consumption and greenhouse gas emissions by about one-third (Naiker, 2007). Prereduction of chromite with the use of various fluxes or additives has been the topic of many studies over at least three decades. The additives tested since 1986 include borates, NaCl, NaF, and CaF2 (Katayama, Tokuda, and Ohtani, 1986), CaF2 and NaF (Dawson and Edwards, 1986), K2CO3, CaO, SiO2, Al2O3, and MgO (van Deventer, 1988), and granite and CaF2 (Nunnington and Barcza, …
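The NaOH-aided alloy composition Cr4.2–4.6Fe2.4–2.8C3 can be related to a Cr/Fe mass ratio by weighting the atomic proportions by atomic mass. The short Python sketch below makes two assumptions: standard atomic weights (Cr 51.996, Fe 55.845 g/mol), and Cr and Fe together filling the seven metal sites of (Cr,Fe)7C3, which makes the two end-members Cr4.2Fe2.8C3 and Cr4.6Fe2.4C3. The function name is hypothetical.

```python
# Convert atomic proportions in a (Cr,Fe)7C3 carbide to a Cr/Fe mass ratio.
# Standard atomic weights in g/mol (rounded).
ATOMIC_WEIGHT = {"Cr": 51.996, "Fe": 55.845}


def cr_fe_mass_ratio(n_cr: float, n_fe: float) -> float:
    """Cr/Fe mass ratio for a formula unit with n_cr Cr atoms and n_fe Fe atoms."""
    return (n_cr * ATOMIC_WEIGHT["Cr"]) / (n_fe * ATOMIC_WEIGHT["Fe"])


# End-members of the alloy, assuming Cr + Fe = 7 per formula unit.
for n_cr in (4.2, 4.6):
    n_fe = 7 - n_cr
    ratio = cr_fe_mass_ratio(n_cr, n_fe)
    print(f"Cr{n_cr}Fe{n_fe:.1f}C3: Cr/Fe mass ratio = {ratio:.2f}")
```

Under these assumptions the NaOH-aided alloy has a Cr/Fe mass ratio of about 1.40 to 1.78. That range lies inside the 0.7 to 2.3 span reported across all the alkali fluxes.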

Low-Carbon Ferrochromium Imports Not Causing Serious Injury

United States International Trade Commission
Low-Carbon Ferrochromium: Report to the President on Investigation No. TA-201-20 Under Section 201 of the Trade Act of 1974. USITC Publication 825, Washington, D.C., July 1977.
Commissioners: Daniel Minchew, Chairman; Joseph O. Parker, Vice Chairman; George M. Moore; Catherine Bedell; Italo H. Ablondi. Kenneth R. Mason, Secretary to the Commission.
This report was principally prepared by Nicholas C. Talerico, Minerals and Metals Division, and James S. Kennedy, Minerals and Metals Division, assisted by Marvin C. Claywell, Operations Division; N. Timothy Yaworski, Office of the General Counsel; and Charles W. Ervin, Supervisory Investigator.
Address all communications to United States International Trade Commission, Washington, D.C. 20436.
For release at will. Contact: Kenneth R. Mason, (202) 523-0161. July 11, 1977. USITC 77-052.
LOW-CARBON FERROCHROMIUM IMPORTS NOT CAUSING SERIOUS INJURY
The United States International Trade Commission today reported to the President, pursuant to the provisions of section 201 of the Trade Act of 1974, that imports of low-carbon ferrochromium are not causing serious injury or the threat thereof to the relevant U.S. industry. Commissioners Daniel Minchew, Catherine Bedell, and Italo H. Ablondi formed the majority with their negative findings. Commissioner George M. Moore found in the affirmative that increased imports of low-carbon ferrochromium were a substantial cause of the threat of serious injury to the domestic industry. Commissioner Joseph O. Parker did not participate in the decision. There are three U.S. producers of low-carbon ferrochromium: Satralloy, Inc.; Globe Metallurgical Division, Interlake, Inc.; and Union Carbide Corp.

Potential Toxic Effects of Chromium, Chromite Mining and Ferrochrome Production: a Literature Review

Chromium, Chromite Mining and Ferrochrome Production 2012
Potential Toxic Effects of Chromium, Chromite Mining and Ferrochrome Production: A Literature Review
MiningWatch Canada, May 2012
This document is part of a series produced by MiningWatch Canada about the risks of chromium exposure. Additional fact sheets summarizing risks to the environment, chromite workers and nearby populations are also available online at: www.miningwatch.ca/chromium
Executive Summary
Chromium (Cr) is an element that can exist in six valence states, 0, II, III, IV, V and VI, which represent the number of bonds an atom is capable of making. Trivalent (Cr-III) and hexavalent (Cr-VI) chromium are the species most commonly found in the environment. Trivalent chromium is the most stable form, and its compounds are often insoluble in water. Hexavalent chromium is the second most stable form and the most toxic; many of its compounds are soluble. Chromium-VI can easily pass into the cells of an organism, where it exerts toxicity through its reduction to Cr-V, IV and III. Most Cr-VI in the environment is created by human activities. Chromium-III is found in the mineral chromite. The main use for chromite ore mined today is the production of an iron-chromium alloy called ferrochrome (FeCr), which is used to make stainless steel. Extensive chromite deposits have been identified in northern Ontario, 500 km north-east of Thunder Bay, in the area dubbed the Ring of Fire. They are the largest deposits to be found in North America, and possibly in the world.

A Brief History of Chromite Smelting

A Brief History of Chromite Smelting
Rodney Jones, 15 October 2020
Some important questions
• What have Victoria Falls and King Solomon's Mines to do with FeCr?
• Was FeCr first smelted commercially in South Africa or in Canada?
• The importance of: people, technology, raw materials, power
• Talk outline: chromium; smelting; South Africa; Canada; a chromite smelting link between the two countries
Wikipedia contains articles about almost everything … except "Chromite smelting": the page "Chromite smelting" does not exist.
Chromium
• Chromium was discovered and named by the French chemist Louis Nicolas Vauquelin (1763–1829) in 1797, during the years of the French Revolution.
• The following year, he isolated the metal by reduction of the Siberian red lead ore (crocoite, PbCrO4) with carbon.
• The brilliant hue of this chromate mineral inspired Vauquelin to give the metal its current name (from Greek chrōmos, "colour").
• Chromium is chemically inert and has a high melting point, 1907°C.
• It is said that the first use of chromium as an alloying agent in the manufacture of steel took place in France in the 1860s.
• About three quarters of the chromium produced is used in the production of stainless steel.
Michael Faraday's Fe-Cr-Ni alloys
• In about 1820, James Stodart and Michael Faraday from England, and the French metallurgist Pierre Berthier, recognised that iron-chromium alloys were able to resist attack by some acids.
• Shown here are some samples of 79 experimental steel alloys (to be used for surgical instruments) made by Michael Faraday in a forced-draft …

Valuable Waste

RESEARCH & DEVELOPMENT
Dr. D. S. Rao (Mineralogy Department), S. I. Angadi, S. D. Muduli and B. D. Nayak, Institute of Minerals and Materials Technology (CSIR), Bhubaneswar/India, www.immt.res.in
D. S. Rao studied Geology at Berhampur University, graduating in 1986. He was a Fellow Scientist at the Institute of Minerals and Materials Technology, Bhubaneswar/India, before joining the National Metallurgical Laboratory (NML), Jamshedpur, and then moved to the National Metallurgical Laboratory – Madras Centre (1997–2007). Since January 2008 he has been Scientist-EII at the Institute of Minerals and Materials Technology.
Valuable waste: Recovery of chromite values from ferrochrome industry flue dust
Summary: Ferrochrome industry waste, such as flue dust, contains chromite minerals that are considered hazardous materials if left untreated, stockpiled or landfilled. Recovery of chromite values by physical beneficiation techniques can support recycling, with the remaining waste being transformed into non-toxic materials for safe disposal. This is beneficial both for saving raw material resources and for reducing chromium pollution. To understand the nature of this waste and to develop a process for recovering the chromium values, studies were conducted with closer examination of the methods and equipment used, e.g. optical microscopy, XRD, a Mozley mineral separator and magnetic separation.
1 Introduction
Waste is a source of secondary raw materials, but at the same … residue. The key strategy is to minimize as well as reuse the volume of such hazardous waste.

Modelling of the Refining Processes in the Production of Ferrochrome and Stainless Steel

4 Modelling of the Refining Processes in the Production of Ferrochrome and Stainless Steel
Eetu-Pekka Heikkinen and Timo Fabritius, University of Oulu, Finland
1. Introduction
In stainless steel production, as in almost any kind of industrial activity, it is important to know how different factors such as process variables and conditions influence the process outcome. To optimize the refining processes used in the production of stainless steels in terms of production, economics and ecology, one has to know these connections between the process outcomes and the process variables. To obtain this knowledge, process modelling and simulation, as well as experimental procedures and analyses, can be used as valuable tools (Heikkinen et al., 2010a). Process modelling and optimization require information concerning the physical and chemical phenomena inside the process. However, even a deep understanding of these phenomena alone is not sufficient without knowledge of the connections between the phenomena and the applications for which the process modelling is carried out in the first place. The process engineer needs to seek answers to questions such as:
What are the applications and process outcomes (processes, product quantities, product qualities and properties, raw materials, emissions, residues and other environmental effects, refractory materials, etc.) that need to be modelled?
What are the essential phenomena (chemical, thermal, mechanical, physical) influencing these applications?
What variables need to be considered?
What are the relations between these variables and process outcomes?
How should these relations be modelled? (Heikkinen et al., 2010a)
The purpose of this chapter is to seek answers to these questions in the context of ferrochrome and stainless steel production, using models and modelling as a connection between the phenomena and the applications.

Up-Concentration of Chromium in Stainless Steel Slag and Ferrochromium Slags by Magnetic and Gravity Separation

Minerals, article. Frantisek Kukurugya*, Peter Nielsen and Liesbeth Horckmans, Vlaamse Instelling Voor Technologisch Onderzoek (VITO), 2400 Mol, Belgium; [email protected] (P.N.); [email protected] (L.H.). *Correspondence: [email protected]
Received: 31 August 2020; Accepted: 9 October 2020; Published: 12 October 2020
Abstract: Slags from stainless steel (SS) and ferrochromium (FeCr) production generally contain between 1 and 10% Cr, mostly present in entrapped metallic particles (Fe–Cr alloys) and in spinel structures. To recover Cr from these slags, magnetic and gravity separation techniques were tested for up-concentrating Cr in a fraction for further processing. In the case of SS slag and low-carbon (LC) FeCr slag, wet high-intensity magnetic separation can up-concentrate Cr in the SS slag (fraction <150 µm) from 2.3 wt.% to almost 9 wt.% with a yield of 7 wt.%, and in the LC FeCr slag from 3.1 wt.% to 11 wt.% with a yield of 3 wt.%. The different behavior of Cr-containing spinels in the two slag types during magnetic separation can be explained by the presence or absence of Fe in the lattice of the Cr-containing spinels, which affects their magnetic susceptibility. The Cr content of the concentrates is low compared with chromium ores, indicating that additional processing steps are necessary for a recovery process. In the case of high-carbon (HC) FeCr slag, Cr can be up-concentrated by a factor of more than three (from 9 wt.% to 28 wt.%) on the as-received slag after a single dry low-intensity magnetic separation step, owing to the well-liberated Cr-rich compounds present in this slag.
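The grades and yields in this abstract can be combined through the standard two-product mass balance. Cr recovery is R = y·c/f, where y is the mass yield of the concentrate, c the concentrate grade and f the feed grade. The up-concentration (enrichment) factor is c/f. The following sketch is a rough illustration built on the rounded figures in the abstract, and the SS-slag concentrate grade is given only as "almost 9 wt.%". These recoveries are therefore approximations and were not reported by the authors. The function names are hypothetical.

```python
# Two-product mass balance for a magnetic-separation concentrate.
def enrichment_factor(feed_grade: float, conc_grade: float) -> float:
    """Up-concentration factor: concentrate grade divided by feed grade."""
    return conc_grade / feed_grade


def recovery(feed_grade: float, conc_grade: float, mass_yield: float) -> float:
    """Fraction of the feed Cr reporting to the concentrate, R = y * c / f."""
    return mass_yield * conc_grade / feed_grade


# (slag, feed Cr wt.%, concentrate Cr wt.%, mass yield as a fraction),
# taken from the abstract; no yield is given for the HC FeCr slag.
cases = [
    ("SS slag (<150 µm)", 2.3, 9.0, 0.07),
    ("LC FeCr slag", 3.1, 11.0, 0.03),
]
for name, f, c, y in cases:
    print(f"{name}: enrichment {enrichment_factor(f, c):.1f}x, "
          f"Cr recovery ~{recovery(f, c, y):.0%}")

# Only the enrichment factor can be computed for the HC FeCr slag.
print(f"HC FeCr slag: enrichment {enrichment_factor(9.0, 28.0):.1f}x")
```

With these rounded inputs, the wet high-intensity step would put roughly 27% of the Cr in the SS slag into the concentrate, and about 11% for the LC FeCr slag. The HC FeCr slag enrichment of about 3.1 matches the stated "more than three".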

Locating and Estimating Air Emissions from Sources of Chromium

United States Environmental Protection Agency, Office of Air and Radiation, Office of Air Quality Planning and Standards, Research Triangle Park, North Carolina 27711
EPA-450/4-84-007g, July 1984
Air
Locating and Estimating Air Emissions from Sources of Chromium (L&E)
This report has been reviewed by the Office of Air Quality Planning and Standards, U.S. Environmental Protection Agency, and has been approved for publication as received from Radian Corporation. Approval does not signify that the contents necessarily reflect the views and policies of the Agency, nor does mention of trade names or commercial products constitute endorsement or recommendation for use.
Table of contents: List of Tables; List of Figures; 1. Purpose of Document; 2. Overview of Document Contents; 3. Background (Nature of Pollutant; Overview of Production and Use: Chromium production, Chromium uses; References for Section 3); 4. Chromium Emission Sources …

Living Near a Chromite Mine or Ferrochrome Facility: Things You Should Know (MiningWatch Canada Fact Sheet on the Risks of Chromium Mining and Processing)

MiningWatch Canada Chromite Series Fact Sheet #03
Living near a chromite mine or ferrochrome facility: things you should know
Introduction
Chromite is a mineral that contains the element chromium. The major use for mined chromite is the production of ferrochrome, an iron-chromium alloy used to make stainless steel. Recently, chromite deposits have been identified in Northern Ontario, Canada. Located 500 km north-east of Thunder Bay in a pristine area dubbed the "Ring of Fire", they are the largest deposits found in North America. Cliffs Natural Resources is evaluating a plan for an open-pit/underground chromite mine and ore processing facility in the Ring of Fire and a ferrochrome production facility to be located somewhere in Ontario. A number of other companies also have plans for mining chromite and other metals in the area. This fact sheet is part of a series produced by MiningWatch Canada about the risks of chromium mining and processing. Additional fact sheets and a more extensive review of relevant scientific research, entitled "Overview of Chromium, Chromite and Toxicity", are also available on our website. References for the information presented in this fact sheet can be found in the full review.
Is chromium dangerous?
The two most common types of chromium are trivalent chromium (Cr-III), which is found in the mineral chromite, and hexavalent chromium (Cr-VI). While Cr-III is considered an essential trace element in human diets, high doses can cause health problems and harm sensitive plants and animals. Human activities such as chromite mining and ferrochrome processing can convert Cr-III into Cr-VI, which is 100–1000 times more toxic than Cr-III and is known to cause cancer.