Of Effective Teaching; However the Correlation Between the Ratings and Criteria Were Usually Modest

Total pages: 16

File type: PDF, Size: 1020 Kb


Recommended publications


Khat Chewing, Cardiovascular Diseases and Other Internal Medical Problems: the Current Situation and Directions for Future Research

Journal of Ethnopharmacology 132 (2010) 540–548. Contents lists available at ScienceDirect. Journal of Ethnopharmacology, journal homepage: www.elsevier.com/locate/jethpharm

Review. Khat chewing, cardiovascular diseases and other internal medical problems: The current situation and directions for future research. A. Al-Motarreb a, M. Al-Habori b, K.J. Broadley c,∗. a Cardiac Centre, Internal Medicine Department, Sana’a University, Sana’a, Yemen; b Biochemical Department, Faculty of Medicine, Sana’a University, Sana’a, Yemen; c Division of Pharmacology, Welsh School of Pharmacy, Cardiff University, King Edward VII Avenue, Cardiff CF10 3NB, UK

Article history: Received 9 October 2009; Received in revised form 4 June 2010; Accepted 1 July 2010; Available online 17 July 2010

Keywords: Khat, Myocardial infarction, Hypertension, Vasoconstriction

Abstract: The leaves of khat (Catha edulis Forsk.) are chewed as a social habit for the central stimulant action of their cathinone content. This review summarizes the prevalence of the habit worldwide, the actions, uses, constituents and adverse health effects of khat chewing. There is growing concern about the health hazards of chronic khat chewing and this review concentrates on the adverse effects on health in the peripheral systems of the body, including the cardiovascular system and gastrointestinal tract. Comparisons are made with amphetamine and ecstasy in particular on the detrimental effects on the cardiovascular system. The underlying mechanisms of action of khat and its main constituent, cathinone, on the cardiovascular system are discussed. Links have been proposed between khat chewing and the incidence of myocardial infarction, dilated cardiomyopathy, vascular disease such as hypertension, cerebrovascular ischaemia and thromboembolism, diabetes, sexual dysfunction, duodenal ulcer and hepatitis.

Chewing Over Khat Prohibition the Globalisation of Control and Regulation of an Ancient Stimulant

Series on Legislative Reform of Drug Policies Nr. 17, January 2012. Chewing over Khat prohibition: The globalisation of control and regulation of an ancient stimulant. By Axel Klein, Pien Metaal and Martin Jelsma 1

In the context of a fast changing and well documented market in legal highs, the case of khat (Catha edulis) provides an interesting anomaly. It is first of all a plant-based substance that undergoes minimal transformation or processing in the journey from farm to market. Secondly, khat has been consumed for hundreds if not thousands of years in the highlands of Eastern Africa and Southern Arabia.2 In European countries, khat use was first observed during the 1980s,3 but has only attracted wider attention in recent years. Discussions about appropriate regulatory systems and the implications of rising khat use4 for European drug policies should take cognizance of social, demographic and cultural trends, and compare the existing models of control that exist in Europe. Khat provides a unique example of a herbal stimulant that is defined as an ordinary vegetable in some countries and a controlled drug in others. It provides a rare opportunity to study the effectiveness, costs and benefits of diverse control regimes.

CONCLUSIONS & RECOMMENDATIONS: Where khat has been studied extensively, namely Australia, the UK and until recently the Netherlands, governments have steered clear of prohibition because the negative medical and social harms do not merit such controls. Strict bans on khat introduced ostensibly for the protection of immigrant communities have had severe unintended negative consequences. Khat prohibition has failed to further the integration, social inclusion and economic

Street Names: Khat, Qat, Kat, Chat, Miraa, Quaadka) September 2019

Drug Enforcement Administration, Diversion Control Division, Drug & Chemical Evaluation Section. KHAT (Street Names: Khat, Qat, Kat, Chat, Miraa, Quaadka). September 2019

Introduction: Khat, Catha edulis, is a flowering shrub native to East Africa and the Arabian Peninsula. Khat often refers to the leaves and young shoot of Catha edulis. It has been widely used since the thirteenth century as a recreational drug by the indigenous people of East Africa, the Arabian Peninsula and throughout the Middle East.

Licit Uses: There is no accepted medical use in treatment for khat in the United States.

Chemistry and Pharmacology: Khat contains two central nervous system (CNS) stimulants, namely cathinone and cathine. Cathinone (alpha-aminopropiophenone), which is considered to be the principal active stimulant, is structurally similar to d-amphetamine and ...

User Population: Abuse of khat in the United States is most prevalent among immigrants from Somalia, Ethiopia, and Yemen. Abuse of khat is highest in cities with a substantial population of these immigrants. These cities include Boston (MA), Columbus (OH), Dallas (TX), Detroit (MI), Kansas City (MO), Los Angeles (CA), Minneapolis (MN), Nashville (TN), New York (NY), and Washington D.C.

Illicit Distribution: Individuals of Somali, Ethiopian, and Yemeni descent are the primary transporters and distributors of khat in the United States. The khat is transported from Somalia into the United States and distributed in the Midwest, West and Southeast (Nashville, Tennessee) regions of the United States. According to the National Drug Intelligence Center, Somali and Yemen independent dealers are distributing khat in Ann Arbor, Detroit, Lansing and Ypsilanti, Michigan; Columbus, Ohio; Kansas City, Missouri; and Minneapolis/St.

Khat Fast Facts

What is khat? Khat (Catha edulis) is a flowering shrub native to northeast Africa and the Arabian Peninsula. Individuals chew khat leaves because of the stimulant effects, which are similar to but less intense than those caused by abusing cocaine or methamphetamine.

What does khat look like? When fresh, khat leaves are glossy and crimson-brown in color, resembling withered basil. Khat leaves typically begin to deteriorate 48 hours after being cut from the shrub on which they grow. Deteriorating khat leaves are leathery and turn yellow- ...

How is khat used? Khat typically is ingested by chewing the leaves, as is done with loose tobacco. Dried khat leaves can be brewed in tea or cooked and added to food. After ingesting khat, the user experiences an immediate increase in blood pressure and heart rate. The effects of the drug generally begin to subside between 90 minutes and 3 hours after ingestion; however, they can last up to 24 hours.

What are the risks? Individuals who abuse khat typically experience a state of mild depression following periods of prolonged use. Taken in excess khat causes extreme thirst, hyperactivity, insomnia, and loss of appetite (which can lead to anorexia). Frequent khat use often leads to decreased productivity because the drug tends to reduce the user’s motivation. Repeated use can cause manic behavior with grandiose delusions, paranoia, and hallucinations. (There have been reports of khat-induced psychosis.) The drug also can cause damage to the nervous, respiratory, circulatory, and digestive systems.

Who uses khat? The use of khat is accepted within the Somali, Ethiopian, and Yemeni cultures, and in the United States khat use is most prevalent among

WHAT IS KHAT? Khat Is a Flowering Evergreen Shrub That Is Abused for Its Stimulant-Like Effect

Khat

WHAT IS KHAT? Khat is a flowering evergreen shrub that is abused for its stimulant-like effect. Khat has two active ingredients, cathine and cathinone.

WHAT IS ITS ORIGIN? Khat is native to East Africa and the Arabian Peninsula, where the use of it is an established cultural tradition for many social situations.

What are common street names? Common street names for Khat include: Abyssinian Tea, African Salad, Catha, Chat, Kat, and Oat.

What does it look like? Khat is a flowering evergreen shrub. Khat that is sold and abused is usually just the leaves, twigs, and shoots of the Khat shrub.

How is it abused? Khat is typically chewed like tobacco, then retained in the cheek and chewed intermittently to release the active drug, which produces a stimulant-like effect. Dried Khat leaves can be made into tea or a chewable paste, and Khat can also be smoked and even sprinkled on food.

What is its effect on the mind? ...

What are its overdose effects? The dose needed to constitute an overdose is not known, however it has historically been associated with those who have been long-term chewers of the leaves. Symptoms of toxicity include: delusions, loss of appetite, difficulty with breathing, and increases in both blood pressure and heart rate. Additionally, there are reports of liver damage (chemical hepatitis) and of cardiac complications, specifically myocardial infarctions. This mostly occurs among long-term chewers of khat or those who have chewed too large a dose.

Which drugs cause similar effects? Khat’s effects are similar to other stimulants, such as cocaine

Cedar City District in REPLY REFER to United States Department of the Interior

UNITED STATES DEPARTMENT OF THE INTERIOR, BUREAU OF LAND MANAGEMENT, Cedar City District, 1579 North Main, Cedar City, Utah 84720. October 31, 1984

Dear Public Land User: Enclosed is the proposed Resource Management Plan (RMP) and Final Environmental Impact Statement (FEIS) for the Cedar, Beaver, Garfield, Antimony planning units. The Cedar City District Bureau of Land Management has prepared this document in conformance with the requirements of the Federal Land Policy and Management Act of 1976 and the National Environmental Policy Act of 1969. The proposed RMP and Final EIS is designed to be used in conjunction with the Draft RMP/EIS (DEIS) published in May 1984. This document contains the proposed plan and its environmental consequences along with revisions and errata pertaining to the Draft EIS/RMP, public comments received, and BLM's responses to these comments. The State Director shall approve the proposed RMP no sooner than 30 days after the Environmental Protection Agency's published notice of receipt of the FEIS in the Federal Register. Persons desiring to protest plan decisions must submit written protests to the Director, Bureau of Land Management (Department of Interior, Bureau of Land Management, 18 and C NW, Washington, DC 20240) within 30 days of the filing of the document with the Environmental Protection Agency. All protests must be received within the time limit allowed and must conform to the requirements of 43 CFR 1610.5-2. The final resource management plan will be completed with the Record of Decision.

Drug Enforcement Administration (DEA) Report on Usage of Khat in the United States, 2002-2003

Description of document: Drug Enforcement Administration (DEA) Report on Usage of Khat In the United States, 2002-2003. Requested date: 12-March-2014. Released date (DEA Appeal): 29-February-2016. Posted date: 04-April-2016. Source of document: Freedom of Information & Privacy Act Unit (SARF), Drug Enforcement Administration, 8701 Morrissette Drive, Springfield, VA 22152. Fax: (202) 307-8556. Email: [email protected]

The governmentattic.org web site (“the site”) is noncommercial and free to the public. The site and materials made available on the site, such as this file, are for reference only. The governmentattic.org web site and its principals have made every effort to make this information as complete and as accurate as possible, however, there may be mistakes and omissions, both typographical and in content. The governmentattic.org web site and its principals shall have neither liability nor responsibility to any person or entity with respect to any loss or damage caused, or alleged to have been caused, directly or indirectly, by the information provided on the governmentattic.org web site or in this file. The public records published on the site were obtained from government agencies using proper legal channels. Each document is identified as to the source. Any concerns about the contents of the site should be directed to the agency originating the document in question. GovernmentAttic.org is not responsible for the contents of documents published on the website.

U.S. Department of Justice, Drug Enforcement Administration, POI/Records Management Section, 8701 Morrissette Drive, Springfield, Virginia 22152. FEB 29 2016. DEA Case Number: 14-00118-F. DEA Appeal Number: 14-00071-AP. OIP Appeal Number AP-2014-01946. Subject: INFORMATION REGARDING THE MOST RECENT DEA REPORT ON USAGE OF KHAT IN THE UNITED STATES

This letter responds to your Administrative Appeal that has been remanded by the U.S.

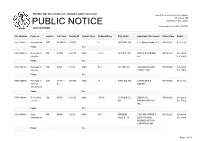

Public Notice >> Licensing and Management System Admin >>

Federal Communications Commission, 45 L Street NE, Washington, D.C. 20554. PUBLIC NOTICE. News media info. (202) 418-0500. REPORT NO. PN-1-210521-01 | PUBLISH DATE: 05/21/2021

APPLICATIONS

| File Number | Purpose | Service | Call Sign | Facility ID | Station Type | Channel/Freq. | City, State | Applicant or Licensee | Status Date | Status |
|---|---|---|---|---|---|---|---|---|---|---|
| 0000139612 | Amendment | LPD | K03IM-D | 185855 | | 3 | EUGENE, OR | Live Sports Radio, LLC | 05/19/2021 | Received |
| 0000146028 | Renewal of License | FM | KURR | 164147 | Main | 103.1 | HILDALE, UT | MEDIA ADVISORS, LLC | 05/19/2021 | Accepted For Filing |
| 0000146168 | Renewal of License | FM | KIXM | 87972 | Main | 92.3 | VICTOR, ID | JACKSON RADIO GROUP, INC. | 05/19/2021 | Accepted For Filing |
| 0000120096 | Renewal of License Amendment | LPD | W17CT-D | 125393 | Main | 17 | MANTEO, NC | LAWRENCE F. LOESCH | 05/19/2021 | Received |
| 0000146249 | Renewal of License | AM | KKTS | 161152 | Main | 1580.0 | EVANSVILLE, WY | DOUGLAS BROADCASTING, INC. | 05/19/2021 | Accepted For Filing |
| 0000146045 | Minor Modification | FM | KYQX | 62040 | Main | 89.3 | MINERAL WELLS, TX | CSSI NON-PROFIT EDUCATIONAL BROADCASTING CORPORATION | 05/19/2021 | Accepted For Filing |
| 0000128329 | Renewal of License Amendment | LPT | WNAL-LD | 67953 | Main | 27 | SCOTTSBORO, AL | DIGITAL NETWORKS-SOUTHEAST, LLC | 05/19/2021 | Received |
| 0000146020 | Renewal of License | FM | KXBB | 198736 | Main | 101.7 | CIENEGA SPRINGS, AZ | RIVER RAT RADIO, LLC | 05/18/2021 | Accepted For Filing |
| 0000146250 | Renewal of License | FX | K297AV | 150119 | | 107.3 | CASPER, WY | DOUGLAS BROADCASTING, INC. | 05/19/2021 | Accepted For Filing |

530 CIAO BRAMPTON on ETHNIC AM 530 N43 35 20 W079 52 54 09-Feb

| frequency (kHz) | callsign | city | format, identification, slogan | latitude (d m s) | longitude (d m s) | last change in listing (yy-mmm) |
|---|---|---|---|---|---|---|
| 530 | CIAO | BRAMPTON ON | ETHNIC AM 530 | N43 35 20 | W079 52 54 | 09-Feb |
| 540 | CBKO | COAL HARBOUR BC | VARIETY CBC RADIO ONE | N50 36 4 | W127 34 23 | 09-May |
| 540 | CBXQ # | UCLUELET BC | VARIETY CBC RADIO ONE | N48 56 44 | W125 33 7 | 16-Oct |
| 540 | CBYW | WELLS BC | VARIETY CBC RADIO ONE | N53 6 25 | W121 32 46 | 09-May |
| 540 | CBT | GRAND FALLS NL | VARIETY CBC RADIO ONE | N48 57 3 | W055 37 34 | 00-Jul |
| 540 | CBMM # | SENNETERRE QC | VARIETY CBC RADIO ONE | N48 22 42 | W077 13 28 | 18-Feb |
| 540 | CBK | REGINA SK | VARIETY CBC RADIO ONE | N51 40 48 | W105 26 49 | 00-Jul |
| 540 | WASG | DAPHNE AL | BLK GSPL/RELIGION | N30 44 44 | W088 5 40 | 17-Sep |
| 540 | KRXA | CARMEL VALLEY CA | SPANISH RELIGION EL SEMBRADOR RADIO | N36 39 36 | W121 32 29 | 14-Aug |
| 540 | KVIP | REDDING CA | RELIGION SRN VERY INSPIRING | N40 37 25 | W122 16 49 | 09-Dec |
| 540 | WFLF | PINE HILLS FL | TALK FOX NEWSRADIO 93.1 | N28 22 52 | W081 47 31 | 18-Oct |
| 540 | WDAK | COLUMBUS GA | NEWS/TALK FOX NEWSRADIO 540 | N32 25 58 | W084 57 2 | 13-Dec |
| 540 | KWMT | FORT DODGE IA | C&W FOX TRUE COUNTRY | N42 29 45 | W094 12 27 | 13-Dec |
| 540 | KMLB | MONROE LA | NEWS/TALK/SPORTS ABC NEWSTALK 105.7&540 | N32 32 36 | W092 10 45 | 19-Jan |
| 540 | WGOP | POCOMOKE CITY MD | EZL/OLDIES | N38 3 11 | W075 34 11 | 18-Oct |
| 540 | WXYG | SAUK RAPIDS MN | CLASSIC ROCK THE GOAT | N45 36 18 | W094 8 21 | 17-May |
| 540 | KNMX | LAS VEGAS NM | SPANISH VARIETY NBC K NEW MEXICO | N35 34 25 | W105 10 17 | 13-Nov |
| 540 | WBWD | ISLIP NY | SOUTH ASIAN BOLLY 540 | N40 45 4 | W073 12 52 | 18-Dec |
| 540 | WRGC | SYLVA NC | VARIETY NBC THE RIVER | N35 23 35 | W083 11 38 | 18-Jun |
| 540 | WETC # | WENDELL-ZEBULON NC | RELIGION EWTN DEVINE MERCY R. | | | |

Exhibit 2181

Exhibit 2181 Case 1:18-cv-04420-LLS Document 131 Filed 03/23/20 Page 1 of 4 Electronically Filed Docket: 19-CRB-0005-WR (2021-2025) Filing Date: 08/24/2020 10:54:36 AM EDT NAB Trial Ex. 2181.1

Case 1:18-cv-04420-LLS Document 132 Filed 03/23/20 Page 1 of 1 NAB Trial Ex. 2181.5

Case 1:18-cv-04420-LLS Document 133 Filed 04/15/20 Page 1 of 4 ATARA MILLER Partner 55 Hudson Yards | New York, NY 10001-2163 T: 212.530.5421 [email protected] | milbank.com April 15, 2020 VIA ECF Honorable Louis L. Stanton Daniel Patrick Moynihan United States Courthouse 500 Pearl St. New York, NY 10007-1312 Re: Radio Music License Comm., Inc. v. Broad. Music, Inc., 18 Civ. 4420 (LLS) Dear Judge Stanton: We write on behalf of Respondent Broadcast Music, Inc. (“BMI”) to update the Court on the status of BMI’s efforts to implement its agreement with the Radio Music License Committee, Inc. (“RMLC”) and to request that the Court unseal the Exhibits attached to the Order (see Dkt.

Trends, Challenges, and Options in Khat Production in Ethiopia

International Journal of Drug Policy 30 (2016) 27–34. Contents lists available at ScienceDirect. International Journal of Drug Policy, journal homepage: www.elsevier.com/locate/drugpo

Commentary. Legal harvest and illegal trade: Trends, challenges, and options in khat production in Ethiopia. Logan Cochrane a,*, Davin O’Regan b. a University of British Columbia, Canada; b University of Maryland, United States

Article history: Received 6 September 2015; Received in revised form 31 January 2016; Accepted 9 February 2016

Keywords: Ethiopia, Khat, Trafficking

Abstract: The production of khat in Ethiopia has boomed over the last two decades, making the country the world’s leading source. Khat is now one of Ethiopia’s largest crops by area of cultivation, the country’s second largest export earner, and an essential source of income for millions of Ethiopian farmers. Consumption has also spread from the traditional khat heartlands in the eastern and southern regions of Ethiopia to most major cities. This steady growth in production and use has unfolded under negligible government support or regulation. Meanwhile, khat, which releases a stimulant when chewed, is considered an illicit drug in an increasing number of countries. Drawing on government data on khat production, trade, and seizures as well as research on the political, socioeconomic, and development effects of plant-based illicit narcotics industries, this commentary identifies possible considerations and scenarios for Ethiopia as the country begins to manage rising khat production, domestic consumption, and criminalization abroad. Deeply embedded in social and cultural practices and a major source of government and agricultural revenue, Ethiopian policymakers have few enviable choices.

Radio-TV EXPERIMENTER AUGUST-SEPTEMBER 75c

DXer's DREAM THAT ALMOST WAS SHASILAND Radio-TV EXPERIMENTER AUGUST-SEPTEMBER 75c BUILD COLD QuA BREE ... a 2-FET metal moocher to end the gold drain and De Gaulle! PLUS Socket-2-Me CB Skyhook No-Parts Slave Flash Patrol PA System IC Big Voice

EICO Makes It Possible. Uncompromising engineering for value does it! You save up to 50% with Eico Kits and Wired Equipment.

Cortina stereo: Engineering excellence, 100% capability, striking esthetics, the industry's only TOTAL PERFORMANCE STEREO at lowest cost. A Silicon Solid-State 70-Watt Stereo Amplifier for $99.95 kit, $139.95 wired, including cabinet. Cortina 3070. A Solid-State FM Stereo Tuner for $99.95 kit, $139.95 wired, including cabinet. Cortina 3200. A 70-Watt Solid-State FM Stereo Receiver for $169.95 kit, $259.95 wired, including cabinet. Cortina 3570.

EICOCRAFT: The newest excitement in kits. 100% solid-state and professional. Fun to build and use. Expandable, interconnectable. Great as "jiffy" projects and as introductions to electronics. No technical experience needed. Finest parts, pre-drilled etched printed circuit boards, step-by-step instructions. Electronic Siren $4.95, Burglar Alarm $6.95, Fire Alarm $6.95, Intercom $3.95, Audio Power Amplifier $4.95, Metronome $3.95, Tremolo $8.95, Light Flasher $3.95, Electronic "Mystifier" $4.95, Photo Cell Nite Lite $4.95, Power Supply $7.95, Code Oscillator $2.50, FM Wireless Mike $9.95, AM Wireless Mike $9.95, Electronic VOX $7.95, FM Radio $9.95, AM Radio $7.95, Electronic Bongos $7.95.