Classification and Analysis of a Large Collection of in Vivo Bioassay Descriptions

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Dissertationes Medicinae Universitatis Tartuensis 106

DISSERTATIONES MEDICINAE UNIVERSITATIS TARTUENSIS 106 EPIDEMIOLOGY OF ADULT EPILEPSY IN TARTU, ESTONIA Incidence, prevalence and medical treatment ANDRE ÕUN TARTU UNIVERSITY PRESS Department of Neurology and Neurosurgery, University of Tartu, Tartu, Estonia Dissertation is accepted for the commencement of the degree of Doctor of Medical Sciences on March 22, 2005 by the Council of the Faculty of Medicine, University of Tartu, Estonia Opponent: Dr. Tapani Keränen, University of Tampere, Finland Commencement: May 4, 2005 Publication of this dissertation is granted by the Faculty of Medicine, University of Tartu ISSN 1024–395X ISBN 9949–11–034–3 (print) ISBN 9949–11–035–1 (PDF) Copyright Andre Õun, 2005 Tartu Ülikooli Kirjastus www.tyk.ee Order no. 142 CONTENTS LIST OF ORIGINAL PUBLICATIONS 8 ABBREVIATIONS 9 I. INTRODUCTION 10 II. REVIEW OF THE LITERATURE 12 1. General aspects and methodology 12 1.1. Diagnostic accuracy 12 1.2. Criteria, clinical characteristics and classifications 13 1.2.1. Criteria for the activeness of epilepsy -

(12) United States Patent (10) Patent No.: US 7,700,128 B2 Doken Et Al

US007700128B2 (12) United States Patent (10) Patent No.: US 7,700,128 B2 Doken et al. (45) Date of Patent: Apr. 20, 2010 (54) SOLID PREPARATION COMPRISING AN INSULIN SENSITIZER, AN INSULIN SECRETAGOGUE AND A POLYOXYETHYLENE SORBITAN FATTY ACID ESTER (75) Inventors: Kazuhiro Doken, Osaka (JP); Tetsuya Kawano, Osaka (JP); Hiroyoshi Koyama, Osaka (JP); Naoru Hamaguchi, Osaka (JP) (73) Assignee: Takeda Pharmaceutical Company Limited, Osaka (JP) (*) Notice: Subject to any disclaimer, the term of this patent is extended or adjusted under 35 U.S.C. 154(b) by 711 days. (21) Appl. No.: 10/544,581 (22) PCT Filed: Oct. 21, 2004 (86) PCT No.: PCT/UP2004/O15958 § 371 (c)(1), (2), (4) Date: Apr. [...] 5,726,201 A * 3/1998 Fekete et al. 514/471; 6,599,529 B1 * 7/2003 Skinha et al. 424/458; 2003/0147952 A1 * 8/2003 Lim et al. 424/468; 2003/0180352 A1 9/2003 Patel et al.; 2004/0147564 A1 7/2004 Rao et al.; 2004/0175421 A1 * 9/2004 Gidwani et al. 424/465 FOREIGN PATENT DOCUMENTS EP 0749751 A2 12/1996; FR 2758461 7/1998; GB 2399015 9/2004; JP 58065213 * 4/1983; WO 95/12385 5/1995; WO 98/11884 3/1998; WO 98/31360 7/1998; WO 98/36755 8/1998; WO 98/57649 12/1998; WO 99/03476 1/1999; WO 99/03477 1/1999; WO 99/03478 1/1999; WO 00/27401 5/2000; WO 00/28989 5/2000; WO 01/41737 A2 6/2001; WO 01/51463 7/2001; WO 02/04024 1/2002; WO 02/055009 7/2002; WO 03/066028 A1 8/2003 -

WO 2016/118476 A1 28 July 2016 (28.07.2016)

(12) INTERNATIONAL APPLICATION PUBLISHED UNDER THE PATENT COOPERATION TREATY (PCT) (19) World Intellectual Property Organization International Bureau (10) International Publication Number WO 2016/118476 A1 (43) International Publication Date 28 July 2016 (28.07.2016) (51) International Patent Classification: A61K 38/46 (2006.01) (21) International Application Number: PCT/US2016/013847 (22) International Filing Date: 19 January 2016 (19.01.2016) (25) Filing Language: English (26) Publication Language: English (30) Priority Data: 62/105,342 20 January 2015 (20.01.2015) US (71) Applicant: THE CHILDREN'S MEDICAL CENTER CORPORATION [US/US]; 300 Longwood Avenue, Boston, Massachusetts 02115-5724 (US). [...] DO, DZ, EC, EE, EG, ES, FI, GB, GD, GE, GH, GM, GT, HN, HR, HU, ID, IL, IN, IR, IS, JP, KE, KG, KN, KP, KR, KZ, LA, LC, LK, LR, LS, LU, LY, MA, MD, ME, MG, MK, MN, MW, MX, MY, MZ, NA, NG, NI, NO, NZ, OM, PA, PE, PG, PH, PL, PT, QA, RO, RS, RU, RW, SA, SC, SD, SE, SG, SK, SL, SM, ST, SV, SY, TH, TJ, TM, TN, TR, TT, TZ, UA, UG, US, UZ, VC, VN, ZA, ZM, ZW. (84) Designated States (unless otherwise indicated, for every kind of regional protection available): ARIPO (BW, GH, GM, KE, LR, LS, MW, MZ, NA, RW, SD, SL, ST, SZ, TZ, UG, ZM, ZW), Eurasian (AM, AZ, BY, KG, KZ, RU, TJ, TM), European (AL, AT, BE, BG, CH, CY, CZ, DE, DK, EE, ES, FI, FR, GB, GR, HR, HU, IE, IS, IT, LT, LU, LV, MC, MK, MT, NL, NO, PL, PT, RO, RS, SE, SI, SK, SM, TR), OAPI (BF, BJ, CF, CG, CI, CM, GA, GN, GQ, [...] -

Excipient: 0.4 Mg Lactose Monohydrate/Film-Coated Tablet

1. NAME OF THE MEDICINAL PRODUCT [Topiramate] 25 mg film-coated tablets [Topiramate] 50 mg film-coated tablets [Topiramate] 100 mg film-coated tablets [Topiramate] 200 mg film-coated tablets 2. QUALITATIVE AND QUANTITATIVE COMPOSITION 25 mg film-coated tablets Each film-coated tablet contains 25 mg of topiramate. Excipient: 0.4 mg lactose monohydrate/film-coated tablet. 50 mg film-coated tablets Each film-coated tablet contains 50 mg of topiramate. Excipient: 0.4 mg lactose monohydrate/film-coated tablet. 100 mg film-coated tablets Each film-coated tablet contains 100 mg of topiramate. Excipient: 0.05 mg sunset yellow (E110)/film-coated tablet. 200 mg film-coated tablets Each film-coated tablet contains 200 mg of topiramate. Excipient: 3.2 mg lactose monohydrate/film-coated tablet. For a full list of excipients, see section 6.1. 3. PHARMACEUTICAL FORM Film-coated tablet. [Topiramate] 25 mg are available as white, round, biconvex film-coated tablets. [Topiramate] 50 mg are available as yellow, round, biconvex film-coated tablets. [Topiramate] 100 mg are available as orange, oblong, biconvex film-coated tablets. [Topiramate] 200 mg are available as pink, oblong, biconvex film-coated tablets. 4. CLINICAL PARTICULARS 4.1 Therapeutic indications Monotherapy in adults, adolescents and children over 6 years of age with partial seizures with or without secondary generalised seizures and primary generalised tonic-clonic seizures. Adjunctive therapy in children aged 2 years and above, adolescents and adults with partial onset seizures with or without secondary generalisation or primary generalised tonic-clonic seizures and for the treatment of seizures associated with Lennox-Gastaut syndrome. -

203313Orig1s000 203314Orig1s000

CENTER FOR DRUG EVALUATION AND RESEARCH APPLICATION NUMBER: 203313Orig1s000 203314Orig1s000 PROPRIETARY NAME REVIEW(S) PROPRIETARY NAME MEMORANDUM Division of Medication Error Prevention and Analysis (DMEPA) Office of Medication Error Prevention and Risk Management (OMEPRM) Office of Surveillance and Epidemiology (OSE) Center for Drug Evaluation and Research (CDER) *** This document contains proprietary information that cannot be released to the public*** Date of This Review: September 1, 2015 Application Type and Number: NDA 203313 Product Name and Strength: Ryzodeg 70/30 (insulin degludec and insulin aspart) injection, 100 units/mL Product Type: Combination (Drug + Device) Rx or OTC: Rx Applicant/Sponsor Name: Novo Nordisk Panorama #: 2015-1341036 DMEPA Primary Reviewer: Sarah K. Vee, PharmD DMEPA Team Leader: Yelena Maslov, PharmD Reference ID: 3813971 1 INTRODUCTION The Applicant submitted the proposed proprietary name, Ryzodeg on March 26, 2015 (b) (4) Division of Medication Error Prevention and Analysis (DMEPA) found the name conditionally acceptable in our previous review1 . On August 19, 2015, the Agency recommended that the Applicant consider including the modifier “70/30” to the proposed proprietary name to indicate the concentrations of the two insulins in the formulation. Since Ryzodeg is a mixed insulin formulation containing 70 % insulin degludec and 30 % insulin aspart, the addition of the modifier 70/30 would be consistent with current naming approach for mixed insulins. Thus, the Applicant submitted the name, Ryzodeg 70/30, for review on August 21, 2015. We note that the product characteristics are the same. This memorandum is to communicate that DMEPA finds the proposed proprietary name, Ryzodeg 70/30 (b) (4) is acceptable from both a misbranding and safety perspective. -

Curriculum Vitae: Marc Stephen Rendell, Md

CURRICULUM VITAE: MARC STEPHEN RENDELL, M.D. ADDRESS: The Rose Salter Medical Research Foundation 34 Versailles Newport Coast, CA 92657-0065 clinic office 355 Placentia Avenue, Suite 308b Newport Beach, CA 92663 TELEPHONE: 402-578-1580 HOSPITAL AND FACULTY APPOINTMENTS: 12/1977-6/1983 Active Staff and Assistant Professor Division of Endocrinology Department of Medicine The Johns Hopkins Hospital Baltimore, Maryland 6/1980-6/1983 Assistant Professor Division of Nuclear Medicine Department of Radiology The Johns Hopkins University School of Medicine Baltimore, Maryland 12/1977-6/1983 Chief, Endocrinology Director, Radioimmunoassay Laboratory The US Public Health Service Hospital Baltimore, Maryland 7/1983-11/1984 Director, Diabetes Institute City of Faith Medical and Research Center Associate Professor, Medicine and Pathology ORU School of Medicine Tulsa, Oklahoma 2/1985-9/2016 Director, Creighton Diabetes Center Associate Professor of Medicine Associate Professor of Biomedical Sciences (1993-1995) Professor of Medicine and Biomedical Sciences (1996-2016) Creighton University School of Medicine Omaha, Nebraska 3/1999- Medical Director: Rose Salter Medical Research Foundation Baltimore, Maryland, Omaha, Nebraska, Newport Beach, California CLINICAL PRACTICE 12/1977-6/1983 Active Staff Division of Endocrinology Department of Medicine The Johns Hopkins Hospital Baltimore, Maryland 12/1977-6/1983 Chief, Endocrinology Director, Radioimmunoassay Laboratory The US Public Health Service Hospital Baltimore, Maryland 7/1983-11/1984 Director, Diabetes Institute City of Faith Medical and Research Center ORU School of Medicine Tulsa, Oklahoma 2/1985-9/2016 Director, Creighton Diabetes Center Creighton University Medical Center Omaha, Nebraska 9/2016- Medical Director: Rose Salter Diabetes Center Newport Beach, California 1/2017- Telemedicine Physician Teladoc and MDLive EDUCATION: 9/1964-6/1968 B.S. -

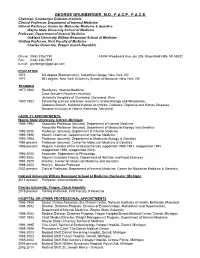

George Grunberger, M.D., F.A.C.P., F.A.C.E

GEORGE GRUNBERGER, M.D., F.A.C.P., F.A.C.E. Chairman, Grunberger Diabetes Institute Clinical Professor, Department of Internal Medicine Clinical Professor, Center for Molecular Medicine & Genetics Wayne State University School of Medicine Professor, Department of Internal Medicine Oakland University William Beaumont School of Medicine Visiting Professor, First Faculty of Medicine Charles University, Prague (Czech Republic) Phone: (248) 335-7740 43494 Woodward Ave, ste 208, Bloomfield Hills, MI 48302 Fax: (248) 338-7979 e-mail: [email protected] EDUCATION 1973 BA degree (Biochemistry), Columbia College, New York, NY 1977 MD degree, New York University School of Medicine, New York, NY TRAINING 1977-1980 Residency, Internal Medicine Case Western Reserve University University Hospitals of Cleveland, Cleveland, Ohio 1980-1983 Fellowship (clinical and basic research), Endocrinology and Metabolism, Diabetes Branch, National Institute of Arthritis, Diabetes, Digestive and Kidney Diseases National Institutes of Health, Bethesda, Maryland FACULTY APPOINTMENTS, Wayne State University, Detroit, Michigan 1986-1992 Associate Professor (tenured), Department of Internal Medicine Associate Professor (tenured), Department of Molecular Biology and Genetics 1992-2002 Professor (tenured), Department of Internal Medicine 1995-1996 Interim Chairman, Department of Internal Medicine 1992-1994 Professor (tenured), Department of Molecular Biology & Genetics 1994-present Professor (tenured), Center for Molecular Medicine & Genetics 1986-present Regular member -

Federal Register / Vol. 60, No. 80 / Wednesday, April 26, 1995 / Notices DIX to the HTSUS—Continued

20558 Federal Register / Vol. 60, No. 80 / Wednesday, April 26, 1995 / Notices DEPARTMENT OF THE TREASURY Customs Service [T.D. 95-33] Pharmaceutical Tables 1 and 3 of the HTSUS AGENCY: Customs Service, Department of the Treasury. ACTION: Listing of the products found in Table 1 and Table 3 of the Pharmaceutical Appendix to the Harmonized Tariff Schedule of the United States of America in Chemical [...] Services, U.S. Customs Service, 1301 Constitution Avenue NW, Washington, D.C. 20229 at (202) 927-1060. Dated: April 14, 1995. A. W. Tennant, Director, Office of Laboratories and Scientific Services. TABLE 1. PHARMACEUTICAL APPENDIX TO THE HTSUS CAS No. Pharmaceutical N/A ACTAGARDIN. N/A ARDACIN. N/A BICIROMAB. N/A CELUCLORAL. [...] TABLE 1. PHARMACEUTICAL APPENDIX TO THE HTSUS (Continued) CAS No. Pharmaceutical 52-78-8 NORETHANDROLONE. 52-86-8 HALOPERIDOL. 52-88-0 ATROPINE METHONITRATE. 52-90-4 CYSTEINE. 53-03-2 PREDNISONE. 53-06-5 CORTISONE. 53-10-1 HYDROXYDIONE SODIUM SUCCINATE. 53-16-7 ESTRONE. 53-18-9 BIETASERPINE. 53-19-0 MITOTANE. 53-31-6 MEDIBAZINE. 53-33-8 PARAMETHASONE. 53-34-9 FLUPREDNISOLONE. 53-39-4 OXANDROLONE. 53-43-0 -

Assessing the in Vitro Inhibitory Effects on Key Enzymes

molecules Article Assessing the In Vitro Inhibitory Effects on Key Enzymes Linked to Type-2 Diabetes and Obesity and Protein Glycation by Phenolic Compounds of Lauraceae Plant Species Endemic to the Laurisilva Forest Vítor Spínola and Paula C. Castilho * CQM - Centro de Química da Madeira, Universidade da Madeira, Campus da Penteada, 9020-105 Funchal, Portugal; [email protected] * Correspondence: [email protected]; Tel.: +351-291-705-102 Abstract: Methanolic leaf extracts of four Lauraceae species endemic to Laurisilva forest (Apollonias barbujana, Laurus novocanariensis, Ocotea foetens and Persea indica) were investigated for the first time for their potential to inhibit key enzymes linked to type-2 diabetes (α-amylase, α-glucosidase, aldose reductase) and obesity (pancreatic lipase), and protein glycation. Lauraceae extracts revealed significant inhibitory activities in all assays, although with different ability between species. In general, P. indica showed the most promising results. In the protein glycation assay, all analysed extracts displayed a stronger effect than a reference compound: aminoguanidine (AMG). The in vitro anti-diabetic, anti-obesity and anti-glycation activities of analysed extracts showed correlation with their flavonols and flavan-3-ols (in particular, proanthocyanins) contents. These Lauraceae species have the capacity to assist in adjuvant therapy of type-2 diabetes and associated complications, through modulation of the activity of key metabolic enzymes and prevention of advanced glycation end-products (AGEs) formation. Keywords: type-2 diabetes; Lauraceae; polyphenols; digestive enzymes inhibition; aldose reductase inhibition; protein glycation inhibition Citation: Spínola, V.; Castilho, P.C. Assessing the In Vitro Inhibitory Effects on Key Enzymes Linked to Type-2 Diabetes and Obesity and Protein Glycation by Phenolic Compounds of Lauraceae Plant Species Endemic to the Laurisilva Forest. -

Anatomical Classification Guidelines V2020 EPHMRA ANATOMICAL

EPHMRA ANATOMICAL CLASSIFICATION GUIDELINES 2020 Anatomical Classification Guidelines V2020 "The Anatomical Classification of Pharmaceutical Products has been developed and maintained by the European Pharmaceutical Marketing Research Association (EphMRA) and is therefore the intellectual property of this Association. EphMRA's Classification Committee prepares the guidelines for this classification system and takes care for new entries, changes and improvements in consultation with the product's manufacturer. The contents of the Anatomical Classification of Pharmaceutical Products remain the copyright to EphMRA. Permission for use need not be sought and no fee is required. We would appreciate, however, the acknowledgement of EphMRA Copyright in publications etc. Users of this classification system should keep in mind that Pharmaceutical markets can be segmented according to numerous criteria." © EphMRA 2020 CONTENTS PAGE INTRODUCTION A ALIMENTARY TRACT AND METABOLISM 1 B BLOOD AND BLOOD FORMING ORGANS 28 C CARDIOVASCULAR SYSTEM 35 D DERMATOLOGICALS 50 G GENITO-URINARY SYSTEM AND SEX HORMONES 57 H SYSTEMIC HORMONAL PREPARATIONS (EXCLUDING SEX HORMONES) 65 J GENERAL ANTI-INFECTIVES SYSTEMIC 69 K HOSPITAL SOLUTIONS 84 L ANTINEOPLASTIC AND IMMUNOMODULATING AGENTS 92 M MUSCULO-SKELETAL SYSTEM 102 N NERVOUS SYSTEM 107 P PARASITOLOGY 118 R RESPIRATORY SYSTEM 120 S SENSORY ORGANS 132 T DIAGNOSTIC AGENTS 139 V VARIOUS 141 INTRODUCTION The Anatomical Classification was initiated in 1971 by EphMRA. It has been developed jointly by Intellus/PBIRG and EphMRA. It is a subjective method of grouping certain pharmaceutical products and does not represent any particular market, as would be the case with any other classification system. -

Synergistic Effect of Glyburide and Milrinone Synergistische Wirkung Von Glyburide Und Milrinone Effet Synergique De Glyburide Et Milrinone

(19) Europäisches Patentamt European Patent Office Office européen des brevets (11) EP 1 145 717 B1 (12) EUROPEAN PATENT SPECIFICATION (45) Date of publication and mention of the grant of the patent: 12.05.2004 Bulletin 2004/20 (51) Int Cl.7: A61K 31/64, A61K 31/4545, A61P 3/10 // (A61K31/64, 31:4545) (21) Application number: 01303020.0 (22) Date of filing: 30.03.2001 (54) Synergistic effect of glyburide and milrinone Synergistische Wirkung von Glyburide und Milrinone Effet synergique de glyburide et milrinone (84) Designated Contracting States: AT BE CH CY DE DK ES FI FR GB GR IE IT LI LU MC NL PT SE TR (30) Priority: 13.04.2000 US 196728 (43) Date of publication of application: 17.10.2001 Bulletin 2001/42 (73) Proprietor: Pfizer Products Inc. Groton, Connecticut 06340 (US) (72) Inventors: • Fryburg, David Albert Groton, Connecticut 06340 (US) • Parker, Janice Catherine Groton, Connecticut 06340 (US) (74) Representative: Wood, David John et al PFIZER LIMITED, [...] (56) References cited: WO-A-98/36755 • PARKER J C ET AL: "EFFECT OF CYCLIC AMP PHOSPHODIESTERASE INHIBITORS ON INSULIN SECRETION AND GLYCEMIA" DIABETOLOGIA, BERLIN, DE, vol. 39, no. SUPPL 1, 1 September 1996 (1996-09-01), page A225 XP001061977 ISSN: 0012-186X • FUJIMOTO SHIMPEI ET AL: "The novel insulinotropic mechanism of pimobendan: Direct enhancement of the exocytotic process of insulin secretory granules by increased Ca2+ sensitivity in beta-cells." ENDOCRINOLOGY, vol. 139, no. 3, March 1998 (1998-03), pages 1133-1140, XP001070282 ISSN: 0013-7227 • HÖHN H ET AL: "Potential Antidiabetic Agents. Pyrazolo[3,4-b]pyridines" JOURNAL OF MEDICINAL CHEMISTRY, AMERICAN CHEMICAL SOCIETY. -

伊域化學藥業(香港)有限公司 API Antidiabetic Agents

® 伊域化學藥業(香港)有限公司 YICK-VIC CHEMICALS & PHARMACEUTICALS (HK) LTD Rm 1006, 10/F, Hewlett Centre, Tel: (852) 25412772 (4 lines) No. 52-54, Hoi Yuen Road, Fax: (852) 25423444 / 25420530 / 21912858 Kwun Tong, E-mail: [email protected] YICK -VIC 伊域 Kowloon, Hong Kong. Site: http://www.yickvic.com API Antidiabetic Agents Product Code CAS Product Name PH-4912MA 5989-02-6 (-)-LOLIOLIDE 11028-27-6 SPI-2021AG 334618-23-4 (R)-(-)-3-AMINOPIPERIDINE DIHYDROCHLORIDE PH-3177EA 556-27-4 (S)-L-ALLIIN (REFERENCE GRADE) SPI-3425AA 2295-31-0 2,4-THIAZOLIDINEDIONE SPI-0594DC 22115-41-9 2-CYANOBENZYL BROMIDE SPI-1745BC 4318-56-3 6-CHLORO-3-METHYLURACIL MIS-1068 4270-27-3 6-CHLOROURACIL PH-4448 56180-94-0 ACARBOSE PH-1698CA 60-92-4 ADENOSINE-3',5'-CYCLIC MONOPHOSPHATE PH-3177AA 539-86-6 ALLICIN PH-3177AC 539-86-6 ALLICIN (REFERENCE GRADE) PH-3177EE 17795-27-6 ALLIIN (MIXTURE OF 4 ISOMERS) 112319-21-8 (DL-ALANINE-ISOMER) PH-2641AA 850649-62-6 ALOGLIPTIN BENZOATE PH-5937B 126724-49-0 AMYLIN (HUMAN) Copyright © 2018 YICK-VIC CHEMICALS & PHARMACEUTICALS (HK) LTD. All rights reserved. 
135702-23-7 (FRAGMENT 8-37) 118068-30-7 (FRAGMENT 20-29) 122384-88-7 (AMLINTIDE,SYNTHETIC VERSION OF AMYLIN) PH-0609FA 739366-20-2 ANAGLIPTIN PH-4912EA 465-00-9 ARJUNOLIC ACID PH-5950AJ 863031-24-7 AZILSARTAN KAMEDOXOMIL PH-5950AE 863031-21-4 AZILSARTAN MEDOXOMIL PH-1160GA 22457-89-2 BENFOTIAMINE CC-2199HE 38213-69-3 BIS(MALTOLATO)OXOVANADIUM(IV) PH-5238CP BLACK MULBERRY LEAF FLAVONOID PH-5238CC BLACK MULBERRY LEAF POLYSACCHARIDE SPI-1333FA 109-73-9 BUTYLAMINE MIS-6185 109-73-9 BUTYLAMINE (REFERENCE GRADE) PH-0608HA 842133-18-0 CANAGLIFLOZIN PH-0608HE 928672-86-0 CANAGLIFLOZIN HEMIHYDRATE MIS-9365 CHAGA POLYSACCHARIDES PH-3214GC 868363-81-9 CHITOGLUCAN (tradename) PH-1148 94-20-2 CHLORPROPAMIDE PH-5065 74772-77-3 CIGLITAZONE MIS-9361 COPRINUS COMATUS EXTRACT MIS-11930 182315-46-4 CYCLOCARIC ACID B PH-4912AE CYCLOCARYA PALIURUS EXTRACT PH-4912AA CYCLOCARYA PALIURUS LEAF PH-4912AC CYCLOCARYA PALIURUS TEA