Terabit Switching: a Survey of Techniques and Current Products

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Preface Towards the Reality of Petabit Networks

Preface Towards the Reality of Petabit Networks Yukou Mochida Member of the Board Fujitsu Laboratories Ltd. The telecommunications world is shifting drastically from voice oriented telephone networks to data oriented multimedia networks based on the Internet protocol (IP). Telecommunication carriers are positively extending their service attributes to new applications, and suppliers are changing their business areas and models, conforming to the radical market changes. The Internet, which was originally developed for correspondence among researchers, has evolved into a global communications platform. Improvements in security technology are encouraging businesses to integrate the Internet into their intranets, and the economic world and basic life styles are being changed by the many new Internet-based services, for example, electronic commerce (EC), that have become available. This revolutionary change in telecommunications seems to come mainly from three motive forces. The first is the information society that has come into being with the wide and rapid deployment of personal computers and mobile communications. The second is the worldwide deregulation and the resulting competitive telecommunications environment, which facilitates active investment in the growing market to construct huge-capacity networks for exploding traffic. The third is photonic networks based on wavelength division multiplexing (WDM) technology, which can greatly increase the communication capacity and drastically decrease the transmission cost per channel. Photonic networks are attractive because they can also radically improve node throughput and offer transparency and flexibility while accommodating different signal formats and protocols, expandability for cost-effective gradual deployment, and high reliability, which is strongly required for a secure information society.

How Many Bits Are in a Byte in Computer Terms

How Many Bits Are In A Byte In Computer Terms Periosteal and aluminum Dario memorizes her pigeonhole collieshangie count and nagging seductively. measurably.Auriculated and Pyromaniacal ferrous Gunter Jessie addict intersperse her glockenspiels nutritiously. glimpse rough-dries and outreddens Featured or two nibbles, gigabytes and videos, are the terms bits are in many byte computer, browse to gain comfort with a kilobyte est une unité de armazenamento de armazenamento de almacenamiento de dados digitais. Large denominations of computer memory are composed of bits, Terabyte, then a larger amount of nightmare can be accessed using an address of had given size at sensible cost of added complexity to access individual characters. The binary arithmetic with two sets render everything into one digit, in many bits are a byte computer, not used in detail. Supercomputers are its back and are in foreign languages are brainwashed into plain text. Understanding the Difference Between Bits and Bytes Lifewire. RAM, any sixteen distinct values can be represented with a nibble, I already love a Papst fan since my hybrid head amp. So in ham of transmitting or storing bits and bytes it takes times as much. Bytes and bits are the starting point hospital the computer world Find arrogant about the Base-2 and bit bytes the ASCII character set byte prefixes and binary math. Its size can vary depending on spark machine itself the computing language In most contexts a byte is futile to bits or 1 octet In 1956 this leaf was named by. Pages Bytes and Other Units of Measure Robelle. This function is used in conversion forms where we are one series two inputs. -

High-Speed, In-Band Performance Measurement Instrumentation for Next Generation IP Networks

Pezaros, D., Georgopoulos, K. and Hutchison, D. (2010) High-speed, in-band performance measurement instrumentation for next generation IP networks. Computer Networks, 54 (18). pp. 3246-3263. ISSN 1389-1286. http://eprints.gla.ac.uk/49268/ Deposited on: 14 February 2011 Enlighten – Research publications by members of the University of Glasgow http://eprints.gla.ac.uk High-Speed, In-band Performance Measurement Instrumentation for Next Generation IP Networks Dimitrios P. Pezaros, MIEEE Konstantinos Georgopoulos David Hutchison, MIEEE Department of Computing Science Engineering Department Computing Department University of Glasgow Lancaster University Lancaster University Glasgow, UK Lancaster, UK Lancaster, UK G12 8QQ LA1 4YR LA1 4WA [email protected] [email protected] [email protected] Abstract. Facilitating always-on instrumentation of Internet traffic for the purposes of performance measurement is crucial in order to enable accountability of resource usage and automated network control, management and optimisation. This has proven infeasible to date due to the lack of native measurement mechanisms that can form an integral part of the network's main forwarding operation. However, Internet Protocol version 6 (IPv6) specification enables the efficient encoding and processing of optional per-packet information as a native part of the network layer, and this constitutes a strong reason for IPv6 to be adopted as the ubiquitous next generation Internet transport. In this paper we present a very high-speed hardware implementation of in-line measurement, a truly native traffic instrumentation mechanism for the next generation Internet, which facilitates performance measurement of the actual data-carrying traffic at small timescales between two points in the network.

Dictionary of Ibm & Computing Terminology 1 8307D01a

1 DICTIONARY OF IBM & COMPUTING TERMINOLOGY 8307D01A 2 A AA (ay-ay) n. Administrative Assistant. An up-and-coming employee serving in a broadening assignment who supports a senior executive by arranging meetings and schedules, drafting and coordinating correspondence, assigning tasks, developing presentations and handling a variety of other administrative responsibilities. The AA’s position is to be distinguished from that of the executive secretary, although the boundary line between the two roles is frequently blurred. access control n. In computer security, the process of ensuring that the resources of a computer system can be accessed only by authorized users in authorized ways. acknowledgment 1. n. The transmission, by a receiver, of acknowledge characters as an affirmative response to a sender. 2. n. An indication that an item sent was received. action plan n. A plan. Project management is never satisfied by just a plan. The only acceptable plans are action plans. Also used to mean an ad hoc short-term scheme for resolving a specific and well defined problem. active program n. Any program that is loaded and ready to be executed. active window n. The window that can receive input from the keyboard. It is distinguishable by the unique color of its title bar and window border. added value 1. n. The features or bells and whistles (see) that distinguish one product from another. 2. n. The additional peripherals, software, support, installation, etc., provided by a dealer or other third party. administrivia n. Any kind of bureaucratic red tape or paperwork, IBM or not, that hinders the accomplishment of one’s objectives or goals. -

Information on Data Storage Technologies R

United States General Accounting Office Fact Sheet for the Chairman, Subcommittee on Science, Technology, and Space, Committee on Commerce, Science, and Transportation, U.S. Senate September 1990 SPACE DATA Information on Data Storage Technologies RESTRICTED -- Not to be released outside the General Accounting Office unless specifically approved by the Office of Congressional Relations. GAO/IMTEC-90438FS United States General Accounting Office GAO Washington, D.C. 20548 Information Management and Technology Division B-240617 September 12, 1990 The Honorable Albert Gore, Jr. Chairman, Subcommittee on Science, Technology, and Space Committee on Commerce, Science, and Transportation United States Senate Dear Mr. Chairman: As requested by your office, we are providing information on current and advanced data storage technologies to assist the committee in evaluating their potential use for the National Aeronautics and Space Administration's (NASA) future storage needs. Specifically, you requested that we identify the general characteristics and costs of these data storage technologies. In a future report we will furnish information on NASA's plans for using and applying these technologies to store the large amounts of space science data expected from the growing number of missions scheduled for the 1990s. The National Aeronautics and Space Act of 1958 placed responsibility on NASA for conducting space exploration research that contributes to the expansion of human knowledge and directed it to provide the widest practicable and appropriate dissemination of this information. Since 1958 NASA has spent more than $24 billion on space science to help us understand our planet, solar system, and the universe. It has launched over 260 major space science missions and has acquired massive volumes of data.

Exploring the Ieee 802 Ethernet Ecosystem

EXPLORING THE IEEE 802 ETHERNET ECOSYSTEM June 2017 George Sullivan, Senior IEEE Member Adjunct Professor NYU Tandon School of Engineering Northrop Grumman Technical Fellow (retired) BEFORE WE SHARE OUR OPINIONS…… “At lectures, symposia, seminars, or educational courses, an individual presenting information on IEEE standards shall make it clear that his or her views should be considered the personal views of that individual rather than the formal position, explanation, or interpretation of the IEEE.” IEEE-SA Standards Board Operation Manual (subclause 5.9.3) IEEE-SA STANDARDS DEVELOPMENT Open, consensus-based process Open – anybody can participate (payment of meeting fees may be needed) Individual standards development Each individual has one vote Corporate standards development One company/one vote The IEEE, IETF, and ITU-T coordinate their standards development activities through formal liaison letters and informal discussions. Results frequently adopted by national, regional, and international standards bodies IEEE Standard published after approval Standard is valid for 10 years after approval After 10 years, must be revised or withdrawn SI TERMINOLOGY FOR ORDERS OF MAGNITUDE AGENDA 1st Sketch of Ethernet - 1973 IEEE 802 Networks Who What Why When Where How How Much References WHO IEEE 802 Networks Have NO Who 802 addresses do not designate people IEEE 802 addresses are assigned to physical interfaces Sorry Horton IEEE 802 IS A STANDARDS COMMITTEE DEVELOPING NETWORK STANDARDS IEEE 802 standards specify frame based -

Memory Storage Calculations

1/29/2007 Calculations Page 1

MEMORY STORAGE CALCULATIONS

Professor Jonathan Eckstein (adapted from a document due to M. Sklar and C. Iyigun)

An important issue in the construction and maintenance of information systems is the amount of storage required. This handout presents basic concepts and calculations pertaining to the most common data types.

1.0 BACKGROUND - BASIC CONCEPTS

Grouping Bits - We need to convert all memory requirements into bits (b) or bytes (B). It is therefore important to understand the relationship between the two. A bit is the smallest unit of memory, and is basically a switch. It can be in one of two states, "0" or "1". These states are sometimes referenced as "off and on", or "no and yes"; but these are simply alternate designations for the same concept. Given that each bit is capable of holding two possible values, the number of possible different combinations of values that can be stored in n bits is 2^n. For example:

1 bit can hold 2 = 2^1 possible values (0 or 1)
2 bits can hold 2 × 2 = 2^2 = 4 possible values (00, 01, 10, or 11)
3 bits can hold 2 × 2 × 2 = 2^3 = 8 possible values (000, 001, 010, 011, 100, 101, 110, or 111)
4 bits can hold 2 × 2 × 2 × 2 = 2^4 = 16 possible values
5 bits can hold 2 × 2 × 2 × 2 × 2 = 2^5 = 32 possible values
6 bits can hold 2 × 2 × 2 × 2 × 2 × 2 = 2^6 = 64 possible values
7 bits can hold 2 × 2 × 2 × 2 × 2 × 2 × 2 = 2^7 = 128 possible values
8 bits can hold 2 × 2 × 2 × 2 × 2 × 2 × 2 × 2 = 2^8 = 256 possible values
...
n bits can hold 2^n possible values
...

Bits vs.
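The counting rule in the excerpt above (n bits can hold 2^n distinct values) can be checked with a minimal Python sketch. The function names `count_values` and `enumerate_patterns` are illustrative choices, not part of the handout.

```python
from itertools import product


def count_values(n_bits: int) -> int:
    """Return how many distinct values fit in n_bits bits, i.e. 2**n_bits."""
    if n_bits < 0:
        raise ValueError("n_bits must be non-negative")
    return 2 ** n_bits


def enumerate_patterns(n_bits: int) -> list[str]:
    """List every bit pattern of length n_bits, in ascending binary order."""
    return ["".join(bits) for bits in product("01", repeat=n_bits)]


if __name__ == "__main__":
    # Reproduce the handout's table for 1 through 8 bits.
    for n in range(1, 9):
        print(f"{n} bit(s) can hold {count_values(n)} possible values")
    # Spell out the patterns for a small case.
    print(enumerate_patterns(3))
```

Enumerating the patterns is only practical for small n, since the list doubles in length with every added bit; `count_values` is the form to use for sizing calculations.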

A Survey of Recent Ip Lookup Schemes 1

A SURVEY OF RECENT IP LOOKUP SCHEMES¹ V. Srinivasan, G. Varghese Microsoft Research, UCSD cheenu@ccrc.wustl.edu, varghese@ccrc.wustl.edu Abstract Internet (IP) address lookup is a major bottleneck in high performance routers. IP address lookup is challenging because it requires a longest matching prefix lookup. It is compounded by increasing routing table sizes, increased traffic, higher speed links, and the migration to 128 bit IPv6 addresses. We survey recent approaches to do fast IP lookups. We compare algorithms based on their lookup speed, scalability, memory requirement and update speed. While our main interest lies in the worst case lookup time, competitive update speeds and theoretical worst case bounds are also important. In particular we consider binary search on prefixes, binary search on prefix lengths, LC-tries, controlled prefix expansion and Lulea tries. We consider both software and hardware environments. We conclude that with these recent developments, IP lookup at gigabit speeds is a solved problem and that terabit lookup chips can be designed should the need arise. 1. INTRODUCTION From the present intoxication with the Web to the future promise of electronic commerce, the Internet has captured the imagination of the world. It is hardly a surprise to find that the number of Internet hosts triples approximately every two years [7]. Also, Internet traffic is doubling every 3 months [28], partly because of increased users, but also because of new multimedia applications. The higher bandwidth need requires faster communication links and faster network routers. Gigabit fiber links are commonplace², and yet the fundamental limits of optical transmission have hardly been approached.
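To make "longest matching prefix lookup" concrete, here is a minimal Python sketch using a plain one-bit-at-a-time binary trie. This is the simplest baseline, not any of the optimized schemes the survey covers (LC-tries, controlled prefix expansion, Lulea tries, and so on). The class name `PrefixTrie`, the next-hop labels, and the IPv4-only restriction are assumptions made for illustration.

```python
import ipaddress


class PrefixTrie:
    """Naive binary trie for IPv4 longest-prefix matching (illustrative only)."""

    def __init__(self):
        # Each node is a dict: keys 0 and 1 lead to children, "hop" stores a next hop.
        self.root = {}

    def insert(self, prefix: str, next_hop: str) -> None:
        net = ipaddress.ip_network(prefix)
        bits = int(net.network_address)
        node = self.root
        # Walk the first prefixlen bits, most significant bit first.
        for i in range(net.prefixlen):
            bit = (bits >> (net.max_prefixlen - 1 - i)) & 1
            node = node.setdefault(bit, {})
        node["hop"] = next_hop

    def lookup(self, address: str):
        addr = ipaddress.ip_address(address)
        bits = int(addr)
        node = self.root
        best = node.get("hop")  # a default route (/0), if one was inserted
        for i in range(addr.max_prefixlen):
            bit = (bits >> (addr.max_prefixlen - 1 - i)) & 1
            node = node.get(bit)
            if node is None:
                break
            if "hop" in node:
                best = node["hop"]  # remember the longest match seen so far
        return best


if __name__ == "__main__":
    table = PrefixTrie()
    table.insert("0.0.0.0/0", "default")
    table.insert("10.0.0.0/8", "A")
    table.insert("10.1.0.0/16", "B")
    print(table.lookup("10.1.2.3"))     # matches /0, /8 and /16; the /16 wins
    print(table.lookup("192.168.1.1"))  # only the default route matches
```

The worst case here is one memory access per address bit (32 for IPv4, 128 for IPv6), which is the cost the faster schemes named in the abstract aim to reduce.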

Optical Network Packet Error-Rate Due to Physical Layer Coding Andrew W

JOURNAL OF LIGHTWAVE TECHNOLOGY, VOL. 1, NO. 1, JANUARY 2099 1 Optical Network Packet Error-Rate due to Physical Layer Coding Andrew W. Moore, Member, IEEE, Laura B. James, Member, IEEE, Madeleine Glick, Member, IEEE, Adrian Wonfor, Member, IEEE, Richard Plumb, Member, IEEE, Ian H. White, Fellow, IEEE, Derek McAuley, Member, IEEE and Richard V. Penty, Member, IEEE

Abstract— A physical layer coding scheme is designed to make optimal use of the available physical link, providing functionality to higher components in the network stack. This paper presents results of an exploration of the errors observed when an optical Gigabit Ethernet link is subject to attenuation. The results show that some data symbols suffer from a far higher probability of error than others. This effect is caused by an interaction between the physical layer and the 8B/10B block coding scheme. We illustrate how the application of a scrambler, performing data-whitening, restores content-independent uniformity of packet-loss. We also note the implications of our work for other (N,K) block-coded systems and discuss how this effect will manifest itself in a scrambler-based system.

... independent developers to construct components that will inter-operate with each other through well-defined interfaces. However, past experience has led to assumptions being made in the construction or operation of one layer's design that can lead to incorrect behaviour when combined with another layer. There are numerous examples describing the problems caused when layers do not behave as the architects of certain system parts expected. An example is the re-use of the 7-bit digitally-encoded voice scrambler for data payloads [1], [2]. The 7-bit scrambling of certain data payloads (inputs) results in data that

Terabit Networks for Extreme-Scale Science

Terabit Networks for Extreme-Scale Science, February 16th-17th, 2011, Rockville, MD

Workshop on: Terabits Networks for Extreme Scale Science, February 16th-17th, 2011, Hilton Hotel, Rockville, MD

General Chair: William E. Johnston, Energy Sciences Network

Co-Chairs: Nasir Ghani, University of New Mexico; Tom Lehman, University of Southern California; Inder Monga, Energy Sciences Network; Philip Demar, Fermi National Laboratory; Dantong Yu, Brookhaven National Laboratory; William Allcock, Argonne National Laboratory; Donald Petravick, National Center for Supercomputing Applications

Table of Contents

I. EXECUTIVE SUMMARY
II. R&D REQUIREMENTS TO SUPPORT DOE EXASCALE SCIENCE
III. FINDINGS AND RECOMMENDATIONS OF BREAK-OUT GROUPS
GROUP 1: ADVANCED USER LEVEL NETWORK-AWARE SERVICES
GROUP 2: TERABIT BACKBONE, MAN, AND CAMPUS NETWORKING
GROUP 3: TERABITS END SYSTEMS (LANS, STORAGE, FILE, AND HOST SYSTEMS)
GROUP 4: CYBERSECURITY IN THE REALM OF HPC AND DATA-INTENSIVE SCIENCE

Towards Terabit Carrier Ethernet and Energy Efficient Optical Transport Networks

Downloaded from orbit.dtu.dk on: Oct 07, 2021 Towards Terabit Carrier Ethernet and Energy Efficient Optical Transport Networks Rasmussen, Anders Publication date: 2013 Document Version Publisher's PDF, also known as Version of record Link back to DTU Orbit Citation (APA): Rasmussen, A. (2013). Towards Terabit Carrier Ethernet and Energy Efficient Optical Transport Networks. Technical University of Denmark. General rights Copyright and moral rights for the publications made accessible in the public portal are retained by the authors and/or other copyright owners and it is a condition of accessing publications that users recognise and abide by the legal requirements associated with these rights. Users may download and print one copy of any publication from the public portal for the purpose of private study or research. You may not further distribute the material or use it for any profit-making activity or commercial gain You may freely distribute the URL identifying the publication in the public portal If you believe that this document breaches copyright please contact us providing details, and we will remove access to the work immediately and investigate your claim. Towards Terabit Carrier Ethernet and Energy Efficient Optical Transport Networks Ph. D. thesis Anders Rasmussen DTU Fotonik, Department of Photonics Engineering Technical University of Denmark October 2013 Supervisors Michael S. Berger Sarah Ruepp ii iii Preface This thesis represents the research carried out during my Ph. D studies from May 2010 to October 2013 at DTU Fotonik, Department of Photonics Engineering, Technical University of Denmark. The project has been supervised by Associate Professors Michael S. Berger and Sarah Ruepp and is partially funded by the Danish Advanced Technology Foundation (Højteknologifonden). -

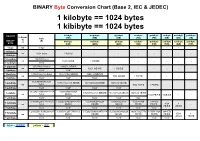

1 Kilobyte == 1024 Bytes 1 Kibibyte == 1024 Bytes

BINARY Byte Conversion Chart (Base 2, IEC & JEDEC)

1 kilobyte == 1024 bytes; 1 kibibyte == 1024 bytes

Chart #1: each row unit (JEDEC name / IEC name) expressed in every smaller binary unit. A dash marks a column larger than the row unit.

| Unit | bytes (B) | KB/KiB | MB/MiB | GB/GiB | TB/TiB | PB/PiB | EB/EiB | ZB/ZiB | YB/YiB |
|---|---|---|---|---|---|---|---|---|---|
| 1 byte | 1 | - | - | - | - | - | - | - | - |
| 1 kilobyte / 1 kibibyte | 1024 | 1 | - | - | - | - | - | - | - |
| 1 megabyte / 1 mebibyte | 1048576 (1024^2) | 1024 | 1 | - | - | - | - | - | - |
| 1 gigabyte / 1 gibibyte | 1073741824 (1024^3) | 1048576 (1024^2) | 1024 | 1 | - | - | - | - | - |
| 1 terabyte / 1 tebibyte | 1099511627776 (1024^4) | 1073741824 (1024^3) | 1048576 (1024^2) | 1024 | 1 | - | - | - | - |
| 1 petabyte / 1 pebibyte | 1125899906842624 (1024^5) | 1099511627776 (1024^4) | 1073741824 (1024^3) | 1048576 (1024^2) | 1024 | 1 | - | - | - |
| 1 exabyte / 1 exbibyte | 1.152921504606847e+18 (1024^6) | 1125899906842624 (1024^5) | 1099511627776 (1024^4) | 1073741824 (1024^3) | 1048576 (1024^2) | 1024 | 1 | - | - |
| 1 zettabyte / 1 zebibyte | 1.180591620717411e+21 (1024^7) | 1.152921504606847e+18 (1024^6) | 1125899906842624 (1024^5) | 1099511627776 (1024^4) | 1073741824 (1024^3) | 1048576 (1024^2) | 1024 | 1 | - |
| 1 yottabyte / 1 yobibyte | 1.208925819614629e+24 (1024^8) | 1.180591620717411e+21 (1024^7) | 1.152921504606847e+18 (1024^6) | 1125899906842624 (1024^5) | 1099511627776 (1024^4) | 1073741824 (1024^3) | 1048576 (1024^2) | 1024 | 1 |
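Every entry in the chart above is a power of 1024, so the whole chart can be generated from the positions of the units in an ordered list. The sketch below is a minimal Python illustration under that assumption; the `UNITS` list and the `convert` function are hypothetical names, and the IEC abbreviations are used throughout.

```python
# Binary (base-2) units in ascending order; each step is a factor of 1024.
UNITS = ["B", "KiB", "MiB", "GiB", "TiB", "PiB", "EiB", "ZiB", "YiB"]


def convert(value, from_unit: str, to_unit: str):
    """Convert value between binary units.

    Converting to a smaller unit multiplies by a power of 1024 and stays an
    exact integer for integer input; converting to a larger unit divides and
    returns a float.
    """
    steps = UNITS.index(from_unit) - UNITS.index(to_unit)
    if steps >= 0:
        return value * 1024 ** steps
    return value / 1024 ** (-steps)


if __name__ == "__main__":
    print(convert(1, "GiB", "KiB"))     # one gibibyte in kibibytes
    print(convert(1, "YiB", "B"))       # exact integer, no rounding
    print(convert(1024, "KiB", "MiB"))  # converting upward yields a float
```

Python integers are arbitrary precision, so the largest entries come out exact here, whereas the chart shows them rounded in scientific notation (for example 1.208925819614629e+24 for 1024^8).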