The Entropy Problem


Recommended publications


A Quantitative Study of Advanced Encryption Standard Performance

A Quantitative Study of Advanced Encryption Standard Performance as it Relates to Cryptographic Attack Feasibility. Daniel Stephen Hawthorne, United States Military Academy. West Point ETD 9, USMA Digital Commons, December 2018. https://digitalcommons.usmalibrary.org/faculty_etd/9

A dissertation presented in partial fulfillment of the requirements for the degree of Doctor of Computer Science, Colorado Technical University, December 17, 2018. Committee: Dr. Richard Livingood, Ph.D., Chair; Dr. Kelly Hughes, DCS, Committee Member; Dr. James O. Webb, Ph.D., Committee Member. © Daniel Stephen Hawthorne, 2018.

Abstract: The advanced encryption standard (AES) is the premier symmetric key cryptosystem in use today. Given its prevalence, the security provided by AES is of utmost importance. Technology is advancing at an incredible rate, in both capability and popularity, much faster than its rate of advancement in the late 1990s when AES was selected as the replacement standard for DES. Although the literature surrounding AES is robust, most studies fall into either theoretical or practical yet infeasible.

Constructing Low-Weight Dth-Order Correlation-Immune Boolean Functions Through the Fourier-Hadamard Transform Claude Carlet and Xi Chen*

Constructing low-weight dth-order correlation-immune Boolean functions through the Fourier-Hadamard transform. Claude Carlet and Xi Chen*

Abstract: The correlation immunity of Boolean functions is a property related to cryptography, to error correcting codes, to orthogonal arrays (in combinatorics, which was also a domain of interest of S. Golomb) and in a slightly looser way to sequences. Correlation-immune Boolean functions (in short, CI functions) have the property of keeping the same output distribution when some input variables are fixed. They have been widely used as combiners in stream ciphers to allow resistance to the Siegenthaler correlation attack. Very recently, a new use of CI functions has appeared in the framework of side channel attacks (SCA). To reduce the cost overhead of counter-measures to SCA, CI functions need to have low Hamming weights. This poses new challenges, since the known constructions, which are based on properties of the Walsh-Hadamard transform, do not allow building unbalanced CI functions. In this paper, we propose constructions of low-weight dth-order CI functions based on the Fourier-Hadamard transform, while the known constructions of resilient functions are based on the Walsh-Hadamard transform. We first prove a simple but powerful result, which means that one need only consider the case where d is odd in further research. Then we investigate how to construct low Hamming weight CI functions through the Fourier-Hadamard transform (which behaves well with respect to the multiplication of Boolean functions). We use the characterization of CI functions by the Fourier-Hadamard transform and introduce a related general construction of CI functions by multiplication.

Ohio IT Standard ITS-SEC-01 Data Encryption and Cryptography

Statewide Standard, State of Ohio IT Standard

Standard Number: ITS-SEC-01
Title: Data Encryption and Cryptography
Effective Date: 03/12/2021
Issued By: Ervan D. Rodgers II, Assistant Director/State Chief Information Officer, Office of Information Technology, Ohio Department of Administrative Services
Version Identifier: 2.0
Published By: Investment and Governance Division, Ohio Office of Information Technology

1.0 Purpose. This state IT standard defines the minimum requirements for cryptographic algorithms that are cryptographically strong and are used in security services that protect at-risk or sensitive data as defined and required by agency or State policy, standard or rule. This standard does not classify data elements; does not define the security schemes and mechanisms for devices such as tape backup systems, storage systems, mobile computers or removable media; and does not identify or approve secure transmission protocols that may be used to implement security requirements.

2.0 Scope. Pursuant to Ohio Administrative Policy IT-01, “Authority of the State Chief Information Officer to Establish Ohio IT Policy,” this state IT standard is applicable to every organized body, office, or agency established by the laws of the state for the exercise of any function of state government except for those specifically exempted.

3.0 Background. The National Institute of Standards and Technology (NIST) conducts extensive research and development in cryptography techniques. Its publications include technical standards for data encryption, digital signature and message authentication as well as guidelines for implementing information security and managing cryptographic keys. These standards and guidelines have been mandated for use in federal agencies and adopted by state governments and private enterprises.

State of the Art in Lightweight Symmetric Cryptography

State of the Art in Lightweight Symmetric Cryptography. Alex Biryukov (SnT, CSC, University of Luxembourg) and Léo Perrin (SnT, University of Luxembourg)

Abstract. Lightweight cryptography has been one of the “hot topics” in symmetric cryptography in recent years. A huge number of lightweight algorithms have been published, standardized and/or used in commercial products. In this paper, we discuss the different implementation constraints that a “lightweight” algorithm is usually designed to satisfy. We also present an extensive survey of all lightweight symmetric primitives we are aware of. It covers designs from the academic community, from government agencies and proprietary algorithms which were reverse-engineered or leaked. Relevant national (NIST...) and international (ISO/IEC...) standards are listed. We then discuss some trends we identified in the design of lightweight algorithms, namely the designers’ preference for ARX-based and bitsliced-S-Box-based designs and simple key schedules. Finally, we argue that lightweight cryptography is too large a field and that it should be split into two related but distinct areas: ultra-lightweight and IoT cryptography. The former deals only with the smallest of devices for which a lower security level may be justified by the very harsh design constraints. The latter corresponds to low-power embedded processors for which the AES and modern hash functions are costly but which have to provide a high level of security due to their greater connectivity.

Keywords: Lightweight cryptography · Ultra-Lightweight · IoT · Internet of Things · SoK · Survey · Standards · Industry

1 Introduction. The Internet of Things (IoT) is one of the foremost buzzwords in computer science and information technology at the time of writing.

Construction of Stream Ciphers from Block Ciphers and Their Security

Sridevi, International Journal of Computer Science and Mobile Computing (IJCSMC), Vol. 3, Issue 9, September 2014, pg. 703-714. A Monthly Journal of Computer Science and Information Technology, ISSN 2320–088X. Available online at www.ijcsmc.com. Research article. Sridevi, Assistant Professor, Department of Computer Science, Karnatak University, Dharwad.

Abstract: With well-established encryption algorithms like DES or AES at hand, one could have the impression that most of the work for building a cryptosystem (for example, a suite of algorithms for the transmission of encrypted data over the internet) is already done. But the task of a cipher is very specific: to encrypt or decrypt a data block of a specified length. Given a plaintext of arbitrary length, the simplest approach would be to break it down into blocks of the desired length and to use padding for the final block. Each block is encrypted separately with the same key, which results in identical ciphertext blocks for identical plaintext blocks. This is known as Electronic Code Book (ECB) mode of operation, and is not recommended in many situations because it does not hide data patterns well. Furthermore, ciphertext blocks are independent of each other, allowing an attacker to substitute, delete or replay blocks unnoticed. The feedback modes in fact turn the block cipher into a stream cipher by using the algorithm as a keystream generator. Since every mode may yield different usage and security properties, it is necessary to analyse them in detail.
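The ECB weakness described in this abstract can be seen in a few lines of Python. This is a minimal sketch, not the paper's construction: the block cipher here is a hypothetical toy 4-round Feistel network built from HMAC-SHA256 (chosen only so the example needs nothing beyond the standard library), and the names toy_encrypt_block, toy_decrypt_block, pad and ecb_encrypt are invented for illustration. It should not be used to protect real data.

```python
import hashlib
import hmac

BLOCK = 16          # block size in bytes (an assumption for this toy cipher)
HALF = BLOCK // 2


def _round(key: bytes, i: int, half: bytes) -> bytes:
    # Round function: keyed hash of the round index and one half-block.
    return hmac.new(key, bytes([i]) + half, hashlib.sha256).digest()[:HALF]


def _xor(a: bytes, b: bytes) -> bytes:
    return bytes(x ^ y for x, y in zip(a, b))


def toy_encrypt_block(key: bytes, block: bytes, rounds: int = 4) -> bytes:
    # Feistel round: (L, R) -> (R, L xor F(R)).
    left, right = block[:HALF], block[HALF:]
    for i in range(rounds):
        left, right = right, _xor(left, _round(key, i, right))
    return left + right


def toy_decrypt_block(key: bytes, block: bytes, rounds: int = 4) -> bytes:
    # Undo the rounds in reverse order.
    left, right = block[:HALF], block[HALF:]
    for i in reversed(range(rounds)):
        left, right = _xor(right, _round(key, i, left)), left
    return left + right


def pad(data: bytes) -> bytes:
    # PKCS#7-style padding so the final block is always full.
    n = BLOCK - len(data) % BLOCK
    return data + bytes([n]) * n


def ecb_encrypt(key: bytes, plaintext: bytes) -> list:
    # ECB: every block is encrypted independently with the same key.
    padded = pad(plaintext)
    return [toy_encrypt_block(key, padded[i:i + BLOCK])
            for i in range(0, len(padded), BLOCK)]


key = b"demo key, not secret"
message = b"ATTACK AT DAWN!!" * 2 + b"RETREAT AT DUSK!"
blocks = ecb_encrypt(key, message)

# The two identical plaintext blocks produce identical ciphertext blocks.
print(blocks[0] == blocks[1])   # True
print(blocks[0] == blocks[2])   # False
```

Because each block is processed in isolation, an observer who sees the ciphertext learns which plaintext blocks repeat, and blocks can be reordered or replayed without the decryption failing. The feedback modes mentioned in the abstract avoid this by making each output depend on more than the current block and the key.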

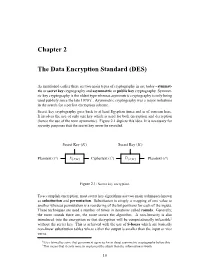

Chapter 2: The Data Encryption Standard (DES)

As mentioned earlier there are two main types of cryptography in use today: symmetric or secret key cryptography and asymmetric or public key cryptography. Symmetric key cryptography is the oldest type whereas asymmetric cryptography has only been used publicly since the late 1970s (it is claimed by some that government agencies knew about asymmetric cryptography before this). Asymmetric cryptography was a major milestone in the search for a perfect encryption scheme. Secret key cryptography goes back to at least Egyptian times and is of concern here. It involves the use of only one key which is used for both encryption and decryption (hence the use of the term symmetric). Figure 2.1 depicts this idea. It is necessary for security purposes that the secret key never be revealed.

[Figure 2.1: Secret key encryption. Plaintext (P) is encrypted with the secret key (K) as E{P,K} to give ciphertext (C), which is decrypted with the same key as D{C,K} to recover plaintext (P).]

To accomplish encryption, most secret key algorithms use two main techniques known as substitution and permutation. Substitution is simply a mapping of one value to another whereas permutation is a reordering of the bit positions for each of the inputs. These techniques are used a number of times in iterations called rounds. Generally, the more rounds there are, the more secure the algorithm. A non-linearity is also introduced into the encryption so that decryption will be computationally infeasible without the secret key. This is achieved with the use of S-boxes which are basically non-linear substitution tables where either the output is smaller than the input or vice versa.

Implementation of Advanced Encryption Standard Algorithm

International Journal of Scientific & Engineering Research, Volume 3, Issue 3, March 2012, ISSN 2229-5518. Implementation of Advanced Encryption Standard Algorithm. M. Pitchaiah, Philemon Daniel, Praveen.

Abstract: Cryptography is the study of mathematical techniques related to aspects of information security such as confidentiality, data integrity, entity authentication and data origin authentication. In data and telecommunications, cryptography is necessary when communicating over any unreliable medium, which includes any network, particularly the internet. In this paper, 128-bit AES encryption and decryption using the Rijndael algorithm (Advanced Encryption Standard algorithm) is made synthesizable using Verilog code which can be easily implemented on an FPGA. The algorithm is composed of three main parts: cipher, inverse cipher and Key Expansion. Cipher converts data to an unintelligible form called ciphertext. Key Expansion generates a key schedule that is used in the cipher and inverse cipher procedures. Cipher and inverse cipher are composed of a specific number of rounds. Each round uses a round function that is composed of four different byte-oriented transformations: SubBytes, ShiftRows, MixColumns and AddRoundKey.

Index Terms: Advanced Encryption Standard, Cryptography, Decryption, Encryption.

I. INTRODUCTION. Cryptography plays an important role in the security of data transmission [1]. This paper addresses efficient hardware implementation of the AES (Advanced Encryption Standard) algorithm and describes the design and performance testing of the Rijndael algorithm [3].

[...] standard explicitly defines the allowed values for the key length (Nk), block size (Nb), and number of rounds (Nr).

B. AES Algorithm Specification. For the AES algorithm, the length of the input block,
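The parameters Nk, Nb and Nr named in this excerpt are fixed by the AES standard (FIPS 197), where they are counted in 32-bit words and rounds. A small sketch of how they relate, with the function name aes_parameters being an assumption made for this illustration:

```python
def aes_parameters(key_bits: int) -> dict:
    """Return the FIPS 197 parameters for a given AES key size."""
    if key_bits not in (128, 192, 256):
        raise ValueError("AES allows only 128-, 192- or 256-bit keys")
    nk = key_bits // 32      # key length in 32-bit words
    nb = 4                   # block size in 32-bit words (always 128 bits)
    nr = nk + 6              # number of rounds: 10, 12 or 14
    return {
        "Nk": nk,
        "Nb": nb,
        "Nr": nr,
        # Key Expansion produces one round key per round plus an initial one.
        "expanded_key_words": nb * (nr + 1),
    }


for bits in (128, 192, 256):
    print(bits, aes_parameters(bits))
```

For the 128-bit case treated in the paper this gives Nk = 4, Nb = 4 and Nr = 10, so Key Expansion yields 44 words, that is, eleven 128-bit round keys.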

Implementation of Advanced Encryption Standard Algorithm With

International Journal of Advancements in Technology. Nti et al., Int J Adv Technol 2017, 8:2. DOI: 10.4172/0976-4860.1000183. ISSN: 0976-4860. Research Article, Open Access.

Implementation of Advanced Encryption Standard Algorithm with Key Length of 256 Bits for Preventing Data Loss in an Organization. Isaac Kofi Nti*, Eric Gymfi and Owusu Nyarko, Department of Electrical/Electronic Engineering, Sunyani Technical University, Ghana. *Corresponding author: Isaac Kofi Nti, Department of Electrical/Electronic Engineering, Sunyani Technical University, Ghana, Tel: 0208736247.

Received date: February 23, 2017; Accepted date: March 09, 2017; Publication date: March 16, 2017. Copyright: © 2017 Nti IK, et al. This is an open-access article distributed under the terms of the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original author and source are credited.

Abstract: Data and network security is one of the most important factors in today’s business world. Recently, businesses and firms such as financial institutions, law firms, schools, health sectors, telecommunications, mining and a number of government agencies have wanted a strategic security technique for managing their data. Organizations managing larger volumes of monetary information, bio-data and other relevant information are losing their valuable information or data at rest, in use or in motion to unauthorized parties or competitors as a result of the activities of hackers.

Higher Order Correlation Attacks, XL Algorithm and Cryptanalysis of Toyocrypt

Higher Order Correlation Attacks, XL Algorithm and Cryptanalysis of Toyocrypt. Nicolas T. Courtois, Cryptography research, Schlumberger Smart Cards, 36-38 rue de la Princesse, BP 45, 78430 Louveciennes Cedex, France. http://www.nicolascourtois.net

Abstract. Many stream ciphers are built of a linear sequence generator and a non-linear output function f. There is an abundant literature on (fast) correlation attacks, that use linear approximations of f to attack the cipher. In this paper we explore higher degree approximations, much less studied. We reduce the cryptanalysis of a stream cipher to solving a system of multivariate equations that is overdefined (much more equations than unknowns). We adapt the XL method, introduced at Eurocrypt 2000 for overdefined quadratic systems, to solving equations of higher degree. Though the exact complexity of XL remains an open problem, there is no doubt that it works perfectly well for such largely overdefined systems as ours, and we confirm this by computer simulations. We show that using XL, it is possible to break stream ciphers that were known to be immune to all previously known attacks. For example, we cryptanalyse the stream cipher Toyocrypt accepted to the second phase of the Japanese government Cryptrec program. Our best attack on Toyocrypt takes 2^92 CPU clocks for a 128-bit cipher. The interesting feature of our XL-based higher degree correlation attacks is their very loose requirements on the known keystream needed. For example they may work knowing ONLY that the ciphertext is in English.

Key Words: Algebraic cryptanalysis, multivariate equations, overdefined equations, Reed-Muller codes, correlation immunity, XL algorithm, Gröbner bases, stream ciphers, pseudo-random generators, nonlinear filtering, ciphertext-only attacks, Toyocrypt, Cryptrec.

Security Evaluation of Cryptographic Technology Security Estimation Of

NICT NEWS, Mar. 2013, No. 426. Contents: Security Evaluation of Cryptographic Technology (MORIAI Shiho); Security Estimation of Lattice-Based Cryptography: The first step to practical use (AONO Yoshinori); Cryptanalysis and CRYPTREC Ciphers List Revision (Security Fundamentals Laboratory, Network Security Research Institute); Awards; Report on Space Weather Users’ Forum; NICT Entrepreneurs' challenge 2 days (Report on The 2nd Kigyouka Koshien; Report on The ICT Venture Business Plan Contest FY2012).

Security Evaluation of Cryptographic Technology. MORIAI Shiho, Director of Security Fundamentals Laboratory, Network Security Research Institute. Graduated in 1993. Joined NICT in 2012 after working at Nippon Telegraph and Telephone Corporation and Sony Corporation. She has been engaged in research on design and analysis of cryptographic algorithms and international standardization of cryptographic technology. Ph.D. (Engineering).

Importance of security evaluation of cryptographic technology. With advancements in networks, cryptographic technology has become a fundamental technology that sustains the foundations of modern society. The use of cryptographic technology is not just in preserving security of communications on the Internet and mobile phones but also in automatic ticket gate systems for railway passengers, electronic toll collection systems on the highway, content distribution of e-books etc., copyright protection on Blu-ray disks, and IC chip-embedded passports to pre-

Security strength. How can we evaluate security of cryptographic algorithms? The security of a cryptographic algorithm is defined as a number associated with the amount of work (that is, the number of operations) that is required to break a cryptographic algorithm with the most efficient algorithm. If the attack complexity is of the order of 2^k, we say that the cryptographic algorithm’s security is k bits. For example, if the key of Algorithm A is recovered with 2^112 decryption operations, Algorithm A provides 112 bits of security (Figure 1).
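The definition of security strength in this excerpt is a base-2 logarithm of the attacker's work. A brief sketch of the arithmetic follows; the function names and the attacker speed of 10^12 operations per second are hypothetical figures chosen only to give a sense of scale, not values from the article.

```python
import math

SECONDS_PER_YEAR = 365 * 24 * 3600


def security_bits(operations: int) -> float:
    """Bits of security for an attack costing `operations` steps."""
    return math.log2(operations)


def years_to_break(bits: int, ops_per_second: float) -> float:
    """Time to perform 2**bits operations at a given (assumed) rate."""
    return 2 ** bits / ops_per_second / SECONDS_PER_YEAR


# Algorithm A from the text: the key is recovered with 2^112 operations.
print(security_bits(2 ** 112))          # 112.0

# Hypothetical attacker performing 10^12 operations per second.
print(f"{years_to_break(112, 1e12):.2e} years")
```

Each additional bit of security doubles the work, so the gap between, say, 92 and 112 bits is a factor of 2^20, roughly a million.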

Recommendation for Block Cipher Modes of Operation

NIST Special Publication 800-38A, 2001 Edition. Recommendation for Block Cipher Modes of Operation: Methods and Techniques. Morris Dworkin. Computer Security. Computer Security Division, Information Technology Laboratory, National Institute of Standards and Technology, Gaithersburg, MD 20899-8930. December 2001. U.S. Department of Commerce: Donald L. Evans, Secretary. Technology Administration: Phillip J. Bond, Under Secretary of Commerce for Technology. National Institute of Standards and Technology: Arden L. Bement, Jr., Director.

Reports on Information Security Technology. The Information Technology Laboratory (ITL) at the National Institute of Standards and Technology (NIST) promotes the U.S. economy and public welfare by providing technical leadership for the Nation’s measurement and standards infrastructure. ITL develops tests, test methods, reference data, proof of concept implementations, and technical analyses to advance the development and productive use of information technology. ITL’s responsibilities include the development of technical, physical, administrative, and management standards and guidelines for the cost-effective security and privacy of sensitive unclassified information in Federal computer systems. This Special Publication 800-series reports on ITL’s research, guidance, and outreach efforts in computer security, and its collaborative activities with industry, government, and academic organizations.

Certain commercial entities, equipment, or materials may be identified in this document in order to describe an experimental procedure or concept adequately. Such identification is not intended to imply recommendation or endorsement by the National Institute of Standards and Technology, nor is it intended to imply that the entities, materials, or equipment are necessarily the best available for the purpose.

(Advance Encryption Scheme (AES)) and Multicrypt Encryption Scheme

International Journal of Scientific and Research Publications, Volume 2, Issue 4, April 2012, ISSN 2250-3153. Analysis of comparison between Single Encryption (Advance Encryption Scheme (AES)) and Multicrypt Encryption Scheme. Vibha Verma, Mr. Avinash Dhole, Computer Science & Engineering, Raipur Institute of Technology, Raipur, Chhattisgarh, India.

Abstract: Advanced Encryption Standard (AES) is a specification for the encryption of electronic data. It supersedes DES. The algorithm described by AES is a symmetric-key algorithm, meaning the same key is used for both encrypting and decrypting the data. Multicrypt is a scheme that allows for the encryption of any file or files. The scheme offers various private-key encryption algorithms, such as DES, 3DES, RC2, and AES. AES (Advanced Encryption Standard) is the strongest encryption and is used in various financial and public institutions where confidential, private information is important.

Index Terms: AES, Multicrypt, Cryptography, Networking, Encryption, DES, 3DES, RC2

[...] suggested a couple of changes, which Rivest incorporated. After further negotiations, the cipher was approved for export in 1989. Along with RC4, RC2 with a 40-bit key size was treated favourably under US export regulations for cryptography. Initially, the details of the algorithm were kept secret (proprietary to RSA Security), but on 29th January, 1996, source code for RC2 was anonymously posted to the Internet on the Usenet forum, sci.crypt. Mentions of CodeView and SoftICE (popular debuggers) suggest that it had been reverse engineered. A similar disclosure had occurred earlier with RC4. RC2 is a 64-bit block cipher with a variable size key. Its 18 rounds are arranged as a source-heavy Feistel network, with 16 rounds of one type (MIXING) punctuated by two rounds of another type (MASHING).