A Report on “Time-Series Analysis of R Scuti (Variable) Star Magnitude” by Kaushal Submitted in the Partial Fulfilment Of

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

-

The Agb Newsletter

THE AGB NEWSLETTER An electronic publication dedicated to Asymptotic Giant Branch stars and related phenomena Official publication of the IAU Working Group on Abundances in Red Giants No. 208 — 1 November 2014 http://www.astro.keele.ac.uk/AGBnews Editors: Jacco van Loon, Ambra Nanni and Albert Zijlstra Editorial Dear Colleagues, It is our pleasure to present you the 208th issue of the AGB Newsletter. The variety of topics is, as usual, enormous, though post-AGB phases feature prominently, as does R Scuti this time. Don’t miss the announcements of the Olivier Chesneau Prize, and of three workshops to keep you busy and entertained over the course of May–July next year. We look forward to receiving your reactions to this month’s Food for Thought (see below)! The next issue is planned to be distributed around the 1st of December. Editorially Yours, Jacco van Loon, Ambra Nanni and Albert Zijlstra Food for Thought This month’s thought-provoking statement is: What is your favourite AGB star, RSG, post-AGB object, post-RSG or PN? And why? Reactions to this statement or suggestions for next month’s statement can be e-mailed to [email protected] (please state whether you wish to remain anonymous) 1 Refereed Journal Papers Evolutionary status of the active star PZ Mon Yu.V. Pakhomov1, N.N. Chugai1, N.I. Bondar2, N.A. Gorynya1,3 and E.A. Semenko4 1Institute of Astronomy, Russian Academy of Sciences, Pyatnitskaya 48, 119017, Moscow, Russia 2Crimean Astrophysical Observatory, Nauchny, Crimea, 2984009, Russia 3Lomonosov Moscow State University, Sternberg Astronomical Institute, Universitetskij prospekt, 13, Moscow 119991, Russia 4Special Astrophysical Observatory of Russian Academy of Sciences, Russia We use original spectra and available photometric data to recover parameters of the stellar atmosphere of PZ Mon, formerly referred as an active red dwarf. -

Spectroscopy of Variable Stars

Spectroscopy of Variable Stars Steve B. Howell and Travis A. Rector The National Optical Astronomy Observatory 950 N. Cherry Ave. Tucson, AZ 85719 USA Introduction A Note from the Authors The goal of this project is to determine the physical characteristics of variable stars (e.g., temperature, radius and luminosity) by analyzing spectra and photometric observations that span several years. The project was originally developed as a research project for teachers participating in the NOAO TLRBSE program. Please note that it is assumed that the instructor and students are familiar with the concepts of photometry and spectroscopy as it is used in astronomy, as well as stellar classification and stellar evolution. This document is an incomplete source of information on these topics, so further study is encouraged. In particular, the “Stellar Spectroscopy” document will be useful for learning how to analyze the spectrum of a star. Prerequisites To be able to do this research project, students should have a basic understanding of the following concepts: • Spectroscopy and photometry in astronomy • Stellar evolution • Stellar classification • Inverse-square law and Stefan’s law Description of the Data The spectra used in this project were obtained with the Coudé Feed telescopes [Figure caption: The 2.1-meter telescope and Coudé Feed spectrograph at Kitt Peak National Observatory in Arizona. The 2.1-meter telescope is inside the white dome. The Coudé Feed spectrograph is in the right half of the building. It also uses the white tower on the right.] [Figure caption: The control room for the Coudé Feed spectrograph. The spectrograph is operated by the two computers on the left.] -

Variable Star Classification and Light Curves Manual

Variable Star Classification and Light Curves An AAVSO course for the Carolyn Hurless Online Institute for Continuing Education in Astronomy (CHOICE) This is copyrighted material meant only for official enrollees in this online course. Do not share this document with others. Please do not quote from it without prior permission from the AAVSO. Table of Contents Course Description and Requirements for Completion Chapter One- 1. Introduction . What are variable stars? . The first known variable stars 2. Variable Star Names . Constellation names . Greek letters (Bayer letters) . GCVS naming scheme . Other naming conventions . Naming variable star types 3. The Main Types of variability Extrinsic . Eclipsing . Rotating . Microlensing Intrinsic . Pulsating . Eruptive . Cataclysmic . X-Ray 4. The Variability Tree Chapter Two- 1. Rotating Variables . The Sun . BY Dra stars . RS CVn stars . Rotating ellipsoidal variables 2. Eclipsing Variables . EA . EB . EW . EP . Roche Lobes Chapter Three- 1. Pulsating Variables . Classical Cepheids . Type II Cepheids . RV Tau stars . Delta Sct stars . RR Lyr stars . Miras . Semi-regular stars 2. Eruptive Variables . Young Stellar Objects . T Tau stars . FUOrs . EXOrs . UXOrs . UV Cet stars . Gamma Cas stars . S Dor stars . R CrB stars Chapter Four- 1. Cataclysmic Variables . Dwarf Novae . Novae . Recurrent Novae . Magnetic CVs . Symbiotic Variables . Supernovae 2. Other Variables . Gamma-Ray Bursters . Active Galactic Nuclei Course Description and Requirements for Completion This course is an overview of the types of variable stars most commonly observed by AAVSO observers. We discuss the physical processes behind what makes each type variable and how this is demonstrated in their light curves. Variable star names and nomenclature are placed in a historical context to aid in understanding today’s classification scheme. -

Appendix: Spectroscopy of Variable Stars

Appendix: Spectroscopy of Variable Stars As amateur astronomers gain ever-increasing access to professional tools, the science of spectroscopy of variable stars is now within reach of the experienced variable star observer. In this section we shall examine the basic tools used to perform spectroscopy and how to use the data collected in ways that augment our understanding of variable stars. Naturally, this section cannot cover every aspect of this vast subject, and we will concentrate just on the basics of this field so that the observer can come to grips with it. It will be noticed by experienced observers that variable stars often alter their spectral characteristics as they vary in light output. Cepheid variable stars can change from G types to F types during their periods of oscillation, and young variables can change from A to B types or vice versa. Spectroscopy enables observers to monitor these changes if their instrumentation is sensitive enough. However, this is not an easy field of study. It requires patience and dedication and access to resources that most amateurs do not possess. Nevertheless, it is an emerging field, and should the reader wish to get involved with this type of observation, know that there are some excellent guides to variable star spectroscopy via the BAA and the AAVSO. Some of the workshops run by Robin Leadbeater of the BAA Variable Star section and others such as Christian Buil are a very good introduction to the field. © Springer Nature Switzerland AG 2018. M. Griffiths, Observer’s Guide to Variable Stars, The Patrick Moore Practical Astronomy Series, https://doi.org/10.1007/978-3-030-00904-5 Spectra, Spectroscopes and Image Acquisition What are spectra, and how are they observed? The spectra we see from stars are the result of the complete output in visible light of the star (in simple terms). -

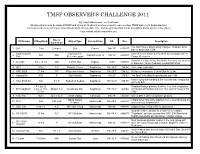

The 2011 Observers Challenge List

TMSP OBSERVER'S CHALLENGE 2011 By Kreig McBride and Tom Masterson. All observations must be made at TMSP and 25 out of 30 objects must be viewed to earn a unique TMSP Observer's Award lapel pin. You must create a record of your observations which include date, time, instruments used and a brief description and/or sketch of the object. Your records will be returned to you.

| ID Number | Object | V Magnitude | Size or Separation | Object Type | Constellation | RA | Dec | Description |
|---|---|---|---|---|---|---|---|---|
| 1 | Sol | -28m | ½ degree | Star | Cancer | 08h 39' | +27d 07' | The Sun! View 2 days noting changes. H-alpha, white light or projection is OK |
| 2 | North Galactic Pole | N/A | N/A | Astronomical Reference point | Coma Berenices | 12h 51' | +27d 07' | Catch this one before it sets. Next to the double star 31 Comae Berenices |
| 3 | U Cygni | 5.9 – 12.1m | N/A | Carbon Star | Cygnus | 19.6h | +47d 54' | Omicron-2 a wide 4.9m, 9m double lies close by as does 6” diameter, 12.6m Planetary nebula NGC6884 |
| 4 | M22 | 5.1m | 7.8' | Globular Cluster | Sagittarius | 18h 36.4' | -23d 54' | Rich, large and bright |
| 5 | NGC 6629 | 11.3m | 15” | Planetary Nebula | Sagittarius | 18h 25.7' | -23d 12' | Stellar at low powers. Central Star is 12.8m |
| 6 | Barnard 86 | N/A | 5' | Dark Nebula | Sagittarius | 18h 03' | -27d 53' | “Ink Spot” Imbedded in spectacular star field |
| 7 | NGC 6589-90 | N/A | 5' x 3' | Reflection Nebula | Sagittarius | 18h 16.9'' | -19d 47' | Faint 1' glow surrounding 9.5m star w/a faint companion 25” to its SW |
| 8 | ETA Sagittarii | A 3.2m, B 3.6m, C 10m | AB pair 3.6” | Quadruple Star | Sagittarius | 18h 17.6' | -36d 46' | Reddish/Orange primary, B white, C is 10m companion 93” distant at PA303d and D is 13m star 33” |

Variable Star Section Circular 70

The British Astronomical Association VARIABLE STAR SECTION CIRCULAR 70, APRIL 1990 ISSN 0267-9272 Office: Burlington House, Piccadilly, London, W1V 9AG CONTENTS: Doug Saw; Gordon Patson; Jack Ells; Changes in Administrative Arrangements; SS Computerization - Appeal for an Organizer; Submission of VSS Observations; Under-observed Stars in 1989 - Melvyn Taylor; Binocular Programme Priority List - John Isles; For Sale; Unusual Carbon Stars; Two Symbiotic Stars; Forthcoming VSS Report: W Cygni; Minima of Eclipsing Binaries, 1987; Preliminary Light-curves and Reports on 4 Stars (Omicron Cet, R CrB, CH Cyg, R Sct); Editorial Note; Five Semiregular Variables, 1974-87 - Ian Middlemist (TZ Cas, ST Cep, S Dra, U Lac, Y UMa); Algols and Amateurs; AAVSO Meeting, Brussels 1990 July 24-28. Charts: AX Per / UV Aur; ST Cep / S Dra; U Lac / Y UMa. Professional-Amateur Liaison Committee Newsletter No. 3 (centre pages): Observing with Amateurs’ Telescopes; Availability of Visual Data; Meeting of Photoelectric Photometry; AAVSO Meeting, Brussels, 1990 July 24-28. Printed by Castle Printers of Wittering Doug Saw It is with deep regret that we announce the death on 1990 March 6 of Doug Saw, Deputy Director and past Director and Secretary of the Section. Doug’s contribution to the Section has been exceptional, most particularly in his handling of untold thousands of observations over many years. Although in poor health recently, he continued with the administrative work until very shortly before his death. -

Further Observations of the Lambda 10830 He Line in Stars and Their Significance As a Measure of Stellar Activity

General Disclaimer: One or more of the following statements may affect this document. This document has been reproduced from the best copy furnished by the organizational source. It is being released in the interest of making available as much information as possible. This document may contain data, which exceeds the sheet parameters. It was furnished in this condition by the organizational source and is the best copy available. This document may contain tone-on-tone or color graphs, charts and/or pictures, which have been reproduced in black and white. This document is paginated as submitted by the original source. Portions of this document are not fully legible due to the historical nature of some of the material. However, it is the best reproduction available from the original submission. Produced by the NASA Center for Aerospace Information (CASI). CALIFORNIA INSTITUTE OF TECHNOLOGY, BIG BEAR SOLAR OBSERVATORY, HALE OBSERVATORIES. (NASA-CR-145974) FURTHER OBSERVATIONS OF THE LAMBDA 10830 He LINE IN STARS AND THEIR SIGNIFICANCE AS A MEASURE OF STELLAR ACTIVITY (Hale Observatories, Pasadena, Calif.) 28 p. N76-14992, Unclas. FURTHER OBSERVATIONS OF THE λ10830 He LINE IN STARS AND THEIR SIGNIFICANCE AS A MEASURE OF STELLAR ACTIVITY, H. Zirin, HALE OBSERVATORIES, CARNEGIE INSTITUTION OF WASHINGTON, CALIFORNIA INSTITUTE OF TECHNOLOGY. BBSO #0150, November 1975. ABSTRACT Measurements of the λ10830 He line in 198 stars are given, along with data on other features in that spectral range. Nearly 80% of all G and K stars show some λ10830; of these, half are variable and 1/4 show emission. -

David Stevenson the Complex Lives of Star Clusters Astronomers’ Universe

David Stevenson The Complex Lives of Star Clusters Astronomers’ Universe More information about this series at http://www.springer.com/series/6960 David Stevenson Sherwood, UK ISSN 1614-659X ISSN 2197-6651 (electronic) Astronomers’ Universe ISBN 978-3-319-14233-3 ISBN 978-3-319-14234-0 (eBook) DOI 10.1007/978-3-319-14234-0 Library of Congress Control Number: 2015936043 Springer Cham Heidelberg New York Dordrecht London © Springer International Publishing Switzerland 2015 This work is subject to copyright. All rights are reserved by the Publisher, whether the whole or part of the material is concerned, specifically the rights of translation, reprinting, reuse of illustrations, recitation, broadcasting, reproduction on microfilms or in any other physical way, and transmission or information storage and retrieval, electronic adaptation, computer software, or by similar or dissimilar methodology now known or hereafter developed. The use of general descriptive names, registered names, trademarks, service marks, etc. in this publication does not imply, even in the absence of a specific statement, that such names are exempt from the relevant protective laws and regulations and therefore free for general use. The publisher, the authors and the editors are safe to assume that the advice and information in this book are believed to be true and accurate at the date of publication. Neither the publisher nor the authors or the editors give a warranty, express or implied, with respect to the material contained herein or for any errors or omissions that may have been made. Cover photo of the Orion Nebula M42 courtesy of NASA Spitzer Printed on acid-free paper Springer International Publishing AG Switzerland is part of Springer Science+Business Media (www.springer.com) For my late mother, Margaret Weir Smith Stevenson Preface Stars are fairly social beasts. -

Late Stages of Stellar Evolution*

Late stages of Stellar Evolution Joris A.D.L. Blommaert ([email protected]) Instituut voor Sterrenkunde, K.U. Leuven, Celestijnenlaan 200B, B-3001 Leuven, Belgium Jan Cami NASA Ames Research Center, MS 245-6, Moffett Field, CA 94035, USA Ryszard Szczerba N. Copernicus Astronomical Center, Rabiańska 8, 87-100 Toruń, Poland Michael J. Barlow Department of Physics & Astronomy, University College London, Gower Street, London WC1E 6BT, U.K. Abstract. A large fraction of ISO observing time was used to study the late stages of stellar evolution. Many molecular and solid state features, including crystalline silicates and the rotational lines of water vapour, were detected for the first time in the spectra of (post-)AGB stars. Their analysis has greatly improved our knowledge of stellar atmospheres and circumstellar environments. A surprising number of objects, particularly young planetary nebulae with Wolf-Rayet central stars, were found to exhibit emission features in their ISO spectra that are characteristic of both oxygen-rich and carbon-rich dust species, while far-IR observations of the PDR around NGC 7027 led to the first detections of the rotational line spectra of CH and CH+. Received: 18 October 2004, Accepted: 2 November 2004 1. Introduction ISO (Kessler et al., 1996, Kessler et al., 2003) has been tremendously important in the study of the final stages of stellar evolution. A substantial fraction of ISO observing time was used to observe different classes of evolved stars. IRAS had already shown the strong potential to discover many evolved stars with circumstellar shells in the infrared wavelength range. -

The Agb Newsletter

THE AGB NEWSLETTER An electronic publication dedicated to Asymptotic Giant Branch stars and related phenomena Official publication of the IAU Working Group on Red Giants and Supergiants No. 286, 2 May 2021 https://www.astro.keele.ac.uk/AGBnews Editors: Jacco van Loon, Ambra Nanni and Albert Zijlstra Editorial Board (Working Group Organising Committee): Marcelo Miguel Miller Bertolami, Carolyn Doherty, JJ Eldridge, Anibal García-Hernández, Josef Hron, Biwei Jiang, Tomasz Kamiński, John Lattanzio, Emily Levesque, Maria Lugaro, Keiichi Ohnaka, Gioia Rau, Jacco van Loon (Chair) Editorial Dear Colleagues, It is our pleasure to present you the 286th issue of the AGB Newsletter. A healthy 30 postings are sure to keep you inspired. Now that the Leuven workshop has happened, we are gearing up to the IAU-sponsored GAPS 2021 virtual discussion meeting aimed to lay out a roadmap for cool evolved star research: we have got an exciting line-up of new talent as well as seasoned experts introducing the final session – all are encouraged to contribute to the meeting through 5-minute presentations or live discussion and the White Paper that will follow (see announcement at the back). Those interested in the common envelope process will no doubt consider attending the CEPO 2021 virtual meeting on this topic organised at the end of August / start of September. Great news on the job front: there are Ph.D. positions in Bordeaux, postdoc positions in Warsaw and another one in Nice. Thanks to Josef Hron and Péter Ábrahám for ensuring the continuation of the Fizeau programme for exchange in the field of interferometry. -

Fall Messier Marathon Planned for November

Fall Messier Marathon planned for November. See Page 9 for details! Got an idea for a new club logo? Send a sketch to Harry Bearman. Welcome New Members! Raude Woods, Don Welch, Andy & Diane Lovejoy, Eric & Sandra Milfeld. FWAS August Meeting is Beautiful at Botanic Center Our first meeting at the Fort Worth Botanic Garden Center was a great success! A large turnout of 60 members was there to see Celebrity Astronomer Death Match--Star Hoppers vs GoTo's. We met in the "Redbud" room, which has a nice window, kitchen with counter, white board/screen, and room enough for meeting essentials. We were treated to a number of reports on Perseid observations, sunspot tracking, and asteroid watching. Preston Starr had been able to video asteroid 2002NY40, and the video was broadcast on CNN! Steve Richer also brought a set of NASA mission pins from the Mercury and Gemini missions. The remainder of the meeting was a rousing match between Becky Nordeen (representing the star hopper crowd) and Doug Christianson (representing the (Continued on page 2) Observing Reports Saturday was a partial victory for the GOTO guy and a minor concession to the starhoppers. At the FWAS site, Harry, our exalted leader, on the big Dob and me on the polar aligned Meade LX200 8" SCT. Between 930pm CDT and 1000pm the asteroid was passing thru Lyra almost straight overhead. Dobs and alt az mounted SCT's are very difficult to aim straight up, but since I was polar aligned it was a much easier task. Using the sky and telescope chart (see SkyandTelescope.com/observing/objects/asteroids/) I began tracking the mag 6 star shown between the Los Angeles and Boston tracks at the position where the -

162, December 2014

British Astronomical Association VARIABLE STAR SECTION CIRCULAR No 162, December 2014 Contents: Image of ASAS-SN-14JV - Denis Buczynski (inside front cover); From the Director - R. Pickard; Images of two new Variables - Denis Buczynski; Eclipsing Binary News - D. Loughney; Recurrent Objects News - G. Poyner; RZ Vulpeculae - G. Poyner; Spectra of Symbiotic Stars - D. Boyd; T Leo and the first recorded UGSU Superoutbursts - J. Toone; Filling in the Gaps - T. Markham; The AAVSO DSLR Observing Manual - A Review - R. Miles; Nova Del 2013 (V339 Del) - A Spectroscopic Bonanza - R. Leadbeater; Variable Star Section Meeting, York (part 2) - T. Markham; Binocular Programme - M. Taylor; Eclipsing Binary Predictions – Where to Find Them - D. Loughney; Charges for Section Publications (inside back cover); Guidelines for Contributing to the