Stages of Psychometric Measure Development: the Example of the Generalized Expertise Measure (GEM)

Recommended publications

Personalizing Predictions of Video-Induced Emotions Using Personal Memories As Context

A Blast From the Past: Personalizing Predictions of Video-Induced Emotions using Personal Memories as Context. Bernd Dudzik∗, Joost Broekens, Mark Neerincx, Hayley Hung. Delft University of Technology, Delft, South Holland, The Netherlands. ABSTRACT: A key challenge in the accurate prediction of viewers’ emotional responses to video stimuli in real-world applications is accounting for person- and situation-specific variation. An important contextual influence shaping individuals’ subjective experience of a video is the personal memories that it triggers in them. Prior research has found that this memory influence explains more variation in video-induced emotions than other contextual variables commonly used for personalizing [...] match a viewer’s current desires, e.g., to see an "amusing" video, these will likely need some sensitivity for the conditions under which any potential video will create this experience specifically for him/her. Therefore, addressing variation in emotional responses by personalizing predictions is a vital step for progressing VACA research (see the reviews by Wang et al. [68] and Baveye et al. [7]). Despite the potential benefits, existing research efforts still rarely explore incorporating situation- or person-specific context to per- [...]

Psycholinguistics

Psycholinguistics (11/09/2013). What do these activities have in common? What kind of process is involved in producing and understanding language? Questions • What is psycholinguistics? • What are the main topics of psycholinguistics? 9.1 Introduction • Psycholinguistics is the study of the language processing mechanisms. Psycholinguistics deals with the mental processes a person uses in producing and understanding language. It is concerned with the relationship between language and the human mind, for example, how word, sentence, and discourse meaning are represented and computed in the mind. • As the name suggests, it is a subject which links psychology and linguistics. • Psycholinguistics is interdisciplinary in nature and is studied by people in a variety of fields, such as psychology, cognitive science, and linguistics. It is an area of study which draws insights from linguistics and psychology and focuses upon the comprehension and production of language. The scope of psycholinguistics • The common aim of psycholinguists is to find out the structures and processes which underlie a human’s ability to speak and understand language. • Psycholinguists are not necessarily interested in language interaction between people. They are trying above all to probe into what is happening within the individual. • At its heart, psycholinguistic work consists of two questions. – What knowledge of language is needed for us to use language? – What processes are involved in the use of language? The “knowledge” question • Four broad areas of language knowledge: Semantics deals with the meanings of sentences and words.

Assessment Center Structure and Construct Validity: a New Hope

University of Central Florida STARS Electronic Theses and Dissertations, 2004-2019 2015 Assessment Center Structure and Construct Validity: A New Hope Christopher Wiese University of Central Florida Part of the Psychology Commons Find similar works at: https://stars.library.ucf.edu/etd University of Central Florida Libraries http://library.ucf.edu This Doctoral Dissertation (Open Access) is brought to you for free and open access by STARS. It has been accepted for inclusion in Electronic Theses and Dissertations, 2004-2019 by an authorized administrator of STARS. For more information, please contact [email protected]. STARS Citation Wiese, Christopher, "Assessment Center Structure and Construct Validity: A New Hope" (2015). Electronic Theses and Dissertations, 2004-2019. 733. https://stars.library.ucf.edu/etd/733 ASSESSMENT CENTER STRUCTURE AND CONSTRUCT VALIDITY: A NEW HOPE by CHRISTOPHER W. WIESE B.S., University of Central Florida, 2008 A dissertation submitted in partial fulfillment of the requirements for the degree of Doctor of Philosophy in the Department of Psychology in the College of Sciences at the University of Central Florida Orlando, Florida Summer Term 2015 Major Professor: Kimberly Smith-Jentsch © 2015 Christopher Wiese ii ABSTRACT Assessment Centers (ACs) are a fantastic method to measure behavioral indicators of job performance in multiple diverse scenarios. Based upon a thorough job analysis, ACs have traditionally demonstrated very strong content and criterion-related validity. However, researchers have been puzzled for over three decades with the lack of evidence concerning construct validity. ACs are designed to measure critical job dimensions throughout multiple situational exercises. However, research has consistently revealed that different behavioral ratings within these scenarios are more strongly related to one another (exercise effects) than the same dimension rating across scenarios (dimension effects). -

Education Quarterly Reviews

Education Quarterly Reviews. Allanson, Patricia E., and Notar, Charles E. (2020), Statistics as Measurement: 4 Scales/Levels of Measurement. In: Education Quarterly Reviews, Vol.3, No.3, 375-385. ISSN 2621-5799 DOI: 10.31014/aior.1993.03.03.146 The online version of this article can be found at: https://www.asianinstituteofresearch.org/ Published by: The Asian Institute of Research. The Education Quarterly Reviews is an Open Access publication. It may be read, copied, and distributed free of charge according to the conditions of the Creative Commons Attribution 4.0 International license. The Asian Institute of Research Education Quarterly Reviews is a peer-reviewed International Journal. The journal covers scholarly articles in the fields of education, linguistics, literature, educational theory, research, and methodologies, curriculum, elementary and secondary education, higher education, foreign language education, teaching and learning, teacher education, education of special groups, and other fields of study related to education. As the journal is Open Access, it ensures high visibility and the increase of citations for all research articles published. The Education Quarterly Reviews aims to facilitate scholarly work on recent theoretical and practical aspects of education. The Asian Institute of Research Education Quarterly Reviews Vol.3, No.3, 2020: 375-385 ISSN 2621-5799 Copyright © The Author(s). All Rights Reserved DOI: 10.31014/aior.1993.03.03.146 Statistics as Measurement: 4 Scales/Levels of Measurement. Patricia E. Allanson¹, Charles E. Notar². ¹ Liberty University ² Jacksonville State University. Email: [email protected] (Emeritus) Abstract: This article discusses the basics of the “4 scales of Measurement” and how they are applicable to research or everyday tools of life.

Psychology, Meaning Making and the Study of Worldviews: Beyond Religion and Non-Religion

Psychology, Meaning Making and the Study of Worldviews: Beyond Religion and Non-Religion Ann Taves, University of California, Santa Barbara Egil Asprem, Stockholm University Elliott Ihm, University of California, Santa Barbara Abstract: To get beyond the solely negative identities signaled by atheism and agnosticism, we have to conceptualize an object of study that includes religions and non-religions. We advocate a shift from “religions” to “worldviews” and define worldviews in terms of the human ability to ask and reflect on “big questions” ([BQs], e.g., what exists? how should we live?). From a worldviews perspective, atheism, agnosticism, and theism are competing claims about one feature of reality and can be combined with various answers to the BQs to generate a wide range of worldviews. To lay a foundation for the multidisciplinary study of worldviews that includes psychology and other sciences, we ground them in humans’ evolved world-making capacities. Conceptualizing worldviews in this way allows us to identify, refine, and connect concepts that are appropriate to different levels of analysis. We argue that the language of enacted and articulated worldviews (for humans) and worldmaking and ways of life (for humans and other animals) is appropriate at the level of persons or organisms and the language of sense making, schemas, and meaning frameworks is appropriate at the cognitive level (for humans and other animals). Viewing the meaning making processes that enable humans to generate worldviews from an evolutionary perspective allows us to raise new questions for psychology with particular relevance for the study of nonreligious worldviews. Keywords: worldviews, meaning making, religion, nonreligion Acknowledgments: The authors would like to thank Raymond F.

Construct Validity and Reliability of the Work Environment Assessment Instrument WE-10

International Journal of Environmental Research and Public Health Article Construct Validity and Reliability of the Work Environment Assessment Instrument WE-10 Rudy de Barros Ahrens 1,*, Luciana da Silva Lirani 2 and Antonio Carlos de Francisco 3 1 Department of Business, Faculty Sagrada Família (FASF), Ponta Grossa, PR 84010-760, Brazil 2 Department of Health Sciences Center, State University Northern of Paraná (UENP), Jacarezinho, PR 86400-000, Brazil; [email protected] 3 Department of Industrial Engineering and Post-Graduation in Production Engineering, Federal University of Technology–Paraná (UTFPR), Ponta Grossa, PR 84017-220, Brazil; [email protected] * Correspondence: [email protected] Received: 1 September 2020; Accepted: 29 September 2020; Published: 9 October 2020 Abstract: The purpose of this study was to validate the construct and reliability of an instrument to assess the work environment as a single tool based on quality of life (QL), quality of work life (QWL), and organizational climate (OC). The methodology tested the construct validity through Exploratory Factor Analysis (EFA) and reliability through Cronbach’s alpha. The EFA returned a Kaiser–Meyer–Olkin (KMO) value of 0.917, which demonstrated that the data were adequate for the factor analysis, and a significant Bartlett’s test of sphericity (χ² = 7465.349; Df = 1225; p ≤ 0.000). After the EFA, the varimax rotation method was employed for a factor through commonality analysis, reducing the 14 initial factors to 10. Only question 30 presented commonality lower than 0.5, and the other questions returned values higher than 0.5 in the commonality analysis. Regarding the reliability of the instrument, all of the questions presented reliability as the values varied between 0.953 and 0.956.

Graphologist

Confessions of a (Former) Graphologist. A first-hand report of one man's entry into the field of graphology, his later deep involvement with the practice, and his subsequent disillusionment. Along the way he encountered a continuing lack of scientific validation and a refusal of graphologists to face graphology's validity problems. ROBERT J. TRIPICIAN. SKEPTICAL INQUIRER, January/February 2000. Around 1972 I happened upon a magazine article describing handwriting analysis. The article got my immediate attention as it showed a sample of handwriting that closely resembled that of a co-worker. The personality factors described in the analysis of this sample appeared to match the co-worker's personality very closely. This piqued my interest. After retirement I pursued the study and practice of graphology rather heavily, even becoming a Certified Professional Graphologist. I soon found that while texts on the subject are in general agreement regarding the meaning and interpretation of individual handwriting features, none of them describe an orderly approach to developing a complete profile. Indeed, developing profiles is described as an esoteric and intuitive process. Furthermore, none of the texts describe an orderly method of recording notes. I subsequently developed a computer database to automate the note-taking process and eliminate the need for memoriz- [...] with a sharp point denotes a sharp temper. d. A lower case p with a spike at the top (see figure 4) is interpreted to mean that the writer is argumentative. However, as in the case of the capital letter I, this formation was taught in both the Mills and Palmer Method systems as the standard.

The 100 Most Eminent Psychologists of the 20th Century

Review of General Psychology, 2002, Vol. 6, No. 2, 139–152. Copyright 2002 by the Educational Publishing Foundation. 1089-2680/02/$5.00 DOI: 10.1037//1089-2680.6.2.139. The 100 Most Eminent Psychologists of the 20th Century. Steven J. Haggbloom, Western Kentucky University; Renee Warnick, Jason E. Warnick, Vinessa K. Jones, Gary L. Yarbrough, Tenea M. Russell, Chris M. Borecky, Reagan McGahhey, John L. Powell III, Jamie Beavers, and Emmanuelle Monte, Arkansas State University. A rank-ordered list was constructed that reports the first 99 of the 100 most eminent psychologists of the 20th century. Eminence was measured by scores on 3 quantitative variables and 3 qualitative variables. The quantitative variables were journal citation frequency, introductory psychology textbook citation frequency, and survey response frequency. The qualitative variables were National Academy of Sciences membership, election as American Psychological Association (APA) president or receipt of the APA Distinguished Scientific Contributions Award, and surname used as an eponym. The qualitative variables were quantified and combined with the other 3 quantitative variables to produce a composite score that was then used to construct a rank-ordered list of the most eminent psychologists of the 20th century. The discipline of psychology underwent a remarkable transformation during the 20th century, a transformation that included a shift away from the European-influenced philosophical psychology of the late 19th century to the empirical, research-based, American-dominated psychology of today (Simonton, 1992). [...] eve of the 21st century, the APA Monitor (“A Century of Psychology,” 1999) published brief biographical sketches of some of the more eminent contributors to that transformation. Milestones such as a new year, a new decade, or, in this case, a new century seem inevitably to [...]

Cognitive Psychology

COGNITIVE PSYCHOLOGY PSYCH 126. Acknowledgements: College of the Canyons would like to extend appreciation to the following people and organizations for allowing this textbook to be created: California Community Colleges Chancellor’s Office; Chancellor Diane Van Hook; Santa Clarita Community College District; College of the Canyons Distance Learning Office. In providing content for this textbook, the following professionals were invaluable: Mehgan Andrade, who was the major contributor and compiler of this work and Neil Walker, without whose help the book could not have been completed. Special Thank You to Trudi Radtke for editing, formatting, readability, and aesthetics. The contents of this textbook were developed under the Title V grant from the Department of Education (Award #P031S140092). However, those contents do not necessarily represent the policy of the Department of Education, and you should not assume endorsement by the Federal Government. Unless otherwise noted, the content in this textbook is licensed under CC BY 4.0. Table of Contents: Psychology 126; Chapter 1 - History of Cognitive Psychology; Definition of Cognitive Psychology

Affective Image Content Analysis: a Comprehensive Survey∗

Proceedings of the Twenty-Seventh International Joint Conference on Artificial Intelligence (IJCAI-18). Affective Image Content Analysis: A Comprehensive Survey∗. Sicheng Zhao†, Guiguang Ding‡, Qingming Huang♯, Tat-Seng Chua§, Björn W. Schuller♦ and Kurt Keutzer†. †Department of Electrical Engineering and Computer Sciences, University of California, Berkeley, USA; ‡School of Software, Tsinghua University, China; ♯School of Computer and Control Engineering, University of Chinese Academy of Sciences, China; §School of Computing, National University of Singapore, Singapore; ♦Department of Computing, Imperial College London, UK. Abstract: Images can convey rich semantics and induce strong emotions in viewers. Recently, with the explosive growth of visual data, extensive research efforts have been dedicated to affective image content analysis (AICA). In this paper, we review the state-of-the-art methods comprehensively with respect to two main challenges – affective gap and perception subjectivity. We begin with an introduction to the key emotion representation models that have been widely employed in AICA. [...] Specifically, the task of AICA is often composed of three steps: human annotation, visual feature extraction and learning of mapping between visual features and perceived emotions [Zhao et al., 2017a]. One main challenge for AICA is the affective gap, which can be defined as “the lack of coincidence between the features and the expected affective state in which the user is brought by perceiving the signal” [Hanjalic, 2006]. Recently, various hand-crafted or learning-based features have been designed to bridge this gap. Current AICA methods mainly assign an image with the dominant (average) emotion category (DEC) with the assumption that different [...]

Psychological Tests Commonly Used in the Assessment of Chronic Pain *

Colorado Division of Workers’ Compensation. COMPREHENSIVE PSYCHOLOGICAL TESTING. Psychological Tests Commonly Used in the Assessment of Chronic Pain *
Table columns: TEST; TEST CHARACTERISTICS; STRENGTHS AND WEAKNESSES; LENGTH, SCORING OPTIONS & TEST TAKING TIME.
Comprehensive Inventories For Medical Patients
TEST: BHI™ 2 (Battery for Health Improvement – 2nd edition). Pearson Assessments, www.pearsonassessments.com. Standardization: S. Scientific Review: JBG. Intended for: M.
TEST CHARACTERISTICS: What it Measures: Depression, anxiety and hostility; violent and suicidal ideation; borderline, emotional dependency, chronic maladjustment, substance abuse, history of abuse, perseverance, conflicts with employer, family and physician, pain preoccupation, somatization, disability perceptions and others. Uses: Useful for identifying affective, characterological, psychophysiological and social factors affecting pain and disability reports. Also useful for assessing patients [...]
STRENGTHS AND WEAKNESSES: Strengths: Well-developed theoretical basis tied to a paradigm of delayed recovery in medical patients, and to assessing primary (“red flag”) and secondary (“yellow flag”) risk factors. Has nationally normed 0-10 pain profiling. Two norms groups are available, based on national rehabilitation patient and community samples, both of which are stratified to match US census data. English and Spanish versions available. Standardized audio tape administration for [...]
LENGTH, SCORING OPTIONS & TEST TAKING TIME: 217 items, 18 scales including 3 validity measures, 40 content-based subscales, 25 critical items, 25-35 minutes, computerized scoring and report. 6th grade reading level.

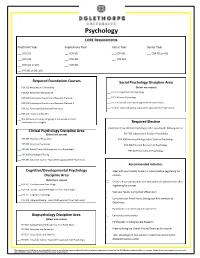

Psychology CORE Requirements

Freshman Year: ___ COR 101; ___ COR 102; ___ COR 103 or 104; ___ FYS 101 or OGL 101
Sophomore Year: ___ COR 201; ___ COR 202; ___ COR 203
Junior Year: ___ COR 301; ___ COR 302
Senior Year: ___ COR 401 or 402
Required Foundation Courses: ___ PSY 101 Introduction to Psychology; ___ PSY 209 Behavioral Neuroscience; ___ PSY 320 Psychological Statistics and Research Methods I; ___ PSY 321 Psychological Statistics and Research Methods II; ___ PSY 322 Advanced Experimental Psychology; ___ PSY 405 History and Systems; ___ One Semester of Foreign Language at the second semester elementary level or higher
Required Elective: Completion of any additional Psychology elective excluding the following courses: PSY 200 Independent Study in Psychology; PSY 400 Advanced Independent Study in Psychology; PSY 406 Directed Research in Psychology; PSY 407 Internship in Psychology
Social Psychology Discipline Area (Select one course): ___ PSY 202 Organizational Psychology; ___ PSY 204 Social Psychology; ___ PSY 290 Special Topics (With approval from Psych dept.); ___ PSY 490 Advanced Special Topics (With approval from Psych dept.)
Clinical Psychology Discipline Area (Select one course): ___ PSY 205 Theories of Personality; ___ PSY 206 Abnormal Psychology; ___ PSY 290 Special Topics (With approval from Psych dept.); ___ PSY 303 Psychological Testing; ___ PSY 490 Advanced Special Topics (With approval from Psych dept.)
Cognitive/Developmental Psychology Discipline Area [...]
Recommended Activities: Meet with your Faculty Academic Advisor before registering for courses