Sketches for Lp-Sampling Without Replacement

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Phillip B. Gibbons Curriculum Vitae

Phillip B. Gibbons Curriculum Vitae [email protected] http://cs.cmu.edu/~gibbons/ July 2020 Research Interests Research areas include big data, parallel computing, databases, cloud computing, sensor networks, distributed systems, and computer architecture. My publications span theory and systems, across a broad range of computer science and engineering (e.g., conference papers in APoCS, ATC, ESA, EuroSys, HPCA, ICML, IPDPS, ISCA, MICRO, NeurIPS, NSDI, OSDI, PACT, SoCC, SODA, SOSP, SPAA and VLDB since 2015). Education • University of California at Berkeley, Berkeley, California, 1984{1989. Ph.D. in Computer Science. Dissertation advisor: Richard M. Karp. • Dartmouth College, Hanover, New Hampshire, 1979{1983. B.A. in Mathematics. Graduated summa cum laude and Phi Beta Kappa. Professional Experience • Carnegie Mellon University, Pittsburgh, Pennsylvania. Professor, Computer Science Department, 2015{present. Professor, Electrical and Computer Engineering Department, 2015{present. Principal Investigator (PI or co-PI) for the following research projects: { Prescriptive Memory: Razing the semantic wall between applications and computer systems with heterogeneous compute and memories. { Asymmetric Memory: Write-efficient algorithms and systems, for settings (such as emerging non- volatile memories) where writes are significantly more costly than reads. { Big Learning Systems: Mapping out and exploring the space of large-scale machine learning from a systems' perspective. Recent focus on geo-distributed learning over non-IID data. Adjunct Professor, Computer Science Department, 2003{2015. Adjunct Associate Professor, Computer Science Department, 2000{2003. Visiting Professor, Computer Science Department, 2000. • Intel Labs Pittsburgh, Pittsburgh, Pennsylvania. Principal Research Scientist, 2001{2015. Principal Investigator for the Intel Science and Technology Center for Cloud Computing { A $11.5M research partnership with Carnegie Mellon, Georgia Tech, Princeton, UC Berkeley, and U. -

SIGOPS Annual Report 2011

SIGOPS Annual Report 2011 Fiscal Year July 2010-June 2011 Submitted by Doug Terry, SIGOPS Chair Overview SIGOPS is a vibrant community of people with interests in “operating systems” in the broadest sense, including topics such as distributed computing, storage systems, security, concurrency, middleware, mobility, virtualization, networking, cloud computing, datacenter software, and Internet services. We sponsor a number of top conferences, provide travel grants to students, present yearly awards, disseminate information to members electronically, and collaborate with other SIGs on important programs for computing professionals. Notable activities from the past year include: Elections were held for new officers, and Jeanna Matthews was elected as the new SIGOPS Chair, George Candea as Vice Chair, and Dilma da Silva as Treasurer. They will serve two-year terms from July 1, 2011 through June 30, 2013. The past officers (Doug Terry as Chair, Frank Bellosa as Vice Chair and Jeanna Matthews as Treasurer) completed their 4 years of service on June 30. Planning for the next ACM Symposium on Operating Systems (SOSP), which is scheduled for October 2011 in Cascais, Portugal, has been largely completed by Ted Wobber, the General Chair, and Peter Druschel, the Program Chair. The Second ACM Symposium on Cloud Computing (SOCC), which is co-sponsored between SIGOPS and SIGMOD, is being co-located with SOSP in Portugal. The first SIGOPS Asia-Pacific Workshop on Systems (APSys) was held on August 30, 2010 in New Delhi, India, immediately before the SIGCOMM 2010 conference. And the second instance of this workshop is being held in Shanghai, China, in July 2011. ACM released the first version of the Cloud Computing Tech Pack, which was produced by Doug Terry, the SIGOPS Chair. -

Curriculum Vitae

Massachusetts Institute of Technology School of Engineering Faculty Personnel Record Date: April 1, 2020 Full Name: Charles E. Leiserson Department: Electrical Engineering and Computer Science 1. Date of Birth November 10, 1953 2. Citizenship U.S.A. 3. Education School Degree Date Yale University B. S. (cum laude) May 1975 Carnegie-Mellon University Ph.D. Dec. 1981 4. Title of Thesis for Most Advanced Degree Area-Efficient VLSI Computation 5. Principal Fields of Interest Analysis of algorithms Caching Compilers and runtime systems Computer chess Computer-aided design Computer network architecture Digital hardware and computing machinery Distance education and interaction Fast artificial intelligence Leadership skills for engineering and science faculty Multicore computing Parallel algorithms, architectures, and languages Parallel and distributed computing Performance engineering Scalable computing systems Software performance engineering Supercomputing Theoretical computer science MIT School of Engineering Faculty Personnel Record — Charles E. Leiserson 2 6. Non-MIT Experience Position Date Founder, Chairman of the Board, and Chief Technology Officer, Cilk Arts, 2006 – 2009 Burlington, Massachusetts Director of System Architecture, Akamai Technologies, Cambridge, 1999 – 2001 Massachusetts Shaw Visiting Professor, National University of Singapore, Republic of 1995 – 1996 Singapore Network Architect for Connection Machine Model CM-5 Supercomputer, 1989 – 1990 Thinking Machines Programmer, Computervision Corporation, Bedford, Massachusetts 1975 -

Curriculum Vitae Bradley E. Richards December 2010

Curriculum Vitae Bradley E. Richards December 2010 Office: Home: Department of Mathematics and Computer Science 13446 108th Ave SW University of Puget Sound Vashon, WA 98070 1500 N. Warner St. (206) 567-5308 Tacoma, WA 98416 (206) 234-3560 (cell) (253) 879{3579 (253) 879{3352 (fax) [email protected] Degrees Ph.D. in Computer Science, August 1996 and M.S. in Computer Science, May 1992 University of Wisconsin, Madison, WI Advisor: James R. Larus Thesis: \Memory Systems for Parallel Programming" M.Sc. in Computer Science, April 1990 University of Victoria, Victoria B.C., Canada Advisor: Maarten van Emden Thesis: \Contributions to Functional Programming in Logic" B.A. Degrees, magna cum laude, in Computer Science and Physics, May 1988 Gustavus Adolphus College, St. Peter, MN Advisor: Karl Knight Positions Held University of Puget Sound, Tacoma, Washington Professor (7/2010{present) Associate Professor (7/2005{6/2010) Vassar College, Poughkeepsie, New York Associate Professor, tenured (6/2004{8/2005) Assistant Professor (9/1997{6/2004) Visiting Assistant Professor (9/1996{8/1997) University of Wisconsin, Madison, Wisconsin Graduate Research Assistant (6/1993{8/1996) Graduate Teaching Assistant (9/1990{5/1993) University of Victoria, Victoria, B.C., Canada Graduate Research Assistant (9/1988{4/1990) Graduate Teaching Assistant (9/1988{4/1990) Grants and Awards Co-PI, NSF Computing Research Infrastructure (CRI) grant #0734761, titled \Workshop for investigating the issues involved in implementing a data repository for empirical CS education data." Total grant amount $19,070, awarded 7/2007. With PI Kathryn Sanders, Associate Professor of Mathematics and Computer Science, Rhode Island College. -

Curriculum Vitae

Vita Benjamin G. Zorn June 2021 Partner Researcher Research in Software Engineering (RiSE) Group URL: http://aka.ms/zorn Microsoft Research, Redmond Lab Telephone: (425) 703-6290, Cell: on-request Microsoft Corporation Fax: (425) 936-7329 One Microsoft Way, Redmond WA 98052 Email: [email protected], [email protected] Research Interests Programming language design and implementation, program runtime systems and memory management, performance evaluation, compilers, error recovery, software fault tolerance, security, malware detection, software development, computer architecture Education • Ph.D. (Computer Science) December 1989, University of California, Berkeley. Thesis: Comparative Performance Evaluation of Garbage Collection Algorithms. Advisor: Paul N. Hilfinger. • M.S. (Computer Science) 1984, University of California, Berkeley. Master's Thesis: Experiences with Ada Code Generation. Advisor: Paul N. Hilfinger. • B.S. (Mathematics/Computer Science) summa cum laude 1982. Rensselaer Polytechnic Institute. Professional Experience • May 2019 – present. Partner Researcher, Research in Software Engineering (RiSE) group, Microsoft Research, Redmond Lab. • January 2012 – May 2019, Research Manager and Principal Researcher, Research in Software Engineering (RiSE) group, Microsoft Research, Redmond. I co-managed the Research in Software Engineering (RiSE) group, a group of almost 30 researchers and developers working on programming languages and software engineering research. • January 2006 – 2012, Principal Researcher, Microsoft Research, Redmond. • August 1998 – January 2006, Senior Researcher, Microsoft Research. • June 1999 – present, Adjoint Associate Professor, University of Colorado at Boulder. • August 1996 – Junes 1999, Associate Professor, University of Colorado at Boulder. • January 1990 – July 1996, Assistant Professor, University of Colorado at Boulder. • May 1983 – December 1989, Research Assistant, University of California at Berkeley. • December 1985 – December 1989, Course Instructor, Franz Incorporated. -

FCRC 2011 June 4 - 11, San Jose, CA TIMELINE SCHEDULE

FCRC 2011 June 4 - 11, San Jose, CA TIMELINE SCHEDULE Sponsored by Corporate Support Provided by Gold Silver CONFERENCE/WORKSHOP/EVENT ACRONYMS & DATES Dates Full Name Dates Full Name 3DAPAS 8 A Workshop on Dynamic Distrib. Data-Intensive Applications, Programming Abstractions, & Systs (HPDC) IWQoS 6--7 Int. Workshop on Quality of Service (ACM SIGMETRICS and IEEE Communications Society) A4MMC 4 Applications fo Multi and Many Core Processors: Analysis, Implementation, and Performance (ISCA) LSAP 8 P Workshop on Large-Scale System and Application Performance (HPDC) AdAuct 5 Ad Auction Workshop (EC) MAMA 8 Workshop on Mathematical Performance Modeling and Analysis (METRICS) AMAS-BT 4 P Workship on Architectural and Microarchitectural Support for Binary Translation (ISCA) METRICS 7--11 ACM SIGMETRICS International Conference on Measurement and Modeling of Computer Systems BMD 5 Workshop on Bayesian Mechanism Design (EC) MoBS 5 A Workshop on Modeling, Benchmarking, and Simulation (ISCA) CARD 5 P Workshop on Computer Architecture Research Directions (ISCA) MRA 8 Int. Workshop on MapReduce and its Applications (HPDC) CBP 4 P JILP Workshop on Computer Architecture Competitions: Championship Branch Prediction (ISCA) MSPC 5 Memory Systems Performance and Correctness (PLDI) Complex 8--10 IEEE Conference on Computational Complexity (IEEE TCMFC) NDCA 5 A New Directions in Computer Architecture (ISCA) CRA-W 4--5 CRA-W Career Mentoring Workshop NetEcon 6 Workshop on the Economics of Networks, Systems, and Computation (EC) DIDC 8 P Int. Workshop on Data-Intensive Distributed Computing (HPDC) PLAS 5 Programming Languages and Analysis for Security Workshop (PLDI) EAMA 4 Workshop on Emerging Applications and Manycore Architectures (ISCA) PLDI 4--8 ACM SIGPLAN Conference on Programming Language Design and Implementation EC 5--9 ACM Conference on Electronic Commerce (ACM SIGECOM) PODC 6--8 ACM SIGACT-SIGOPT Symp. -

Ms. Donna Cappo Director of SIG Services ACM October 4, 2017

Ms. Donna Cappo Director of SIG Services ACM October 4, 2017 Dear Ms. Cappo, We are writing to you to request that the ACM SIG Governing Board consider the formation of a new ACM Emerging Interest Group on Energy (tentatively named EIG-ENERGY). The generation, distribution, and consumption of energy lie at the foundations of modern civi- lization. Traditional energy systems are centralized and carbon-intensive, with highly-controllable generation, minimal energy storage, infrequent monitoring, and wasteful consumption by energy- unaware end users. With the global push towards societal de-carbonization, we are in the midst of a revolutionary transformation, where energy systems have already incorporated tens of millions of decentralized, stochastic, carbon-free renewable-energy sources and soon will have vastly more storage. However, due to the intermittent and uncontrollable nature of power generation from re- newable sources, there will be a need for demand flexibility, which can only be achieved by an intelligent use of information and communication technologies, as exemplified below: • Low-cost sensor technologies, embedded systems hardware, and communications using the Internet of Things are critical to allow large-scale monitoring, control, and actuation of en- ergy systems • Data analytics and computational algorithms are necessary for the design of efficient energy systems • Future energy systems are likely to have an architecture that resembles the Internet: large- scale, loosely-coupled, distributed, and heterogeneous. Thus, there are important lessons to be learned from the design of the Internet. These observations motivate the emerging discipline of Energy Informatics, which studies the design and efficient operation of future energy systems. -

Biography Five Related and Significant Publications

GUY BLELLOCH Professor Computer Science Department Carnegie Mellon University Pittsburgh, PA 15213 [email protected], http://www.cs.cmu.edu/~guyb Biography Guy E. Blelloch received his B.S. and B.A. from Swarthmore College in 1983, and his M.S. and PhD from MIT in 1986, and 1988, respectively. Since then he has been on the faculty at Carnegie Mellon University, where he is now an Associate Professor. During the academic year 1997-1998 he was a visiting professor at U.C. Berkeley. He held the Finmeccanica Faculty Chair from 1991–1995 and received an NSF Young Investigator Award in 1992. He has been program chair for the ACM Symposium on Parallel Algorithms and Architectures, program co-chair for the IEEE International Parallel Processing Symposium, is on the editorial board of JACM, and has served on a dozen or so program committees. His research interests are in parallel programming languages and parallel algorithms, and in the interaction of the two. He has developed the NESL programming language under an ARPA contract and an NSF NYI award. His work on parallel algorithms includes work on sorting, computational geometry, and several pointer-based algorithms, including algorithms for list-ranking, set-operations, and graph connectivity. Five Related and Significant Publications 1. Guy Blelloch, Jonathan Hardwick, Gary L. Miller, and Dafna Talmor. Design and Implementation of a Practical Parallel Delaunay Algorithm. Algorithmica, 24(3/4), pp. 243–269, 1999. 2. Guy Blelloch, Phil Gibbons and Yossi Matias. Efficient Scheduling for Languages with Fine-Grained Parallelism. Journal of the ACM, 46(2), pp. -

Appendix C SURPLUS/LOSS: $291.34 SIGCSE 0.00 Sigada 100.00 SIGAPP 0.00 SIGPLAN 0.00

Starts Ends Conference Actual SIGs and their % SIGACCESS 21-Oct-13 23-Oct-13 ASSETS '13: The 15th International ACM SIGACCESS ATTENDANCE: 155 SIGACCESS 100.00 Conference on Computers and Accessibility INCOME: $74,697.30 EXPENSE: $64,981.11 SURPLUS/LOSS: $9,716.19 SIGACT 12-Jan-14 14-Jan-14 ITCS'14 : Innovations in Theoretical Computer Science ATTENDANCE: 76 SIGACT 100.00 INCOME: $19,210.00 EXPENSE: $21,744.07 SURPLUS/LOSS: ($2,534.07) 22-Jul-13 24-Jul-13 PODC '13: ACM Symposium on Principles ATTENDANCE: 98 SIGOPS 50.00 of Distributed Computing INCOME: $62,310.50 SIGACT 50.00 EXPENSE: $56,139.24 SURPLUS/LOSS: $6,171.26 23-Jul-13 25-Jul-13 SPAA '13: 25th ACM Symposium on Parallelism in ATTENDANCE: 45 SIGACT 50.00 Algorithms and Architectures INCOME: $45,665.50 SIGARCH 50.00 EXPENSE: $39,586.18 SURPLUS/LOSS: $6,079.32 23-Jun-14 25-Jun-14 SPAA '14: 26th ACM Symposium on Parallelism in ATTENDANCE: 73 SIGARCH 50.00 Algorithms and Architectures INCOME: $36,107.35 SIGACT 50.00 EXPENSE: $22,536.04 SURPLUS/LOSS: $13,571.31 31-May-14 3-Jun-14 STOC '14: Symposium on Theory of Computing ATTENDANCE: SIGACT 100.00 INCOME: $0.00 EXPENSE: $0.00 SURPLUS/LOSS: $0.00 SIGAda 10-Nov-13 14-Nov-13 HILT 2013:High Integrity Language Technology ATTENDANCE: 60 SIGBED 0.00 ACM SIGAda Annual INCOME: $32,696.00 SIGCAS 0.00 EXPENSE: $32,404.66 SIGSOFT 0.00 Appendix C SURPLUS/LOSS: $291.34 SIGCSE 0.00 SIGAda 100.00 SIGAPP 0.00 SIGPLAN 0.00 SIGAI 11-Nov-13 15-Nov-13 ASE '13: ACM/IEEE International Conference on ATTENDANCE: 195 SIGAI 25.00 Automated Software Engineering -

Acm Digital Library 매뉴얼

ACM DIGITAL LIBRARY 매뉴얼 신원데이터넷 [email protected] Confidential 1 ⓒ 2020 Shinwon Datanet Co., Ltd TABLE OF CONTENTS 1. 출판사 소개 2. 수록내용 3. The ACM Digital Library 4. The Guide to Computing Literature Confidential 2 ⓒ 2020 Shinwon Datanet Co., Ltd 1. 출판사 소개 Association for Computing Machinery (ACM) - 1947년 설립된 미국컴퓨터협회 ACM(http://www.acm.org)은 컴퓨터 및 IT 관련 모든 분야에 대한 최신 정보를 제공하는 협회로, 현재 전 세계 190 여 개 국 150,000 여 명 이상의 회원 보유 - ACM 회원들은 단순히 기술보고서나 논문을 게재하는 활동 이외에도, 연구 시 발생 되었던 문제들과 해결 방법, 그리고 수록된 기술 보고서나 논문에 대한 Review를 제시함으로써 컴퓨터 관련 분야의 가장 권위 있는 Community를 형성하고 있음 Confidential 3 ⓒ 2020 Shinwon Datanet Co., Ltd 1. 출판사 소개 SIG(Special Interest Groups) • ACM 내 소 주제분야 관련 분과회 • 전세계 170개의 컨퍼런스, 워크샵, 심포지엄을 주관 • 컴퓨터 IT 관련 분야의 37개 분과에서 관련 연구 및 정보 교환 SIGACCESS Accessibility and Computing SIGKDD Knowledge Discovery in Data SIGACT Algorithms & Computation Theory SIGLOG Logic and Computation SIGAI Artificial Intelligence SIGMETRICS Measurement and Evaluation SIGAPP Applied Computing SIGMICRO Microarchitecture SIGARCH Computer Architecture SIGMIS Management Information Systems SIGAda Ada Programming Language SIGMM Multimedia Systems SIGBED Embedded Systems SIGMOBILE Mobility of Systems, Users, Data & Computing SIGBio Bioinformatics, Computational Biology SIGMOD Management of Data SIGCAS Computers and Society SIGOPS Operating Systems SIGCHI Computer SIGPLAN Programming Languages SIGCOMM Data Communication SIGSAC Security, Audit and Control SIGCSE Computer Science Education SIGSAM Symbolic & Algebraic Manipulation SIGDA Design Automation SIGSIM Simulation SIGDOC Design of Communication SIGSOFT Software Engineering SIGEVO Genetic and Evolutionary Computation SIGSPATIAL Spatial Information SIGGRAPH Computer Graphics SIGUCCS University & College Computing Services SIGHPC High Performance Computing SIGWEB Hypertext, Hypermedia and Web SIGIR Information Retrieval SIGecom Electronic Commerce SIGITE Information Technology Education Confidential ⓒ 2020 Shinwon Datanet Co., Ltd 4 2. -

Truly Perfect Samplers for Data Streams and Sliding Windows

Truly Perfect Samplers for Data Streams and Sliding Windows Rajesh Jayaram∗ David P. Woodruff† Samson Zhou‡ August 30, 2021 Abstract In the G-sampling problem, the goal is to output an index i of a vector f Rn, such that for all coordinates j [n], ∈ ∈ G(f ) Pr[i = j]=(1 ǫ) j + γ, ± k∈[n] G(fk) P where G : R R≥0 is some non-negative function. If ǫ = 0 and γ = 1/ poly(n), the sampler is called perfect→. In the data stream model, f is defined implicitly by a sequence of updates to its coordinates, and the goal is to design such a sampler in small space. Jayaram and p Woodruff (FOCS 2018) gave the first perfect Lp samplers in turnstile streams, where G(x)= x , using polylog(n) space for p (0, 2]. However, to date all known sampling algorithms are| not| truly perfect, since their output∈ distribution is only point-wise γ =1/ poly(n) close to the true distribution. This small error can be significant when samplers are run many times on successive portions of a stream, and leak potentially sensitive information about the data stream. In this work, we initiate the study of truly perfect samplers, with ǫ = γ = 0, and compre- hensively investigate their complexity in the data stream and sliding window models. We begin by showing that sublinear space truly perfect sampling is impossible in the turnstile model, by 1 proving a lower bound of Ω min n, log γ for any G-sampler with point-wise error γ from the true distribution. -

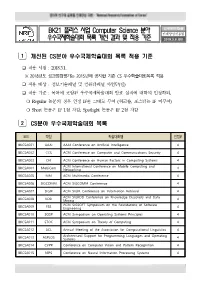

BK21 플러스 사업 Computer Science 분야 우수국제학술대회 목록 개선

BK21 플러스 사업 Computer Science 분야 인재양성지원실 인재양성진흥팀 우수국제학술대회 목록 개선 결과 및 적용 기준 2018.3.6.(화) 1 개선된 CS분야 우수국제학술대회 목록 적용 기준 ❑ 적용 시점 : 2018.3.1. ※ 2018년도 성과점검평가는 2015년에 공지한 기존 CS 우수학술대회목록 적용 ❑ 적용 대상 : 정보기술패널 및 컴퓨터패널 사업단(팀) ❑ 적용 기준 : 목록에 포함된 우수국제학술대회 발표 실적에 대하여 인정하되, ❍ Regular 논문의 경우 인정 IF를 그대로 부여 (워크숍, 포스터는 IF 미부여) ❍ Short 논문은 IF 1점 차감, Spotlight 논문은 IF 2점 차감 2 CS분야 우수국제학술대회 목록 코드 약칭 학술대회명 인정IF BKCSA001 AAAI AAAI Conference on Artificial Intelligence 4 BKCSA002 CCS ACM Conference on Computer and Communications Security 4 BKCSA003 CHI ACM Conference on Human Factors in Computing Systems 4 ACM International Conference on Mobile Computing and BKCSA004 MobiCom Networking 4 BKCSA005 MM ACM Multimedia Conference 4 BKCSA006 SIGCOMM ACM SIGCOMM Conference 4 BKCSA007 SIGIR ACM SIGIR Conference on Information Retrieval 4 ACM SIGKDD Conference on Knowledge Discovery and Data BKCSA008 KDD 4 Mining ACM SIGSOFT Symposium on the Foundations of Software BKCSA009 FSE Engineering 4 BKCSA010 SOSP ACM Symposium on Operating Systems Principles 4 BKCSA011 STOC ACM Symposium on Theory of Computing 4 BKCSA012 ACL Annual Meeting of the Association for Computational Linguistics 4 Architectural Support for Programming Languages and Operating BKCSA013 ASPLOS 4 Systems BKCSA014 CVPR Conference on Computer Vision and Pattern Recognition 4 BKCSA015 NIPS Conference on Neural Information Processing Systems 4 1 코드 약칭 학술대회명 인정IF BKCSA016 OOPSLA Conference on Object-Oriented Programming, System, Languages, 4 and Applications BKCSA017 INFOCOM IEEE Conference on Computer Communications