Constrained Information-Theoretic Tripartite Graph Clustering To

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

8 Highly Effective Habits That Helped Make Bill Gates the Richest Man on Earth

8 Highly Effective Habits That Helped Make Bill Gates the Richest Man on Earth Adopting these habits may not make you a billionaire, but it will make you more effective and more successful. By Minda Zetlin, Co-author of 'The Geek Gap' How did Bill Gates get to be the richest person in the world, with a net worth around $80 billion? Being in the right place with the right product at the dawn of the personal computer era certainly had a lot to do with it. But so do some very smart approaches to work and life that all of us can follow. The personal finance site GOBankingRates recently published a list of 10 habits and experiences that make Gates so successful and helped him build his fortune. Here are my favorites. How many of them do you do? 1. He's always learning. Gates is famous for being a Harvard dropout, but the only reason he dropped out is that he and Paul Allen saw a window of opportunity to start their own software company. In fact, Gates loves learning and often sat in on classes he wasn't signed up for. That's something he had in common with Steve Jobs, who stuck around after dropping out of Reed College, sleeping on floors, so that he could take classes that interested him. 2. He reads everything. "Just about every kind of book interested him -- encyclopedias, science fiction, you name it," Gates's father said in an interview. Although his parents were thrilled that their son was such a bookworm, they had to establish a no-reading-at-the-dinner-table rule. -

'Ip Man' Martial Arts Superstar Yen Joins Polio Campaign

'Ip Man' martial arts superstar Yen joins polio campaign. 21 August 2013 | News | By BioSpectrum Bureau. Singapore: Internationally renowned Hong Kong action star Mr Donnie Yen has joined the growing roster of public figures and celebrities participating in Rotary's 'This Close' public awareness campaign for polio eradication. Mr Yen, an Asian superstar who gained world fame with Ip Man, Wu Xia and many other classics, will help Rotary achieve its goal of a polio-free world by raising his thumb and forefinger in the 'this close' gesture in the ad with the tagline "we're this close to ending polio". Mr Yen said, "I decided to become a Rotary ambassador for polio eradication because polio kills or paralyzes young children and Rotary is committed to ending this terrible disease worldwide. I also learned that the world has never been so close to eradication of polio since the mid 80's thanks to the vigorous efforts of Rotary International and its partners." Rotary has partnered with the Bill & Melinda Gates Foundation in the campaign for polio eradication. The Gates Foundation will match two-to-one, up to $35 million per year, every dollar that Rotary commits, in order to reduce the funding shortfall for polio eradication through 2018. It will help provide the world's governments with the $5.5 billion needed to finish the job and end polio forever. The Rotary awareness campaign also features public figures and celebrities including Bill Gates, co-chair of the Bill & Melinda Gates Foundation, Nobel Peace Prize laureate Archbishop Emeritus Desmond Tutu, action movie star Jackie Chan, boxing great Manny Pacquiao, Korean pop star Psy, golf legend Jack Nicklaus, conservationist Jane Goodall, premier violinist Itzhak Perlman, Grammy Award winners AR Rahman, Angelique Kidjo and Ziggy Marley, and peace advocate Queen Noor of Jordan.

Using Disc to Teach Adaptive Selling

SOMETHING YOU SAW AT CONFERENCE THAT YOU WILL USE
Dr. Cindy B. Rippé
USING DISC TO CATEGORIZE STUDENTS FOR TEACHING SUCCESS

DISC BEHAVIORAL STYLES: WHAT IS DISC
• Behavioral analysis based on Carl Jung
• Measures behavioral types like Myers-Briggs (MBTI)
• DISC easier and more practical
• Statistically validated behavioral classification tool (Extended DISC, 2013; Inscape Publishing, 1996; Renaud, Rutledge, & Shepherd, 2012)
https://discpersonalitytesting.com/free-disc-test/

D-STYLE: “In order to help you reach your goal of leading others, today I’m going to teach you DISC.”
D-STYLE examples: Simon Cowell, Coach Bobby Knight, Rosie O’Donnell, Venus Williams, Kanye West, Captain Kirk of “Star Trek”, Tyra Banks, Alec Baldwin, Bill O’Reilly, Madonna, Bernadette of “Big Bang Theory”, J.R. Ewing of Dallas

I-STYLE: “Today I have a fun and exciting tool to teach you how to connect with others.”
I-STYLE examples: Kate Hudson, Scotty of “Star Trek”, Amy Poehler, Dolly Parton, Kevin Hart, Prince Harry, Drew Barrymore, Jim Carrey, Bill Clinton, Ellen DeGeneres, Jay Leno, Robin Williams, Will Smith, Penny of “Big Bang Theory”, Melissa McCarthy

S-STYLE: “Today I have a way to help you have harmony with others and am first going to tell you about DISC styles and next I’m going to tell you how to adapt to them.”
S-STYLE examples: Sarah Jessica Parker, Magic Johnson, Jimmy Fallon, Mr. Sulu of “Star Trek”, Michael J. Fox, Tom Brokaw, Princess Diana, Mahatma Gandhi, Halle Berry, David Beckham, Matthew Broderick, Kate Duchess of Windsor, Rajesh of “Big Bang Theory”, Peyton Manning

C-STYLE: “Today I am going to take you through a step by step process providing you with additional information so you can think about the best way to analyze other people with DISC.”
C-STYLE examples: Spock of “Star Trek”, Jack Nicklaus, Al Gore, Jimmy Carter, Albert Einstein, Ted Koppel, Bill Gates, Bjorn Borg, Tiger Woods, Clint Eastwood, Keanu Reeves, Richard Nixon, Monica of “Friends”, Sherlock Holmes, Hermione of “Harry Potter”, Sheldon Cooper of “Big Bang Theory”

Jesus Financial Advice

JESUS’ FINANCIAL ADVICE To Warren Buffett, Bill Gates and Oprah Winfrey. Luke 16:1-15. Bill Gates is the richest man in America. When the stock market goes up and down his wealth rises and falls by billions of dollars, and it probably doesn’t bother him one way or the other. Bill Gates made his money in computer software. Warren Buffett made his money in business investments. And Oprah Winfrey, one of the richest women in America, made her money in the entertainment industry. Imagine a roundtable discussion with Bill Gates, Warren Buffett, Oprah Winfrey and Jesus Christ. The topic is money and Jesus is talking. He is obviously different from the rest around the table. On the one hand he doesn’t appear to have their wealth. On the other hand you sort of feel like he comes from “old money”, that his Father is really rich! Whatever your impression, Jesus is comfortable talking about money whether he has any or not. He doesn’t seem to be the least bit intimidated by three of the richest people in America. Jesus begins by telling a story. We recognize it as “The Parable of the Shrewd Manager” from Luke 16. It goes like this: “There was a rich man whose manager was accused of wasting his possessions. So he called him in and asked him, ‘What is this I hear about you? Give an account of your management, because you cannot be my manager any longer.’ “The manager said to himself, ‘What shall I do now? My master is taking away my job.

Government Affairs E-Update

Cisco Government Affairs E-Update, Volume 4, Issue 5, 12 March 2004. Brought to you by Cisco Government Affairs Online: http://www.cisco.com/gov Note: This report is generally issued on Fridays. This Week@Cisco in Government Affairs: Cisco's E-Update keeps you up to date on the major policy news of the week. Focusing on broadband, education and e-government areas, but covering high-tech and telecom in general, the E-Update is a great source of information for policymakers. To subscribe, send a message with “subscribe” in the subject line to “[email protected]”. If you have high-tech public policy news or announcements that you think other e-update subscribers would be interested in, please send them to [email protected]. There are over 1200 subscribers to Cisco Government Affairs’ eUpdate. RE-DESIGNED CISCO GOVERNMENT AFFAIRS WEBSITE – Please visit www.cisco.com/gov to view our redesigned worldwide government affairs website. You can reach any member of the team at: http://www.cisco.com/gov/contact/index.html. Also see Q&A with Laura Ipsen, Vice President of Worldwide Government Affairs, as she looks at 2004 and the issues that Cisco is focusing on: http://newsroom.cisco.com/dlls/media_info/public_policy_overview.html OP-ED - OPTIONS DRIVE INNOVATION, CREATE JOBS - MANDATORY EXPENSING PLAN WOULD HURT AN AILING ECONOMY - By Craig R. Barrett, John T. Chambers and Scott McNealy - As the Financial Accounting Standards Board (FASB) prepares its proposal on mandatory expensing of employee stock options, it's time to revisit the question of if -- and how -- stock options should be expensed.

Managing Generational Differences Goal

National Conference of Bar Foundations, February 6, 2015
Managing Generational Differences

Goal: To uncover the events, conditions, values and behaviors that make each generation unique!

Framing the Generations: Clashpoints

Jot down your thoughts:
• How does your organization break down by generation?
• A generation gap you experienced at work
• Where your team experiences gaps
• Advantages of your generation mix

For the first time in US history there are four generations in the workplace.

TRADITIONALISTS 1900-1945
Influences: The Great Depression; The New Deal; World War II; The G.I. Bill; The Cold War; The Atom Bomb
People in the News: Frank Sinatra; Ella Fitzgerald; FDR; Jackie Robinson; Henry Ford; John Wayne; Joe DiMaggio
Traits: Loyal/Civic Minded; Patriotic; Hard working; Fiscally conservative; Faith in Institutions; Work for same employer; Practical; Respect Authority
Growing Up: Disciplined; Conformers; Personal sacrifice; Children should be seen and not heard; Make do or do without

BABY BOOMERS 1946-1964
Influences: Booming birthrate; Economic prosperity; Vietnam; Watergate; Assassinations; Civil rights movement; Women’s movement; Sex, drugs, rock & roll
People in the News: John F. Kennedy; Martin Luther King, Jr.; Rosa Parks; The Jackson Five; Elvis; The Beatles; Neil Armstrong
Traits: Confident; Independent; Self-reliant; Competitive; Questioners of authority; Idealistic; Optimistic; Desire to stand out from the crowd; Cool; Work-centric; Relish long work hours; Defined by Professional Accomplishments
Growing Up: Stay-at-home moms; Suburbs; TV; Play well with others

GENERATION X 1965-1981
Influences: Divorce; Lay-offs; AIDS
People in the News: Bill Clinton; Bill Gates; Michael Jordan

Our Brochure

The think tank for a more inclusive, sustainable and forward-looking Europe

WHAT WE BELIEVE IN: #EUROPEMATTERS

In 2018, Friends of Europe launched its flagship initiative #EuropeMatters, bringing together business leaders, policymakers, civil society representatives and citizens to co-design a Europe that still matters in 2030, within the framework of a renewed European social contract. The initiative serves as the guiding principle across Friends of Europe’s current activities.

Since initiating the #EuropeMatters project, a vast network of stakeholders and citizens have contributed to the development of four scenarios setting out plausible futures for Europe in 2030. These were debated at the 15th edition of our annual flagship high-level roundtable, the State of Europe. We also conducted a poll of 11,000 EU citizens, which revealed striking insights across regions, gender and age – including the general belief that the EU is mostly irrelevant in their lives.

from Europe is security, jobs, and tackling climate change. Citizens also want more say in the decision-making process and better transparency.

We set out a vision for the Europe we want in our #EuropeMatters report, one that is based on input from our multi-stakeholder engagement and the messages we heard from citizens. This vision became a set of concrete recommendations for the 2019 European leadership on security, prosperity and sustainability. By focusing on the policy areas that matter most to people, these can help reinvigorate the relationship between citizens and the European project.

Our objective is to mobilise a coalition of the willing, united by their belief that #EuropeMatters and to together ensure that Europe is better prepared to take strategic

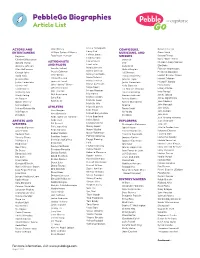

Pebblego Biographies Article List

PebbleGo Biographies Article List Kristie Yamaguchi ACTORS AND Walt Disney COMPOSERS, Delores Huerta Larry Bird ENTERTAINERS William Carlos Williams MUSICIANS, AND Diane Nash LeBron James Beyoncé Zora Neale Hurston SINGERS Donald Trump Lindsey Vonn Chadwick Boseman Beyoncé Doris “Dorie” Miller Lionel Messi Donald Trump ASTRONAUTS BTS Elizabeth Cady Stanton Lisa Leslie Dwayne Johnson AND PILOTS Celia Cruz Ella Baker Magic Johnson Ellen DeGeneres Amelia Earhart Duke Ellington Florence Nightingale Mamie Johnson George Takei Bessie Coleman Ed Sheeran Frederick Douglass Manny Machado Hoda Kotb Ellen Ochoa Francis Scott Key Harriet Beecher Stowe Maria Tallchief Jessica Alba Ellison Onizuka Jennifer Lopez Harriet Tubman Mario Lemieux Justin Timberlake James A. Lovell Justin Timberlake Hector P. Garcia Mary Lou Retton Kristen Bell John “Danny” Olivas Kelly Clarkson Helen Keller Maya Moore Lynda Carter John Herrington Lin-Manuel Miranda Hillary Clinton Megan Rapinoe Michael J. Fox Mae Jemison Louis Armstrong Irma Rangel Mia Hamm Mindy Kaling Neil Armstrong Marian Anderson James Jabara Michael Jordan Mr. Rogers Sally Ride Selena Gomez James Oglethorpe Michelle Kwan Oprah Winfrey Scott Kelly Selena Quintanilla Jane Addams Michelle Wie Selena Gomez Shakira John Hancock Miguel Cabrera Selena Quintanilla ATHLETES Taylor Swift John Lewis Alex Morgan Mike Trout Will Rogers Yo-Yo Ma John McCain Alex Ovechkin Mikhail Baryshnikov Zendaya Zendaya John Muir Babe Didrikson Zaharias Misty Copeland Jose Antonio Navarro ARTISTS AND Babe Ruth Mo’ne Davis EXPLORERS Juan de Onate Muhammad Ali WRITERS Bill Russell Christopher Columbus Julia Hill Nancy Lopez Amanda Gorman Billie Jean King Daniel Boone Juliette Gordon Low Naomi Osaka Anne Frank Brian Boitano Ernest Shackleton Kalpana Chawla Oscar Robertson Barbara Park Bubba Wallace Franciso Coronado Lucretia Mott Patrick Mahomes Beverly Cleary Candace Parker Jacques Cartier Mahatma Gandhi Peggy Fleming Bill Martin Jr. -

Media Reviews

Med. Hist. (2016), vol. 60(3), pp. 446–449. © The Author 2016. Published by Cambridge University Press 2016. doi:10.1017/mdh.2016.52

Media Reviews

Bill and Melinda Gates Foundation Visitor Center

The Visitor Center at the new headquarters of the Bill and Melinda Gates Foundation aims to promote awareness of the Foundation’s history, the philanthropic goals of its founders, and its current work in the areas of health, poverty, and education. Located across the street from the Space Needle and other tourist attractions at the sprawling Seattle Center, its interactive exhibits are designed to appeal to tourists and locals, novices and experts, and young and old alike. Although the space without doubt primarily serves a propaganda function for the Foundation and the Gates family, there is much of interest to the historian of medicine. A large portion of the exhibition area, in fact, is devoted to shaping perceptions of the Foundation’s past and ongoing work in public health and global health. The Visitor Center comprises a foyer and four large halls, in which a series of permanent, carefully co-ordinated multimedia exhibits convey the origins of the Foundation and its model of philanthropic work. In doing so, they also portray Bill Gates and Melinda French Gates as benevolent philanthropists who have always been committed to changing the world and improving human well-being. It is up to the visitor, of course, to decide if this is in fact true, but the Visitor Center attempts to make a powerful case. One of the rooms, ‘Family and Foundation’, features a large, interactive timeline that portrays Bill Gates and Melinda French Gates as heirs to family traditions of giving back, charts the early years of the Foundation’s development, and documents more recent projects and events.

Rotary and Polio Factsheet

ROTARY AND POLIO

Polio
Poliomyelitis (polio) is a paralyzing and potentially fatal disease that still threatens children in some parts of the world. The poliovirus invades the nervous system and can cause total paralysis in a matter of hours. It can strike at any age but mainly affects children under five. Polio is incurable, but completely vaccine-preventable.

PolioPlus
In 1985, Rotary launched its PolioPlus program, the first initiative to tackle global polio eradication through the mass vaccination of children. Rotary has contributed more than $1.5 billion and countless volunteer hours to immunize more than 2.5 billion children in 122 countries. In addition, Rotary’s advocacy efforts have played a role in decisions by donor governments to contribute more than $7.2 billion to the effort.

Global Polio Eradication Initiative
The Global Polio Eradication Initiative, formed in 1988, is a public-private partnership that includes Rotary, the World Health Organization, the U.S. Centers for Disease Control and Prevention, UNICEF, the Bill & Melinda Gates Foundation, and governments of the world. Rotary’s focus is advocacy, fundraising, volunteer recruitment and awareness-building.

Polio Today
Today, there are only two countries that have never stopped transmission of the wild poliovirus: Afghanistan and Pakistan. Fewer than 75 polio cases were confirmed worldwide in 2015, which is a reduction of more than 99.9 percent since the 1980s, when the world saw about 1,000 cases per day.

Challenges
The polio cases represented by the remaining one percent are the most difficult to prevent, due to factors including geographical isolation, poor public infrastructure, armed conflict and cultural barriers.

2016 CGI ANNUAL MEETING Media Kit

2016 CGI ANNUAL MEETING Media Kit

CONTENTS
ABOUT THE ANNUAL MEETING
PRESS LOGISTICS
AGENDA
    SUNDAY, SEPTEMBER 18
    MONDAY, SEPTEMBER 19
    TUESDAY, SEPTEMBER 20
    WEDNESDAY, SEPTEMBER 21
PLENARY SPEAKER BIOGRAPHIES
CGI LEGACY SPOTLIGHT
EVENT MAPS
CGI TIMELINE

*Updated September 17, 2016 Schedule subject

Alphabetical List of Persons for Whom Recommendations Were Received for Padma Awards - 2015

Alphabetical List of Persons for whom recommendations were received for Padma Awards - 2015

Sl. No. Name
1. Shri Aashish
2. Shri P. Abraham
3. Ms. Sonali Acharjee
4. Ms. Triveni Acharya
5. Guru Shashadhar Acharya
6. Shri Gautam Navnitlal Adhikari
7. Dr. Sunkara Venkata Adinarayana Rao
8. Shri Pankaj Advani
9. Shri Lal Krishna Advani
10. Dr. Devendra Kumar Agarwal
11. Shri Madan Mohan Agarwal
12. Dr. Nand Kishore Agarwal
13. Dr. Vinay Kumar Agarwal
14. Dr. Shekhar Agarwal
15. Dr. Sanjay Agarwala
16. Smt. Raj Kumari Aggarwal
17. Ms. Preety Aggarwal
18. Dr. S.P. Aggarwal
19. Dr. (Miss) Usha Aggarwal
20. Shri Vinod Aggarwal
21. Shri Jaikishan Aggarwal
22. Dr. Pratap Narayan Agrawal
23. Shri Badriprasad Agrawal
24. Dr. Sudhir Agrawal
25. Shri Vishnu Kumar Agrawal
26. Prof. (Dr.) Sujan Agrawal
27. Dr. Piyush C. Agrawal
28. Shri Subhash Chandra Agrawal
29. Dr. Sarojini Agrawal
30. Shri Sushiel Kumar Agrawal
31. Shri Anand Behari Agrawal
32. Dr. Varsha Agrawal
33. Dr. Ram Autar Agrawal
34. Shri Gopal Prahladrai Agrawal
35. Shri Anant Agrawal
36. Prof. Afroz Ahmad
37. Prof. Afzal Ahmad
38. Shri Habib Ahmed
39. Dr. Siddeek Ahmed Haji Panamtharayil
40. Dr. Ranjan Kumar Akhaury
41. Ms. Uzma Akhtar
42. Shri Eshan Akhtar
43. Shri Vishnu Akulwar
44. Shri Bruce Alberts
45. Captain Abbas Ali
46. Dr. Mohammed Ali
47. Dr. Govardhan Aliseri
48. Dr. Umar Alisha
49. Dr. M. Mohan Alva
50. Shri Mohammed Amar
51. Shri Gangai Amaren
52. Smt. Sindhutai Ramchandra Ambike
53. Mata Amritanandamayi
54. Dr. Manjula Anagani
55. Shri Anil Kumar Anand
56.