Speech Rehabilitation in Post-Stroke Aphasia Using Visual Illustration of Speech Articulators

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Intensive Auditory Comprehension Treatment for People with Severe Aphasia

Intensive Auditory Comprehension Treatment for People with Severe Aphasia: Outcomes and Use of Self-Directed Strategies A dissertation proposal submitted to the Division of Graduate Education and Research Of the University of Cincinnati in partial fulfillment of the requirements of the degree of DOCTOR OF PHILOSOPHY In the Department of Communication Sciences and Disorders In the College of Allied Health Sciences Dissertation Committee: Aimee Dietz, Ph D., chair Lisa Kelchner, Ph.D. Robin Thomas, Ph.D. Pete Scheifele, Ph.D 2012 by Kelly Knollman-Porter Intensive Auditory Comprehension Treatment Abstract The purpose of this study was to determine the efficacy of an intensive (2 hours/day, 5 days/week for 3 weeks) treatment protocol on individuals with severe, chronic speech perception or auditory comprehension deficits associated with aphasia. Two experiments were implemented to examine this purpose. Experiment I: Single Word Comprehension Approach (SWCA) established the effectiveness of an intensive treatment protocol on single word auditory comprehension (n = 6). Alternatively, Experiment II: Speech Perception Approach (SPA) examined the outcomes of an intensive treatment protocol on speech perception in individuals with profound global aphasia (n = 2). The researcher employed an ABA single subject design for both experiments, and examined the following variables: (1) changes in single word comprehension (SWCA) or speech perception (SPA); (2) the number of self-initiated requests for repetition and lip-reading cues; (3) the effectiveness of repetition and lip-reading cues; (4) the indirect effects of the protocols on verbal expression (SWCA – naming; SPA – repetition; Both – narrative skills); (5) and generalization to functional communication environments. Results revealed that all participants enrolled in the SWCA or SPA exhibited a lack of awareness regarding their comprehension impairment at the onset of treatment. -

Impact of a Reminder/Extinction Procedure on Threat-Conditioned Pupil Size and Skin Conductance Responses

Downloaded from learnmem.cshlp.org on March 28, 2020 - Published by Cold Spring Harbor Laboratory Press Research Impact of a reminder/extinction procedure on threat- conditioned pupil size and skin conductance responses Josua Zimmermann1,2 and Dominik R. Bach1,2,3 1Computational Psychiatry Research, Department of Psychiatry, Psychotherapy, and Psychosomatics, Psychiatric Hospital, University of Zurich, 8032 Zurich, Switzerland; 2Neuroscience Centre Zurich, University of Zurich, 8057 Zurich, Switzerland; 3Wellcome Centre for Human Neuroimaging and Max Planck/UCL Centre for Computational Psychiatry and Ageing Research, University College London, London WC1 3BG, United Kingdom A reminder can render consolidated memory labile and susceptible to amnesic agents during a reconsolidation window. For the case of threat memory (also termed fear memory), it has been suggested that extinction training during this reconso- lidation window has the same disruptive impact. This procedure could provide a powerful therapeutic principle for treat- ment of unwanted aversive memories. However, human research yielded contradictory results. Notably, all published positive replications quantified threat memory by conditioned skin conductance responses (SCR). Yet, other studies mea- suring SCR and/or fear-potentiated startle failed to observe an effect of a reminder/extinction procedure on the return of fear. Here we sought to shed light on this discrepancy by using a different autonomic response, namely, conditioned pupil dilation, in addition to SCR, in a replication of the original human study. N = 71 humans underwent a 3-d threat condition- ing, reminder/extinction, and reinstatement, procedure with 2 CS+, of which one was reminded. Participants successfully learned the threat association on day 1, extinguished conditioned responding on day 2, and showed reinstatement on day 3. -

Phonetic and Phonological Systems Analysis (PPSA) User Notes For

Phonetic and Phonological Systems Analysis (PPSA) User Notes for English Systems Sally Bates* and Jocelynne Watson** *University of St Mark and St John **Queen Margaret University, Edinburgh “A fully comprehensive analysis is not required for every child. A systematic, principled analysis is, however, necessary in all cases since it forms an integral part of the clinical decision-making process.” Bates & Watson (2012, p 105) Sally Bates & Jocelynne Watson (Authors) QMU & UCP Marjon © Phonetic and Phonological Systems Analysis (PPSA) is licensed under a Creative Commons Attribution-Non-Commercial-NoDerivs 3.0 Unported License. Table of Contents Phonetic and Phonological Systems Analysis (PPSA) Introduction 3 PPSA Child 1 Completed PPSA 5 Using the PPSA (Page 1) Singleton Consonants and Word Structure 8 PI (Phonetic Inventory) 8 Target 8 Correct Realisation 10 Errored Realisation and Deletion 12 Other Errors 15 Using the PPSA (Page 2) Consonant Clusters 16 WI Clusters 17 WM Clusters 18 WF Clusters 19 Using the PPSA (Page 3) Vowels 20 Using the PPSA (Page 2) Error Pattern Summary 24 Child 1 Interpretation 25 Child 5 Data Sample 27 Child 5 Completed PPSA 29 Child 5 Interpretation 32 Advantages of the PPSA – why we like this approach 34 What the PPSA doesn’t do 35 References 37 Key points of the Creative Commons License operating with this PPSA Resource 37 N.B. We recommend that the reader has a blank copy of the 3 page PPSA to follow as they go through this guide. This is also available as a free download (PPSA Charting and Summary Form) under the same creative commons license conditions. -

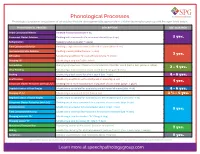

Phonological Processes

Phonological Processes Phonological processes are patterns of articulation that are developmentally appropriate in children learning to speak up until the ages listed below. PHONOLOGICAL PROCESS DESCRIPTION AGE ACQUIRED Initial Consonant Deletion Omitting first consonant (hat → at) Consonant Cluster Deletion Omitting both consonants of a consonant cluster (stop → op) 2 yrs. Reduplication Repeating syllables (water → wawa) Final Consonant Deletion Omitting a singleton consonant at the end of a word (nose → no) Unstressed Syllable Deletion Omitting a weak syllable (banana → nana) 3 yrs. Affrication Substituting an affricate for a nonaffricate (sheep → cheep) Stopping /f/ Substituting a stop for /f/ (fish → tish) Assimilation Changing a phoneme so it takes on a characteristic of another sound (bed → beb, yellow → lellow) 3 - 4 yrs. Velar Fronting Substituting a front sound for a back sound (cat → tat, gum → dum) Backing Substituting a back sound for a front sound (tap → cap) 4 - 5 yrs. Deaffrication Substituting an affricate with a continuant or stop (chip → sip) 4 yrs. Consonant Cluster Reduction (without /s/) Omitting one or more consonants in a sequence of consonants (grape → gape) Depalatalization of Final Singles Substituting a nonpalatal for a palatal sound at the end of a word (dish → dit) 4 - 6 yrs. Stopping of /s/ Substituting a stop sound for /s/ (sap → tap) 3 ½ - 5 yrs. Depalatalization of Initial Singles Substituting a nonpalatal for a palatal sound at the beginning of a word (shy → ty) Consonant Cluster Reduction (with /s/) Omitting one or more consonants in a sequence of consonants (step → tep) Alveolarization Substituting an alveolar for a nonalveolar sound (chew → too) 5 yrs. -

Verbal Language Spontaneous Recovery After Ischemic Stroke

Arq Neuropsiquiatr 2009;67(3-B):856-859 VERBAL LANGUAGE SPONTANEOUS RECOVERY AFTER ISCHEMIC STROKE Gabriela Camargo Remesso1, Ana Lúcia de Magalhães Leal Chiappetta1, Alexandre Santos Aguiar2, Márcia Maiumi Fukujima3, Gilmar Fernandes do Prado3 Abstract – Objective: To analyze the spontaneous recovery of the verbal language on patients who have had an ischemic stroke. Method: Retrospective analysis of 513 medical records. We characterize referring aspects for data identification, language deficit, spontaneous recovery and speech therapy. Results: The average age was 62.2 years old (SD= ±12.3), the average time of academic experience was 4.5 years (SD=±3.9), 245 (47.7%) patients presented language disturbance, 166 (54.0%) presented spontaneous recovery, from which 145 (47.2%) had expression deficit (p=0.001); 12 (3.9%) had comprehension deficit and 9 (2.9%) had both expression and comprehension deficit. Speech therapy was carried with 15 patients (4.8%) (p=0.001). Conclusion: The verbal language spontaneous recovery occurred in most of the patients being taken care of at the stroke out clinic, and expression disturbance was the most identified alteration. As expected, the left hemisphere was associated with the deficit and smoking and pregressive stroke were the language alteration primary associated factors. KEY WORDS: aphasia, language, stroke, neuronal plasticity. Recuperação espontânea da linguagem verbal após acidente cerebrovascular isquêmico Resumo – Objetivo: Analisar a recuperação espontânea da linguagem verbal em pacientes que -

The Brain That Changes Itself

The Brain That Changes Itself Stories of Personal Triumph from the Frontiers of Brain Science NORMAN DOIDGE, M.D. For Eugene L. Goldberg, M.D., because you said you might like to read it Contents 1 A Woman Perpetually Falling . Rescued by the Man Who Discovered the Plasticity of Our Senses 2 Building Herself a Better Brain A Woman Labeled "Retarded" Discovers How to Heal Herself 3 Redesigning the Brain A Scientist Changes Brains to Sharpen Perception and Memory, Increase Speed of Thought, and Heal Learning Problems 4 Acquiring Tastes and Loves What Neuroplasticity Teaches Us About Sexual Attraction and Love 5 Midnight Resurrections Stroke Victims Learn to Move and Speak Again 6 Brain Lock Unlocked Using Plasticity to Stop Worries, OPsessions, Compulsions, and Bad Habits 7 Pain The Dark Side of Plasticity 8 Imagination How Thinking Makes It So 9 Turning Our Ghosts into Ancestors Psychoanalysis as a Neuroplastic Therapy 10 Rejuvenation The Discovery of the Neuronal Stem Cell and Lessons for Preserving Our Brains 11 More than the Sum of Her Parts A Woman Shows Us How Radically Plastic the Brain Can Be Appendix 1 The Culturally Modified Brain Appendix 2 Plasticity and the Idea of Progress Note to the Reader All the names of people who have undergone neuroplastic transformations are real, except in the few places indicated, and in the cases of children and their families. The Notes and References section at the end of the book includes comments on both the chapters and the appendices. Preface This book is about the revolutionary discovery that the human brain can change itself, as told through the stories of the scientists, doctors, and patients who have together brought about these astonishing transformations. -

Relapse of Evaluative Learning-Evidence For

This may be the author’s version of a work that was submitted/accepted for publication in the following source: Luck, Camilla C. & Lipp, Ottmar V. (2020) Relapse of Evaluative Learning-Evidence for Reinstatement, Renewal, but Not Spontaneous Recovery, of Extinguished Evaluative Learning in a Picture-Picture Evaluative Conditioning Paradigm. Journal of Experimental Psychology: Learning Memory and Cognition, 46(6), pp. 1178-1206. This file was downloaded from: https://eprints.qut.edu.au/206340/ c 2019 American Psychological Association This work is covered by copyright. Unless the document is being made available under a Creative Commons Licence, you must assume that re-use is limited to personal use and that permission from the copyright owner must be obtained for all other uses. If the docu- ment is available under a Creative Commons License (or other specified license) then refer to the Licence for details of permitted re-use. It is a condition of access that users recog- nise and abide by the legal requirements associated with these rights. If you believe that this work infringes copyright please provide details by email to [email protected] Notice: Please note that this document may not be the Version of Record (i.e. published version) of the work. Author manuscript versions (as Sub- mitted for peer review or as Accepted for publication after peer review) can be identified by an absence of publisher branding and/or typeset appear- ance. If there is any doubt, please refer to the published source. https://doi.org/10.1037/xlm0000785 Running head: RELAPSE OF EVALUATIVE CONDITIONING 1 Relapse of Evaluative Learning – Evidence for Reinstatement, Renewal, but not Spontaneous Recovery, of Extinguished Evaluative Learning in a Picture-Picture Evaluative Conditioning Paradigm Camilla C. -

Recovery from Disorders of Consciousness: Mechanisms, Prognosis and Emerging Therapies

REVIEWS Recovery from disorders of consciousness: mechanisms, prognosis and emerging therapies Brian L. Edlow 1,2, Jan Claassen3, Nicholas D. Schiff4 and David M. Greer 5 ✉ Abstract | Substantial progress has been made over the past two decades in detecting, predicting and promoting recovery of consciousness in patients with disorders of consciousness (DoC) caused by severe brain injuries. Advanced neuroimaging and electrophysiological techniques have revealed new insights into the biological mechanisms underlying recovery of consciousness and have enabled the identification of preserved brain networks in patients who seem unresponsive, thus raising hope for more accurate diagnosis and prognosis. Emerging evidence suggests that covert consciousness, or cognitive motor dissociation (CMD), is present in up to 15–20% of patients with DoC and that detection of CMD in the intensive care unit can predict functional recovery at 1 year post injury. Although fundamental questions remain about which patients with DoC have the potential for recovery, novel pharmacological and electrophysiological therapies have shown the potential to reactivate injured neural networks and promote re-emergence of consciousness. In this Review, we focus on mechanisms of recovery from DoC in the acute and subacute-to-chronic stages, and we discuss recent progress in detecting and predicting recovery of consciousness. We also describe the developments in pharmacological and electro- physiological therapies that are creating new opportunities to improve the lives of patients with DoC. Disorders of consciousness (DoC) are characterized In this Review, we discuss mechanisms of recovery by alterations in arousal and/or awareness, and com- from DoC and prognostication of outcome, as well as 1Center for Neurotechnology mon causes of DoC include cardiac arrest, traumatic emerging treatments for patients along the entire tempo- and Neurorecovery, brain injury (TBI), intracerebral haemorrhage and ral continuum of DoC. -

From Substance Abuse Among Aboriginal Peoples in Canada Adrien Tempier

The International Indigenous Policy Journal Volume 2 Article 7 Issue 1 Health and Well-Being May 2011 Awakening: 'Spontaneous recovery’ from substance abuse among Aboriginal peoples in Canada Adrien Tempier Colleen A. Dell University of Saskatchewan, [email protected] Elder Campbell Papequash Randy Duncan Raymond Tempier Recommended Citation Tempier, A. , Dell, C. A. , Papequash, E. , Duncan, R. , Tempier, R. (2011). Awakening: 'Spontaneous recovery’ from substance abuse among Aboriginal peoples in Canada. The International Indigenous Policy Journal, 2(1) . DOI: 10.18584/iipj.2011.2.1.7 This Research is brought to you for free and open access by Scholarship@Western. It has been accepted for inclusion in The International Indigenous Policy Journal by an authorized administrator of Scholarship@Western. For more information, please contact [email protected]. Awakening: 'Spontaneous recovery’ from substance abuse among Aboriginal peoples in Canada Abstract There is a paucity of research on spontaneous recovery (SR) from substance abuse in general, and specific to Aboriginal peoples. There is also limited understanding of the healing process associated with SR. In this study, SR was examined among a group of Aboriginal peoples in Canada. Employing a decolonizing methodology, thematic analysis of traditional talking circle narratives identified an association between a traumatic life event and an ‘awakening.’ This ‘awakening’ was embedded in primary (i.e., consider impact on personal well-being) and secondary (i.e., implement alternative coping mechanism) cognitive appraisal processes and intrinsic and extrinsic motivation rooted in increased traditional Aboriginal cultural awareness and understanding. This contributed to both abstinence (i.e., recovery) and sustained well-being (i.e., continued abstinence). -

Neuroprognostication of Consciousness Recovery in a Patient with COVID-19 Related Encephalitis: Preliminary Findings from a Multimodal Approach

brain sciences Case Report Neuroprognostication of Consciousness Recovery in a Patient with COVID-19 Related Encephalitis: Preliminary Findings from a Multimodal Approach Aude Sangare 1,2,3,*, Anceline Dong 4, Melanie Valente 1, Nadya Pyatigorskaya 1,2,5 , Albert Cao 2,4, Victor Altmayer 4, Julie Zyss 3, Virginie Lambrecq 1,2,3, Damien Roux 6 , Quentin Morlon 6, Pauline Perez 1,3, Amina Ben Salah 1,3, Sara Virolle 7, Louis Puybasset 2,8, Jacobo D Sitt 1,2, Benjamin Rohaut 1,2,4,9 and Lionel Naccache 1,2,3 1 Brain institute—ICM, Inserm U1127, CNRS UMR 7225, Sorbonne Université, 75013 Paris, France; [email protected] (M.V.); [email protected] (N.P.); [email protected] (V.L.); [email protected] (P.P.); [email protected] (A.B.S.); [email protected] (J.D.S.); [email protected] (B.R.); [email protected] (L.N.) 2 CNRS, INSERM, Laboratoire d’Imagerie Biomédicale, Sorbonne Université, 75006 Paris, France; [email protected] (A.C.); [email protected] (L.P.) 3 Department of Neurophysiology, AP-HP, Hôpital Pitié-Salpêtrière, Sorbonne Université, 75006 Paris, France; [email protected] 4 Department of Neurology, Neuro-ICU, AP-HP, Hôpital Pitié-Salpêtrière, Sorbonne Université, 75006 Paris, France; [email protected] (A.D.); [email protected] (V.A.) 5 Department of Neuroradiology, AP-HP, Hôpital Pitié-Salpêtrière, Sorbonne Université, 75006 Paris, France 6 Department of Critical Care, Hôpital Louis Mourier, AP-HP, Université -

An Analysis of I-Umlaut in Old English

An Analysis of I-Umlaut in Old English Meizi Piao (Seoul National University) Meizi Piao. 2012. An Analysis of I-Umlaut in Old English. SNU Working Papers in English Linguistics and Language X, XX-XX Lass (1994) calls the period from Proto-Germanic to historical Old English ‘The Age of Harmony’. Among the harmony processes in this period, i-umlaut has been considered as ‘one of the most far-reaching and important sound changes’ (Hogg 1992, Lass 1994) or as ‘one of the least controversial sound changes’ (Colman 2005). This paper tries to analyze i-umlaut in Old English within the framework of the Autosegmental theory and the Optimality theory, and explain how suffix i or j in the unstressed syllable cause the stem vowels in the stressed syllable to be fronted or raised. (Seoul National University) Keywords: I-umlaut, Old English, Autosegmental theory, vowel harmony Optimality theory 1. Introduction Old English is an early form of the English language that was spoken and written by the Anglo-Saxons and their descendants in the area now known as England between at least the mid-5th century to the mid-12th century. It is a West Germanic language closely related to Old Frisian. During the period of Old English, one of the most important phonological processes is umlaut, which especially affects vowels, and become the reason for the superficially irregular and unrelated Modern English phenomenon. I-Umlaut is the conditioned sound change that the vowel either moves directly forward in the mouth [u>y, o>e, A>&] or forward and up [A>&>e]. -

Retrieval Induces Reconsolidation of Fear Extinction Memory

Retrieval induces reconsolidation of fear extinction memory Janine I. Rossatoa,b, Lia R. Bevilaquaa,b, Iván Izquierdoa,b,1, Jorge H. Medinaa,c, and Martín Cammarotaa,b,c,1 aCentro de Memória, Instituto do Cérebro, Pontifícia Universidade Católica do Rio Grande do Sul and bInstituto Nacional de Neurociência Translacional, Conselho Nacional de Desenvolvimento Científico e Tecnológico, RS90610-000, Porto Alegre, Brazil; and cInstituto de Biologia Celular y Neurociencias “Prof. Dr. Eduardo de Robertis”, Facultad de Medicina, Universidad de Buenos Aires, Ciudad Autónoma de Buenos Aires, CP1121 Buenos Aires, Argentina Contributed by Iván Izquierdo, November 4, 2010 (sent for review July 18, 2010) The nonreinforced expression of long-tem memory may lead to Results two opposite protein synthesis-dependent processes: extinction To analyze whether inhibition of protein synthesis after reac- and reconsolidation. Extinction weakens consolidated memories, tivation affects retention of fear extinction, we used a one-trial whereas reconsolidation allows incorporation of additional infor- step-down inhibitory avoidance (IA) task, a form of aversive mation into them. Knowledge about these two processes has ac- learning in which stepping down from a platform is paired with cumulated in recent years, but their possible interaction has not a mild foot shock. IA memory is hippocampus-dependent (19) been evaluated yet. Here, we report that inhibition of protein and can be extinguished by allowing the animal to step down to synthesis in the CA1 region of the dorsal hippocampus after re- and explore the floor of the training box in the absence of the trieval of fear extinction impedes subsequent reactivation of the ensuing foot shock.