Automation and Testing for Simplified Software Deployment

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

RZ/G Verified Linux Package for 64Bit Kernel V1.0.5-RT Release Note For

Release Note RZ/G Verified Linux Package for 64bit kernel Version 1.0.5-RT R01TU0311EJ0102 Rev. 1.02 Release Note for HTML5 Sep 7, 2020 Introduction This release note describes the contents, building procedures for HTML5 (Gecko) and important points of the RZ/G Verified Linux Package for 64bit kernel (hereinafter referred to as “VLP64”). In this release, the Linux packages for HTML5 are preliminary and provided AS IS with no warranty. If you need information to build Linux BSPs without a GUI Framework of HTML5, please refer to “RZ/G Verified Linux Package for 64bit kernel Version 1.0.5-RT Release Note”. Contents: 1. Release Items; 2. Build environment; 3. Building Instructions (3.1 Setup the Linux Host PC to build images; 3.2 Building images to run on the board; 3.3 Building SDK); 4. Components; 5. Restrictions

Fpm Documentation Release 1.7.0

fpm Documentation Release 1.7.0 Jordan Sissel Sep 08, 2017 Contents: 1 Backstory; 2 The Solution - FPM; 3 Things that should work; 4 Table of Contents (4.1 What is FPM?; 4.2 Installation; 4.3 Use Cases; 4.4 Packages; 4.5 Want to contribute? Or need help?; 4.6 Release Notes and Change Log). Note: The documentation here is a work-in-progress. If you want to contribute new docs or report problems, I invite you to do so on the project issue tracker. The goal of fpm is to make it easy and quick to build packages such as rpms, debs, OSX packages, etc. fpm, as a project, exists with the following principles in mind: • If fpm is not helping you make packages easily, then there is a bug in fpm. • If you are having a bad time with fpm, then there is a bug in fpm. • If the documentation is confusing, then this is a bug in fpm. If there is a bug in fpm, then we can work together to fix it. If you wish to report a bug/problem/whatever, I welcome you to do so on the project issue tracker. You can find out how to use fpm in the documentation. Chapter 1: Backstory. Sometimes packaging is done wrong (because you can’t do it right for all situations), but small tweaks can fix it.

Setting up Your Environment

APPENDIX A Setting Up Your Environment Choosing the correct tools to work with asyncio is a non-trivial choice, since it can significantly impact the availability and performance of asyncio. In this appendix, we discuss the interpreter and the packaging options that influence your asyncio experience. The Interpreter Depending on the API version of the interpreter, the syntax for declaring coroutines changes, and so do the recommendations for API usage. (Passing the loop parameter is considered deprecated for APIs newer than 3.6, instantiating your own loop should happen only in rare circumstances in Python 3.7, etc.) Availability Python interpreters adhere to the standard in varying degrees. This is because they are implementations/manifestations of the Python language specification, which is managed by the PSF. At the time of this writing, three relevant interpreters support at least parts of asyncio out of the box: CPython, MicroPython, and PyPy. (© Mohamed Mustapha Tahrioui 2019. M. M. Tahrioui, asyncio Recipes, https://doi.org/10.1007/978-1-4842-4401-2) Since we are ideally interested in a complete or semi-complete implementation of asyncio, our choice is limited to CPython and PyPy. Both of these products have a great community. Since we ideally use a lot of powerful stdlib features, we inevitably have to ask how completely a given interpreter implements the Python specification. The CPython interpreter is the reference implementation of the language specification and hence it adheres to the largest set of features in the language specification. At the time of this writing, CPython was targeting API version 3.7.

Open Data User Guide.Pdf

PARTICIPANT’S GUIDE Virtual Machine Connection Details Hostname: hackwe1.cs.uwindsor.ca Operating System: Debian 6.0 (“Squeeze”) IP Address: 137.207.82.181 Middleware: Node.js, Perl, PHP, Python DBMS: MySQL, PostgreSQL Connection Type: SSH Web Server: Apache 2.2 UserID: root Available Text Editors: nano, vi Password: Nekhiav3 UserID: hackwe Feel free to install additional packages or tools. Password: Imusyeg6 Google Maps API Important Paths and Links Follow this quick tutorial to get started Home Directory https://developers.google.com/maps/documentation/javascript/tutorial /home/hackwe The most common objects you will use are LatLng objects which store a lati- tude and longitude, and Marker objects which place a point on a Map object APT Package Management Tool help.ubuntu.com/community/AptGet/Howto LatLng Object developers.google.com/maps/documentation/javascript/reference#LatLng City of Windsor Open Data Catalogue Marker Object www.citywindsor.ca/opendata/Pages/Open-Data- developers.google.com/maps/documentation/javascript/reference#Marker Catalogue.aspx Map Object Windsor Hackforge developers.google.com/maps/documentation/javascript/reference#Map hackf.org WeTech Alliance wetech-alliance.com XKCD xkcd.com PARTICIPANT’S GUIDE Working with Geospatial (SHP) Data in Linux Node.js Python To manipulate shape files in Python 2.x, you’ll need the pyshp package. These Required Libraries instructions will quickly outline how to install and use this package to get GIS data out of *.shp files. node-shp: Github - https://github.com/yuletide/node-shp Installation npm - https://npmjs.org/package/shp To install pyshp you first must have setuptools installed in your python site- packages. -

Packaging and Distributing Python Projects

Packaging and Distributing Python Projects. Maximilian Nöthe, Astroparticle Physics, TU Dortmund. Summer School, 2021-06-10. Overview: Introduction; Using setuptools; Versions and Semantic Versioning; Choosing a License; Publishing Binary Wheels; A new Alternative: poetry; Conda Packages and conda-forge; Conclusions and Recommendations. Warning: Copying commands or code from PDF files is dangerous. Copy from the example files in the repository or type by hand. Typing by hand is best for learning. The Python Package Index: Python packages are published on the Python Package Index (https://pypi.org). pip install foo will by default: 1. Search for a package named foo on PyPI 2. Download the best available distribution for your platform 3. Install all dependencies of the package 4. Install the package. There is https://test.pypi.org for people to test their packaging code before publishing to “the real thing”. It is also possible to self-host a python package index. Source Distributions and Wheels. Source distributions: .zip or .tar.gz archives of the project; the simplest solution to publish your package; if a package contains compiled components, these need to be built at installation time. Wheels: standardized format for pre-built python packages; simple for pure-python packages (no compiled components); platform-dependent wheels for packages with compiled components (C-Extensions, Cython-Code, Wrappers for C or C++-Libraries, …). Wheels: Platform dependent binary wheels must follow standards to be uploaded to PyPI. This is to ensure they run on many systems (not just on your computer). Essentially: compile using the oldest C-Standard Library a package wants to support, and include all needed libraries in the wheel. More on how to actually build wheels for your own projects later.

Pip Documentation Release 18.1.Dev0

pip Documentation Release 18.1.dev0 pip developers August 22, 2018 Contents: 1 Quickstart; 2 Installation; 3 User Guide; 4 Reference Guide; 5 Development; 6 Release Notes. User list | Dev list | GitHub | PyPI | User IRC: #pypa | Dev IRC: #pypa-dev. The PyPA recommended tool for installing Python packages. Chapter 1: Quickstart. First, install pip. Install a package from PyPI: $ pip install SomePackage [...] Successfully installed SomePackage Install a package that’s already been downloaded from PyPI or obtained from elsewhere. This is useful if the target machine does not have a network connection: $ pip install SomePackage-1.0-py2.py3-none-any.whl [...] Successfully installed SomePackage Show what files were installed: $ pip show --files SomePackage Name: SomePackage Version: 1.0 Location: /my/env/lib/pythonx.x/site-packages Files: ../somepackage/__init__.py [...] List what packages are outdated: $ pip list --outdated SomePackage (Current: 1.0 Latest: 2.0) Upgrade a package: $ pip install --upgrade SomePackage [...] Found existing installation: SomePackage 1.0 Uninstalling SomePackage: Successfully uninstalled SomePackage Running setup.py install for SomePackage Successfully installed SomePackage Uninstall a package: $ pip uninstall SomePackage Uninstalling SomePackage: /my/env/lib/pythonx.x/site-packages/somepackage Proceed (y/n)? y Successfully uninstalled SomePackage Chapter 2: Installation. 2.1 Do I need to install pip? pip is already installed if you are using Python 2 >=2.7.9 or Python 3 >=3.4 downloaded from python.org or if you are working in a Virtual Environment created by virtualenv or pyvenv.
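
The wheel file installed in the excerpt above, SomePackage-1.0-py2.py3-none-any.whl, carries its compatibility information in its name. The following is a minimal sketch of how such a name might be split into its parts, assuming the standard wheel naming convention (distribution-version[-build]-python-abi-platform.whl). The names `WheelName` and `parse_wheel_filename` are hypothetical helpers for illustration and are not part of pip.

```python
from typing import NamedTuple, Optional


class WheelName(NamedTuple):
    """Components of a wheel filename (hypothetical helper type)."""
    distribution: str
    version: str
    build: Optional[str]
    python_tag: str
    abi_tag: str
    platform_tag: str


def parse_wheel_filename(filename: str) -> WheelName:
    """Split a wheel filename into its dash-separated components.

    Assumes the name follows the standard convention with either five
    fields or six fields (when an optional build tag is present).
    """
    if not filename.endswith(".whl"):
        raise ValueError(f"not a wheel filename: {filename!r}")
    parts = filename[: -len(".whl")].split("-")
    if len(parts) == 5:
        dist, version, py, abi, plat = parts
        build = None
    elif len(parts) == 6:
        dist, version, build, py, abi, plat = parts
    else:
        raise ValueError(f"unexpected number of fields in {filename!r}")
    return WheelName(dist, version, build, py, abi, plat)


info = parse_wheel_filename("SomePackage-1.0-py2.py3-none-any.whl")
# The python tag may list several interpreters separated by dots.
supported = info.python_tag.split(".")
```

Here the tags `none` and `any` indicate a pure-Python wheel with no ABI or platform restriction, which is why the same file can be installed on any machine without a network connection.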

Analytical and Other Software on the Secure Research Environment

Analytical and other software on the Secure Research Environment The Research Environment runs with the operating system: Microsoft Windows Server 2016 DataCenter edition. The environment is based on the Microsoft Data Science virtual machine template and includes the following software: • R Version 4.0.2 (2020-06-22), as part of Microsoft R Open • R Studio Desktop 1.3.1093 working with R 4.0.2 • Anaconda 3, including an environment for Python 3.8.5 • Python, 3.8.5 as part of the Anaconda base environment • Jupyter Notebook, as part of the Anaconda3 environment • Microsoft Office 2016 Standard edition, including Word, Excel, PowerPoint, and OneNote (Access not included) • JuliaPro 0.5.1.1 and the Juno IDE for Julia • PyCharm Community Edition, 2020.3 • PLINK • JAGS • WinBUGS • OpenBUGS • stan and rstan • Apache Spark 2.2.0 • SparkML and pySpark • Apache Drill 1.11.0 • MAPR Drill driver • VIM 8.0.606 • TensorFlow • MXNet, MXNet Model Server • Microsoft Cognitive Toolkit (CNTK) • Weka • Vowpal Wabbit • xgboost • Team Data Science Process (TDSP) Utilities • VOTT (Visual Object Tagging Tool) 1.6.11 • Microsoft Machine Learning Server • PowerBI • Docker version 10.03.5, build 2ee0c57608 • SQL Server Developer Edition (2017), including Management Studio and SQL Server Integration Services (SSIS) • Visual Studio Code 1.17.1 • Nodejs • 7-zip • Evince PDF Viewer • Acrobat Reader • Microsoft Photo Viewer • PowerShell 6 March 2021 Version 1.2 And in the Premium research environments: • STATA 16.1 • SAS 9.4, m4 (academic license) Users also have the ability to bring in additional software if the software was specified in the data request, the software runs in the operating system described above, and the user can provide Vivli with any necessary licensing keys. -

Checklist for a Software Management Plan

Checklist for a Software Management Plan Michael Jackson (ed.) Version 0.2 10.5281/zenodo.1460504 12 October 2018 Please cite as: Michael Jackson (ed.) (12 October 2018). Checklist for a Software Management Plan (Version 0.2). Zenodo. doi:10.5281/zenodo.1460504. Web site: https://www.software.ac.uk/software-management-plans Introduction It is easy to concentrate on short-term issues when developing research software. Getting publications, collaboration with others and the demands of a daily research routine can all conspire to prevent proper planning for the development of research software. A Software Management Plan can help us to define a set of structures and goals to help us to understand what we are going to write, who it is for, how we will get it to them, how will it help them, and how we will assess whether it has helped them. They also help us to understand what processes, resources and infrastructure we need and how we can use these to meet our own goals, in the short, medium and long term. They also encourage us to think about the future of our software once our project or funding period ends, and what our plans for its long-term sustainability are. This checklist is intended to help researchers write software management plans. Use of this checklist The Software Sustainability Institute provides this checklist on an "as-is" basis, makes no warranties regarding any information provided within and disclaims liability for damages resulting from using this information. You are solely responsible for determining the appropriateness of any advice and guidance provided and assume any risks associated with your use of this advice and guidance.

Netscaler® 13.0 - Third Party Notices

NetScaler® 13.0 - Third Party Notices NetScaler 13.0 may include third party software components licensed under the following terms. This list was generated using third party software as of the date listed. This list may change with specific versions of the product and may not be complete; it is provided “As-Is.” TO THE EXTENT PERMITTED BY APPLICABLE LAW, CITRIX AND ITS SUPPLIERS MAKE NO REPRESENTATIONS OR WARRANTIES, EXPRESS OR IMPLIED, STATUTORY OR OTHERWISE, WITH REGARD TO THE LIST OR ITS ACCURACY OR COMPLETENESS, OR WITH RESPECT TO ANY RESULTS TO BE OBTAINED FROM USE OR DISTRIBUTION OF THE LIST. BY USING OR DISTRIBUTING THE LIST, YOU AGREE THAT IN NO EVENT SHALL CITRIX BE HELD LIABLE FOR ANY SPECIAL, DIRECT, INDIRECT OR CONSEQUENTIAL DAMAGES OR ANY OTHER DAMAGES WHATSOEVER RESULTING FROM ANY USE OR DISTRIBUTION OF THIS LIST. Table of Contents: Adaptive Communication Environment (ACE) 6.3.1; Apache HTTP Server 2.4.39; Apache Tomcat 8.5.34; Apache Xalan C++ 1.10.0; Apache Xerces C++ 2.7.0

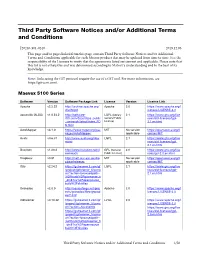

Third Party Software Notices And/Or Additional Terms and Conditions

Third Party Software Notices and/or Additional Terms and Conditions F20240-501-0240 2019.12.06 This page and/or pages linked from this page contain Third Party Software Notices and/or Additional Terms and Conditions applicable for each Matrox product that may be updated from time to time. It is the responsibility of the Licensee to verify that the agreements listed are current and applicable. Please note that this list is not exhaustive and was determined according to Matrox’s understanding and to the best of its knowledge. Note: Links using the GIT protocol require the use of a GIT tool. For more information, see https://git-scm.com/. Maevex 5100 Series

| Software Package | Software Version | Link | License | License Version | License Link |
| --- | --- | --- | --- | --- | --- |
| Apache | v2.2.22 | http://archive.apache.org/dist/httpd | Apache | 2.0 | https://www.apache.org/licenses/LICENSE-2.0 |
| asoundlib (ALSA) | v1.0.24.2 | http://software-dl.ti.com/dsps/dsps_public_sw/ezsdk/latest/index_FDS.html | LGPL (Library General Public License) | 2.1 | https://www.gnu.org/licenses/old-licenses/lgpl-2.1.en.html |
| AutoMapper | v3.1.0 | https://www.nuget.org/packages/AutoMapper/ | MIT | No version applicable | https://opensource.org/licenses/MIT |
| Avahi | v0.6.31 | http://www.avahi.org/download | LGPL | 2.1 | https://www.gnu.org/licenses/old-licenses/lgpl-2.1.en.html |
| Busybox | v1.20.2 | http://www.busybox.net/downloads | GPL (General Public License) | 2.0 | https://www.gnu.org/licenses/gpl-2.0.en.html |
| Dropbear | v0.51 | http://matt.ucc.asn.au/dropbear/releases | MIT | No version applicable | https://opensource.org/licenses/MIT |
| Glib | v2.24.2 | https://gstreamer.ti.com/gf | | | |

Python Development Documentation Release 1.0

Python Development Documentation Release 1.0 Victor Stinner Sep 24, 2021 Contents: 1 Python 3; 2 Python Packaging; 3 Python Date and Time; 4 Python Numbers; 5 Python Continuous Integration: Travis CI, AppVeyor, VSTS, Buildbots; 6 Python Debug Tools; 7 Debug CPython with gdb; 8 Unstable tests; 9 External libraries and Generated files; 10 Supported platforms and architectures; 11 Downstream patches; 12 Python on Android; 13 Python Development Workflow; 14 CPython infrastructure; 15 Python Memory; 16 Compile CPython on Windows; 17 Price of the portability; 18 C hacks in CPython source code; 19 Bugs found by the Python project; 20 Python Finalization; 21 Python builds; 22 Python Subinterpreters; 23 Add a field to PyConfig; 24 Embed Python in an application; 25 Python C API; 26 Python Thread State; 27 Fork in Python; 28 Test the next Python; 29 Popular third party extension modules; 30 Analysis of CPython binary assembly; 31 Python garbage collector; 32 Python performance; 33 CPython implementation optimizations; 34 Python Startup Time; 35 PEP 393 performances; 36 Python community; 37 Diversity; 38 Communication Channels; 39 CPython mentoring; 40 CPython Core Developers; 41 CPython tasks; 42 History of Python; 43 Misc notes; 44 See also; 45 Indices and tables; Index. Python Development Documentation, Release 1.0, by Victor Stinner. Chapter 1: Python 3. Python 3.0 was released in December 2008.