Author Verification in Stream of Text with Echo State Network-Based

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Politics and Metaphysics in Three Novels of Philip K. Dick

EUGÊNIA BARTHELMESS. Politics and Metaphysics in Three Novels of Philip K. Dick. Dissertation submitted to the Graduate Program in Letters, concentration in English-Language Literatures, Sector of Human Sciences, Letters and Arts, Universidade Federal do Paraná, in partial fulfillment of the requirements for the degree of Master. Advisor: Prof. Dr. BRUNILDA REICHMAN LEMOS. CURITIBA, 1987. ERRATA (for / read): p. 20, 6th column, line [illegible]: early fifties / (early fifties); p. 20, 6th column, line 16: space race / space race (late fifties); p. 33, line 13: 1889 / 1899; p. 38, line 4: reel."31 / reel; p. 41, line 21: ninteenth / nineteenth; p. 64, line 6: acience / science; p. 69, line 6: tear / tears; p. 70, line 21: miliion / million; p. 72, line 5: innocence / experience; p. 93, line 24: ROBINSON / Robinson; p. 93, line 26: Robinson / ROBINSON; p. 93, line 27: as deliberate / as a deliberate; p. 96, line 5: from / form; p. 96, line 8: male dis tory / maledictory; p. 115, line 27: cookedly / crookedly; p. 151, line 32: why this is / why is this; p. 151, line 33: Because it'll / Because (....) it'll; p. 189, line 15: mourmtain / mountain; p. 225, line 13: crete / create; p. 232, line 27: Massachusetts, 1960. / Massachusetts, M. I. T. -

Phoenix Renewed: The Survival and Mutation of Utopian Thought

PHOENIX RENEWED: THE SURVIVAL AND MUTATION OF UTOPIAN THOUGHT IN NORTH AMERICAN SCIENCE FICTION, 1965–1982 A DISSERTATION SUBMITTED TO THE FACULTY OF ATLANTA UNIVERSITY IN PARTIAL FULFILLMENT OF THE REQUIREMENTS FOR THE DEGREE OF DOCTOR OF PHILOSOPHY BY HODA MOUKHTAR ZAKI DEPARTMENT OF POLITICAL SCIENCE ATLANTA, GEORGIA DECEMBER 1984 ABSTRACT POLITICAL SCIENCE ZAKI, HODA MOUKHTAR B.A., American University in Cairo, 1971 M.A., Atlanta University, Atlanta, Georgia, 1974 Phoenix Renewed: The Survival and Mutation of Utopian Thought in North American Science Fiction, 1965–1982 Adviser: Dr. Alex Hillingham Thesis dated December, 1984 This dissertation is concerned with the status of utopianism in modern times. As such it is concerned with a historic problem in political theory, i.e., how to visualize a perfect human community. Since the turn of the 20th century, we have seen a decline in utopian literature. A variety of commentators, including Mannheim and Mumford, noted and decried this trend. It seemed ironic to those observers that utopia's demise would occur when humanity was closest to realizing material abundance for all. My research evaluates this irony. The primary data of my work are drawn from the genre of science fiction. The new locus for utopian thought seems natural enough. Science fiction is a speculative activity and, in its emphasis on science and technology, concerns itself with an area of human activity that has been intimately connected with the idea of progress since the European Enlightenment. A number of scholars, including Mumford, Sargent, Suvin, and Williams, have asserted that contemporary utopian thought could be found in science fiction. -

Science Fiction in the English Class (Arizona English Bulletin, v15 n1, October 1972)

DOCUMENT RESUME ED 091 691 CS 201 266 AUTHOR Donelson, Ken, Ed. TITLE Science Fiction in the English Class. INSTITUTION Arizona English Teachers Association, Tempe. PUB DATE Oct 72 NOTE 124p. AVAILABLE FROM Ken Donelson, Ed., Arizona English Bulletin, English Dept., Ariz. State Univ., Tempe, Ariz. 85281 ($1.50); National Council of Teachers of English, 1111 Kenyon Rd., Urbana, Ill. 61801 (Stock 37882; $1.50, non-member; $1.35, member) JOURNAL CIT Arizona English Bulletin; v15 n1 Entire Issue October 1972 EDRS PRICE MF-$0.75 HC-$5.40 PLUS POSTAGE DESCRIPTORS Booklists; Class Activities; *English Instruction; *Instructional Materials; Junior High Schools; Reading Materials; *Science Fiction; Secondary Education; Teaching Guides; *Teaching Techniques IDENTIFIERS Heinlein (Robert) ABSTRACT This volume contains suggestions, reading lists, and instructional materials designed for the classroom teacher planning a unit or course on science fiction. Topics covered include "The Study of Science Fiction: Is 'Future' Worth the Time?" "Yesterday and Tomorrow: A Study of the Utopian and Dystopian Vision," "Shaping Tomorrow, Today--A Rationale for the Teaching of Science Fiction," "Personalized Playmaking: A Contribution of Television to the Classroom," "Science Fiction Selection for Jr. High," "The Possible Gods: Religion in Science Fiction," "Science Fiction for Fun and Profit," "The Sexual Politics of Robert A. Heinlein," "Short Films and Science Fiction," "Of What Use: Science Fiction in the Junior High School," "Science Fiction and Films about the Future," "Three Monthly Escapes," "The Science Fiction Film," "Sociology in Adolescent Science Fiction," "Using Old Radio Programs to Teach Science Fiction," "'What's a Heaven for ?' or; Science Fiction in the Junior High School," "A Sampler of Science Fiction for Junior High," "Popular Literature: Matrix of Science Fiction," and "Out in Third Field with Robert A. -

Left SF: Selected and Annotated

The Anarchist Library (Mirror) Anti-Copyright. Mark Bould, Left Science Fiction: Selected and Annotated, If Not Always Exactly Recommended, [novels, stories, and plays]. 2016. Red Planets. A section of the appendix reproduced with permission from the author. Note the 2016 publication date: the number of possible texts for this list from 2016–2021 could nearly double the list. usa.anarchistlibraries.net. Pamela Zoline, ‘The Heat Death of the Universe’ (1967). Central to New Wave and feminist SF, it brings together the drudgery of a housewife’s daily life and the entropic universe. These lists of recommended reading and viewing take a deliberately broad view of what constitutes left SF. Not all of the authors and directors listed below would call themselves leftists, and some works are not so much leftist as of interest to leftists. None are completely unproblematic and some are not very good at all. Reading: Edward Abbey, The Monkey Wrench Gang (1975). Eco-saboteurs take on colluding business and government. Sequel: Hayduke Lives! (1990). See also Good Times (1980). Abe Kobo, Inter Ice Age 4 (1959). The most overtly science-fictional of Abe’s absurdist explorations of contemporary alienation. See also Woman in the Dunes (1962), The Face of Another (1964), The Ruined Map (1967), The Box Man (1973), The Ark Sakura (1984), Beyond the Curve (1991), The Kangaroo Notebook (1991). Chingiz Aitmatov, The Day Lasts Longer than a Hundred Years (1980). Surprisingly uncensored mediation of Central Asian tradition, Soviet modernity and the possibilities presented by an alien world. -

Rollins College Winter Term 1974

University of Central Florida STARS Text Materials of Central Florida Central Florida Memory 1-1-1974 Rollins College Winter Term 1974 Rollins College Find similar works at: https://stars.library.ucf.edu/cfm-texts University of Central Florida Libraries http://library.ucf.edu This Catalog is brought to you for free and open access by the Central Florida Memory at STARS. It has been accepted for inclusion in Text Materials of Central Florida by an authorized administrator of STARS. For more information, please contact [email protected]. Recommended Citation Rollins College, "Rollins College Winter Term 1974" (1974). Text Materials of Central Florida. 837. https://stars.library.ucf.edu/cfm-texts/837 Rollins College WINTER TERM - 1974 DIRECTED STUDIES AND INDEPENDENT STUDIES FOREWORD To The Student: The winter term is designed to provide a different type of learning experience from the fall and spring terms. With a concentration on one subject, you will have more opportunity to work "on your own", and to explore areas of learning which do not fit into the longer terms. Most courses in the winter term will be graded on a Credit/No Credit basis. The exceptions to this rule are courses designated as fulfilling the distribution requirements (marked "d" following the course number on the schedule) or fulfilling the foreign culture requirement (marked "c" following the course number), and senior independent studies required by the student's major department. The basis for grading each course is shown with the course description. Courses in this booklet are listed in departmental order, according to the instructors; however, many of the courses are interdisciplinary in content. -

Science Fiction Book Club Interview with Robert Silverberg (October 2019)

Science Fiction Book Club Interview with Robert Silverberg (October 2019) Robert Silverberg is a many-time winner of the Hugo and Nebula awards, was named to the Science Fiction Hall of Fame in 1999, and in 2004 was designated as a Grand Master by the Science Fiction Writers of America. His books and stories have been translated into forty languages. Among his best known titles are Nightwings, Dying Inside, the Book of Skulls, and the three volumes of the Majipoor Cycle: Lord Valentine’s Castle, Majipoor Chronicles, and Valentine Pontifex. Andrew ten Broek: Do you read stories through another eye when you're in the role of the editor, than when you would when you go through your own story or that of a befriended author? As an editor, I looked for stories that I wish I had written myself. Those were easy choices. Even easier to buy were stories that I wish I COULD have written, but probably wasn’t capable of doing. Mike Garber: A few brief comments on Tower of Glass, please. I don’t have anything specific to say. I wrote that novel about fifty years ago and what I remember is mainly that I liked it while I was doing it. Alan Ziebarth: When did you start reading science fiction? And who were your favorite science fiction authors when you began reading science fiction? I first encountered science fiction about 1946, with TWENTY THOUSAND LEAGUES UNDER THE SEA and THE TIME MACHINE. I discovered the s-f magazines in 1948. My early favorite writers were Henry Kuttner (especially under his Lewis Padgett pseudonym), A.E. -

Vector 60 Edwards 1972-06 BSFA

VECTOR 60, Journal of the BSFA; June 1972. Contents: Lead-In 3; Through A Glass Darkly ... John Brunner 5; Science Fiction and the Cinema ... Philip Strick 13; Book Reviews 16; Chester Song at Twilight, and The Fannish Inquisition ’larks ... Peter Roberts 21; The Frenzied Living Thing ... Bruce Gillespie 25; BSFA News 29; Edward John Carnell 1919-1972 ... Harry Harrison, Dan Morgan, Ted Tubb, Brian Aldiss 30; The Mail Response (letters) 36. The British Science Fiction Association: Chairman: John Brunner. Treasurer: Mrs G.T. Adams, 54 Cobden Road, Bitterne Park, Southampton SO2 4FT. Publicity/Advertising: Roger G. Peyton, 131 Gillhurst Road, Harborne, Birmingham B17 8PG. Membership Secretary: Mrs E. A. Vai ton, 25 Yewdale Crescent, Coventry CV2 2FF. Company Secretary: Grahame R. Poole, 23 Russet Rd, Cheltenham, Glos. GL51 7LN. Vector costs 30p. There are no subscriptions in the U.K.; however, membership of the BSFA costs £1.50 per annum. Outside the U.K. subscription rates are: U.S.A. & Canada: single copy 60p; 10 issues $5.50. Australia: single copy 60¢; 10 issues $A5.50 (Australian agent: Bruce Gillespie, PO Box 5195AA, Melbourne, Victoria 3001, Australia). All other countries at equivalent rates. VECTOR is the official journal of the British Science Fiction Association. Editor: Malcolm Edwards, 75A Harrow View, Harrow, Middx HA1 1RF. News Editor: Archie Mercer, 21 Trenethick Parc, Helston, Cornwall. Vector 60, June 1972. Copyright (C) Malcolm Edwards, 1972. LEAD-IN: Of the novels, only The Lathe of Heaven has so far appeared in this country (Gollancz); all the others are available in imported US paperback editions with the exception of Margaret and I, which I've never heard of. -

Notable SF&F Books

Notable SF&F Books Version 2.0.13 Publication information listed is generally the first trade publication, excluding earlier limited releases. Series information is usually via ISFDB. Aaronovitch, Ben Broken Homes Gollancz, 2013 HC $14.99 "Rivers of London" #4. Aaronovitch, Ben Foxglove Summer Gollancz, 2014 HC $14.99 "Rivers of London" #5. Aaronovitch, Ben The Hanging Tree Gollancz, 2016 HC $14.99 "Rivers of London" #6. Aaronovitch, Ben Moon Over Soho Del Rey, 2011 PB $7.99 "Rivers of London" #2. Aaronovitch, Ben Rivers of London Gollancz, 2011 HC $12.99 "Rivers of London" #1. Aaronovitch, Ben Whispers Under Ground Gollancz, 2012 HC $12.99 "Rivers of London" #3. Adams, Douglas Dirk Gently's Holistic Detective Agency Heinemann, 1987 HC $9.95 "Dirk Gently" #1. Adams, Douglas The Hitch Hiker's Guide to the Galaxy Pan Books, 1979 PB $0.80 "Hitchhiker's Guide to the Galaxy" #1. Adams, Douglas Life, the Universe, and Everything Pan Books, 1982 PB $1.50 "Hitchhiker's Guide to the Galaxy" #3. Adams, Douglas Mostly Harmless Heinemann, 1992 HC $12.99 "Hitchhiker's Guide to the Galaxy" #5. Adams, Douglas The Long Dark Tea-Time of the Soul Heinemann, 1988 HC $10.95 "Dirk Gently" #2. Adams, Douglas The Restaurant at the End of the Universe Pan Books, 1980 PB $0.95 "Hitchhiker's Guide to the Galaxy" #2. Adams, Douglas So Long and Thanks for All the Fish Pan Books, 1984 HC $6.95 "Hitchhiker's Guide to the Galaxy" #4. Adams, Richard Watership Down Rex Collings, 1972 HC $3.95 Carnegie Medal. -

Robert Silverberg Papers: Finding Aid

http://oac.cdlib.org/findaid/ark:/13030/c8w95gbv No online items Robert Silverberg Papers: Finding Aid Finding aid prepared by Katie J. Richardson, June 29, 2009. The Huntington Library, Art Collections, and Botanical Gardens Manuscripts Department 1151 Oxford Road San Marino, California 91108 Phone: (626) 405-2129 Email: [email protected] URL: http://www.huntington.org © 2009 The Huntington Library. All rights reserved. Robert Silverberg Papers: Finding Aid mssSIL 1-1882 1 Overview of the Collection Title: Robert Silverberg Papers Dates (inclusive): 1953-1992 Collection Number: mssSIL 1-1882 Creator: Silverberg, Robert. Extent: 1,882 pieces + ephemera in 89 boxes + 1 oversize folder Repository: The Huntington Library, Art Collections, and Botanical Gardens. Manuscripts Department 1151 Oxford Road San Marino, California 91108 Phone: (626) 405-2129 Email: [email protected] URL: http://www.huntington.org Abstract: This collection contains the papers of American author and science fiction writer Robert Silverberg (born 1935). Includes manuscripts of a selection of Silverberg’s literary works, mostly dating from 1973-1995, as well as correspondence, dating from 1954-1992, that chiefly concerns his professional dealings in relation to his writings and his business relationships with publishing companies. Language: English. Access Open to qualified researchers by prior application through the Reader Services Department. For more information, contact Reader Services. Publication Rights The Huntington Library does not require that researchers request permission to quote from or publish images of this material, nor does it charge fees for such activities. The responsibility for identifying the copyright holder, if there is one, and obtaining necessary permissions rests with the researcher. -

Hugo Award

Hugo Award
• The People of the Wind by Poul Anderson
• The Gods Themselves by Isaac Asimov
• The Windup Girl by Paolo Bacigalupi
• The Forge of God by Greg Bear
• Fahrenheit 451 by Ray Bradbury
• Earth by David Brin
• The Whole Man by John Brunner
• Cryoburn by Lois McMaster Bujold
• Skin Game by Jim Butcher
• Prentice Alvin by Orson Scott Card
• Seventh Son by Orson Scott Card
• The Yiddish Policemen’s Union by Michael Chabon
• A Closed and Common Orbit by Becky Chambers
• Childhood’s End by Arthur C. Clarke
• A Fall of Moondust by Arthur C. Clarke
• Jonathan Strange & Mr. Norrell by Susanna Clarke
• Babel-17 by Samuel Delany
• The Man in the High Castle by Philip Dick
• When Gravity Fails by George Alec Effinger
• To Your Scattered Bodies Go by Philip Jose Farmer
• Eifelheim by Michael Flynn
• Blackout by Mira Grant
• Feed by Mira Grant
• The Forever War by Joe Haldeman
• The Moon is a Harsh Mistress by Robert Heinlein
• Starship Troopers by Robert Heinlein
• Children of Dune by Frank Herbert
• Dune by Frank Herbert
• The Fifth Season by N.K. -

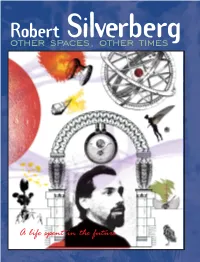

Robert Silverberg: Other Spaces, Other Times

Other Spaces, Other Times. Robert Silverberg. $29.95. “IN THE COURSE of my six decades of writing, I’ve witnessed the transition of science-fiction publishing from being a pulp-magazine-centered field to one dominated by mass-market paperback companies, and I’ve known and dealt with virtually every editor who played a role in that evolution. For much of that time I was close to the center of the field as writer and sometimes as editor, not only deeply involved in its commercial mutations but also privy to all the personal and professional gossip that it generated. All that special knowledge has left me with a sense of my responsibility to the field’s historians. I was there, I did this and did that, I worked with this great editor and that one, I knew all but a handful of the major writers on a first-name basis, and all of that will be lost if I don’t make some sort of record of it. Therefore it behooves me to set down an account of those experiences for those who will find them of value.” (From Silverberg’s introduction.) ROBERT SILVERBERG is one of the most important American science fiction writers of the 20th century. He rose to prominence during the 1950s at the end of the pulp era and the dawning of a more sophisticated kind of science fiction. One of the most prolific of writers, early on he would routinely crank out a story a day. By the late 1960s he was one of the small group of writers using science fiction as an art form and turning out award-winning stories and novels. -

Philcon 87 the 51St Philadelphia Science Fiction Conference

Philcon 87 The 51st Philadelphia Science Fiction Conference Presented by The Philadelphia Science Fiction Society November 13th, 14th, and 15th, 1987 Principal Speaker Robert Silverberg Guest Artist Tim Hildebrandt Special Guest Timothy Zahn The 1987 Philcon Committee Table of Chairman Programming (cont.) Todd Dashoff Contents Green Room A Message from the Vice-Chairman Sara Paul Chair..................... 3 Ira Kaplowitz Masquerade Philcon Highlights 4 Administration George Paczolt Principal Speaker, Secretary Science Programming Robert Silverberg... 6 Laura Paskman-Syms Hank Smith About Treasurer Writer’s Workshop Robert Silverberg... 7 Joyce L. Carroll Darrell Schweitzer Robert Silverberg: A Bibliography...... 10 Hotel Liaison John Betancourt Gary Feldbaum Exhibitions Pulp Magazines and Science Fiction 12 Operations ArtShow Guest Artist, Laura Paskman-Syms Yoel Attiya Tim Hildebrandt 16 Lew Wolkoff Joni Brill-Dashoff Rob Himmelsbach About Yale Edeiken Dealer’s Room Tim Hildebrandt 19 John Syms Special Guest PSFS Sales Donna Smith Timothy Zahn...... 22 Ira Kaplowitz Fixed Functions About Publications Timothy Zahn...... 24 Mark Trebing Babysitting Guests of Philcon 27 Margaret Phillips Christine Nealon Joyce Carroll Eva Whitley Art Show Rules... 28 Joann Lawler Committee Nurse Registration Barbara Higgins Art Credits Joann Lawler Con Suite Tim Hildebrandt: Dave Kowalczyk Rich Kabakjian Front cover, back cover, 4,7,17,18 Signs Janis Hoffing Wayne Zimmerman Den Charles Dougherty: 11,13 Programming Carol Kabakjian Becky Kaplowitz Wayne Zimmerman: Main Program 12 Barbara Higgins Information Sara Paul Crystal Hagel “About Tim Michael Cohen Logistics Hildebrandt” copyright Hank Smith Michele Weinstein © 1987 by Thranx Inc. Artist’s Workshop Morale Officer Copyright © 1987 by Michele Lundgren Margaret Phillips The Philadelphia Filking Personnel Science Fiction Society.