Mapping the Newspaper Industry in Transition

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

LEE ENTERPRISES, INCORPORATED (Exact Name of Registrant As Specified in Its Charter) Delaware 42-0823980 (State of Incorporation) (I.R.S

Table of Contents UNITED STATES SECURITIES AND EXCHANGE COMMISSION Washington, D.C. 20549 FORM 10-K [X] ANNUAL REPORT PURSUANT TO SECTION 13 OR 15(d) OF THE SECURITIES EXCHANGE ACT OF 1934 For The Fiscal Year Ended September 29, 2019 OR [ ] TRANSITION REPORT PURSUANT TO SECTION 13 OR 15(d) OF THE SECURITIES EXCHANGE ACT OF 1934 Commission File Number 1-6227 LEE ENTERPRISES, INCORPORATED (Exact name of Registrant as specified in its Charter) Delaware 42-0823980 (State of incorporation) (I.R.S. Employer Identification No.) 4600 E 53rd Street, Davenport, Iowa 52807 (Address of principal executive offices) (563) 383-2100 Registrant's telephone number, including area code Securities registered pursuant to Section 12(b) of the Act: Title of Each Class Trading Symbol(s) Name of Each Exchange On Which Registered Common Stock - $0.01 par value LEE New York Stock Exchange Indicate by check mark if the Registrant is a well-known seasoned issuer, as defined in Rule 405 of the Securities Act. Yes [ ] No [X] Indicate by check mark if the Registrant is not required to file reports pursuant to Section 13 or Section 15(d) of the Act. Yes [ ] No [X] Indicate by check mark whether the Registrant (1) has filed all reports required to be filed by Section 13 or 15(d) of the Securities Exchange Act of 1934 during the preceding 12 months (or for such shorter period that the Registrant was required to file such reports), and (2) has been subject to such filing requirements for the past 90 days. Yes [X] No [ ] Indicate by check mark whether the Registrant has submitted electronically every Interactive Data File required to be submitted pursuant to Rule 405 of Regulation S-T (§232.405 of this Chapter) during the preceding 12 months (or such shorter period that the Registrant was required to submit). -

Online Media and the 2016 US Presidential Election

Partisanship, Propaganda, and Disinformation: Online Media and the 2016 U.S. Presidential Election The Harvard community has made this article openly available. Please share how this access benefits you. Your story matters Citation Faris, Robert M., Hal Roberts, Bruce Etling, Nikki Bourassa, Ethan Zuckerman, and Yochai Benkler. 2017. Partisanship, Propaganda, and Disinformation: Online Media and the 2016 U.S. Presidential Election. Berkman Klein Center for Internet & Society Research Paper. Citable link http://nrs.harvard.edu/urn-3:HUL.InstRepos:33759251 Terms of Use This article was downloaded from Harvard University’s DASH repository, and is made available under the terms and conditions applicable to Other Posted Material, as set forth at http:// nrs.harvard.edu/urn-3:HUL.InstRepos:dash.current.terms-of- use#LAA AUGUST 2017 PARTISANSHIP, Robert Faris Hal Roberts PROPAGANDA, & Bruce Etling Nikki Bourassa DISINFORMATION Ethan Zuckerman Yochai Benkler Online Media & the 2016 U.S. Presidential Election ACKNOWLEDGMENTS This paper is the result of months of effort and has only come to be as a result of the generous input of many people from the Berkman Klein Center and beyond. Jonas Kaiser and Paola Villarreal expanded our thinking around methods and interpretation. Brendan Roach provided excellent research assistance. Rebekah Heacock Jones helped get this research off the ground, and Justin Clark helped bring it home. We are grateful to Gretchen Weber, David Talbot, and Daniel Dennis Jones for their assistance in the production and publication of this study. This paper has also benefited from contributions of many outside the Berkman Klein community. The entire Media Cloud team at the Center for Civic Media at MIT’s Media Lab has been essential to this research. -

Minority Percentages at Participating Newspapers

Minority Percentages at Participating Newspapers Asian Native Asian Native Am. Black Hisp Am. Total Am. Black Hisp Am. Total ALABAMA The Anniston Star........................................................3.0 3.0 0.0 0.0 6.1 Free Lance, Hollister ...................................................0.0 0.0 12.5 0.0 12.5 The News-Courier, Athens...........................................0.0 0.0 0.0 0.0 0.0 Lake County Record-Bee, Lakeport...............................0.0 0.0 0.0 0.0 0.0 The Birmingham News................................................0.7 16.7 0.7 0.0 18.1 The Lompoc Record..................................................20.0 0.0 0.0 0.0 20.0 The Decatur Daily........................................................0.0 8.6 0.0 0.0 8.6 Press-Telegram, Long Beach .......................................7.0 4.2 16.9 0.0 28.2 Dothan Eagle..............................................................0.0 4.3 0.0 0.0 4.3 Los Angeles Times......................................................8.5 3.4 6.4 0.2 18.6 Enterprise Ledger........................................................0.0 20.0 0.0 0.0 20.0 Madera Tribune...........................................................0.0 0.0 37.5 0.0 37.5 TimesDaily, Florence...................................................0.0 3.4 0.0 0.0 3.4 Appeal-Democrat, Marysville.......................................4.2 0.0 8.3 0.0 12.5 The Gadsden Times.....................................................0.0 0.0 0.0 0.0 0.0 Merced Sun-Star.........................................................5.0 -

Minority Percentages at Participating Newspapers

2012 Minority Percentages at Participating Newspapers American Asian Indian American Black Hispanic Multi-racial Total American Asian The News-Times, El Dorado 0.0 0.0 11.8 0.0 0.0 11.8 Indian American Black Hispanic Multi-racial Total Times Record, Fort Smith 0.0 0.0 0.0 0.0 3.3 3.3 ALABAMA Harrison Daily Times 0.0 0.0 0.0 0.0 0.0 0.0 The Alexander City Outlook 0.0 0.0 0.0 0.0 0.0 0.0 The Daily World, Helena 0.0 0.0 0.0 0.0 0.0 0.0 The Andalusia Star-News 0.0 0.0 0.0 0.0 0.0 0.0 The Sentinel-Record, Hot Springs National Park 0.0 0.0 0.0 0.0 0.0 0.0 The News-Courier, Athens 0.0 0.0 0.0 0.0 0.0 0.0 The Jonesboro Sun 0.0 0.0 0.0 0.0 0.0 0.0 The Birmingham News 0.0 0.0 20.2 0.0 0.0 20.2 Banner-News, Magnolia 0.0 0.0 15.4 0.0 0.0 15.4 The Cullman Times 0.0 0.0 0.0 0.0 0.0 0.0 Malvern Daily Record 0.0 0.0 0.0 0.0 0.0 0.0 The Decatur Daily 0.0 0.0 13.9 11.1 0.0 25.0 Paragould Daily Press 0.0 0.0 0.0 0.0 0.0 0.0 Enterprise Ledger 0.0 0.0 0.0 0.0 0.0 0.0 Pine Bluff Commercial 0.0 0.0 25.0 0.0 0.0 25.0 TimesDaily, Florence 0.0 0.0 4.8 0.0 0.0 4.8 The Daily Citizen, Searcy 0.0 0.0 0.0 0.0 0.0 0.0 Fort Payne Times-Journal 0.0 0.0 0.0 0.0 0.0 0.0 Stuttgart Daily Leader 0.0 0.0 0.0 0.0 0.0 0.0 Valley Times-News, Lanett 0.0 0.0 0.0 0.0 0.0 0.0 Evening Times, West Memphis 0.0 0.0 0.0 0.0 0.0 0.0 Press-Register, Mobile 0.0 0.0 8.7 0.0 1.4 10.1 CALIFORNIA Montgomery Advertiser 0.0 0.0 17.5 0.0 0.0 17.5 The Bakersfield Californian 0.0 2.4 2.4 16.7 0.0 21.4 The Selma Times-Journal 0.0 0.0 50.0 0.0 0.0 50.0 Desert Dispatch, Barstow 0.0 0.0 0.0 0.0 0.0 0.0 -

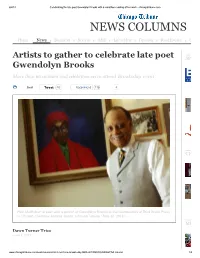

News Columns

6/4/13 Celebrating the late poet Gwendolyn Brooks with a marathon reading of her work - chicagotribune.com NEWS COLUMNS Home News Business Sports A&E Lifestyles Opinion Real Estate Cars Artists to gather to celebrate late poet SPECIAL ADVERTISING SECTIONS Gwendolyn Brooks More than 60 authors and celebrities set to attend 'Brooksday' event Email Tw eet 10 Recommend 118 4 CHICAGONOW Haki Madhubuti is seen with a portrait of Gwendolyn Brooks at the headquarters of Third World Press in Chicago. (Terrence Antonio James, Chicago Tribune / May 30, 2013) METROMIX Dawn Turner Trice June 3, 2013 www.chicagotribune.com/news/columnists/ct-met-trice-brooks-day-0603-20130603,0,4580527,full.column 1/5 6/4/13 Celebrating the late poet Gwendolyn Brooks with a marathon reading of her work - chicagotribune.com Haki Madhubuti met the late, great poet Gwendolyn Brooks in 1967 in a South Side church where she was teaching poetry writing to members of the Blackstone Rangers street gang. Back then, Madhubuti was a young, published poet who wasn't in a gang, just eager to participate in Brooks' workshop. "In the early days, my work was rough because I was rough," said Madhubuti, the founder and president of Third World Press. "I came from the streets of the West Side of Chicago and didn't take criticism lightly. We had blow ups and the last one was so difficult that we didn't speak for a couple of weeks." But he and Brooks sat down over a cup of coffee and made amends. "From that point on, I became her cultural son," he said. -

Blagojevich Often MIA, Aides Say Visited 02/22/2011

Blagojevich often MIA, aides say Visited 02/22/2011 SIGN IN HOME DELIVERY CHICAGOPOINTS ADVERTISE JOBS CARS REAL ESTATE APARTMENTS MORE CLASSIFIED Home News Suburbs Business Sports Entertainment TribU Health Opinion Breaking Newsletters Skilling's weather Traffic Obits Travel Amy Horoscopes Blogs Columns Photos Blagojevich often MIA, aides say 4.50 from 4 ratings Rod Blagojevich's approval ratings had tanked and he was furious. Illinois voters were ingrates, the former governor complained to an aide on an undercover recording played at his corruption trial Thursday. SHARE Facebook (0) Retweet (0) Digg (0) ShareThis Add your voice to the mix! Sign In | Register Chicago Tribune Discussions Discussion FAQ Privacy Policy Terms of Service 1400 What's Hot sort: newest first | oldest first most commented highest rated Neither side budging in Wisconsin union fight (584 comments) GDAVFFR at 9:14 PM July 9, 2010 Reply Its all we know of our politicians is, that their in it for money & power & Report Abuse Wisconsin governor warns that layoff notices those that profess their in it for truth & honor too few to be counted. 0 0 could go out next week if budget isn't solved (398 comments) Wisconsin senators living day-to- mk.interiorplanning at 1:30 PM July 9, 2010 Reply day south of border (292 I wonder if he was down in Argentina with Sanford? Report Abuse comments) 0 0 Wisconsin budget battle rallies continue (192 comments) _CrimeBoss_ at 1:13 PM July 9, 2010 Reply Blago was totally useless. That is why we voted for him. Report Abuse You can lead kids to broccoli, but 0 0 you can't make them eat (179 The logic: Blago was "way to dumb to hurt us". -

Bid to Bridge a Segregated City

BASEBALL ROYALTY MAKES TOUR OF TOWN As the buzz builds about a possible trade to the Cubs, Orioles star shortstop Manny Machado embraces the spotlight. David Haugh, Chicago Sports CHRIS A+E WALKER/ CHICAGO TRIBUNE THE WONDERS UNDERWATER Shedd’s ‘Underwater Beauty’ showcases extraordinary colors and patterns from the world of aquatic creatures EXPANDED SPORTS COVE SU BSCRIBER EXCLUSIVE RA GE Questions? Call 1-800-Tribune Tuesday, May 22, 2018 Breaking news at chicagotribune.com Lawmakers to get intel ‘review’ additional detail. Deal made for meeting over FBI source During a meeting with Trump, in Russia probe amid Trump’s demand Deputy Attorney General Rod Rosenstein and FBI Director By Desmond Butler infiltrated his presidential cam- Christopher Wray also reiterated and Chad Day paign. It’s unclear what the mem- an announcement late Sunday Associated Press bers will be allowed to review or if that the Justice Department’s the Justice Department will be inspector general will expand an WASHINGTON — The White providing any documents to Con- existing investigation into the House said Monday that top FBI gress. Russia probe by examining and Justice Department officials White House press secretary whether there was any improper have agreed to meet with congres- Sarah Huckabee Sanders said politically motivated surveillance. sional leaders and “review” highly Trump chief of staff John Kelly Rep. Devin Nunes, a Trump classified information the law- will broker the meeting among supporter and head of the House makers have been seeking as they congressional leaders and the FBI, intelligence committee, has been scrutinize the handling of the Justice Department and Office of demanding information on an FBI Russia investigation. -

Jazz Pianist Gerald Clayton Performs with Students - Chicagotribune.Com

6/24/13 Jazz pianist Gerald Clayton performs with students - chicagotribune.com Sign In or Sign Up MUSIC Home News Business Sports A&E Lifestyles Opinion Real Estate Cars Jobs Jazz pianist Gerald Clayton performs with students Ads by Google 2013 "Best Home Security" Who's Honest, and Who's Trusted? View Our 2013 Top Recommended! BestHomeSecurityCompanys.com Email Tw eet 1 0 TODAY'S FLYERS Target Dell US This Week Only Latest Flyer Sports Authority Home Depot USA Valid until Jun 30 3 Days Left Flyer alerts and shopping advice from ChicagoShopping.com enter your email SUBSCRIBE Gerald Clayton (June 6, 2013) SPECIAL ADVERTISING SECTIONS Howard Reich HOWARD REICH Arts critic 10:01 a.m. CDT, June 6, 2013 TRENDS IN CHICAGO Keep up with today's Gerald Clayton, one of the most accomplished and auto, home, food and promising young pianists in jazz, will collaborate with travel trends students from the Chicago Public Schools’ Advanced Arts PRIMETIME Education Program and Chicago High School for the Arts A special look at local Bio | E-mail | Recent columns (also known as ChiArts) at 7:30 p.m. Thursday at lifestyles for those over Columbia College Concert Hall, 1014 S. Michigan Ave. 50 Ads by Google Presented by the Thelonious Monk Institute of Jazz in What is Dementia SPRING STYLES conjunction with the Chicago Public Schools, the event also Concerned About Coffee helps early risers will feature Chicago saxophonists Jarrard Harris and Dementia? Learn Signs, be more productive Anthony Bruno. Clayton has been in residence with the Symptoms, Types & Treatment. students this week; Harris and Bruno have been their www.chicagotribune.com/entertainment/music/chi-gerald-clayton-20130606,0,3263134.column 1/3 6/24/13 Jazz pianist Gerald Clayton performs with students - chicagotribune.com instructors. -

Fueling Discovery Today, We Day in the College

Illustration by Elena Poiata, Wisconsin Foundation and Alumni Association Sunday, May 3, 2020 | go.madison.com/discovery 2 | SUNDAY, MAY 3, 2020 | WISCONSIN STATE JOURNAL UW-MADISON COLLEGE OF LETTERS & SCIENCE COLLEGE OF LETTERS & SCIENCE Curiosity-driven research fuels life-changing discovery at L&S n late February, I sat down to own research in their own words, About the write an opening piece for this not for the professional peers with interim dean I special section of the Wiscon- whom we are so used to discussing sin State Journal. our work, but in the spirit of the My essay was Wisconsin Idea. Eric M. Wilcots is the interim dean full of pride for my The pieces in this supplement of the College of Letters & Science. College of Letters & were chosen well before the start Prior to assuming that role, Wilcots Science colleagues of the global outbreak of the virus served as deputy dean, charged with and the ways in behind COVID-19 and do not spe- overseeing the college’s efforts on a which they are cifically touch on the pandemic. wide range of initiatives, including working to under- Yet, the underlying principle of inclusivity/diversity, undergraduate ERIC M. education enhancement, research WILCOTS stand and influence curiosity-driven research that how we all think guides the College of Letters & initiatives and research services. about our world. Science, reflected here, also in- He is also the Mary C. Jacoby Profes- The last few months have turned fuses our efforts to understand the sor in the Department of Astronomy, that world upside down. -

Madison Marathon & Run Madtown

PRESENTED BY PRESENTED BY SPONSORSHIP OPPORTUNITIES WHO WE ARE Fun for you, good for Madison Our mission at Madison Festivals, Inc. is to enrich our community by bringing together people, businesses, and charities. Incorporated on April 5, 1993, Madison Festivals, Inc. (MFI) was formed exclusively to organize and manage charitable events for the education, participation, and entertainment of the public in the area of Madison, Wisconsin. These events enhance Madison’s reputation as a great city to live in and visit, build community around volunteering, supporting local businesses and provide over $100,00 annually to many local charities and non-profits. MFI owns the Taste of Madison, Madison Marathon and Run Madtown. The events are managed and produced by contracting with Madison-based event management company, Race Day Events. A MADISON FESTIVALS PRODUCTION PRESENTED BY PRESENTED BY RUN MADTOWN MADISON MARATHON FRIDAY - SUNDAY FRIDAY - SUNDAY MAY 25 - 26, 2019 NOVEMBER 8-10, 2019 Run Madtown is a Saturday night-time Kids, 5K & 10K and a Sunday half Marathon over Memorial Day weekend. The Madison Marathon and half Marathon are run on Sunday morning of Veterans’ Day weekend. All races start and finish on Madison’s iconic Capitol Square and have courses that highlight surrounding Madison neighborhoods, famous sites and the University of Wisconsin campus. Run Madtown and the Madison Marathon both kick off with two-day pre-race expos at historical Monona Terrace and are brought to close on Sunday with post-race parties presented by Michelob Ultra. A great race in a Capital Place! In 2018 approximately 7,000 participants and over 36,000 cheering fans attended these events. -

Niles Herald- Spectator

© o NILES HERALD- SPECTATOR Sl.50 Thursday, October 22, 2015 n i1esheraJdspectatoicom Expense reports released GO Nues Township D219 paid for extensive travel, high-end hotels.Page 4 KEVIN TANAKA/PIONEER PRESS Scary sounds The Park Ridge Civic Orchestra hosts its family Halloween concert at the Pickwick Theatre. Page 23 SPORTS Game. Set Match. Pioneer Press previews this weekend's girls tennis state tournament. ABEl. URIBE/CHICAGO TRIBUNE Page 39 KEVIN TANAKA/PIONEER Mies North High School campus seen from Old Orchard Road PRESS LiVING Ghoulish treats Spice up your Halloween meals with creative reci- pes, such as gnarly "witch fingers" (chicken fingers) served with "eye ofnewt" (cooked peas). Area cooks share that recipe and more.INSIDE JUDY BUCHENOT/NAPERVILLE SUN 2 SHOUT OUT NILEs HERALD-SPECTATOR nilesheraldspectator.com Usha Kamaria, community leader Bob Fleck, Publisher/General Manager Usha Kamaria has been an "Gravity," "Deep Sea" and "Monkey suburbs®chicagotribune.com important leader in Skokie and a Kingdom?' Q. Do you have children? John Puterbaugh, Editor. 312-222-3331 [email protected] positive advocate for the large Indian community of Niles Town- A. Yes, we have one daughter Georgia Garvey, Managing Editor 312-222-2398; [email protected] ship. She sat on the Niles Township and one son. Q. Favorite charity? Matt Bute, Vice President of Advertising Board of Trustees and was a [email protected] founding member of Coming To- A. I believe chaity starts from gether in Niles Township and the home - taldng care of our elderly Local News Editor MAILING ADDRESS Gandhi Memorial Trust Pioneer and taking care of the helpless and Richard Ray, 312-222-3339 435 N. -

Lee Enterprises Appoints Publisher of Wisconsin State Journal

Lee Enterprises Appoints Publisher of Wisconsin State Journal April 18, 2006 MADISON, Wis.--(BUSINESS WIRE)--April 18, 2006--William K. Johnston, who began his newspaper career with Lee Enterprises, Incorporated (NYSE:LEE), 33 years ago in Madison, is returning as publisher of the Wisconsin State Journal. Johnston, currently publisher of the Lincoln Journal Star and regional executive for Lee publishing operations in Nebraska, will begin his new duties May 22. He will succeed James W. Hopson, who announced in March that he plans to retire at the end of the year. Until retirement, Hopson will continue to serve as a Lee vice president for publishing, overseeing other Lee newspapers in Wisconsin and Minnesota and handling special projects. Mary Junck, Lee chairman and chief executive officer, described Johnston as one of the company's most accomplished leaders. "Bill is a builder," she said. "On top of his own terrific track record as an advertising manager, general manager, publisher and regional executive throughout his long career in Lee, Bill has had an influential hand in the professional growth of eight of our current publishers, three of our top editors and many more key managers. He's continually improved our products and services, and he's also earned respect and admiration in the communities he's served." As publisher of the Wisconsin State Journal, Johnston also will become a principal officer of Capital Newspapers of Madison Newspapers, Inc., which is jointly owned by Lee and The Capital Times Co. "I'm extremely excited about this opportunity to return to my home state as publisher of a newspaper as terrific as the Wisconsin State Journal," he said.