Transformation Monoids in Programming Language Semantics


Recommended publications


General Education and Liberal Studies Course List

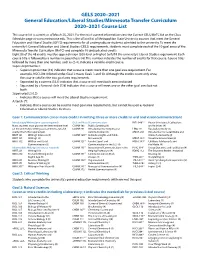

GELS 2020–2021: General Education/Liberal Studies/Minnesota Transfer Curriculum Course List

This course list is current as of March 25, 2021. For the most current information, view the Current GELS/MnTC list on the Class Schedule page at www.metrostate.edu. This is the official list of Metropolitan State University courses that meet the General Education and Liberal Studies (GELS) requirements for all undergraduate students admitted to the university.

To meet the university’s GELS requirements, students must complete each of the 10 goal areas of the Minnesota Transfer Curriculum (MnTC) and complete 48 unduplicated credits. Eight (8) of the 48 credits must be upper division (300-level or higher) to fulfill the university’s Liberal Studies requirement.

Each course title is followed by a number in parentheses, such as (4). This number indicates the number of credits for that course. Course titles followed by more than one number, such as (2-4), indicate a variable-credit course.

Superscript number:
• A superscript number (10) indicates that a course meets more than one goal area requirement. For example, NSCI 204¹⁰ listed under Goal 3 meets Goals 3 and 10. Although the credits count only once, the course satisfies the two goal area requirements.
• Numbers separated by a comma (3,LS) indicate that a course will meet both areas indicated.
• Numbers separated by a forward slash (7/8) indicate that a course will meet one or the other goal area but not both.

Superscript LS (LS):
• Indicates that a course will meet the Liberal Studies requirement.

Asterisk (*):
• Indicates that a course can be used to meet goal area requirements, but cannot be used as General Education or Liberal Studies Electives.

LINUX INTERNALS LABORATORY III. Understand Process

LINUX INTERNALS LABORATORY

VI Semester: IT
Course Code: AIT105
Category: Core
Hours / Week: L -, T -, P 3
Credits (C): 2
Maximum Marks: CIA 30, SEE 70, Total 100
Contact Classes: Nil, Tutorial Classes: Nil, Practical Classes: 36, Total Classes: 36

OBJECTIVES: The course should enable the students to:
I. Become familiar with the Linux command-line environment.
II. Understand system administration processes through hands-on experience.
III. Understand process management and inter-process communication techniques.

LIST OF EXPERIMENTS

Week-1 BASIC COMMANDS I
Study and practice various commands like man, passwd, tty, script, clear, date, cal, cp, mv, ln, rm, unlink, mkdir, rmdir, du, df, mount, umount, find, umask, ulimit, ps, who, w.

Week-2 BASIC COMMANDS II
Study and practice various commands like cat, tail, head, sort, nl, uniq, grep, egrep, fgrep, cut, paste, join, tee, pg, comm, cmp, diff, tr, awk, tar, cpio.

Week-3 SHELL PROGRAMMING I
a) Write a shell program to print all .txt files and .c files.
b) Write a shell program to move a set of files to a specified directory.
c) Write a shell program to display all the users who are currently logged in after a specified time.
d) Write a shell program to greet the user based on the login time.

Week-4 SHELL PROGRAMMING II
a) Write a shell program to pass a message to a group of members, an individual member, and all.
b) Write a shell program to count the number of words in a file.
c) Write a shell program to calculate the factorial of a given number.

Simple Laws About Nonprominent Properties of Binary Relations

Simple Laws about Nonprominent Properties of Binary Relations
Jochen Burghardt
jochen.burghardt alumni.tu-berlin.de
Nov 2018
arXiv:1806.05036v2 [math.LO] 20 Nov 2018

Abstract
We checked each binary relation on a 5-element set for a given set of properties, including usual ones like asymmetry and less known ones like Euclideanness. Using a poor man's Quine-McCluskey algorithm, we computed prime implicants of non-occurring property combinations, like "not irreflexive, but asymmetric". We considered the laws obtained this way, and manually proved them true for binary relations on arbitrary sets, thus contributing to the encyclopedic knowledge about less known properties.

Keywords: Binary relation; Quine-McCluskey algorithm; Hypotheses generation

Contents
1 Introduction
2 Definitions
3 Reported law suggestions
4 Formal proofs of property laws
4.1 Co-reflexivity
4.2 Reflexivity
4.3 Irreflexivity
4.4 Asymmetry
4.5 Symmetry
4.6 Quasi-transitivity
4.7 Anti-transitivity
4.8 Incomparability-transitivity
4.9 Euclideanness
4.10 Density
4.11 Connex and semi-connex relations
4.12 Seriality
4.13 Uniqueness
4.14 Semi-order property 1
4.15 Semi-order property 2
5 Examples
6 Implementation issues
6.1 Improved relation enumeration
6.2 Quine-McCluskey implementation
6.3 On finding "nice" laws
7 References

List of Figures
1 Source code for transitivity check
2 Source code to search for right Euclidean non-transitive relations
3 Timing vs. universe cardinality
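The exhaustive check described in this abstract can be illustrated on a smaller universe. The following is a minimal sketch, not the paper's implementation: it assumes a 3-element set instead of 5, skips the Quine-McCluskey step entirely, and all function names are hypothetical. It only counts how many relations exhibit the combination "asymmetric but not irreflexive".

```python
from itertools import product

def all_relations(universe):
    """Yield every binary relation on `universe` as a frozenset of pairs."""
    pairs = [(a, b) for a in universe for b in universe]
    for bits in product([False, True], repeat=len(pairs)):
        yield frozenset(p for p, keep in zip(pairs, bits) if keep)

def is_irreflexive(rel, universe):
    # No element is related to itself.
    return all((a, a) not in rel for a in universe)

def is_asymmetric(rel, universe):
    # aRb never holds together with bRa (this also rules out aRa).
    return all((b, a) not in rel for (a, b) in rel)

def count_combination(universe, holds, fails):
    """Count relations for which `holds` is true and `fails` is false."""
    return sum(
        1
        for rel in all_relations(universe)
        if holds(rel, universe) and not fails(rel, universe)
    )

universe = range(3)  # assumption: 3 elements keep the search at 2**9 relations
violations = count_combination(universe, is_asymmetric, is_irreflexive)
print(violations)
```

A count of zero on a small universe only suggests a law such as "asymmetric implies irreflexive"; as the abstract notes, the suggested laws still have to be proved for arbitrary sets.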

APPENDIX A Aegis and Unix Commands

APPENDIX A Aegis and Unix Commands

FUNCTION                                          AEGIS            BSD4.2            SYS5

ACCESS CONTROL AND SECURITY
change file protection modes                      edacl            chmod             chmod
change group                                      edacl            chgrp             chgrp
change owner                                      edacl            chown             chown
change password                                   chpass           passwd            passwd
print user + group ids + names                    pst, lusr        groups            id
set file-creation mode mask                       edacl, umask     umask             umask
show current permissions                          acl -all         ls -l             ls -l

DIRECTORY CONTROL
create a directory                                crd              mkdir             mkdir
compare two directories                           cmt              diff              dircmp
delete a directory (empty)                        dlt              rmdir             rmdir
delete a directory (not empty)                    dlt              rm -r             rm -r
list contents of a directory                      ld               ls -l             ls -l
move up one directory                             wd \ or wd ..    cd ..             cd ..
move up two directories                           wd \\            cd ../..          cd ../..
print working directory                           wd               pwd               pwd
set to network root                               wd //            cd //             cd //
set working directory                             wd               cd                cd
set working directory home                        wd-              cd                cd
show naming directory                             nd               printenv $HOME    echo $HOME

FILE CONTROL
change format of text file                        chpat                              newform
compare two files                                 cmf              cmp               cmp
concatenate a file                                catf             cat               cat
copy a file                                       cpf              cp                cp
copy std input to std output + files              tee              tee               tee
create a (symbolic) link                          crl              ln -s             ln -s
delete a file                                     dlf              rm                rm
maintain an archive                               a ref            ar                ar
move a file                                       mvf              mv                mv
dump a file                                       dmpf             od                od
print checksum and block-count of file            salvol -a        sum               sum
rename a file                                     chn              mv                mv
search a file for a pattern                       fpat             grep              grep
search or reject lines common to 2 sorted files   cmsrf            comm              comm
translate characters                              tlc              tr                tr

SHELL SCRIPT TOOLS
condition evaluation tools                        existf           test              test

(Using and Administering an Apollo Network, p. 265)

A General Account of Coinduction Up-To Filippo Bonchi, Daniela Petrişan, Damien Pous, Jurriaan Rot

A General Account of Coinduction Up-To
Filippo Bonchi, Daniela Petrişan, Damien Pous, Jurriaan Rot
May 2016

To cite this version: Filippo Bonchi, Daniela Petrişan, Damien Pous, Jurriaan Rot. A General Account of Coinduction Up-To. Acta Informatica, Springer Verlag, 2016, 10.1007/s00236-016-0271-4. hal-01442724

HAL Id: hal-01442724
https://hal.archives-ouvertes.fr/hal-01442724
Submitted on 20 Jan 2017

HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers.

Abstract
Bisimulation up-to enhances the coinductive proof method for bisimilarity, providing efficient proof techniques for checking properties of different kinds of systems. We prove the soundness of such techniques in a fibrational setting, building on the seminal work of Hermida and Jacobs. This allows us to systematically obtain up-to techniques not only for bisimilarity but for a large class of coinductive predicates modeled as coalgebras. The fact that bisimulations up to context can be safely used in any language specified by GSOS rules can also be seen as an instance of our framework, using the well-known observation by Turi and Plotkin that such languages form bialgebras.

The UNIX Time-Sharing System

The UNIX Time-Sharing System
Dennis M. Ritchie and Ken Thompson
Bell Laboratories

UNIX is a general-purpose, multi-user, interactive operating system for the Digital Equipment Corporation PDP-11/40 and 11/45 computers. It offers a number of features seldom found even in larger operating systems, including: (1) a hierarchical file system incorporating demountable volumes; (2) compatible file, device,

1. Introduction

There have been three versions of UNIX. The earliest version (circa 1969–70) ran on the Digital Equipment Corporation PDP-7 and -9 computers. The second version ran on the unprotected PDP-11/20 computer. This paper describes only the PDP-11/40 and /45 [1] system since it is more modern and many of the differences between it and older UNIX systems result from redesign of features found to be deficient or lacking.

Since PDP-11 UNIX became operational in February 1971, about 40 installations have been put into service; they are generally smaller than the system described here. Most of them are engaged in applications such as the preparation and formatting of patent applications and other textual material, the collection and processing of trouble data from various switching machines within the Bell System, and recording and checking telephone service orders. Our own installation is used mainly for research in operating systems, languages, computer networks, and other topics in computer science, and also for document preparation.

Perhaps the most important achievement of UNIX is to demonstrate that a powerful operating system for interactive use need not be expensive either in equipment or in human effort: UNIX can run on hardware costing as little as $40,000, and less than two man years were spent on the main system software.

Introduction to Relations

CHAPTER 7: Introduction to Relations

1. Relations and Their Properties

1.1. Definition of a Relation.

Definition: A binary relation from a set A to a set B is a subset R ⊆ A × B. If (a, b) ∈ R we say a is related to b by R. A is the domain of R, and B is the codomain of R. If A = B, R is called a binary relation on the set A.

Notation:
• If (a, b) ∈ R, then we write aRb.
• If (a, b) ∉ R, then we write a R̸ b.

Discussion

Notice that a relation is simply a subset of A × B. If (a, b) ∈ R, where R is some relation from A to B, we think of a as being assigned to b. In these senses students often associate relations with functions. In fact, a function is a special case of a relation as you will see in Example 1.2.4. Be warned, however, that a relation may differ from a function in two possible ways. If R is an arbitrary relation from A to B, then
• it is possible to have both (a, b) ∈ R and (a, b′) ∈ R, where b′ ≠ b; that is, an element in A could be related to any number of elements of B; or
• it is possible to have some element a in A that is not related to any element in B at all.

Often the relations in our examples do have special properties, but be careful not to assume that a given relation must have any of these properties.
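The definition and the two warnings above can be made concrete with a small sketch. The sets A and B, the relation R, and the helper names below are all hypothetical, chosen only for illustration: a relation is modeled as a Python set of pairs, and a function is the special case in which every element of A is related to exactly one element of B.

```python
A = {1, 2, 3}
B = {"x", "y"}

# A relation from A to B is any subset of A x B.
R = {(1, "x"), (1, "y"), (2, "x")}

def is_relation(rel, dom, cod):
    """True if every pair of `rel` lies in dom x cod."""
    return all(a in dom and b in cod for (a, b) in rel)

def related(rel, a, b):
    """aRb holds exactly when (a, b) is a member of the relation."""
    return (a, b) in rel

def is_function(rel, dom):
    """True if each element of `dom` is related to exactly one element."""
    return all(sum(1 for (x, _) in rel if x == a) == 1 for a in dom)

print(is_relation(R, A, B))   # R is a subset of A x B
print(related(R, 1, "y"))     # 1 is related to "y"
print(is_function(R, A))      # fails: 1 has two images, 3 has none

F = {(1, "x"), (2, "x"), (3, "y")}
print(is_function(F, A))      # every element of A has exactly one image
```

Here R shows both ways a relation can differ from a function: the element 1 is related to two elements of B, and the element 3 is related to none.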

How to Build a Search-Engine with Common Unix-Tools

The Tenth International Conference on Advances in Databases, Knowledge, and Data Applications (DBKDA 2018)
May 20 - 24, 2018 - Nice/France

How to build a Search-Engine with Common Unix-Tools

Andreas Schmidt
(1) Department of Informatics and Business Information Systems, University of Applied Sciences Karlsruhe, Germany
(2) Institute for Automation and Applied Informatics, Karlsruhe Institute of Technology, Germany

Resources available at http://www.smiffy.de/dbkda-2018/ (all materials copyright 2018 by Andreas Schmidt):
• Slideset
• Exercises
• Command refcard

Outlook
• General Architecture of an IR-System
• Naive Search
• Boolean Search
• Vector Space Model
• Inverted Index
• Query Processing
• Overview of useful Unix Tools
• Implementation Aspects
• Summary
Plus 2 hands-on exercises:
• Text analytics
• Building an Inverted Index & Query processing

What is Information Retrieval?
Information Retrieval (IR) is finding material (usually documents) of an unstructured nature (usually text) that satisfies an information need (usually a query) from within large collections (usually stored on computers). [Manning et al., 2008]

[Figure: a need for information is expressed as a query representation; the document collection is mapped to a document representation; the open question is how to match the two.]

Keyword Search
• Given:
  • Number of Keywords
  • Document collection
• Result:
  • All documents in the collection containing the keywords
  • (ranked by relevance)

Naive Approach
• Iterate over all documents d in the document collection
• For each document d, iterate over all words w and check whether all the given keywords appear in this document
• If yes, add the document to the result set
• Output the result set
• Extensions/Variants
  • Ranking (see examples later ...)
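The naive approach listed in these slides can be sketched in a few lines. This is a minimal illustration and not the tutorial's own Unix-tool pipeline: it assumes the collection fits in memory as a dictionary from document name to text, splits words on whitespace, ignores case, and does no ranking. The function name `naive_search` and the sample documents are hypothetical.

```python
def naive_search(documents, keywords):
    """Return the names of all documents that contain every keyword.

    `documents` maps a document name to its text (an assumption of this sketch).
    """
    wanted = {k.lower() for k in keywords}
    result = []
    for name, text in documents.items():      # iterate over all documents d
        words = set(text.lower().split())      # iterate over all words w of d
        if wanted <= words:                    # do all keywords appear in d?
            result.append(name)                # if yes, add d to the result set
    return result

docs = {
    "a.txt": "unix tools for text processing",
    "b.txt": "sorting text with unix sort",
    "c.txt": "an introduction to relations",
}
print(naive_search(docs, ["unix", "text"]))
```

Every query rescans the whole collection, which is the cost that the inverted index named in the outlook is meant to avoid.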

Sort, Uniq, Comm, Join Commands

The 19th International Conference on Web Engineering (ICWE-2019)
June 11 - 14, 2019 - Daejeon, Korea

Powerful Unix-Tools - sort & uniq & comm & join

Andreas Schmidt
Department of Informatics and Business Information Systems, University of Applied Sciences Karlsruhe, Germany
Institute for Automation and Applied Informatics, Karlsruhe Institute of Technology, Germany

sort
• Sort lines of text files
• Write sorted concatenation of all FILE(s) to standard output.
• With no FILE, or when FILE is -, read standard input.
• Sorting can be alphabetic, numeric, ascending, descending, case (in)sensitive
• Column(s)/bytes to be sorted can be specified
• Random sort option (-R)
• Removal of identical lines (-u)
• Examples:
  • Sort file city.csv starting with the second column (field delimiter: ,)
      sort -k2 -t',' city.csv
  • Merge the content of file1.txt and file2.txt and sort the result
      sort file1.txt file2.txt

sort - examples
• Sort file by country code and, as a second criterion, by population (numeric, descending)
    sort -t, -k2,2 -k4,4nr city.csv
  • -t, sets the field separator to ,
  • -k2,2 is the first sort criterion: from column 2 to column 2
  • -k4,4nr is the second sort criterion: from column 4 to column 4, numeric (-n), descending (-r)
• Sort by the second and third character of the first column
    sort -t, -k1.2,1.3 city.csv
• Generate a line of unique random numbers between 1 and 10
    seq 1 10 | sort -R | tr '\n' ' '
• Lottery forecast (6 from 49) - defective from time to time ;-)
    seq 1 49 | sort -R | head -n6
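To make the two-key example `sort -t, -k2,2 -k4,4nr city.csv` easier to follow, here is a rough Python equivalent. It is a sketch under stated assumptions: the slides do not show the layout of city.csv, so the column order (name, country code, district, population) and the sample rows are invented placeholders, and Python compares strings by code point whereas sort follows the current locale.

```python
import csv
import io

def sort_cities(csv_text):
    """Sort rows by column 2 (text, ascending), then column 4 (numeric, descending)."""
    rows = list(csv.reader(io.StringIO(csv_text)))
    # r[1] is the second field (-k2,2); -int(r[3]) mimics -k4,4nr.
    return sorted(rows, key=lambda r: (r[1], -int(r[3])))

# Placeholder data, not taken from the real city.csv.
sample = (
    "CityA,DE,RegionX,300\n"
    "CityB,FR,RegionY,500\n"
    "CityC,DE,RegionX,900\n"
    "CityD,FR,RegionZ,100\n"
)

for row in sort_cities(sample):
    print(",".join(row))
```

The tuple key plays the role of the two `-k` options: rows are grouped by country code first, and only ties are decided by population.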

The Linux Command Line

The Linux Command Line
Second Internet Edition
William E. Shotts, Jr.
A LinuxCommand.org Book

Copyright ©2008-2013, William E. Shotts, Jr.

This work is licensed under the Creative Commons Attribution-Noncommercial-No Derivative Works 3.0 United States License. To view a copy of this license, visit the link above or send a letter to Creative Commons, 171 Second Street, Suite 300, San Francisco, California, 94105, USA.

Linux® is the registered trademark of Linus Torvalds. All other trademarks belong to their respective owners.

This book is part of the LinuxCommand.org project, a site for Linux education and advocacy devoted to helping users of legacy operating systems migrate into the future. You may contact the LinuxCommand.org project at http://linuxcommand.org.

This book is also available in printed form, published by No Starch Press, and may be purchased wherever fine books are sold. No Starch Press also offers this book in electronic formats for most popular e-readers: http://nostarch.com/tlcl.htm

Release History (Version, Date, Description)
• 13.07, July 6, 2013: Second Internet Edition.
• 09.12, December 14, 2009: First Internet Edition.
• 09.11, November 19, 2009: Fourth draft with almost all reviewer feedback incorporated and edited through chapter 37.
• 09.10, October 3, 2009: Third draft with revised table formatting, partial application of reviewers' feedback and edited through chapter 18.
• 09.08, August 12, 2009: Second draft incorporating the first editing pass.
• 09.07, July 18, 2009: Completed first draft.

From Peirce's Algebra of Relations to Tarski's Calculus of Relations

The Origin of Relation Algebras in the Development and Axiomatization of the Calculus of Relations
Author(s): Roger D. Maddux
Source: Studia Logica: An International Journal for Symbolic Logic, Vol. 50, No. 3/4, Algebraic Logic (1991), pp. 421-455
Published by: Springer
Stable URL: http://www.jstor.org/stable/20015596

Gnu Coreutils Core GNU Utilities for Version 5.93, 2 November 2005

GNU Coreutils
Core GNU utilities for version 5.93, 2 November 2005
David MacKenzie et al.

This manual documents version 5.93 of the GNU core utilities, including the standard programs for text and file manipulation.

Copyright © 1994, 1995, 1996, 2000, 2001, 2002, 2003, 2004, 2005 Free Software Foundation, Inc.

Permission is granted to copy, distribute and/or modify this document under the terms of the GNU Free Documentation License, Version 1.1 or any later version published by the Free Software Foundation; with no Invariant Sections, with no Front-Cover Texts, and with no Back-Cover Texts. A copy of the license is included in the section entitled “GNU Free Documentation License”.

1 Introduction

This manual is a work in progress: many sections make no attempt to explain basic concepts in a way suitable for novices. Thus, if you are interested, please get involved in improving this manual. The entire GNU community will benefit.

The GNU utilities documented here are mostly compatible with the POSIX standard. Please report bugs to [email protected]. Remember to include the version number, machine architecture, input files, and any other information needed to reproduce the bug: your input, what you expected, what you got, and why it is wrong. Diffs are welcome, but please include a description of the problem as well, since this is sometimes difficult to infer. See section “Bugs” in Using and Porting GNU CC.

This manual was originally derived from the Unix man pages in the distributions, which were written by David MacKenzie and updated by Jim Meyering.