A Guide to Hard Drive Testing

Recommended publications

-

Open Source Used in 8685 for UPC V 4.0.0.8E25

Open Source Used In 8685 for UPC v 4.0.0.8E25 This document contains the licenses and notices for open source software used in this product. With respect to the free/open source software listed in this document, if you have any questions or wish to receive a copy of the source code to which you are entitled under the applicable free/open source license(s) (such as the GNU Lesser/General Public License) , please contact us at [email protected]. In your requests please include the following reference number 78EE117C99-20003954 Contents 1.1 BusyBox 1.11.1 1.1.1 Available under license 1.2 JPEG Decoder for UPC 8685 HPK driver 6b 27-Mar-1998 1.2.1 Notifications 1.2.2 Available under license 1.3 Linux Kernel stblinux-2.6.31-2.3 2.6.31-2.3 1.3.1 Available under license 1.4 NetSNMP in Broadcom 7019 chip Cable Modem Software 5.0.9 1.4.1 Available under license 1.5 OpenSSH Library in Broadcom 7019 chip Cable Modem Software 4.0p1 1.5.1 Available under license 1.6 OpenSSL Library in Broadcom 7019 chip Cable Modem Software 0.9.8 1.6.1 Notifications Open Source Used In 8685 for UPC v 4.0.0.8E25 1 1.6.2 Available under license 1.7 smartmontools 5.36 1.7.1 Available under license 1.1 BusyBox 1.11.1 1.1.1 Available under license : --- A note on GPL versions BusyBox is distributed under version 2 of the General Public License (included in its entirety, below). -

Superdoctor 5 User's Guide

SuperDoctor 5 User's Guide Version 1.8c The information in this USER’S GUIDE has been carefully reviewed and is believed to be accurate. The vendor assumes no responsibility for any inaccuracies that may be contained in this document, makes no commitment to update or to keep current the information in this manual, or to notify any person organization of the updates. Please Note: For the most up-to-date version of this manual, please see our web site at www.supermicro.com. Super Micro Computer, Inc. (“Supermicro”) reserves the right to make changes to the product described in this manual at any time and without notice. This product, including software, if any, and documentation may not, in whole or in part, be copied, photocopied, reproduced, translated or reduced to any medium or machine without prior written consent. DISCLAIMER OF WARRANTY ON SOFTWARE AND MATERIALS. You expressly acknowledge and agree that use of the Software and Materials is at your sole risk. FURTHERMORE, SUPER MICRO COMPUTER INC. DOES NOT WARRANT OR MAKE ANY REPRESENTATIONS REGARDING THE USE OR THE RESULTS OF THE USE OF THE SOFTWARE OR MATERIALS IN TERMS OF THEIR CORRECTNESS, ACCURACY, RELIABILITY, OR OTHERWISE. NO ORAL OR WRITTEN INFORMATION OR ADVICE GIVEN BY SUPER MICRO COMPUTER INC. OR SUPER MICRO COMPUTER INC. AUTHORIZED REPRESENTATIVE SHALL CREATE A WARRANTY OR IN ANY WAY INCREASE THE SCOPE OF THIS WARRANTY. SHOULD THE SOFTWARE AND/OR MATERIALS PROVE DEFECTIVE, YOU (AND NOT SUPER MICRO COMPUTER INC. OR A SUPER MICRO COMPUTER INC. AUTHORIZED REPRESENTATIVE) ASSUME THE ENTIRE COST OF ALL NECESSARY SERVICE, REPAIR, OR CORRECTION. -

Crash Prevention Hard Disks Don’T Always Die As Suddenly As You Might Think.The Right Tools Can Help You Detect Hard Disk Issues Before They Become Critical

SYSADMIN smartmontools Monitoring Hard Disks with smartmontools Crash Prevention Hard disks don’t always die as suddenly as you might think.The right tools can help you detect hard disk issues before they become critical. BY GABRIELE POHL a system that all modern ATA and SCSI the IDE bus as the primary master; it is hard disks, as well as SCSI tape drives, (or should be) accessible as /dev/hda. should have. Besides logging measured These commands all require root privi- values and errors, SMART has device leges, since non-privileged users do not testing features. Of course it is a good have access to device files. think to know about an impending disas- Listing 1 tells you that the drive is a ard disks have rotating moving ter well in advance. And this is exactly 34097H4 model by Maxtor; its serial parts – disks that rotate at 5400 what the Smartmontools package [1] number is L4101EJC; it has version Hor 7200 or more revolutions per does for you. It accesses the SMART fea- YAH814Y0 of the factory software; and it minute – and the heads are subject to ture provided by your hard disks and complies to version 6 of the ATA/ATAPI extreme acceleration and deceleration. runs a daemon called smartd to provide standard. The serial number can be very Because they have moving parts, hard automated controls. important when it comes to claiming a disks are subject to wear and tear. Manu- The package is available for the cur- replacement drive within the warranty facturers typically estimate a mean rent versions of the Linux, FreeBSD, period. -

SUSE Linux Enterprise Server 12 SP4 System Analysis and Tuning Guide System Analysis and Tuning Guide SUSE Linux Enterprise Server 12 SP4

SUSE Linux Enterprise Server 12 SP4 System Analysis and Tuning Guide System Analysis and Tuning Guide SUSE Linux Enterprise Server 12 SP4 An administrator's guide for problem detection, resolution and optimization. Find how to inspect and optimize your system by means of monitoring tools and how to eciently manage resources. Also contains an overview of common problems and solutions and of additional help and documentation resources. Publication Date: September 24, 2021 SUSE LLC 1800 South Novell Place Provo, UT 84606 USA https://documentation.suse.com Copyright © 2006– 2021 SUSE LLC and contributors. All rights reserved. Permission is granted to copy, distribute and/or modify this document under the terms of the GNU Free Documentation License, Version 1.2 or (at your option) version 1.3; with the Invariant Section being this copyright notice and license. A copy of the license version 1.2 is included in the section entitled “GNU Free Documentation License”. For SUSE trademarks, see https://www.suse.com/company/legal/ . All other third-party trademarks are the property of their respective owners. Trademark symbols (®, ™ etc.) denote trademarks of SUSE and its aliates. Asterisks (*) denote third-party trademarks. All information found in this book has been compiled with utmost attention to detail. However, this does not guarantee complete accuracy. Neither SUSE LLC, its aliates, the authors nor the translators shall be held liable for possible errors or the consequences thereof. Contents About This Guide xii 1 Available Documentation xiii -

Operating System Requirements for Red Hat Enterprise Linux CONFIDENTIAL INFORMATION the Information Herein Is the Property of Ex Libris Ltd

Operating System Requirements for Red Hat Enterprise Linux CONFIDENTIAL INFORMATION The information herein is the property of Ex Libris Ltd. or its affiliates and any misuse or abuse will result in economic loss. DO NOT COPY UNLESS YOU HAVE BEEN GIVEN SPECIFIC WRITTEN AUTHORIZATION FROM EX LIBRIS LTD. This document is provided for limited and restricted purposes in accordance with a binding contract with Ex Libris Ltd. or an affiliate. The information herein includes trade secrets and is confidential. DISCLAIMER The information in this document will be subject to periodic change and updating. Please confirm that you have the most current documentation. There are no warranties of any kind, express or implied, provided in this documentation, other than those expressly agreed upon in the applicable Ex Libris contract. This information is provided AS IS. Unless otherwise agreed, Ex Libris shall not be liable for any damages for use of this document, including, without limitation, consequential, punitive, indirect or direct damages. Any references in this document to third‐party material (including third‐party Web sites) are provided for convenience only and do not in any manner serve as an endorsement of that third‐ party material or those Web sites. The third‐party materials are not part of the materials for this Ex Libris product and Ex Libris has no liability for such materials. TRADEMARKS Ex Libris, the Ex Libris logo, Alma, campusM, Esploro, Leganto, Primo, Rosetta, Summon, ALEPH 500, SFX, SFXIT, MetaLib, MetaSearch, MetaIndex and other Ex Libris products and services referenced herein are trademarks of Ex Libris, and may be registered in certain jurisdictions. -

Preparing for Future Data Center Professionals: Integrating Storage Technology Into the Computer Information Technology Curriculum

Session S2E Preparing for Future Data Center Professionals: Integrating Storage Technology into the Computer Information Technology Curriculum Wei Hao, Hetal Jasani, Traian Marius Truta Northern Kentucky University, [email protected], [email protected], [email protected] Abstract -Information brings economic value to the To address the above problems, we have developed a new customers and data is the "soul" of the enterprise. Data course entitled CIT 465/565 - Storage Administration, for centers are playing more and more important roles in senior undergraduate students majoring in CIT and for the enterprises. Storage technology is one of the graduate students in the Master of Science in Computer fundamental technologies behind data centers. The Information Technology (MSCIT) at NKU. Since both our storage knowledge and skills are needed for data center CIT programs emphasize the hands-on learning, we have professionals. Thus, we have developed a new course, developed not only lecture components but also laboratory components for the course. The lecture components are Storage Administration, for Computer Information designed to cover three parts: storage fundamentals, storage Technology (CIT) major students at Northern Kentucky networks, and emerging technologies and data centers. The University (NKU). Since our CIT program emphasizes storage fundamentals part focuses on the fundamental hands-on learning, we have developed the course based storage concepts, such as storage devices, disk interfaces, on hands-on laboratory components. The laboratory disk geometry, disk partitions, disk performance, files components are developed based on open source systems, Redundant Array of Independent Disks (RAID), software and simulator software. In this paper, we hot swap, Logical Volume Management (LVM), and storage describe the hands-on laboratory components in details. -

Licensing Information User Manual Oracle® ZFS

Licensing Information User Manual ® Oracle ZFS Storage ZS3-2 Release OS8.6.0 Part No: E71914-01 July 2016 Licensing Information User Manual Oracle ZFS Storage ZS3-2 Part No: E71914-01 Copyright © 2013, 2016, Oracle and/or its affiliates. All rights reserved. This software and related documentation are provided under a license agreement containing restrictions on use and disclosure and are protected by intellectual property laws. Except as expressly permitted in your license agreement or allowed by law, you may not use, copy, reproduce, translate, broadcast, modify, license, transmit, distribute, exhibit, perform, publish, or display any part, in any form, or by any means. Reverse engineering, disassembly, or decompilation of this software, unless required by law for interoperability, is prohibited. The information contained herein is subject to change without notice and is not warranted to be error-free. If you find any errors, please report them to us in writing. If this is software or related documentation that is delivered to the U.S. Government or anyone licensing it on behalf of the U.S. Government, then the following notice is applicable: U.S. GOVERNMENT END USERS. Oracle programs, including any operating system, integrated software, any programs installed on the hardware, and/or documentation, delivered to U.S. Government end users are "commercial computer software" pursuant to the applicable Federal Acquisition Regulation and agency-specific supplemental regulations. As such, use, duplication, disclosure, modification, and adaptation of the programs, including any operating system, integrated software, any programs installed on the hardware, and/or documentation, shall be subject to license terms and license restrictions applicable to the programs. -

Eyeglass Search OSS Licenses and Packages V9

ECA 15.1 opensuse https://en.opensuse.org/openSUSE:License Package Licence Name Version Type Key Licence Name opensuse 15.1 OS annogen:annogen 0.1.0 JAR Not Found antlr:antlr 2.7.7 JAR BSD Berkeley Software Distribution (BSD) aopalliance:aopalliance 1 JAR Public DomainPublic Domain asm:asm 3.1 JAR Not Found axis:axis 1.4 JAR Apache-2.0 The Apache Software License, Version 2.0 axis:axis-wsdl4j 1.5.1 JAR Not Found backport-util-concurrent:backport-util-concurrent3.1 JAR Public DomainPublic Domain com.amazonaws:aws-java-sdk 1.1.7.1 JAR Apache-2.0 The Apache Software License, Version 2.0 com.beust:jcommander 1.72 JAR Apache-2.0 The Apache Software License, Version 2.0 com.carrotsearch:hppc 0.6.0 JAR Apache-2.0 The Apache Software License, Version 2.0 com.clearspring.analytics:stream 2.7.0 JAR Apache-2.0 The Apache Software License, Version 2.0 com.drewnoakes:metadata-extractor 2.4.0-beta-1JAR Public DomainPublic Domain com.esotericsoftware:kryo-shaded 3.0.3 JAR BSD Berkeley Software Distribution (BSD) com.esotericsoftware:minlog 1.3.0 JAR BSD Berkeley Software Distribution (BSD) com.fasterxml.jackson.core:jackson-annotations2.6.1 JAR Apache-2.0 The Apache Software License, Version 2.0 com.fasterxml.jackson.core:jackson-annotations2.5.0 JAR Apache-2.0 The Apache Software License, Version 2.0 com.fasterxml.jackson.core:jackson-annotations2.2.0 JAR Apache-2.0 The Apache Software License, Version 2.0 com.fasterxml.jackson.core:jackson-annotations2.9.0 JAR Apache-2.0 The Apache Software License, Version 2.0 com.fasterxml.jackson.core:jackson-annotations2.6.0 -

Assessment of Current Health and Remaining Useful Life of Hard Disk Drives

Assessment of Current Health and Remaining Useful Life of Hard Disk Drives A Thesis Presented by Yogesh G. Bagul to The Department of Mechanical and Industrial Engineering in partial fulfillment of the requirements for the degree of Master of Science in Computer Systems Engineering Northeastern University Boston, Massachusetts USA January 2009 Northeastern University Graduate School of Engineering Thesis Title: Assessment of Current Health and Remaining Useful Life of Hard Disk Drives Author: Yogesh G. Bagul Department: Mechanical and Industrial Engineering Department Approved for Thesis Requirement of the Master of Science Degree ii © 2009 Yogesh G. Bagul iii ACKNOWLEDGEMENTS I would like to express my sincere gratitude to my thesis advisor, Dr. Ibrahim Zeid, for his guidance and support. I would also like to thank Dr. Sagar Kamarthi for his inspiration and guidance. I learned many things related to research from both of them. Their truly scientist intuition and an oasis of ideas always inspired and motivated me to fulfill their expectations. I would also like to thank the faculty and staff of Mechanical and Industrial Engineering Department for their timely support. I would also like to thank Tom Papadopoulos and James Jones for all the technical help they did. Also thanks to the Northeastern University for awarding me a Stipended Graduate Assistantship and providing me with the financial means to complete this project. I cannot end without thanking my family, especially Lt Cdr Rahul (my brother), on whose constant encouragement and love I have relied throughout my time at the Northeastern University. My successful completion of MS thesis would not have been possible without their loving support, caring thoughts, superior guidance, and endless patience. -

Oracle® Database Client Installation Guide

Oracle® Database Client Installation Guide 12c Release 2 (12.2) for Linux E85761-05 October 2019 Oracle Database Client Installation Guide, 12c Release 2 (12.2) for Linux E85761-05 Copyright © 2015, 2019, Oracle and/or its affiliates. All rights reserved. Primary Author: Prakash Jashnani Contributing Authors: Douglas Williams Contributors: David Austin, Neha Avasthy, Prasad Bagal, Subhranshu Banerjee, Mark Bauer, Tammy Bednar, Eric Belden, Gavin Bowe, Robert Chang, Darcy Christensen, Kiran Chamala, Jonathan Creighton, Benoit Dageville, Sudip Datta, Jim Erickson, Marcus Fallen, Joseph Francis, Mark Fuller, Allan Graves, Barbara Glover, Asad Hasan, Thirumaleshwara Hasandka, Sagar Jadhav, Clara Jaeckel, Aneesh Khandelwal, Joel Kallman, Eugene Karichkin, Jai Krishnani, Sangeeth Kumar, Ranjith Kundapur, Kevin Jernigan, Christopher Jones, Simon Law, Bryn Llewellyn, Saar Maoz, Sreejith Minnanghat, Gopal Mulagund, Sue Lee, Rich Long, Barb Lundhild, Rolly Lv, Rudregowda Mallegowda, Padmanabhan Manavazhi, Mughees Minhas, Krishna Mohan, Matthew McKerley, John McHugh, Gurudas Pai, Satish Panchumarthy , Rajesh Prasad, Rajendra Pingte, Richard Roddy, Srinivas Poovala, Mohammed Shahnawaz Quadri, Hanlin Qian, Gurumurthy Ramamurthy, Hema Ramamurthy, Sunil Ravindrachar, Mark Richwine, Dipak Saggi, Trivikrama Samudrala, Shachi Sanklecha, David Schreiner, Ara Shakian, Mohit Singhal, Dharma Sirnapalli, Akshay Shah, James Spiller, Roy Swonger, Binoy Sukumaran, Kamal Tbeileh, Ravi Thammaiah, Shekhar Vaggu, Preethi Vallam, Ajesh Viswambharan, Peter Wahl, Terri Winters, Sergiusz Wolicki, Sivakumar Yarlagadda, Zakia Zerhouni This software and related documentation are provided under a license agreement containing restrictions on use and disclosure and are protected by intellectual property laws. 
Except as expressly permitted in your license agreement or allowed by law, you may not use, copy, reproduce, translate, broadcast, modify, license, transmit, distribute, exhibit, perform, publish, or display any part, in any form, or by any means. -

SAN Host Utilities

Notices About this information The following copyright statements and licenses apply to software components that are distributed with various versions of the NetApp® SteelStore Cloud Integrated Storage v3.2, 3.2.3, and 3.3 products. Your product does not necessarily use all the software components referred to below. Copyrights and licenses The following components are subject to the BSD licenses: ? curl-7.21.0: MIT/BSD license http://curl.haxx.se/legal/licmix.html License Mixing with apps, libcurl and Third Party Libraries =========================================================== libcurl can be built to use a fair amount of various third party libraries, libraries that are written and provided by other parties that are distributed using their own licenses. Even libcurl itself contains code that may cause problems to some. This document attempts to describe what licenses libcurl and the other libraries use and what possible dilemmas linking and mixing them all can lead to for end users. I am not a lawyer and this is not legal advice! One common dilemma is that GPL[1]-licensed code is not allowed to be linked with code licensed under the Original BSD license (with the announcement clause). You may still build your own copies that use them all, but distributing them as binaries would be to violate the GPL license - unless you accompany your license with an exception[2]. This particular problem was addressed when the Modified BSD license was created, which does not have the announcement clause that collides with GPL. libcurl http://curl.haxx.se/docs/copyright.html Uses an MIT (or Modified BSD)-style license that is as liberal as possible. -

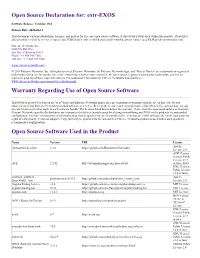

Extr-EXOS Warranty Regarding Use of Open Source Software Open

Open Source Declaration for: extr-EXOS Software Release: Versions: 30.6 Release Date: 2020-03-13 This document contains attributions, licenses, and notices for free and open source software (Collectively FOSS) used within this product. If you have any questions or wish to receive a copy of any FOSS source code to which you may be entitled, please contact us at [email protected]. Extreme Networks, Inc 6480 Via Del Oro San Jose, California 95119 Phone / +1 408.904.7002 Toll-free / + 1 888.257.3000 www.extremenetworks.com © 2019 Extreme Networks, Inc. All rights reserved. Extreme Networks, the Extreme Networks logo, and "Project Names" are trademarks or registered trademarks of Extreme Networks, Inc. in the United States and/or other countries. All other names, registered trademarks, trademarks, and service marks are property of their respective owners. For additional information on Extreme Networks trademarks, see www.extremenetworks.com/company/legal/trademarks Warranty Regarding Use of Open Source Software This FOSS is provided to you on an "as is" basis, and Extreme Networks makes no representations or warranties for the use of this code by you independent of any Extreme Networks provided software or services. Refer to the licenses and copyright notices listed below for each package for any specific license terms that apply to each software bundle. The licenses listed below define the warranty, if any, from the associated authors or licensors. Extreme Networks specifically disclaims any warranties for defects caused caused by altering or modifying any FOSS or the products' recommended configuration. You have no warranty or indemnification claims against Extreme Networks in the event that the FOSS infringes the intellectual property rights of a third party.