Photographic Noise Performance Measures Based on RAW Files Analysis of Consumer Cameras

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Laser Linewidth, Frequency Noise and Measurement

Laser Linewidth, Frequency Noise and Measurement WHITEPAPER | MARCH 2021 OPTICAL SENSING Yihong Chen, Hank Blauvelt EMCORE Corporation, Alhambra, CA, USA LASER LINEWIDTH AND FREQUENCY NOISE Frequency Noise Power Spectrum Density SPECTRUM DENSITY Frequency noise power spectrum density reveals detailed information about phase noise of a laser, which is the root Single Frequency Laser and Frequency (phase) cause of laser spectral broadening. In principle, laser line Noise shape can be constructed from frequency noise power Ideally, a single frequency laser operates at single spectrum density although in most cases it can only be frequency with zero linewidth. In a real world, however, a done numerically. Laser linewidth can be extracted. laser has a finite linewidth because of phase fluctuation, Correlation between laser line shape and which causes instantaneous frequency shifted away from frequency noise power spectrum density (ref the central frequency: δν(t) = (1/2π) dφ/dt. [1]) Linewidth Laser linewidth is an important parameter for characterizing the purity of wavelength (frequency) and coherence of a Graphic (Heading 4-Subhead Black) light source. Typically, laser linewidth is defined as Full Width at Half-Maximum (FWHM), or 3 dB bandwidth (SEE FIGURE 1) Direct optical spectrum measurements using a grating Equation (1) is difficult to calculate, but a based optical spectrum analyzer can only measure the simpler expression gives a good approximation laser line shape with resolution down to ~pm range, which (ref [2]) corresponds to GHz level. Indirect linewidth measurement An effective integrated linewidth ∆_ can be found by can be done through self-heterodyne/homodyne technique solving the equation: or measuring frequency noise using frequency discriminator. -

About Raspberry Pi HQ Camera Lenses Created by Dylan Herrada

All About Raspberry Pi HQ Camera Lenses Created by Dylan Herrada Last updated on 2020-10-19 07:56:39 PM EDT Overview In this guide, I'll explain the 3 main lens options for a Raspberry Pi HQ Camera. I do have a few years of experience as a video engineer and I also have a decent amount of experience using cameras with relatively small sensors (mainly mirrorless cinema cameras like the BMPCC) so I am very aware of a lot of the advantages and challenges associated. That being said, I am by no means an expert, so apologies in advance if I get anything wrong. Parts Discussed Raspberry Pi High Quality HQ Camera $50.00 IN STOCK Add To Cart © Adafruit Industries https://learn.adafruit.com/raspberry-pi-hq-camera-lenses Page 3 of 13 16mm 10MP Telephoto Lens for Raspberry Pi HQ Camera OUT OF STOCK Out Of Stock 6mm 3MP Wide Angle Lens for Raspberry Pi HQ Camera OUT OF STOCK Out Of Stock Raspberry Pi 3 - Model B+ - 1.4GHz Cortex-A53 with 1GB RAM $35.00 IN STOCK Add To Cart Raspberry Pi Zero WH (Zero W with Headers) $14.00 IN STOCK Add To Cart © Adafruit Industries https://learn.adafruit.com/raspberry-pi-hq-camera-lenses Page 4 of 13 © Adafruit Industries https://learn.adafruit.com/raspberry-pi-hq-camera-lenses Page 5 of 13 Crop Factor What is crop factor? According to Wikipedia (https://adafru.it/MF0): In digital photography, the crop factor, format factor, or focal length multiplier of an image sensor format is the ratio of the dimensions of a camera's imaging area compared to a reference format; most often, this term is applied to digital cameras, relative to 35 mm film format as a reference. -

Denver Cmc Photography Section Newsletter

MARCH 2018 DENVER CMC PHOTOGRAPHY SECTION NEWSLETTER Wednesday, March 14 CONNIE RUDD Photography with a Purpose 2018 Monthly Meetings Steering Committee 2nd Wednesday of the month, 7:00 p.m. Frank Burzynski CMC Liaison AMC, 710 10th St. #200, Golden, CO [email protected] $20 Annual Dues Jao van de Lagemaat Education Coordinator Meeting WEDNESDAY, March 14, 7:00 p.m. [email protected] March Meeting Janice Bennett Newsletter and Communication Join us Wednesday, March 14, Coordinator fom 7:00 to 9:00 p.m. for our meeting. [email protected] Ron Hileman CONNIE RUDD Hike and Event Coordinator [email protected] wil present Photography with a Purpose: Conservation Photography that not only Selma Kristel Presentation Coordinator inspires, but can also tip the balance in favor [email protected] of the protection of public lands. Alex Clymer Social Media Coordinator For our meeting on March 14, each member [email protected] may submit two images fom National Parks Mark Haugen anywhere in the country. Facilities Coordinator [email protected] Please submit images to Janice Bennett, CMC Photo Section Email [email protected] by Tuesday, March 13. [email protected] PAGE 1! DENVER CMC PHOTOGRAPHY SECTION MARCH 2018 JOIN US FOR OUR MEETING WEDNESDAY, March 14 Connie Rudd will present Photography with a Purpose: Conservation Photography that not only inspires, but can also tip the balance in favor of the protection of public lands. Please see the next page for more information about Connie Rudd. For our meeting on March 14, each member may submit two images from National Parks anywhere in the country. -

What Resolution Should Your Images Be?

What Resolution Should Your Images Be? The best way to determine the optimum resolution is to think about the final use of your images. For publication you’ll need the highest resolution, for desktop printing lower, and for web or classroom use, lower still. The following table is a general guide; detailed explanations follow. Use Pixel Size Resolution Preferred Approx. File File Format Size Projected in class About 1024 pixels wide 102 DPI JPEG 300–600 K for a horizontal image; or 768 pixels high for a vertical one Web site About 400–600 pixels 72 DPI JPEG 20–200 K wide for a large image; 100–200 for a thumbnail image Printed in a book Multiply intended print 300 DPI EPS or TIFF 6–10 MB or art magazine size by resolution; e.g. an image to be printed as 6” W x 4” H would be 1800 x 1200 pixels. Printed on a Multiply intended print 200 DPI EPS or TIFF 2-3 MB laserwriter size by resolution; e.g. an image to be printed as 6” W x 4” H would be 1200 x 800 pixels. Digital Camera Photos Digital cameras have a range of preset resolutions which vary from camera to camera. Designation Resolution Max. Image size at Printable size on 300 DPI a color printer 4 Megapixels 2272 x 1704 pixels 7.5” x 5.7” 12” x 9” 3 Megapixels 2048 x 1536 pixels 6.8” x 5” 11” x 8.5” 2 Megapixels 1600 x 1200 pixels 5.3” x 4” 6” x 4” 1 Megapixel 1024 x 768 pixels 3.5” x 2.5” 5” x 3 If you can, you generally want to shoot larger than you need, then sharpen the image and reduce its size in Photoshop. -

Sample Manuscript Showing Specifications and Style

Information capacity: a measure of potential image quality of a digital camera Frédéric Cao 1, Frédéric Guichard, Hervé Hornung DxO Labs, 3 rue Nationale, 92100 Boulogne Billancourt, FRANCE ABSTRACT The aim of the paper is to define an objective measurement for evaluating the performance of a digital camera. The challenge is to mix different flaws involving geometry (as distortion or lateral chromatic aberrations), light (as luminance and color shading), or statistical phenomena (as noise). We introduce the concept of information capacity that accounts for all the main defects than can be observed in digital images, and that can be due either to the optics or to the sensor. The information capacity describes the potential of the camera to produce good images. In particular, digital processing can correct some flaws (like distortion). Our definition of information takes possible correction into account and the fact that processing can neither retrieve lost information nor create some. This paper extends some of our previous work where the information capacity was only defined for RAW sensors. The concept is extended for cameras with optical defects as distortion, lateral and longitudinal chromatic aberration or lens shading. Keywords: digital photography, image quality evaluation, optical aberration, information capacity, camera performance database 1. INTRODUCTION The evaluation of a digital camera is a key factor for customers, whether they are vendors or final customers. It relies on many different factors as the presence or not of some functionalities, ergonomic, price, or image quality. Each separate criterion is itself quite complex to evaluate, and depends on many different factors. The case of image quality is a good illustration of this topic. -

Seeing Like Your Camera ○ My List of Specific Videos I Recommend for Homework I.E

Accessing Lynda.com ● Free to Mason community ● Set your browser to lynda.gmu.edu ○ Log-in using your Mason ID and Password ● Playlists Seeing Like Your Camera ○ My list of specific videos I recommend for homework i.e. pre- and post-session viewing.. PART 2 - FALL 2016 ○ Clicking on the name of the video segment will bring you immediately to Lynda.com (or the login window) Stan Schretter ○ I recommend that you eventually watch the entire video class, since we will only use small segments of each video class [email protected] 1 2 Ways To Take This Course What Creates a Photograph ● Each class will cover on one or two topics in detail ● Light ○ Lynda.com videos cover a lot more material ○ I will email the video playlist and the my charts before each class ● Camera ● My Scale of Value ○ Maximum Benefit: Review Videos Before Class & Attend Lectures ● Composition & Practice after Each Class ○ Less Benefit: Do not look at the Videos; Attend Lectures and ● Camera Setup Practice after Each Class ○ Some Benefit: Look at Videos; Don’t attend Lectures ● Post Processing 3 4 This Course - “The Shot” This Course - “The Shot” ● Camera Setup ○ Exposure ● Light ■ “Proper” Light on the Sensor ■ Depth of Field ■ Stop or Show the Action ● Camera ○ Focus ○ Getting the Color Right ● Composition ■ White Balance ● Composition ● Camera Setup ○ Key Photographic Element(s) ○ Moving The Eye Through The Frame ■ Negative Space ● Post Processing ○ Perspective ○ Story 5 6 Outline of This Class Class Topics PART 1 - Summer 2016 PART 2 - Fall 2016 ● Topic 1 ○ Review of Part 1 ● Increasing Your Vision ● Brief Review of Part 1 ○ Shutter Speed, Aperture, ISO ○ Shutter Speed ● Seeing The Light ○ Composition ○ Aperture ○ Color, dynamic range, ● Topic 2 ○ ISO and White Balance histograms, backlighting, etc. -

Foveon FO18-50-F19 4.5 MP X3 Direct Image Sensor

® Foveon FO18-50-F19 4.5 MP X3 Direct Image Sensor Features The Foveon FO18-50-F19 is a 1/1.8-inch CMOS direct image sensor that incorporates breakthrough Foveon X3 technology. Foveon X3 direct image sensors Foveon X3® Technology • A stack of three pixels captures superior color capture full-measured color images through a unique stacked pixel sensor design. fidelity by measuring full color at every point By capturing full-measured color images, the need for color interpolation and in the captured image. artifact-reducing blur filters is eliminated. The Foveon FO18-50-F19 features the • Images have improved sharpness and immunity to color artifacts (moiré). powerful VPS (Variable Pixel Size) capability. VPS provides the on-chip capabil- • Foveon X3 technology directly converts light ity of grouping neighboring pixels together to form larger pixels that are optimal of all colors into useful signal information at every point in the captured image—no light for high frame rate, reduced noise, or dual mode still/video applications. Other absorbing filters are used to block out light. advanced features include: low fixed pattern noise, ultra-low power consumption, Variable Pixel Size (VPS) Capability and integrated digital control. • Neighboring pixels can be grouped together on-chip to obtain the effect of a larger pixel. • Enables flexible video capture at a variety of resolutions. • Enables higher ISO mode at lower resolutions. Analog Biases Row Row Digital Supplies • Reduces noise by combining pixels. Pixel Array Analog Supplies Readout Reset 1440 columns x 1088 rows x 3 layers On-Chip A/D Conversion Control Control • Integrated 12-bit A/D converter running at up to 40 MHz. -

Time-Series Prediction of Environmental Noise for Urban Iot Based on Long Short-Term Memory Recurrent Neural Network

applied sciences Article Time-Series Prediction of Environmental Noise for Urban IoT Based on Long Short-Term Memory Recurrent Neural Network Xueqi Zhang 1,2 , Meng Zhao 1,2 and Rencai Dong 1,* 1 State Key Laboratory of Urban and Regional Ecology, Research Center for Eco-Environmental Sciences, Chinese Academy of Sciences, Beijing 100085, China; [email protected] (X.Z.); [email protected] (M.Z.) 2 College of Resources and Environment, University of Chinese Academy of Sciences, Beijing 100049, China * Correspondence: [email protected]; Tel.: +86-010-62849112 Received: 12 January 2020; Accepted: 6 February 2020; Published: 8 February 2020 Abstract: Noise pollution is one of the major urban environmental pollutions, and it is increasingly becoming a matter of crucial public concern. Monitoring and predicting environmental noise are of great significance for the prevention and control of noise pollution. With the advent of the Internet of Things (IoT) technology, urban noise monitoring is emerging in the direction of a small interval, long time, and large data amount, which is difficult to model and predict with traditional methods. In this study, an IoT-based noise monitoring system was deployed to acquire the environmental noise data, and a two-layer long short-term memory (LSTM) network was proposed for the prediction of environmental noise under the condition of large data volume. The optimal hyperparameters were selected through testing, and the raw data sets were processed. The urban environmental noise was predicted at time intervals of 1 s, 1 min, 10 min, and 30 min, and their performances were compared with three classic predictive models: random walk (RW), stacked autoencoder (SAE), and support vector machine (SVM). -

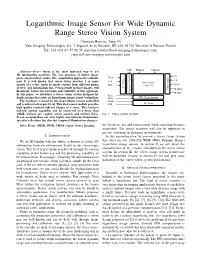

Logarithmic Image Sensor for Wide Dynamic Range Stereo Vision System

Logarithmic Image Sensor For Wide Dynamic Range Stereo Vision System Christian Bouvier, Yang Ni New Imaging Technologies SA, 1 Impasse de la Noisette, BP 426, 91370 Verrieres le Buisson France Tel: +33 (0)1 64 47 88 58 [email protected], [email protected] NEG PIXbias COLbias Abstract—Stereo vision is the most universal way to get 3D information passively. The fast progress of digital image G1 processing hardware makes this computation approach realizable Vsync G2 Vck now. It is well known that stereo vision matches 2 or more Control Amp Pixel Array - Scan Offset images of a scene, taken by image sensors from different points RST - 1280x720 of view. Any information loss, even partially in those images, will V Video Out1 drastically reduce the precision and reliability of this approach. Exposure Out2 In this paper, we introduce a stereo vision system designed for RD1 depth sensing that relies on logarithmic image sensor technology. RD2 FPN Compensation The hardware is based on dual logarithmic sensors controlled Hsync and synchronized at pixel level. This dual sensor module provides Hck H - Scan high quality contrast indexed images of a scene. This contrast indexed sensing capability can be conserved over more than 140dB without any explicit sensor control and without delay. Fig. 1. Sensor general structure. It can accommodate not only highly non-uniform illumination, specular reflections but also fast temporal illumination changes. Index Terms—HDR, WDR, CMOS sensor, Stereo Imaging. the details are lost and consequently depth extraction becomes impossible. The sensor reactivity will also be important to prevent saturation in changing environments. -

Fault Location in Power Distribution Systems Via Deep Graph

Fault Location in Power Distribution Systems via Deep Graph Convolutional Networks Kunjin Chen, Jun Hu, Member, IEEE, Yu Zhang, Member, IEEE, Zhanqing Yu, Member, IEEE, and Jinliang He, Fellow, IEEE Abstract—This paper develops a novel graph convolutional solving a set of nonlinear equations. To solve the multiple network (GCN) framework for fault location in power distri- estimation problem, it is proposed to use estimated fault bution networks. The proposed approach integrates multiple currents in all phases including the healthy phase to find the measurements at different buses while taking system topology into account. The effectiveness of the GCN model is corroborated faulty feeder and the location of the fault [2]. It is pointed by the IEEE 123 bus benchmark system. Simulation results show out in [15] that the accuracy of impedance-based methods can that the GCN model significantly outperforms other widely-used be affected by factors including fault type, unbalanced loads, machine learning schemes with very high fault location accuracy. heterogeneity of overhead lines, measurement errors, etc. In addition, the proposed approach is robust to measurement When a fault occurs in a distribution system, voltage drops noise and data loss errors. Data visualization results of two com- peting neural networks are presented to explore the mechanism can occur at all buses. The voltage drop characteristics for of GCNs superior performance. A data augmentation procedure the whole system vary with different fault locations. Thus, the is proposed to increase the robustness of the model under various voltage measurements on certain buses can be used to identify levels of noise and data loss errors. -

Single-Pixel Imaging Via Compressive Sampling

© DIGITAL VISION Single-Pixel Imaging via Compressive Sampling [Building simpler, smaller, and less-expensive digital cameras] Marco F. Duarte, umans are visual animals, and imaging sensors that extend our reach— [ cameras—have improved dramatically in recent times thanks to the intro- Mark A. Davenport, duction of CCD and CMOS digital technology. Consumer digital cameras in Dharmpal Takhar, the megapixel range are now ubiquitous thanks to the happy coincidence that the semiconductor material of choice for large-scale electronics inte- Jason N. Laska, Ting Sun, Hgration (silicon) also happens to readily convert photons at visual wavelengths into elec- Kevin F. Kelly, and trons. On the contrary, imaging at wavelengths where silicon is blind is considerably Richard G. Baraniuk more complicated, bulky, and expensive. Thus, for comparable resolution, a US$500 digi- ] tal camera for the visible becomes a US$50,000 camera for the infrared. In this article, we present a new approach to building simpler, smaller, and cheaper digital cameras that can operate efficiently across a much broader spectral range than conventional silicon-based cameras. Our approach fuses a new camera architecture Digital Object Identifier 10.1109/MSP.2007.914730 1053-5888/08/$25.00©2008IEEE IEEE SIGNAL PROCESSING MAGAZINE [83] MARCH 2008 based on a digital micromirror device (DMD—see “Spatial Light Our “single-pixel” CS camera architecture is basically an Modulators”) with the new mathematical theory and algorithms optical computer (comprising a DMD, two lenses, a single pho- of compressive sampling (CS—see “CS in a Nutshell”). ton detector, and an analog-to-digital (A/D) converter) that com- CS combines sampling and compression into a single non- putes random linear measurements of the scene under view. -

Receiver Dynamic Range: Part 2

The Communications Edge™ Tech-note Author: Robert E. Watson Receiver Dynamic Range: Part 2 Part 1 of this article reviews receiver mea- the receiver can process acceptably. In sim- NF is the receiver noise figure in dB surements which, taken as a group, describe plest terms, it is the difference in dB This dynamic range definition has the receiver dynamic range. Part 2 introduces between the inband 1-dB compression point advantage of being relatively easy to measure comprehensive measurements that attempt and the minimum-receivable signal level. without ambiguity but, unfortunately, it to characterize a receiver’s dynamic range as The compression point is obvious enough; assumes that the receiver has only a single a single number. however, the minimum-receivable signal signal at its input and that the signal is must be identified. desired. For deep-space receivers, this may be COMPREHENSIVE MEASURE- a reasonable assumption, but the terrestrial MENTS There are a number of candidates for mini- mum-receivable signal level, including: sphere is not usually so benign. For specifi- The following receiver measurements and “minimum-discernable signal” (MDS), tan- cation of general-purpose receivers, some specifications attempt to define overall gential sensitivity, 10-dB SNR, and receiver interfering signals must be assumed, and this receiver dynamic range as a single number noise floor. Both MDS and tangential sensi- is what the other definitions of receiver which can be used both to predict overall tivity are based on subjective judgments of dynamic range do. receiver performance and as a figure of merit signal strength, which differ significantly to compare competing receivers.