Independently on Smartphones Based on Accelerometer Data

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Manual Del Usuario Para Nokia N8-00

Manual del Usuario para Nokia N8-00 (User Guide for Nokia N8-00), Edition 3.1. Contents: Safety; Get started; Keys and parts; Change the volume of a call, song, or video; Lock or unlock the keys and screen; Insert or remove the SIM card; Insert or remove the memory card; Charging; Antenna locations; Attach the wrist strap; Headset; Switch the phone on or off; Use the phone for the first time; Copy contacts or photos from your old phone; Use the in-phone user guide; Install Nokia Suite on your computer; Basic use; Use the toolbar; Touch screen actions; Use shortcuts; Switch between open applications; Restore original settings; Personalization; Profiles; Change the theme; Home screen; Organize your applications; About Nokia Store; Download a game, application, or other item; Telephone; Call a phone number; Turn on the loudspeaker during a call; Search for a contact from the dialer; Set the phone to silent; Call a contact; Answer a waiting call; Make a video call; Answer a video call; Decline a video call; Make a conference call; Call the numbers you use most often; Use your voice to call a contact; Make calls over the internet; Call the last dialed number; Record a phone conversation; View missed calls; Call the mailbox of -

Nokia N8-00 Schematics

Service schematics RM-596. IMPORTANT: This document is intended for use by authorized Nokia service centers only. Please use the document together with other documents such as the Service Manual and Service Bulletins. While every effort has been made to ensure that the document is accurate, some errors may exist. Please always check for the latest published version for this document. If you find any errors in the document please send email to: [email protected] Measurements: All measurements were made using the following equipment: Nokia repair SW: Phoenix 2009.34.014.40684; Oscilloscope: PicoScope 3206; Spectrum Analyzer: Advantest R3162; Multimeter: Fluke 175. The measurement points in the schematics are linked to the oscillograms on the signal overview page. The links cycle between the measurement point in the schematics, the oscillogram and the measurement point in the component finder. Version information: 1.0 First approved version. 2.0 Second approved version. Contents: Front page; Imaging processor, Camera; RAPS, Imaging processor, Display IF, Touch IF, HDMI; RAPS, Mass memory, Combo memory, Sensors, LEDs, UI keys; GAZOO, Battery, HS USB, SIM, Power key; GAZOO, Audio, AV connector, MMC; BTH FM, WLAN, GPS; RF part; Signal overview top; Component finder top; Component finder bottom. This material, including documentation and any related computer programs, is protected by copyright, controlled by Nokia. All rights are reserved. Copying, including reproducing, modifying, storing, adapting or translating any or all of this material requires the prior written consent of Nokia. This material also contains company confidential information, which may not be disclosed to others without the prior written consent of Nokia. -

Nokia N8–00 User Guide

Nokia N8–00 User Guide, Issue 2.1. Contents: Safety; Get started; Keys and parts; Change the volume of a call, song, or video; Lock or unlock the keys and screen; Insert or remove the SIM card; Insert or remove the memory card; Charging; Antenna locations; Attach the wrist strap; Headset; Switch the device on or off; Use your device for the first time; Copy contacts or pictures from your old device; Device set-up; Use the in-device user guide; Install Nokia Ovi Suite on your PC; Basic use; Touch screen actions; Interactive home screen elements; Switch between open applications; Write text; Display indicators; Set the notification light to blink for missed calls or messages; Search in your device and on the internet; Organise your applications; Download a game, application, or other item; Telephone; Call a phone number; Search for a contact; Call a contact; Make a video call; Make a conference call; Use speed dial; Use your voice to call a contact; Make calls over the internet; Call the last dialled number; Record a phone conversation; Turn to silence; View your missed calls; Call your voice mailbox; Divert calls to your voice mailbox or another phone number; Prevent making or receiving calls; Allow calls only to certain numbers; Video sharing; Contacts; About Contacts; Save phone numbers and mail addresses; Save a number from a received call or message; Contact your most important people quickly; Set a ringing tone for a specific contact -

Nokia 808 Pureview 41 Megapixel Camera with Carl Zeiss Lens

Sep 22, 2012 14:50 IST Nokia 808 PureView 41 megapixel Camera with Carl Zeiss lens The all-new Nokia 808 PureView is a game-changing phone in the world of smartphones. This handset has a 4-inch ClearBlack AMOLED display. It feels quite similar to the Nokia 5800, with a hint of the Nokia Lumia 610, although those phones are smaller and have flat backs. In India the Nokia 808 PureView is priced at Rs. 30,499. Call quality on this phone is free of issues. Compared with the iPhone 4S, the Nokia 808 PureView is not small: it measures 123.9 mm x 60.2 mm x 13.9 mm, thickening to 17.95 mm where the camera bulges out. This new Nokia phone arrives with a 41 MP camera, although the Nokia N8 was said to have the finest camera in the Nokia range. At 169 grams, the Nokia 808 PureView is a bit heavy. It is the heaviest of the smartphones available on the market. The phone dialer has a smart dial system. There is also a soft button at the bottom of the phone that gives easy access to contacts and call logs. Messaging on the Nokia 808 PureView is trouble-free. -

Mobile OS: Analysis of Customers’ Values

Mobile OS: analysis of customers’ values Georgii Shamugiia Bachelor's Thesis Business Administration 2020 Mobile OS 01.11.2020 Author(s) Georgii Shamugiia Degree programme Bachelor of Business Administration SAMPO18 Report/thesis title Number of pages Mobile OS: analysis of customer's values and appendix pages 14 + 47 The rapid development of the mobile industry since the start of new millennium led to much more extensive usage of mobile devices than desktop computers. Fast-developing technologies of wireless networking led to the excessive need of a device, which can search any info on the web conveniently, be a decent communicational tool and be able to adapt to different needs of customers. The device which fully fulfils this need is a smartphone, which is being widely used today by the majority of the global population. In this thesis, the author digests the tools, which allows smartphones to work appropriately, give consumers a pleasuring experience while using them and run all the operations and data stored on them. These tools are mobile operating systems – platforms, which allow all those things and even more. In this research, the author investigates the historical development of mobile operating systems, which put a mark in the history of the industry. By digesting three cases of Nokia, Blackberry and Microsoft, the author explains what were the selling points, that succeeded and managed to popularise each mobile operating system globally among consumers and gadget manufacturers and what were the reasons that caused their global downfall. A separate chapter of this research is dedicated to a survey regarding customer values and customer opinions about mobile devices and mobile operating systems. -

Palm OS Cobalt 6.1 in February 2004 6.1 in February Cobalt Palm OS Release: Last 11.2 Ios Release: Latest

Lecture 11: Market Overview of Mobile Operating Systems and Security Aspects. Mobile Business I (WS 2017/18), Prof. Dr. Kai Rannenberg, Deutsche Telekom Chair of Mobile Business & Multilateral Security, Johann Wolfgang Goethe University Frankfurt a. M. Overview: The market for mobile devices and mobile OS; Mobile OS unavailable to other device manufacturers; Overview; Palm OS; Apple iOS (Unix-based); Manufacturer-independent mobile OS; Overview; Symbian platform (by Symbian Foundation); Embedded Linux; Android (by Open Handset Alliance); Microsoft Windows CE, Pocket PC, Pocket PC Phone Edition, Mobile; Microsoft Windows Phone 10; Firefox OS; Attacks and security features of selected mobile OS. [Figure: Worldwide smartphone sales to end users by operating system (2009 - 2017), quarterly from Q1 '09 to Q2 '17, share axis 0% to 100%; series: Android, iOS, Microsoft, RIM, Bada, Symbian, Others] [Statista2017a] Worldwide smartphone sales to end users by operating system (Q2 2013): Android 79.0%, iOS 14.2%, Windows 3.3%, BlackBerry OS 2.7%, Bada 0.4%, Symbian 0.3%, Others 0.2% [Gartner2013]. Worldwide smartphone sales to end users by operating system (Q2 2014): Android 84.7%, iOS 11.7%, Windows 2.5%, Others 0.6%, BlackBerry OS 0.5% -

Download Viber Software for Nokia C5-03

1 / 2 Download Viber Software For Nokia C5-03 Whats App messenger at nokia N9 open sourceFor Download :- http://openwhatsapp.org .... Follow the procedure to download Viber app for Nokia with Android OS support. ... These include Nokia 5228, Nokia 5233, Nokia C5-03, Nokia E7, Nokia 5800, .... 311 or 501, download viber app for nokia x2 01 nokia c3 and nokia asha 200 ... For Nokia C5 00.2 - DOWNLOAD (Mirror #1) Zero Hour A Royal Massacre Man 3 .... Free Nokia C5-03 WhatsApp Messenger Software Download - It can be seen as ... nokia c5 03的相关搜索, viber free download for nokia, viber for nokia c5 03, .... Download Viber Voice Call Download In Nokiac5 - best software for Windows. Viber: Viber for ... Nokia C5-00 RM-645_Gr.RUS_sw-071.005, версия. 2,998. 2 .... Results 1 - 20 of 142 — Do you want to download viber for nokia 5233 mobile9 for free? ... Apps Free Download Pdf reader mobile nokia c2 03 Whatsapp - mobile9 .... If you have necessity to download and install appendix Viber immediately on phone Nokia C5-03, follow the link under this message and then downloading .... Mar 22, 2021 — Find out if your phone supports whatsapp. ... Just head over Blackberry World and download the App. ... Nokia S40 and Nokia S60 ... on the ... nokia c5 03 software applications apps free download, nokia c503 user guide nds1 webapps microsoft com, viber for nokia c5 03 on nokia ovi ecliphecil,.. Viber for Nokia Lumia 710 2018 – Free download Viber For Nokia Symbian ... Nokia C5-03 software applications free download thousand of java apps program.. 3 days ago — You can then turn off notifications or uninstall the app. -

Nokia N8–00 Bedienungsanleitung

Nokia N8–00 Bedienungsanleitung (User Guide), Issue 2.1. Contents: Safety; Get started; Keys and parts; Change the volume of a call, song, or video; Lock or unlock the keys and screen; Insert or remove the SIM card; Insert or remove the memory card; Charging the battery; Antenna locations; Attach the wrist strap; Headset; Switch the device on or off; Use your device for the first time; Copy contacts and pictures from your old device; Device set-up; Use the in-device user guide; Install Nokia Ovi Suite on your PC; Basic use; Touch screen actions; Interactive home screen elements; Switch between open ...; Search in your device and on the internet; Use your device offline; Prolong battery life; Personalization; Profiles; Change your theme; Home screen; Organize your applications; Download a game, application, or other item; Telephone; Make a call; Search for a contact; Call a contact; Video call; Conference call; Use speed dial; Call a contact by voice command; Make calls over the internet; Call the last dialed number; Record a phone conversation; Mute; View missed calls; Call your voice mailbox; Divert calls to your voice mailbox or another number; Block making or -

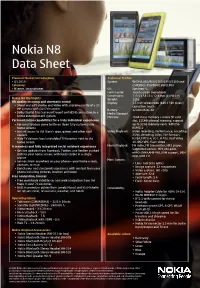

Nokia N8 Data Sheet

Nokia N8 Data Sheet
Planned Market Introduction:
• Q3 2010
Category:
• NSeries, Smartphones
Nokia N8 Highlights:
HD quality imaging and cinematic sound
• Shoot and edit photos and video with supreme clarity of a 12 MP camera with Carl Zeiss optics
• Dolby Digital Plus Surround Sound and HDMI connection to a home entertainment system
Personalization capabilities for a truly individual experience
• Personal touches come to life on three fully customizable home screens
• Instant access to Ovi Store’s apps, games and other cool content
• Web TV delivers local and global TV favorites right to the home screen
A seamless and fully integrated social network experience
• Get live updates from Facebook, Twitter, and RenRen pushed right to your home screen, with feeds visible at a single glance
• Connect from anywhere on your phone - your home -
Technical Profile:
System: WCDMA 850/900/1700/1900/2100 and GSM/EDGE 850/900/1800/1900
OS: Symbian^3
Form Factor: Touch screen monoblock
Dimensions: 113.5 x 59.12 x 12.9 mm (L x W x H)
Weight: 135g
Display: 3.5 inch widescreen (640 x 360 pixels) capacitive touch
Battery: 1200mAh BL-4D
Media Storage/Memory: 16GB mass memory + micro SD card slot, 135MB internal memory, support up to 32GB memory cards. RAM256 ROM512
Video Playback: Video recording, Performance: encoding 25fps, decoding 30fps, File Formats: H.264, MPEG-4, VC-1, H.263, Real Video 10, ON2 VP6, Flash video
Music Playback: FM radio, FM transmitter, MP3 player, Supported codecs: MP3, AAC, eAAC, eAAC+, WMA, AMR-WB, DRM support, DRM: OMA DRM 2.0
Main Camera: -

Symbian 'Vulnerability' and Mobile Threats

Symbian ‘vulnerability’ and Mobile Threats Wajeb Gharibi Head of Computer Engineering & Networks Department, Computer Science & Information Systems College, Jazan University, Jazan 82822-6694, Kingdom of Saudi Arabia [email protected] Abstract Modern technologies are becoming ever more integrated with each other. Mobile phones are becoming increasingly intelligent, and handsets are growing ever more like computers in functionality. We are entering a new era - the age of smart houses, global advanced networks which encompass a wide range of devices, all of them exchanging data with each other. Such trends clearly open new horizons to malicious users, and the potential threats are self-evident. In this paper, we study and discuss one of the most famous mobile operating systems, "Symbian", its vulnerabilities and recommended protection technologies. Keywords: Information Security, Cyber Threats, Mobile Threats, Symbian Operating System. 1. Introduction [...] some possible protection techniques. Conclusions have been made in Section 5. 2. Symbian Vulnerabilities The term 'vulnerability' is often mentioned in connection with computer security, in many different contexts. It is associated with some violation of a security policy. This may be due to weak security rules, or it may be that there is a problem within the software itself. In theory, all types of computer/mobile systems have vulnerabilities [1-5]. Symbian OS was originally developed by Symbian Ltd. [4]. It was designed for smartphones and is currently maintained by Nokia. The Symbian platform is the successor to Symbian OS and Nokia Series 60; unlike Symbian OS, which needed an additional user interface system, Symbian includes a user interface component based on S60 5th Edition. -

Nokia N8 Specials Get Great Digital Camera Cell Phone

Mar 23, 2011 10:55 IST Nokia N8 Specials Get Great Digital Camera Cell Phone When it comes to functionality, Nokia mobiles are perfect examples. Known for its functionality and striking looks, the brand has added a new model to its N series: the high-performing Nokia N8. It is one of those exclusive mobiles that are power-packed performers. In sync with modern lifestyles, the Nokia N8 is priced for budget-conscious mobile users. The phone has turned out to be a sensation in the mobile industry and has impressed even upper-class mobile users with its display and swift performance. The Nokia N8 was launched by the brand in March 2010. Since then, it has been in the spotlight for its eye-catching looks and its performance. It features a 3.5-inch capacitive AMOLED display on which images and text are easy to read. It supports 2G and 3G networks, letting users work on the internet with high data transfer rates. The phone also carries a stunning camera, thanks to its 12 MP resolution and Carl Zeiss lens with Xenon flash. This pick from the Nokia mobile price list also offers auto-focus, a high-tech sensor, smile detection and geo-tagging. If you want more than a calling experience, you will likely be impressed by its proximity sensor, Wi-Fi, Bluetooth connectivity, EDGE, GPRS and accelerometer sensor. -
