Celis Washington 0250E 10366.Pdf (969.5Kb)

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Notices of the American Mathematical Society

ISSN 0002-9920. Notices of the American Mathematical Society, February 2006, Volume 53, Number 2. Math Circles and Olympiads: MSRI Asks: Is the U.S. Coming of Age? (page 200). A System of Axioms of Set Theory for the Rationalists (page 206). Durham Meeting (page 299). San Francisco Meeting (page 302). ICM Madrid 2006 (see page 213). [Advertisement screenshot: steps for making an animated tube plot (Plot 3D Animated + Tube).] Scientific Word version 5.5: Scientific Word offers the same features as Scientific WorkPlace, without the computer algebra system. INTERNATIONAL MATHEMATICS RESEARCH PAPERS, http://www.hindawi.com/journals/imrp/. IMRP provides very fast publication of lengthy research articles of high current interest in all areas of mathematics. All articles are fully refereed and are judged by their contribution to the advancement of the state of the science of mathematics. Issues are published as frequently as necessary. Each issue will contain only one article. IMRP is expected to publish 400± pages in 2006. Articles of at least 50 pages are welcome and all articles are refereed and judged for correctness, interest, originality, depth, and applicability. Submissions are made by e-mail to [email protected]. Editors: Morris Weisfeld (Editor-in-Chief), Enrico Arbarello, Joseph Bernstein, Enrico Bombieri, Richard E. Borcherds, Alexei Borodin, Jean Bourgain, Marc Burger, James W. Cogdell, Tobias Colding, Corrado De Concini, Percy Deift, Robbert Dijkgraaf, S. K. Donaldson, Yakov Eliashberg, Edward Frenkel, Emmanuel Hebey, Dennis Hejhal -

Paul Milgrom Wins the BBVA Foundation Frontiers of Knowledge Award for His Contributions to Auction Theory and Industrial Organization

Economics, Finance and Management is the seventh category to be decided. Paul Milgrom wins the BBVA Foundation Frontiers of Knowledge Award for his contributions to auction theory and industrial organization. The jury singled out Milgrom’s work on auction design, which has been taken up with great success by governments and corporations. He has also contributed novel insights in industrial organization, with applications in pricing and advertising. Madrid, February 19, 2013. The BBVA Foundation Frontiers of Knowledge Award in the Economics, Finance and Management category goes in this fifth edition to U.S. mathematician Paul Milgrom “for his seminal contributions to an unusually wide range of fields of economics including auctions, market design, contracts and incentives, industrial economics, economics of organizations, finance, and game theory,” in the words of the prize jury. This breadth of vision encompasses a business focus that has led him to apply his theories in advisory work with governments and corporations. Milgrom (Detroit, 1942), a professor of economics at Stanford University, was nominated for the award by Zvika Neeman, Head of The Eitan Berglas School of Economics at Tel Aviv University. “His work on auction theory is probably his best known,” the citation continues. “He has explored issues of design, bidding and outcomes for auctions with different rules. He designed auctions for multiple complementary items, with an eye towards practical applications such as frequency spectrum auctions.” Milgrom made the leap from game theory to the realities of the market in the mid 1990s. He was doing consultancy work for Pacific Bell in California to plan its participation in an auction called by the U.S. -

Fact-Checking Glen Weyl's and Stefano Feltri's

The Market Design Community and the Broadcast Incentive Auction: Fact-Checking Glen Weyl’s and Stefano Feltri’s False Claims. By Paul Milgrom*, June 3, 2020. [Figure: TV Station Interference Constraints in the US and Canada] In a recent Twitter rant and a pair of subsequent articles in Promarket, Glen Weyl [1] and Stefano Feltri [2] invent a conspiratorial narrative according to which the academic market design community is secretive and corrupt, my own actions benefitted my former business associates and the hedge funds they advised in the 2017 broadcast incentive auction, and the result was that far too little TV spectrum was reassigned for broadband at far too little value for taxpayers. The facts bear out none of these allegations. In fact, there were: • No secrets: all of Auctionomics’ communications are on the public record, • No benefits for hedge funds: the funds vigorously opposed Auctionomics’ proposals, which reduced their auction profits, • No spectrum shortfalls: the number of TV channels reassigned was unaffected by the hedge funds’ bidding, and • No taxpayer losses: the money value created for the public by the broadband spectrum auction was more than one hundred times larger than the alleged revenue shortfall. * Paul Milgrom, the co-founder and Chairman of Auctionomics, is the Shirley and Leonard Ely Professor of Economics at Stanford University. According to his 2020 Distinguished Fellow citation from the American Economic Association, Milgrom “is the world’s leading auction designer, having helped design many of the auctions for radio spectrum conducted around the world in the last thirty years.” [1] “It Is Such a Small World: The Market-Design Academic Community Evolved in a Business Network.” Stefano Feltri, Promarket, May 28, 2020. -

Strength in Numbers: the Rising of Academic Statistics Departments In

Alan Agresti · Xiao-Li Meng, Editors. Strength in Numbers: The Rising of Academic Statistics Departments in the U.S. Editors: Alan Agresti, Department of Statistics, University of Florida, Gainesville, FL, USA; Xiao-Li Meng, Department of Statistics, Harvard University, Cambridge, MA, USA. ISBN 978-1-4614-3648-5; ISBN 978-1-4614-3649-2 (eBook); DOI 10.1007/978-1-4614-3649-2. Springer New York Heidelberg Dordrecht London. Library of Congress Control Number: 2012942702. © Springer Science+Business Media New York 2013. This work is subject to copyright. All rights are reserved by the Publisher, whether the whole or part of the material is concerned, specifically the rights of translation, reprinting, reuse of illustrations, recitation, broadcasting, reproduction on microfilms or in any other physical way, and transmission or information storage and retrieval, electronic adaptation, computer software, or by similar or dissimilar methodology now known or hereafter developed. Exempted from this legal reservation are brief excerpts in connection with reviews or scholarly analysis or material supplied specifically for the purpose of being entered and executed on a computer system, for exclusive use by the purchaser of the work. Duplication of this publication or parts thereof is permitted only under the provisions of the Copyright Law of the Publisher’s location, in its current version, and permission for use must always be obtained from Springer. -

Putting Auction Theory to Work

Putting Auction Theory to Work Paul Milgrom With a Foreword by Evan Kwerel © 2003 “In Paul Milgrom's hands, auction theory has become the great culmination of game theory and economics of information. Here elegant mathematics meets practical applications and yields deep insights into the general theory of markets. Milgrom's book will be the definitive reference in auction theory for decades to come.” —Roger Myerson, W.C.Norby Professor of Economics, University of Chicago “Market design is one of the most exciting developments in contemporary economics and game theory, and who can resist a master class from one of the giants of the field?” —Alvin Roth, George Gund Professor of Economics and Business, Harvard University “Paul Milgrom has had an enormous influence on the most important recent application of auction theory for the same reason you will want to read this book – clarity of thought and expression.” —Evan Kwerel, Federal Communications Commission, from the Foreword For Robert Wilson Foreword to Putting Auction Theory to Work Paul Milgrom has had an enormous influence on the most important recent application of auction theory for the same reason you will want to read this book – clarity of thought and expression. In August 1993, President Clinton signed legislation granting the Federal Communications Commission the authority to auction spectrum licenses and requiring it to begin the first auction within a year. With no prior auction experience and a tight deadline, the normal bureaucratic behavior would have been to adopt a “tried and true” auction design. But in 1993 there was no tried and true method appropriate for the circumstances – multiple licenses with potentially highly interdependent values. -

Prizes and Awards Session

PRIZES AND AWARDS SESSION. Wednesday, July 12, 2021, 9:00 AM EDT. 2021 SIAM Annual Meeting, July 19 – 23, 2021, Held in Virtual Format. Table of Contents: AWM-SIAM Sonia Kovalevsky Lecture; George B. Dantzig Prize; George Pólya Prize for Mathematical Exposition; George Pólya Prize in Applied Combinatorics; I.E. Block Community Lecture; John von Neumann Prize; Lagrange Prize in Continuous Optimization; Ralph E. Kleinman Prize; SIAM Prize for Distinguished Service to the Profession; SIAM Student Paper Prizes -

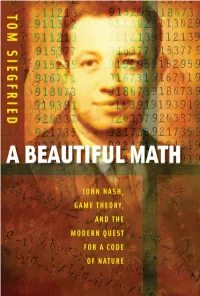

A Beautiful Math : John Nash, Game Theory, and the Modern Quest for a Code of Nature / Tom Siegfried

A BEAUTIFUL MATH: JOHN NASH, GAME THEORY, AND THE MODERN QUEST FOR A CODE OF NATURE. TOM SIEGFRIED. JOSEPH HENRY PRESS, Washington, D.C. Joseph Henry Press • 500 Fifth Street, NW • Washington, DC 20001. The Joseph Henry Press, an imprint of the National Academies Press, was created with the goal of making books on science, technology, and health more widely available to professionals and the public. Joseph Henry was one of the founders of the National Academy of Sciences and a leader in early American science. Any opinions, findings, conclusions, or recommendations expressed in this volume are those of the author and do not necessarily reflect the views of the National Academy of Sciences or its affiliated institutions. Library of Congress Cataloging-in-Publication Data: Siegfried, Tom, 1950- A beautiful math : John Nash, game theory, and the modern quest for a code of nature / Tom Siegfried. — 1st ed. p. cm. Includes bibliographical references and index. ISBN 0-309-10192-1 (hardback) — ISBN 0-309-65928-0 (pdfs) 1. Game theory. I. Title. QA269.S574 2006 519.3—dc22 2006012394. Copyright 2006 by Tom Siegfried. All rights reserved. Printed in the United States of America. Preface: Shortly after 9/11, a Russian scientist named Dmitri Gusev proposed an explanation for the origin of the name Al Qaeda. He suggested that the terrorist organization took its name from Isaac Asimov’s famous 1950s science fiction novels known as the Foundation Trilogy. After all, he reasoned, the Arabic word “qaeda” means something like “base” or “foundation.” And the first novel in Asimov’s trilogy, Foundation, apparently was titled “al-Qaida” in an Arabic translation. -

NBER WORKING PAPER SERIES COMPLEMENTARITIES and COLLUSION in an FCC SPECTRUM AUCTION Patrick Bajari Jeremy T. Fox Working Paper

NBER WORKING PAPER SERIES COMPLEMENTARITIES AND COLLUSION IN AN FCC SPECTRUM AUCTION Patrick Bajari Jeremy T. Fox Working Paper 11671 http://www.nber.org/papers/w11671 NATIONAL BUREAU OF ECONOMIC RESEARCH 1050 Massachusetts Avenue Cambridge, MA 02138 September 2005 Bajari thanks the National Science Foundation, grants SES-0112106 and SES-0122747 for financial support. Fox would like to thank the NET Institute for financial support. Thanks to helpful comments from Lawrence Ausubel, Timothy Conley, Nicholas Economides, Philippe Fevrier, Ali Hortacsu, Paul Milgrom, Robert Porter, Gregory Rosston and Andrew Sweeting. Thanks to Todd Schuble for help with GIS software, to Peter Cramton for sharing his data on license characteristics, and to Chad Syverson for sharing data on airline travel. Excellent research assistance has been provided by Luis Andres, Stephanie Houghton, Dionysios Kaltis, Ali Manning, Denis Nekipelov and Wai-Ping Chim. Our email addresses are [email protected] and [email protected]. The views expressed herein are those of the author(s) and do not necessarily reflect the views of the National Bureau of Economic Research. ©2005 by Patrick Bajari and Jeremy T. Fox. All rights reserved. Short sections of text, not to exceed two paragraphs, may be quoted without explicit permission provided that full credit, including © notice, is given to the source. Complementarities and Collusion in an FCC Spectrum Auction Patrick Bajari and Jeremy T. Fox NBER Working Paper No. 11671 September 2005 JEL No. L0, L5, C1 ABSTRACT We empirically study bidding in the C Block of the US mobile phone spectrum auctions. Spectrum auctions are conducted using a simultaneous ascending auction design that allows bidders to assemble packages of licenses with geographic complementarities. -

The Folk Theorem in Repeated Games with Discounting Or with Incomplete Information

The Folk Theorem in Repeated Games with Discounting or with Incomplete Information Drew Fudenberg; Eric Maskin Econometrica, Vol. 54, No. 3. (May, 1986), pp. 533-554. Stable URL: http://links.jstor.org/sici?sici=0012-9682%28198605%2954%3A3%3C533%3ATFTIRG%3E2.0.CO%3B2-U Econometrica is currently published by The Econometric Society. Your use of the JSTOR archive indicates your acceptance of JSTOR's Terms and Conditions of Use, available at http://www.jstor.org/about/terms.html. JSTOR's Terms and Conditions of Use provides, in part, that unless you have obtained prior permission, you may not download an entire issue of a journal or multiple copies of articles, and you may use content in the JSTOR archive only for your personal, non-commercial use. Please contact the publisher regarding any further use of this work. Publisher contact information may be obtained at http://www.jstor.org/journals/econosoc.html. Each copy of any part of a JSTOR transmission must contain the same copyright notice that appears on the screen or printed page of such transmission. The JSTOR Archive is a trusted digital repository providing for long-term preservation and access to leading academic journals and scholarly literature from around the world. The Archive is supported by libraries, scholarly societies, publishers, and foundations. It is an initiative of JSTOR, a not-for-profit organization with a mission to help the scholarly community take advantage of advances in technology. For more information regarding JSTOR, please contact [email protected]. http://www.jstor.org Tue Jul 24 13:17:33 2007 Econometrica, Vol. -

Brief 2007.Pdf

Brief (1-10-2007) Read Brief on the Web at http://www.umn.edu/umnnews/Publications/Brief/Brief_01102007.html . Vol. XXXVII No. 1; Jan. 10, 2007 Editor: Gayla Marty, [email protected] INSIDE THIS ISSUE --Regents approved UMTC stadium design and revised cost Jan. 3. --CAPA communications survey is now open: P&A staff invited to participate. --Founding director of the new campuswide UMTC honors program is Serge Rudaz. --People: VP for university relations Karen Himle began Jan. 9, and more. Campus Announcements and Events University-wide | Crookston | Duluth | Morris | Rochester | Twin Cities THE BOARD OF REGENTS APPROVED A TCF BANK STADIUM DESIGN for UMTC in a special session Jan. 3. The 50,000-seat stadium will be a blend of brick, stone, and glass in a traditional collegiate horseshoe shape, open to the downtown Minneapolis skyline, with the potential to expand to 80,000 seats. A revised cost of $288.5 million was also approved--an addition of $39.8 million to be financed without added expense to taxpayers, students, or the U's academic mission. Read more at http://www.umn.edu/umnnews/Feature_Stories/Regents_approve_stadium_design.html . COUNCIL OF ACADEMIC PROFESSIONALS AND ADMINISTRATORS (CAPA): The 2006-07 CAPA communications survey is the central way for CAPA to improve communications with U academic professional and administrative (P&A) staff statewide. Committee chair John Borchert urges P&A staff to participate. Read more and link to the survey at http://www.umn.edu/umnnews/Faculty_Staff_Comm/Council_of_Academic_Professionals_and_Administrators/Survey_begins.html . FOUNDING DIRECTOR OF THE NEW UMTC HONORS PROGRAM is Serge Rudaz, professor and director of undergraduate studies, School of Physics and Astronomy. -

Jennifer Tour Chayes March 2016 CURRICULUM VITAE Office

Jennifer Tour Chayes March 2016 CURRICULUM VITAE Office Address: Microsoft Research New England 1 Memorial Drive Cambridge, MA 02142 e-mail: [email protected] Personal: Born September 20, 1956, New York, New York Education: 1979 B.A., Physics and Biology, Wesleyan University 1983 Ph.D., Mathematical Physics, Princeton University Positions: 1983–1985 Postdoctoral Fellow in Mathematical Physics, Departments of Mathematics and Physics, Harvard University 1985–1987 Postdoctoral Fellow, Laboratory of Atomic and Solid State Physics and Mathematical Sciences Institute, Cornell University 1987–1990 Associate Professor, Department of Mathematics, UCLA 1990–2001 Professor, Department of Mathematics, UCLA 1997–2005 Senior Researcher and Head, Theory Group, Microsoft Research 1997–2008 Affiliate Professor, Dept. of Physics, U. Washington 1999–2008 Affiliate Professor, Dept. of Mathematics, U. Washington 2005–2008 Principal Researcher and Research Area Manager for Mathematics, Theoretical Computer Science and Cryptography, Microsoft Research 2008– Managing Director, Microsoft Research New England 2010– Distinguished Scientist, Microsoft Corporation 2012– Managing Director, Microsoft Research New York City Long-Term Visiting Positions: 1994–95, 1997 Member, Institute for Advanced Study, Princeton 1995, May–July ETH, Zürich 1996, Sept.–Dec. AT&T Research, New Jersey Awards and Honors: 1977 Johnston Prize in Physics, Wesleyan University 1979 Graham Prize in Natural Sciences & Mathematics, Wesleyan University 1979 Graduated 1st in Class, Summa Cum Laude, -

Approximation of Large Dynamic Games

Approximation of Large Dynamic Games Aaron L. Bodoh-Creed - Cornell University ∗ February 8, 2012 Abstract We provide a framework for simplifying the analysis and estimation of dynamic games with many agents by using nonatomic limit game approximations. We show that the equilibria of a nonatomic approximation are epsilon-Bayesian-Nash Equilibria of the dynamic game with many players if the limit game is continuous. We also provide conditions under which the Bayesian-Nash equilibrium strategy correspondence of a large dynamic game is upper hemicontinuous in the number of agents. We use our results to show that repeated static Nash equilibria are the only equilibria of continuous repeated games of imperfect public or private monitoring in the limit as N approaches infinity. Extensions include: games with large players in the limit as N approaches infinity; games with (discontinuous) entry and exit decisions; Markov perfect equilibria of complete information stochastic games with aggregate shocks; and games with private and/or imperfect monitoring. Finally we provide an application of our framework to the analysis of large dynamic auction platforms such as E-Bay using the nonatomic limit model of Satterthwaite and Shneyerov [33]. 1 Introduction Our study provides a novel framework for employing dynamic nonatomic games to generate insights about game-theoretic models with a large, but finite, set of players. A number of ∗I would like to thank Ilya Segal, Paul Milgrom, Doug Bernheim, Jon Levin, C. Lanier Benkard, Eric Budish, Matthew Jackson, Ramesh Johari, Ichiro Obara, Philip Reny, Gabriel Weintraub, Robert Wilson, Marcello Miccoli, Juuso Toikka, Alex Hirsch, Carlos Lever, Ned Augenblick, Isabelle Sin, John Lazarev, and Bumin Yenmez for invaluable discussions regarding this topic.