Spying with Your Robot Vacuum Cleaner: Eavesdropping Via Lidar Sensors

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Cyber Threats to Mobile Phones Paul Ruggiero and Jon Foote

Cyber Threats to Mobile Phones Paul Ruggiero and Jon Foote Mobile Threats Are Increasing Smartphones, or mobile phones with advanced capabilities like those of personal computers (PCs), are appearing in more people’s pockets, purses, and briefcases. Smartphones’ popularity and relatively lax security have made them attractive targets for attackers. According to a report published earlier this year, smartphones recently outsold PCs for the first time, and attackers have been exploiting this expanding market by using old techniques along with new ones.1 One example is this year’s Valentine’s Day attack, in which attackers distributed a mobile picture- sharing application that secretly sent premium-rate text messages from the user’s mobile phone. One study found that, from 2009 to 2010, the number of new vulnerabilities in mobile operating systems jumped 42 percent.2 The number and sophistication of attacks on mobile phones is increasing, and countermeasures are slow to catch up. Smartphones and personal digital assistants (PDAs) give users mobile access to email, the internet, GPS navigation, and many other applications. However, smartphone security has not kept pace with traditional computer security. Technical security measures, such as firewalls, antivirus, and encryption, are uncommon on mobile phones, and mobile phone operating systems are not updated as frequently as those on personal computers.3 Mobile social networking applications sometimes lack the detailed privacy controls of their PC counterparts. Unfortunately, many smartphone users do not recognize these security shortcomings. Many users fail to enable the security software that comes with their phones, and they believe that surfing the internet on their phones is as safe as or safer than surfing on their computers.4 Meanwhile, mobile phones are becoming more and more valuable as targets for attack. -

Whitepaper Optimizing Cleaning Efficiency of Robotic Vacuum Cleaner

OPTIMIZING CLEANING EFFICIENCY OF ROBOTIC VACUUM CLEANER RAMJI JAYARAM Lead Design Engineer, Tata Elxs i RASHMI RAMESH DANDGE Senior Software Engineer, Tata Elxs i TABLE OF CONTENTS ABSTRACT…………………………………………………………………………………………………………………………………………… 3 Introduction ……………………………………………………………………………………………. 4 Reducing human intervention due to wedging and entanglement…..………………………………… 5 Improved cleaning performance over different surfaces………………………………………………… 12 Conclusion ………………………………………………………………………………………………. 16 About Tata Elxsi ……………………………………………………………………………………….. 13 2 [email protected] Optimizing cleaning efficiency of robotic vacuum cleaner 1.0 ABSTRACT An essential household chore is floor cleaning, which is often considered unpleasant, difficult, and dull. This led to the development of vacuum cleaners that could assist us with such a task. Modern appliances are delivering convenience and reducing time spent on house chores. While vacuum cleaners have made home cleaning manageable, they are mostly noisy and bulky for everyday use. Modern robotic vacuums deliver consistent performance and enhanced cleaning features, but continue to struggle with uneven terrain and navigation challenges. This whitepaper will take a more in-depth look at the problem faced by the robotic vacuum cleaner (RVC) in avoiding obstacles and entanglement and how we can arrive at a solution depending on the functionalities. 3 [email protected] Optimizing cleaning efficiency of robotic vacuum cleaner 2.0 INTRODUCTION The earliest robotic vacuums did one thing passably well. They danced randomly over a smooth or flat surface, sucking up some dirt and debris until their battery charge got low and they had to return to their docks. They could more or less clean a space without the ability to understand it. Today’s robotic cleaners have made huge strides over the past few years. -

Vacuum Cleaner User Manual

Vacuum Cleaner User Manual EN VCO 42701 AB 01M-8839253200-1717-01 Please read this user manual first! Dear Valued Customer, Thank you for selecting this Beko appliance. We hope that you get the best results from your appliance which has been manufactured with high quality and state-of-the-art technology. Therefore, please read this entire user manual and all other accompanying documents carefully before using the appliance and keep it as a reference for future use. If you handover the appliance to someone else, give the user manual as well. Follow the instructions by paying attention to all the information and warnings in the user manual. Remember that this user manual may also apply to other models. Differences between models are explicitly described in the manual. Meanings of the Symbols Following symbols are used in various sections of this manual: Important information and useful C hints about usage. WARNING: Warnings against A dangerous situations concerning the security of life and property. Protection class for electric shock. This product has been produced in environmentally friendly modern facilities. This product does not contain PCB’s CONTENTS 1 Important safety and environmental instructions 4-5 1.1 General safety . 4 1.2 Compliance with the WEEE Directive and Disposing of the Waste Product. 5 1.3 Compliance with RoHS Directive . 5 1.4 Package information . 5 1.5 Plug Wiring . 6 2 Your vacuum cleaner 7 2.1 Overview . .7 2.2 Technical data. .7 3 Usage 8-10 3.1 Intended use . 8 3.2 Attaching/removing the hose. 8 3.3 Attaching/removing the telescopic tube. -

High End Auction - MODESTO - December 4

09/24/21 11:08:27 High End Auction - MODESTO - December 4 Auction Opens: Fri, Nov 27 10:48am PT Auction Closes: Fri, Dec 4 12:00pm PT Lot Title Lot Title MX9000 Klipsch Audio Technologies MX9034 Ceiling Fan MX9001 EEKOTO Tripod MX9035 Donner Ukulele MX9002 Azeus Air Purifier MX9036 Self-Balancing Scooter MX9003 Electric Self-Balancing Scooter MX9037 Shark Navigator Lift-Away Vacuum MX9004 Shark Genius Stem Pocket Mop System MX9038 LG Ultrawide Curved Monitor 38" MX9005 Fully Automatic Belt-Drive Turntable MX9039 LG Ultra Gear Gaming Monitor 38" MX9006 EEKOTO Tripod MX9040 Hoover Powerdash Pet Carpet Cleaner MX9007 Pusn Hyper Photography & Utility Solution MX9041 Comfyer Cyclone Vacuum MX9008 Orbit Brass Impact Sprinkler on Tripod Base MX9042 Stylish Monitor w/ Eye-Care Technology LED MX9009 Orbit Brass Impact Sprinkler on Tripod Base Backlight Monitor MX9010 Mendini by Cecilio Violin MX9043 Musetex 903 Computer Case MX9011 Orbit Brass Impact Sprinkler on Tripod Base MX9044 Bissell Pet Hair Eraser MX9012 Orbit Brass Impact Sprinkler on Tripod Base MX9045 2.1 CH Sound Bar MX9013 2.0CH Soundbar MX9046 Inflatable Movie Projector Screen MX9014 2.0CH Soundbar MX9047 Air Purifier MX9015 Gaming Accessories MX9048 Keyboard Stand MX9016 Item See Picture MX9049 Keyboard Stand MX9017 Robotic Pool Cleaner MX9050 Portable Indoor Kerosene Heater MX9018 iRobot Roomba Robot Vacuum MX9051 XXL Touch Bin Trash Can MX9019 Robotic Vacuum Cleaner MX9052 Mr. Heater Propane Heater MX9020 Toaster Oven MX9053 Mr. Heater Propane Heater MX9021 Shiatsu Foot Massager -

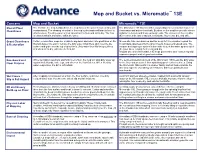

Mop Bucket Vs Micromatic

™ Mop and Bucket vs. Micromatic 13E Concern Mop and Bucket Micromatic™ 13E Overall Floor The first time the mop is dipped into the mop bucket, the water becomes dirty and The Micromatic 13E floor scrubber always dispenses a solution mixture of Cleanliness contaminated. The cleaning chemical in the mop bucket water will start to lose its clean water and active chemicals. Brushes on the scrubber provide intense effectiveness. The dirty water is then spread on the floor and left to dry. The floor agitation to loosen and break up tough soils. The vacuum on the scrubber is left wet with dirt and grime still in the water. then removes the water and dirt, leaving the floor clean, dry, and safe. Grout Cleanliness Cotton or microfiber mops are unable to dig down and reach into grout lines on tile Micromatic 13E uses brushes and the weight of the machine to push the & Restoration floors to loosen the soil or remove the dirty water. Mop fibers skim over the tile brush bristle tips deep into the grout lines to loosen embedded soils. The surface and glide over the top of grout lines. Dirty water then fills the grout lines vacuum and squeegee system is then able to suck the water up and out of and when left to dry, will leave behind dirt. the grout lines, leaving them clean and dry. Regular use of the Micromatic 13E helps prevent the time consuming and expensive project work of grout restoration. Baseboard and While swinging mops back and forth over a floor, the mop will sling dirty water up The semi-enclosed scrub deck of the Micromatic 13E keeps the dirty water Floor Fixtures against baseboards, table legs, and other on the floor fixtures. -

Vacuum Cleaner User Manual

Vacuum Cleaner User Manual Model No.: FVC18M13 *Before operating the unit, please read this manual thoroughly and retain it for future reference. 1 CONTENT PAGE SAFETY INSTRUCTION 3 PARTS DESCRIPTION 4 OPERATION INSTRUCTION 5 MAINTENANCE INSTRUCTION 6 TROUBLE SHOOTING 7 SPECIFICATION 7 WARRANTY 8 2 SAFETY INSTRUCTION 1. To avoid electric shock, fire or injury, please read the user manual carefully before using the appliance and keep it for future reference. 2. This appliance is for household use only. Please use suitable power source (220-240V~ 50/60Hz). 3. Never immerse the appliance in water or other liquids. 4. Do not operate the appliance with wet hands. 5. Do not use the appliance under direct sunshine, outdoor or on wet surfaces. 6. Please turn off the appliance when not in use otherwise it may result in danger. 7. Please turn off the appliance and unplug the socket when unattended otherwise it may result in danger. 8. Keep the appliance away from children. 9. The appliance is not intended for used by children or persons with reduced physical, sensory or mental capabilities, or lack of experience and knowledge, unless they have been given supervision or instruction concerning use of the appliance by a person responsible for their safety. 10. Children should be supervised to ensure that they do not play with the appliance. 11. With any indication of malfunction (including power cord), please stop using the appliance immediately to avoid hazard. Take it to the authorized service center for repair. Do not attempt to repair or change any parts by yourself. -

Download Catalog

Electric Appliances Product Catalogue for EUROPE Product catalogue 2019_Electric Appliances_Europe.indd 1 23/8/2019 15:15:22 Our Promise For more than a century, has consistently provided innovative, reliable, high-quality products and customer service. It’s a combination of groundbreaking technology and rock-solid dependability that’s made us one of the world’s most trusted brands. From outdoor portable generators that provide power for your home, work and play moments, to high-definition TVs that are setting new standards for performance, we’re constantly developing advanced products, rigorously testing them to make sure they work time after time, day after day. When you see the , you know you’re getting a product packed with features that make your life easier, while still being easy to use. A product that has all the latest thinking, while providing years of value. Innovation You Can Be Sure Of. From a company that always puts you first. 2 Product catalogue 2019_Electric Appliances_Europe.indd 2 23/8/2019 15:15:27 Content Heritage Time Line P.4 Museum P.6 Cooking Series Retro Series P.13 Gold Series P.16 Transform Series P.19 Culinaire Series P.20 Wooden Series P.23 Healthy Cooking Series P.24 Mini Series P.27 Fun Series P.30 Pro Series P.32 Essential Line Breakfast P.37 Blending and Juicing P.41 Mixing and Food Processing P.44 Cooking P.46 Vacuum Cleaning P.51 Home Environment P.53 3 Product catalogue 2019_Electric Appliances_Europe.indd 3 23/8/2019 15:15:31 130 years 1846 1865 1869 1869 1871 1873 1881 1886 1888 Invention and Innovation -

Design and Implementation of Low Cost Vacuum Cleaner

International Journal of Engineering Technology Science and Research IJETSR www.ijetsr.com ISSN 2394 – 3386 Volume 4, Issue 10 October 2017 Design and Implementation of Low Cost Vacuum Cleaner Sushmita Deb, Sanjay Kumar K, Sushma T.P., Nadashree V.S., Sahana.T SJM Institute of Technology, Chitradurga, Karnataka, India ABSTRACT The goal is to build an electric vacuum cleaner using a 12V battery. We concluded that 12V battery is enough to build an electric vacuum rather than using battery of higher Volts .So that 12V battery works effectively and easily. The machine functions even better by using low volts machines. Materials used to build the vacuum cleaner are:2litre plastic bottle, 12V dc motor, switch, m-seal, one water bottle cap, an empty deodorant can and an empty Otrivin plastic bottle. KEY WORDS: Vacuum cleaner, Plastic bottles, Deodorant cans, Meshes, Flexible pipes INTRODUCTION A vacuum cleaner is a device that uses an air pump to create a partial vacuum to suck up dust and dirt, usually from floors, and from other surfaces such as upholstery and draperies. The dirt is collected by either a dust bag or a cyclone for later disposal. Vacuum cleaners, which are used in homes as well as in industry, exist in a variety of sizes and models small battery-powered hand-held devices, domestic central vacuum cleaner, huge stationary industrial appliances that can handle several hundred litres of dust before being emptied, and self-propelled vacuum trucks for recovery of large spills or removal of contaminated soil. Specialized shop vacuums can be used to suck up both dust and liquids. -

Image Steganography Applications for Secure Communication

IMAGE STEGANOGRAPHY APPLICATIONS FOR SECURE COMMUNICATION by Tayana Morkel Submitted in partial fulfillment of the requirements for the degree Master of Science (Computer Science) in the Faculty of Engineering, Built Environment and Information Technology University of Pretoria, Pretoria May 2012 © University of Pretoria Image Steganography Applications for Secure Communication by Tayana Morkel E-mail: [email protected] Abstract To securely communicate information between parties or locations is not an easy task considering the possible attacks or unintentional changes that can occur during communication. Encryption is often used to protect secret information from unauthorised access. Encryption, however, is not inconspicuous and the observable exchange of encrypted information between two parties can provide a potential attacker with information on the sender and receiver(s). The presence of encrypted information can also entice a potential attacker to launch an attack on the secure communication. This dissertation investigates and discusses the use of image steganography, a technology for hiding information in other information, to facilitate secure communication. Secure communication is divided into three categories: self-communication, one-to-one communication and one-to-many communication, depending on the number of receivers. In this dissertation, applications that make use of image steganography are implemented for each of the secure communication categories. For self-communication, image steganography is used to hide one-time passwords (OTPs) in images that are stored on a mobile device. For one-to-one communication, a decryptor program that forms part of an encryption protocol is embedded in an image using image steganography and for one-to-many communication, a secret message is divided into pieces and different pieces are embedded in different images. -

Robotic Vacuum Cleaner Design to Mitigate Slip Errors in Warehouses

Robotic Vacuum Cleaner Design to Mitigate Slip Errors in Warehouses By Benjamin Fritz Schilling Bachelor of Science in Mechanical Engineering New Mexico Institute of Mining and Technology, New Mexico, 2016 Submitted to the Department of Mechanical Engineering in partial fulfillment of the requirements for the degree of Master of Engineering in Advanced Manufacturing and Design at the MASSACHUSETTS INSTITUTE OF TECHNOLOGY September 2017 © 2017 Benjamin Fritz Schilling. All rights reserved. The author hereby grants to MIT permission to reproduce and to distribute publicly paper and electronic copies of this thesis document in whole or in part in any medium now known or hereafter created. Signature of Author: Department of Mechanical Engineering August 11, 2017 Certified by: Maria Yang Associate Professor of Mechanical Engineering Thesis Supervisor Accepted by: Rohan Abeyaratne Quentin Berg Professor of Mechanics & Chairman, Committee for Graduate Students 1 (This page is intentionally left black) 2 Robotic Vacuum Cleaner Design to Mitigate Slip Errors in Warehouses By Benjamin Fritz Schilling Submitted to the Department of Mechanical Engineering on August 11, 2017 in partial fulfillment of the requirements for the degree of Master of Engineering in Advanced Manufacturing and Design Abstract Warehouses are extremely dusty environments due to the concrete and cardboard dust generated. This is problematic in automated warehouses that use robots to move items from one location to another. If the robot slips, it can collide with other robots or lose track of where it is located. Currently, to reduce the amount of dust on the floor, warehouses use industrial scrubbers that users walk behind or ride. This requires manual labor and a regular scheduled maintenance plan that needs to be followed to mitigate the dust accumulation. -

I-Mop XL Parts Manual

i-mop XL® SCRUBBER Parts Manual (S/N 300000- ) Model Part No.: 1231845 - Machine w/Battery & Charger, Blue Brushes 1235203 - Machine w/Battery & Charger, Red Brushes 9016402 - Machine w/Extra Battery & Charger, Blue Brushes 1232547 - Battery Charger North America / International 9016490 For the latest Parts manuals and other Rev. 06 (01-2020) language Operator manuals, visit: www.tennantco.com/manuals *9016490* B A Ref Part No. Serial Number Description Qty. 1 82681 (000000- ) Bracket Wldt, Lpg Mount 1 o 2 63810 (000000- ) Latch Assy, Lpg Tank Mtg, W/Nut 4 Y 3 51839 (000000- ) Nut Adjustable, Lpg Tank Mtg 2 4 49263 (000000- ) Tie, Cable 3 5 82556 (000000- 001039) Bracket, Vaporizer 1 D6 54930 (000000- ) Vaporizer, LPG 1 C HOW TO ORDER PARTS - See diagram above Only use TENNANT Company supplied or equivalent parts. Parts and supplies may be ordered online, by phone, by fax or by mail. Follow the steps below to ensure prompt delivery. 1. (A) Identify the machine model. Please fill out at time of installation for 2. (B) Identify the machine serial number from the data label. future reference. 3. (C) Ensure the proper serial number is used from Model No. - the parts list. 4. Identify the part number and quantity. Serial No. - Do not order by page or reference numbers. Machine Options - 5. Provide your name, company name, customer ID number, billing and shipping address, phone number and Sales Rep. - purchase order number. 6. Provide detail shipping instructions. Sales Rep. phone no. - Customer ID Number - (D) - identifies an assembly Installation Date - Y - identifies parts included in assembly Tennant Company PO Box 1452 Minneapolis, MN 55440 USA Phone: (800) 553- 8033 www.tennantco.com Specifications and parts are subject to change without notice. -

Espionage Against the United States by American Citizens 1947-2001

Technical Report 02-5 July 2002 Espionage Against the United States by American Citizens 1947-2001 Katherine L. Herbig Martin F. Wiskoff TRW Systems Released by James A. Riedel Director Defense Personnel Security Research Center 99 Pacific Street, Building 455-E Monterey, CA 93940-2497 REPORT DOCUMENTATION PAGE Form Approved OMB No. 0704-0188 The public reporting burden for this collection of information is estimated to average 1 hour per response, including the time for reviewing instructions, searching existing data sources, gathering and maintaining the data needed, and completing and reviewing the collection of information. Send comments regarding this burden estimate or any other aspect of this collection of information, including suggestions for reducing the burden, to Department of Defense, Washington Headquarters Services, Directorate for Information Operations and Reports (0704- 0188), 1215 Jefferson Davis Highway, Suite 1204, Arlington, VA 22202-4302. Respondents should be aware that notwithstanding any other provision of law, no person shall be subject to any penalty for failing to comply with a collection of information if it does not display a currently valid OMB control number. PLEASE DO NOT RETURN YOUR FORM TO THE ABOVE ADDRESS. 1. REPORT DATE (DDMMYYYY) 2. REPORT TYPE 3. DATES COVERED (From – To) July 2002 Technical 1947 - 2001 4. TITLE AND SUBTITLE 5a. CONTRACT NUMBER 5b. GRANT NUMBER Espionage Against the United States by American Citizens 1947-2001 5c. PROGRAM ELEMENT NUMBER 6. AUTHOR(S) 5d. PROJECT NUMBER Katherine L. Herbig, Ph.D. Martin F. Wiskoff, Ph.D. 5e. TASK NUMBER 5f. WORK UNIT NUMBER 7. PERFORMING ORGANIZATION NAME(S) AND ADDRESS(ES) 8.