3D-LaneNet: End-to-End 3D Multiple Lane Detection

Recommended publications

Jacob Boehme's Theosophical Vision of Islam

Kom, 2016, vol. V (1) : 1–20 UDC: 14 Беме Ј. 28-1:141.332 DOI: 10.5937/kom1601001P Original scientific paper A Wild Tree toward the North – Jacob Boehme’s Theosophical Vision of Islam Roland Pietsch Ukrainian Free University, Munich, Germany Jacob Boehme, who was given by his friends the respectful title “Philosophus Teutonicus”, is one of the greatest theosophers and mystics at the beginning of the seventeenth century, whose influence extends to the present day. He was born in 1575 in the village Alt-Seidenberg near Görlitz, in a Protestant family of peasant background. Boehme spent most of his life in Görlitz, as a member of the Cobbler’s Guild. His first mystical experience was in 1600, when he contemplated the Byss and the Abyss. Published in 1612, “Aurora: the Day-Spring (Morgenröte im Aufgang)” was Boehme’s first attempt to describe his great theosophical vision. It immediately incurred the public condemnation of Görlitz’s Protestant Church. He was forbidden to write further. Boehme kept silent for six years and then published “A Description of the Three Principles of the Divine Essence (Beschreibung der drei Prinzipien göttlichen Wesens)” in 1619 and many other works. A large commentary on Genesis, “Mysterium Magnum”, came out in 1623, followed by “The Way to Christ (Der Weg zu Christo)” in 1624. In the same year Jacob Boehme died on November 20th in Görlitz. According to his own self-conception, Boehme’s doctrine of divine wisdom (Theo-Sophia) is a divine science which was revealed to him in its entirety (see Pietsch: 1999, 205-228).

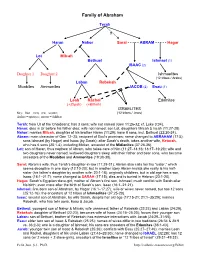

Family of Abraham

Family of Abraham Terah ? Haran Nahor Sarai - - - - - ABRAM - - - - - Hagar Lot Milcah Bethuel Ishmael (1) ISAAC (2) Daughter 1 Daughter 2 Ishmaelites (12 tribes / Arabs) Laban Rebekah Moabites Ammonites JACOB (2) Esau (1) Leah Rachel Edomites (+Zilpah) (+Bilhah) ISRAELITES Key: blue = men; red = women; (12 tribes / Jews) dashes = spouses; arrows = children Terah: from Ur of the Chaldeans; has 3 sons; wife not named (Gen 11:26-32; cf. Luke 3:34). Haran: dies in Ur before his father dies; wife not named; son Lot, daughters Milcah & Iscah (11:27-28). Nahor: marries Milcah, daughter of his brother Haran (11:29); have 8 sons, incl. Bethuel (22:20-24). Abram: main character of Gen 12–25; recipient of God’s promises; name changed to ABRAHAM (17:5); sons Ishmael (by Hagar) and Isaac (by Sarah); after Sarah’s death, takes another wife, Keturah, who has 6 sons (25:1-4), including Midian, ancestor of the Midianites (37:28-36). Lot: son of Haran, thus nephew of Abram, who takes care of him (11:27–14:16; 18:17–19:29); wife and two daughters never named; widowed daughters sleep with their father and bear sons, who become ancestors of the Moabites and Ammonites (19:30-38). Sarai: Abram’s wife, thus Terah’s daughter-in-law (11:29-31); Abram also calls her his “sister,” which seems deceptive in one story (12:10-20); but in another story Abram insists she really is his half- sister (his father’s daughter by another wife; 20:1-18); originally childless, but in old age has a son, Isaac (16:1–21:7); name changed to SARAH (17:15); dies and is buried in Hebron (23:1-20). -

D'var Torah Pekudei the Torah Portion Pekudei Is the Final Parsha

D’Var Torah Pekudei The Torah portion Pekudei is the final parsha of the Book of Shemot (Exodus), and in Hebrew means accounting. It is the last in a series of 5 parshiot (Torah sections) describing the Mishkan, the portable sanctuary built by our ancestors by divine command to serve as “a dwelling place for G-D in this physical world”. Pekudei contains three elements: a) an audit of the gold, silver and copper used in the Mishkan’s construction, b) the making of the priestly garments and c) the erection and consecration of the Mishkan. Before I continue, however, I want to pose a question that I also hope to answer before I complete this sermon. In the beginning of Bereshit (Genesis), the Torah devotes 31 verses to describe how G-D created the entire world. In striking contrast, the Torah portions of this and the last 4 weeks devote 371 verses to describe how the Jews created the tabernacle in the desert. This seems profoundly strange and begs the question, “Why?” More on this later. Bezalel from the tribe of Jehudah and Oholiav from the tribe of Dan were the divinely chosen architects of the Mishkan and priestly garments. In chapter 39, verse 43 we are told, “And Moses saw all of the work, and behold they had done it; as the Lord commanded, even so they had done it. And Moses blessed them.” Moshe expressed his thanks by invoking a blessing upon them. The time had been short, the task great and arduous; but the laborers were fired up by holy enthusiasm and zeal and had joyfully completed the work they had undertaken.

Parshat Shemot 21 Tevet 5779 Dec 28-29, 2018 Shaul Robinson Josh Rosenfeld Sherwood Goffin Yanky Lemmer Tamar Fix Alan Samuels

Parshat Shemot 21 Tevet 5779 Dec 28-29, 2018 Shaul Robinson Josh Rosenfeld Sherwood Goffin Yanky Lemmer Tamar Fix Alan Samuels ECHOD Senior Rabbi Assistant Rabbi Founding Chazzan Cantor Executive Director President SHABBAT SCHEDULE THANK YOU TO OUR SPONSORS 4:18pm Shabbat Candle Lighting Hashkama Kiddush: Sponsored anonymously. Friday Night Main Kiddush: Please join us for a fun, dairy Kiddush courtesy of LSS! 4:20pm Mincha followed by Kabbalat Shabbat in the Nathaniel Richman Cohen Sanctuary. Rabbi Herschel Cohen Memorial Minyan Kiddush: Dvar Torah given by Rabbi Shaul Robinson. Mordechai Beilis, Elana, Natalie and Michele Zvulon, in commemoration of the yahrtzeit of their parents and grandparents, Mazala Aviva bat Shabbat Morning Menachem v’Esther a”h (Aviva) and Aharon Simcha ben Yitzchak v’Klura 7:45am Hashkama Minyan in the Belfer Beit Midrash followed by a z”l (Aron) whose yahrtzeits are on the 26th of Tevet. shiur given by Rabbi Moshe Sokolow 8:30am Parsha Shiur given by Rabbinic Intern, Zac Schwartz Martine and Jack Schenker in commemoration of the 23rd yahrtzeit of 9:00am Services in the Nathaniel Richman Cohen Sanctuary. Martine’s mother, Sabine Krenik a”h, a survivor of the Shoah. Drasha given by Rabbi Shaul Robinson followed by Musaf. Beginners Kiddush: Helene Miller, Devorah Haller, and Megan Messina 9:15am Beginners Service led by Rabbi Ephraim Buchwald in on behalf of the Beginners group in honor of Rabbi Ephraim Buchwald’s Rm LL201 birthday. 9:37am Latest Shema 9:45am Rabbi Herschel Cohen Memorial Minyan in the Belfer -

Mayweekendministryschedule.Pdf

May 6/7 Ministry Schedule–4th Sunday of Easter Ministry 4:30 p.m. Mass 8:00 a.m. Mass 9:30 a.m. Mass 11:15 a.m.Mass Greeters Bonnie Fischer Elaine Brendel Nicole Bichler Dan & Rose Mayo Jan Wolf Sr Anna Rose Ruhland Eva Wentz Ushers Myron Senechal Dan Jacob Michael Bichler Jerry Heilman Tim Fischer Chester Schwab Chad Nein Paul & Diane Vetter Duane Wolf David Fleckenstein Jesse Folk Lectors Val Jundt Claude Wilmes Robin Nein Student Deb Ryckman Cindy Schwab Trisha James Student Altar Servers Ian Funk Austin Link Elizabeth Bichler Dominic Fleck Abby Funk Grace Link Jack Woeste Joshua Fleck Eucharistic Dc. Wayne Jundt Dc Wayne Jundt Dc Jerry Volk Dc. Jerry Volk Ministers Sean Solberg * Barry Hansen Ann Schreiber Deb Wanner Karen Grosz * Fred Urlacher Pius Fischer Derek Schmit Loretta Seeger * Wayne Link Lynn Fischer Cindy Hersch Clean Vessels Allan Brossart * Dan Reinbold Mark Van Hout John Bachmeier Clean Vessels Bonnie Fischer Mary Meyer Selena Van Hout Tracy Bachmeier *Homebound Musicians Marlys Wanner Carol Wilmes Sara Schuster Sara Schuster Sara Schuster Don Willey TBA Kate Weinand May 13/14 Ministry Schedule-5th Sunday of Easter Ministry 4:30 p.m. Mass 8:00 a.m. Mass 9:30 a.m. Mass 11:15 a.m. Mass Greeters Yvonne Marchand Harold & Barb Neameyer Bob & Peggy Brunelle Moylan Family Marlene Kraft Ushers Pat & Betty Heidrich Ike Carpenter Dennis Wanner Jim & Diane Glatt Frank Keller John Finneman Joe Mathern Kent Keller Blaine Erhardt Paul Waletzko Lectors Tricia Schlosser John Bachmeier Devin Cunningham Brad Moylan Ramona Ehli Deb Luptak -

Twelve Sons of Jacob / Twelve Tribes of Israel

Twelve Sons of Jacob / Twelve Tribes of Israel In the Hebrew Bible (Old Testament), the Israelites are described as descendants of the twelve sons of Jacob (whose name was changed to Israel in Gen 32:28), the son of Isaac, the son of Abraham. The phrase “Twelve Tribes of Israel” (or simply “Twelve Tribes”) sometimes occurs in the Bible (OT & NT) without any individual names being listed (Gen 49:28; Exod 24:4; 28:21; 39:14; Ezek 47:13; Matt 19:28; Luke 22:30; Acts 26:7; and Rev 21:12; cf. also “Twelve Tribes of the Dispersion” in James 1:1). More frequently, however, the names are explicitly mentioned. The Bible contains two dozen listings of the twelve sons of Jacob and/or tribes of Israel. Some of these are in very brief lists, while others are spread out over several paragraphs or chapters that discuss the distribution of the land or name certain representatives of each tribe, one after another. Surprisingly, however, each and every listing is slightly different from all the others, either in the order of the names mentioned or even in the specific names used (e.g., the two sons of Joseph are sometimes listed along with or instead of their father; and sometimes one or more names is omitted for various reasons). A few of the texts actually have more than 12 names! Upon closer analysis, one can discover several principles for the ordering and various reasons for the omission or substitution of some of the names, as explained in the notes below the following tables. -

Parshat Shemot No 1657: 23 Tevet 5777 (January 21, 2017)

Parshat Shemot No 1657: 23 Tevet 5777 (January 21, 2017) WANT TO BECOME A MEMBER Membership: $50.00 CLICK HERE TO JOIN OR DONATE TO THE RZA Pillar Membership: $180.00 We are in the process of collecting membership dues for 2017. Please show your support and join as a member or renew your membership at this time. Religious Zionists of America 305 Seventh Avenue, 12th Floor, New York, NY 10001 [email protected], www.rza.org Dear Friend of Religious Zionism, One of the initiatives we are planning, in anticipation of the 50th anniversary of the reunification of Jerusalem, is an “Honor Roll” to be signed by the leadership of congregations and schools across the country. 1) Please have your leadership inform us if they want to be included on our Honor Roll. (We will include the names of all participating institutions in the media). 2) Please share this Honor Roll with institutions in your community and encourage participation. 3) Please arrange to hang this Honor Roll in the lobbies of your Shuls and Schools. Click here to print out a copy of the poster OR kindly email us to let us know if you’d like us to mail you a hard copy flyer or poster. Rabbi Gideon Shloush Religious Zionists of America - Mizrachi [email protected] In The Spotlight We are pleased to announce a new initiative: Each week, we will (translate and) feature a d’var Torah shared by a Rav who teaches at a Dati Leumi Hesder Yeshiva in Israel. Our goal is – until we get there ourselves – to bring Torat Yisrael closer to America.

The Rise of Muslim Foreign Fighters: Islam and the Globalization of Jihad

The Rise of Muslim Foreign Fighters: Islam and the Globalization of Jihad Thomas Hegghammer A salient feature of armed conflict in the Muslim world since 1980 is the involvement of so-called foreign fighters, that is, unpaid combatants with no apparent link to the conflict other than religious affinity with the Muslim side. Since 1980 between 10,000 and 30,000 such fighters have inserted themselves into conflicts from Bosnia in the west to the Philippines in the east. Foreign fighters matter because they can affect the conflicts they join, as they did in post-2003 Iraq by promoting sectarian violence and indiscriminate tactics.1 Perhaps more important, foreign fighter mobilizations empower transnational terrorist groups such as al-Qaida, because volunteering for war is the principal stepping-stone for individual involvement in more extreme forms of militancy. For example, when Muslims in the West radicalize, they usually do not plot attacks in their home country right away, but travel to a war zone such as Iraq or Afghanistan first. Indeed, a majority of al-Qaida operatives began their militant careers as war volunteers, and most transnational jihadi groups today are by-products of foreign fighter mobilizations.2 Foreign fighters are therefore key to understanding transnational Islamist militancy. Why did the Muslim foreign fighter phenomenon emerge when it did? Nowadays the presence of foreign fighters is almost taken for granted as a corollary of conflict in the Muslim world. Long-distance foreign fighter mobiliza- Thomas Hegghammer is Senior Research Fellow at the Norwegian Defence Research Establishment in Oslo and Nonresident Fellow at New York University’s Center on Law and Security.

The Critical Concept of Normal Personality in Islam

Al-Risalah: Jurnal Studi Agama dan Pemikiran Islam | Vol. 12 | No. 1 | 2021 The Critical Concept of Normal Personality in Islam P-ISSN: 2085-5818 | E-ISSN: 2686-2107 https://uia.e-journal.id/alrisalah/article/1265 DOI: https://doi.org/10.34005/alrisalah.v12i2.1265 Manuscript submitted: 24-02-2021 Manuscript reviewed: 26-02-2021 Manuscript published: 02-03-2021 Abdul Hadi As-Syafiiyah Islamic University, Jakarta, Indonesia [email protected] Badrah Uyuni As-Syafiiyah Islamic University, Jakarta, Indonesia [email protected] Abstract: This study highlights the normal personality in Islam with regard to human nature and behavior. In the Islamic view, the human being is composed of body, spirit, and behavior. Behavior is the outcome of the interaction of the two components, to understand the interaction and those relationships as the first part of the human, and the other part of God. Human behavior mostly belongs to two different systems, which begin to work and exert influence from the creation of the fetus in the mother's womb. This creation has two aspects: one is the body, and the other is the soul breathed into it. The Islamic point of view interprets human behavior on several levels, each suited to a specific source of knowledge and a specific research methodology: there is a reflexive level of behavior, which can be understood by way of behavior theory; a physiological level, understood through physiology; a social level, understood through sociology and anthropology; and a spiritual level, understood through divine science (revelation).

After Adam: Reading Genesis in an Age of Evolutionary Science Daniel C

Article After Adam: Reading Genesis in an Age of Evolutionary Science Daniel C. Harlow Recent research in molecular biology, primatology, sociobiology, and phylogenetics indicates that the species Homo sapiens cannot be traced back to a single pair of individuals, and that the earliest human beings did not come on the scene in anything like paradisal physical or moral conditions. It is therefore difficult to read Genesis 1–3 as a factual account of human origins. In current Christian thinking about Adam and Eve, several scenarios are on offer. The most compelling one regards Adam and Eve as strictly literary figures: characters in a divinely inspired story about the imagined past that intends to teach theological, not historical, truths about God, creation, and humanity. Taking a nonconcordist approach, this article examines Adam and Eve as symbolic-literary figures from the perspective of mainstream biblical scholarship, with attention both to the text of Genesis and ancient Near Eastern parallels. Along the way, it explains why most interpreters do not find the doctrines of the Fall and original sin in the text of Genesis 2–3, but only in later Christian readings of it. This article also examines briefly Paul’s appeal to Adam as a type of Christ. Although a historical Adam and Eve have been very important in the Christian tradition, they are not central to biblical theology as such. The doctrines of the Fall and original sin may be reaffirmed without a historical Adam and Eve, but invite reformulation given the overwhelming evidence for an evolving creation.

ABRAHAM and LOT (Gen., Chs

PART TWENTY-SEVEN THE STORY OF ABRAHAM: ABRAHAM AND LOT (Gen., chs. 13, 14) 1. The Biblical Account (ch. 13) And Abram went up out of Egypt, he, and his wife, and all that he had, and Lot with him, into the South. 2 And Abram was very rich in cattle, in silver, and in gold. 3 And he went on his journeys from the South even to Beth-el, unto the place where his tent had been at the beginning, between Beth-el and Ai, 4 unto the place of the altar, which he had made there at the first: and there Abram called on the name of Jehovah. 5 And Lot also, who went with Abram, had flocks, and herds, and tents. 6 And the land was not able to bear them, that they might dwell together: for their substance was great, so that they could not dwell together. 7 And there was a strife between the herdsmen of Abram’s cattle and the herdsmen of Lot’s cattle: and the Canaanite and Perizzite dwelt then in the land. 8 And Abram said unto Lot, Let there be no strife, I pray thee, between me and thee, and between my herdsmen and thy herdsmen; for we are brethren. 9 Is not the whole land before thee? separate thyself, I pray thee, from me: if thou wilt take the left hand, then I will go to the right; or if thou take the right hand, then I will go to the left. 10 And Lot lifted up his eyes, and beheld all the Plain of the Jordan, that it was well watered everywhere, before Jehovah destroyed Sodom and Gomorrah, like the garden of Jehovah, like the land of Egypt, as thou goest unto Zoar.

The Compass of Peace

Peace Lutheran Church 3530 Dayton-Xenia Road Beavercreek, Ohio 45432 The Compass of Peace www.peacebeavercreek.org RETURN SERVICE REQUESTED Peace Lutheran Church, October 2020 FLOCKNOTE COMMUNICATIONS Peace is moving to a new communication tool called “Flocknote” which you may have already received. This tool will be used to register for worship and other communications. We will begin to register for worship starting the weekend of October 24 - 25. For worship, you will receive a weekly email asking which service you plan to attend and you will follow the instructions in the email to secure a space for worship. This tool also has the ability to communicate with church groups by text and to send out the Compass and Newsbits. There is an app available at no cost at the apple or google app stores. Please be ready to engage with any emails you receive from Flocknote. FAQ WORSHIP Church Office: 937-426-1441 SCHEDULE Website: www.peacebeavercreek.org What if I do not have internet access? Email: [email protected] For all who do not have internet or text messaging, please call the office to sign up for the worship service of At Pavilion your choice. Our Ministers: Every Member Saturday at 5:15 p.m. What if I do not want to receive text messages? Senior Pastor: Stephen Kimm If you do not want to receive text messages, you do not have to enter your cell phone number. If you receive a Sunday at 10:15 a.m. text message, you can reply “STOP” and you will no longer receive them.