The Next Generation of Data-Sharing in Financial Services: Using Privacy Enhancing Techniques to Unlock New Value

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

-

Next-Gen Technology Transformation in Financial Services

April 2020 Next-gen Technology transformation in Financial Services Introduction Financial Services technology is currently in the midst of a profound transformation, as CIOs and their teams prepare to embrace the next major phase of digital transformation. The challenge they face is significant: in a competitive environment of rising cost pressures, where rapid action and response is imperative, financial institutions must modernize their technology function to support expanded digitization of both the front and back ends of their businesses. Furthermore, the current COVID-19 situation is putting immense pressure on technology capabilities (e.g., remote working, new cyber-security threats) and requires CIOs to anticipate and prepare for the “next normal” (e.g., accelerated shift to digital channels). Most major financial institutions are well aware of the imperative for action and have embarked on the necessary transformation. However, it is early days—based on our experience, most are only at the beginning of their journey. And in addition to the pressures mentioned above, many are facing challenges in terms of funding, complexity, and talent availability. This collection of articles—gathered from our recent publishing on the theme of financial services technology—is intended to serve as a roadmap for executives tasked with ramping up technology innovation, increasing tech productivity, and modernizing their platforms. The articles are organized into three major themes: 1. Reimagine the role of technology to be a business and innovation partner 2. Reinvent technology delivery to drive a step change in productivity and speed 3. Future-proof the foundation by building flexible and secure platforms The pace of change in financial services technology—as with technology more broadly—leaves very little time for leaders to respond. -

The Future of Banking Is Open

Visa Consulting & Analytics: The Future of Banking is Open. Open banking: what it is, why it matters, and how banks can lead the change. If a consumer wants to block out the world around them, a single app gives them access to hundreds of thousands of songs and podcasts; over time, this app learns about their tastes and suggests new tunes for their listening pleasure. As technology evolves and artificial intelligence becomes more pervasive, consumers are beginning to ask why managing money cannot be as intuitive as managing music. Data is a key ingredient in offering personalized financial services, but it has traditionally been hard for consumers to share sensitive information with third parties in a secure and consistent way. In response, both traditional and emerging players have increased financial innovation globally, driven partly by the market and partly by regulation. As the world responds to the COVID-19 pandemic, one of the clear outcomes is accelerated digital engagement, something that can only be done quickly and at scale through an open ecosystem and the unbundling of financial services. In this paper, we look at what open banking is, the way some of the world’s most forward-thinking banks are responding, and how Visa Consulting & Analytics can help in transforming your business. Contents: What is open banking, and why does it matter? What is driving open banking? What are the consumer considerations? What are the commercial implications for ecosystem players? How are banks responding? Five strategies to consider. How can Visa help you take the next step on your open banking journey? What is open banking, and why does it matter?
Broadly put, the term open banking refers to the use of APIs (Application Programming Interfaces) to share consumers’ financial data (with their consent) with trusted third parties that, in turn, create and distribute novel financial products and services. -

Canada Fintech Report 2021 | Financial Technology | Accenture

Collaborating to win in Canada’s Fintech ecosystem: Accenture 2021 Canadian Fintech report. Contents: Introduction; Executive Summary; Part 1: Canadian Fintech Ecosystem Analysis; Part 2: Financial Services Industry Outlook and Trends; Part 3: Global Fintech Ecosystem Benchmarking; Part 4: The Canadian Fintech Ecosystem: Looking Ahead; Appendix A: Global Fintech Ecosystem Benchmarking Methodology; Appendix B: Definition of Funding Types; References. Introduction: As the pace of change continues to accelerate, industry boundaries blur; financial institutions, now more than ever, are adopting the mindset of technology companies. As both market and regulatory forces push these Canadian companies into the spotlight, the financial services ecosystem may be poised to deliver the most personalized and seamless digital experiences Canadians have ever seen. This report offers insights into this ecosystem for 2020 in four parts. Part 1: Canadian Fintech Ecosystem Analysis. We examine the current state of the Canadian fintech ecosystem - at both the national and city level - in terms of growth, talent, and investment. We also discuss how incumbent financial institutions (FI) are responding and collaborating, the importance of incubators and accelerators, and the government’s role in supporting even further innovation. Part 2: Financial Services Industry Outlook and Trends. We elaborate on key emerging trends we see as influencing the future direction of the Canadian financial services industry. Part 3: Global Fintech Ecosystem Benchmarking. Using our benchmarking model, we rank four Canadian cities (Calgary, Montreal, Toronto and Vancouver) against 16 leading and emerging fintech hubs around the world. This quantitative model draws on 46 individual data points from various public and proprietary sources, distilled into five key metrics. Part 4: The Canadian Fintech Ecosystem: Looking Ahead. Finally, we summarize our findings and explore -

Kalifa Review – FIL Response: Finance Innovation Lab’s Response

Kalifa Review – FIL response: Finance Innovation Lab’s response. Contents: 1 Overview; 2 Response; 3 Asks; 4 Analysis of fintech (4.1 Fintech and data; 4.2 Fintech and Big Tech; 4.3 Risks of fintech). The Finance Innovation Lab is a Registered Charity (number 1165269) and Company Limited by Guarantee (number 09380418). 1 Overview: The Finance Innovation Lab welcomes the attention and focus on the future of fintech. Last December, we published our report Lifting the Lid on Fintech, which examined the acceleration of technology-driven innovation in finance and explored how fintech is transforming finance on a systemic level, leading to worrying concentrations of power and increased threats to democracy, sustainability, justice, and resilience in finance. It is -

DIGITAL BANKS How Can They Deepen Financial Inclusion?

DIGITAL BANKS: How can they deepen financial inclusion? Ivo Jenik and Peter Zetterli, February 2020. Photo: Antonio Aragon Renuncio | CGAP Photo Contest. Is retail banking changing at its core? The world is rapidly changing. New technologies and business models are upending long-established markets across virtually every major sector. Financial services are no exception, as traditional retail banks are joined by a growing number of digital partners and other competitors. What are the implications for incumbents, regulators, and investors? And what will this evolving landscape mean for financial inclusion and the many stakeholders working to make universal access a reality? In mid-2018, CGAP launched an effort to understand this change and how it may alter the very nature and structure of banking. This presentation features our early findings concerning digital banking models. We focus on three broad innovation spaces defined by different sets of actors. • Digital banks from plain start-up competitors to radically new business models like banking-as-a-service. • Fintech start-ups and funding + innovation ecosystems that enable them. • Platforms like big tech giants in the United States and China and local goods or services platforms in emerging markets. This initial slide deck focuses on digital banks. For content on the other innovation spaces, please stay tuned to www.cgap.org/fintech as we publish more work across this agenda. Photo credit: Deo Surah, CGAP Photo Contest. © CGAP 2020. Table of Contents: Executive Summary; I. Introduction; II. Emerging Business Models & Trends; III. How Can Digital Banking Advance Financial -

Unpacking the Open Banking Black

White Paper: Unpacking the Open Banking black box. Contents: A banking revolution - Open Banking; What is Open Banking?; Key challenges and lessons for Australia; Seize the opportunity; How can IBM help?; The right partner for a changing world. A banking revolution - Open Banking: In today’s dynamic business environment, banking regulators are taking steps to intervene in disruptive ways to drive greater access and transparency to customer information in an attempt to stimulate competition and innovation, for a more customer centric banking experience. Where banks previously had exclusive access to their customer’s information, new regulation dictates much of that information must be made available to external parties via digital channels. By forcing incumbent banks to break their monopoly access on payment mechanisms, startups, aggregators and other sectors are empowered to improve customer service, lower costs and develop more innovative technology. In the European Union, the Payment Services Directive 2 (PSD2) requires banks to open access to their back-end services for account information and payment initiation to third parties through application programming interfaces (APIs). In the UK the Competition and Markets Authority (CMA) has driven a similar agenda requiring the nine largest banks in the UK to open access to all payment accounts. Concurrently with this opening up of access to customer information, the EU has introduced data privacy legislation (GDPR) governing consumer rights in directing how customer information may be used, and in providing guidelines on how customer information must be safeguarded. What is Open Banking? Open Banking is the provision of third-party access to customer and account information through the use of APIs. -

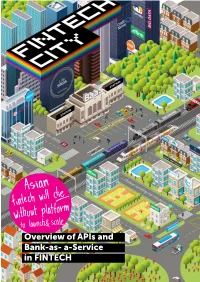

Overview of APIs and Bank-as-a-Service in Fintech: BaaS for Banks as Amazon Web Services for E-Commerce

Overview of APIs and Bank-as-a-Service in FINTECH: BaaS for banks as Amazon Web Services for e-commerce. [Infographic: 2006, creation of AWS; 2015, 67% of Amazon’s profit comes from AWS. A traditional bank (customers, product, marketing, support, human resources, compliance, processing center, card issuing, money storage, license, servers and the IT staff to support and manage them) faces new fintech players: to fight with or to earn with? You may write off infrastructure investments or use them as new revenue streams.] While banks have always been looking to control the financial services industry, with the rise of fintech, the situation has changed drastically. One of the core differences in approach to financial services between banks and fintech lies in democratization.¹ Fintech-startups nowadays can serve almost any financial need for the eligible population. Now banks are looking to collaborate with fintech so as not to lose the links in the value chains that make them so powerful. Chris Skinner, one of the TOP5 fintech-influencers and predictors, author of bestsellers «DIGITAL BANK» and «VALUE WEB», managing partner of the BB Fund in London: You’re probably all familiar with SaaS – it’s basically paying for applications as you use them, rather than buying them. These services used to cost you a fortune, but are now free or near enough. That’s where banking is going. Banking becomes plug and play apps you stitch together to suit your business or lifestyle. There’s no logical reason why Banking shouldn’t be delivered as SaaS. This is the future bank, and old banks will need to reconsider their services to compete with this zero margin model. -

Annual Report NOYB 2020

Annual Report 2020, noyb.eu. TABLE OF CONTENTS: 1. PREFACE; 2. ABOUT NOYB; 3. OUR PROJECTS; 3.1 EU-US DATA TRANSFERS (3.1.1 Court of Justice Judgment on Privacy Shield; 3.1.2 Juridical Review against the DPC; 3.1.3 Mass complaint on EU-US Data Transfers; 3.1.4 FAQs for Controllers and Users; 3.1.5 Feedback on future data transfer mechanisms); 3.2 MOBILE TRACKING (3.2.1 Complaint against Google’s Android Advertising ID; 3.2.2 Complaint against Apple’s Identifier for Advertisers); 3.3 ENCRYPTION (3.3.1 Complaint against Amazon); 3.4 DATA SUBJECT RIGHTS (3.4.1 Complaint against A1 Telekom Austria; 3.4.2 Complaint against address broker AZ Direct GMBH; 3.4.3 Complaint against Wizz Air; 3.4.4 noyb’s Exercise your Rights Series); 3.5 CREDIT RANKING AGENCIES (3.5.1 Complaint against credit ranking agency CRIF); 3.6 DATA BREACHES (3.6.1 Complaint against IT Solutions “C-Planet”); 3.7 KNOWLEDGE SHARING (3.7.1 GDPRhub and GDPRtoday); 3.8 RESEARCH (3.8.1 Report on Streaming Services; 3.8.2 Report on privacy policies of video conferencing services; 3.8.3 Report on SARS-CoV-2 Tracking under GDPR; 3.8.4 Review of Austrian “Stopp Corona App”); 3.9 UPDATES ON PAST PROJECTS (3.9.1 Streaming complaints; 3.9.2 Forced Consent - Google; 3.9.3 Grindr); 4. FINANCES 2020; 5. NOYB IN MEDIA; 6. NOYB IN NUMBERS. CHAPTER 1: Preface. 2020 marks noyb’s third year fighting for your rights. -

The Sustainable Value of Open Banking: Insights from an Open Data Lens

Proceedings of the 54th Hawaii International Conference on System Sciences | 2021. The Sustainable Value of Open Banking: Insights from an Open Data Lens. Kevin O’Leary, Tadhg Nagle, Philip O’Reilly, Christos Papadopoulos-Filelis, Milad Dehghani (all University College Cork). Abstract: Open Banking has emerged as an initiative which has the potential to disrupt the retail banking sector by improving competition and innovation in the industry. But is Open Banking capable of producing sustainable value? This is a question that is relevant for all open initiatives given the transfer of value from incumbents to newer entities with the aim of improving innovation and customer benefit. It is particularly relevant for Open Banking at this stage of its maturity. This study undertakes a global analysis (across 17 regions) on Open Banking through the lens of Open Data. We contribute to the open data lens and provide insights into the potential success of Open Banking. [...] a shift from an old institutional regime of opacity to an increased openness and transparency. While quite vague this definition has led to the acknowledgement that Open Banking challenges many of the institutionalized assumptions around the aspects of information asymmetry [6]. This in particular highlights the central nature of openness around data and information and a point of focus when exploring the Open Banking, which has received little or no interest from academics [6], partly because many of the relevant interactions are taking place outside the view of researchers [7]. The retail banking industry has traditionally been referred to as a ‘walled garden’ environment, -

The Legal 500 Comparative Guide

The Legal 500 Country Comparative Guides. United States: Fintech. Contributing Firm: Reed Smith. Authors: Jeffrey D. Silberman (Partner), Herbert F. Kozlov (Partner), Maria B. Earley (Partner), Christine T. Parker (Partner), Peter A. Emmi (Partner), Trevor A. Levine (Associate). This country-specific Q&A provides an overview of fintech laws and regulations applicable in United States. 1. What are the sources of payments law in your jurisdiction? Payments laws in the United States consist of an array of regulatory regimes administered by both state and federal regulators. The primary purpose of these regimes is to protect consumers, facilitate orderly and efficient transfers of value and prevent money laundering and other illegal activities involving payments. At the federal level, payments touch a number of financial industry statutes and regulations, including the Consumer Credit Protection Act, Bank Secrecy Act, Gramm-Leach-Bliley Act, Electronic Funds Transfer Act and Reg. E, Dodd-Frank Act, Expedited Funds Availability Act, Reg. CC, Reg. J, Reg. II, and the Uniform Commercial Code. At the state level, payments are generally covered by money transmission, auto-renewal, surcharge, and prepaid access laws. The federal and state statutory regimes governing payments are supported by periodic rulemakings and guidance issued by regulators. In addition to governmental oversight, the payments industry has developed certain standards by which participants must abide. For example, the Payment Card Industry Security Standards Council administers and maintains payment card industry data security standards (PCI-DSS) for those who process, store or transmit cardholder data. -

Key Trends in Payments and Banking Infrastructure

White Paper: Payments. Key trends in payments and banking infrastructure. The modernization of banking and payment systems is seeing the deployment of hybrid public and private cloud architectures, often linked back to the old legacy systems, dispensing with which is just too hard in many cases. This paper looks at six use cases for these newly architected systems and how they can bring efficiency, security, speed and cost savings to financial services companies and their customers. Introduction: The future of banking and payments is instant, open and everywhere. These trends have evolved based on the developments of newer business models and backed by technical infrastructure that increasingly features greater connectivity, responsiveness, reliability and security. This can be seen in industry use cases such as emergence of: § Banking as a Service § Core banking modernization § Local processing of banking and payments § Real-time domestic payments § Open banking § Cross-border payment rails. Each of these is explored in more detail below.
Digital infrastructure is a hybrid-distributed architecture. Legacy payments infrastructure: § Centralized § Siloed § Rigid and slow. Modernized payment infrastructure: § Distributed at the edge § Interconnected to ecosystems § Agile and elastic. (Equinix.com) The emergence of Banking as a Service. Key Trends, by infrastructure type. Infrastructure: Payments/Banking as a Service; Core banking and hybrid IT modernization; infrastructure that is increasingly dependent upon cloud service providers with robust choices of low-latency connections to cloud providers. Edge: Local payment processing or digital banking platform; Real-time and domestic payment schemes; infrastructure that needs to be in a particular country or region due to local partnerships, data sovereignty or latency. Exchange: Open banking/apps and ecosystem; Payment rails credit/debit & cross border; infrastructure that benefits by being adjacent to a large number of ecosystem participants that share standardized messaging -

Future of Financial Services Itera 2020

Trend report from Itera Fall 2020 Digitalization of the financial services industry in the Nordics Preface The banking industry is constantly changing, accelerated not the least by a global pandemic that few people anticipated. Itera has created this trend report to get a readout on the key factors driving the financial industry in the Nordics, with a Norwegian emphasis. Itera is passionate about the banking and financial services industry. We stay on top of trends and ongoing developments, discussing everything from events of Earth-shattering significance to the smallest, seemingly insignificant details. We actively seek out new knowledge, working to screen out the noise in order to understand the big picture. We put the international trends into a Norwegian and Nordic context and actively apply what we’ve learned from other industries. Fintech is a “buzzword” that’s here to stay, and definitions abound when it comes to the exact meaning of the term. Most people agree that it’s about financial technology, but when we talk about the new versus the old, it often becomes a topic of heated debate. In this report, the term “fintech” is used as a collective term that applies not only to agile start-ups, but also to more mature, established companies in the financial services industry. Because in the Nordics, well-established companies have demonstrated that they have the flexibility and moxie to keep pace with fast-growing young companies. This report is based on several discussions with movers and shakers in the industry, in addition to the experience we’ve gained implementing digitalization across the banking industry in collaboration with our customers.