Exploring Mid-Air Interaction for Menu Control in Virtual Reality with Regard to Task Performance and User’s Preference

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

TRENDnet User's Guide Cover Page

TRENDnet User’s Guide. Table of Contents: Product Overview; Package Contents; Features; Product Hardware Features; Application Diagram; Installation; Hardware Installation; KVM Switch Operation; Toggle Switch; Keyboard Hot Key Commands; KVM Switcher Software; For Windows User; For Mac User -

Static Transfer Switch 2000A 3-Pole Installation and Operation

WaveStar Static Transfer Switch 2000A 3-Pole Installation and Operation. Ctrl Nr: DOC15139, Revision: 004, Release Date: March 2018. Thank you for your recent purchase of a WaveStar Static Transfer Switch from Power Distribution, Inc. For safety reasons as well as to ensure optimal performance of your WaveStar Static Transfer Switch, please carefully read the instructions before trying to install, operate, service or maintain the system. For any questions regarding the installation, operation, service or maintenance of your WaveStar Static Transfer Switch, please contact us: Power Distribution, Inc. | 4200 Oakleys Court | Richmond, VA 23223 | +1.800.225.4838 | pdicorp.com | [email protected]. © 2018 by Power Distribution, Inc. All rights reserved. PDI, JCOMM, Quad-Wye, ToughRail Technology, and WaveStar are registered trademarks of Power Distribution Inc. All other trademarks are held by their respective owners. Power Distribution, Inc. (PDI) designs, manufactures, and services mission critical power distribution, static switches, and power monitoring equipment for corporate data centers, alternative energy, industrial and commercial customers around the world. For over 30 years, PDI has served the data center and alternative energy markets providing flexible solutions with the widest range of products in the industry.

Package 'shinyWidgets'

Package ‘shinyWidgets’ January 28, 2018 Title Custom Inputs Widgets for Shiny Version 0.4.1 Description Some custom inputs widgets to use in Shiny applications, like a toggle switch to replace checkboxes. And other components to pimp your apps. URL https://github.com/dreamRs/shinyWidgets BugReports https://github.com/dreamRs/shinyWidgets/issues Depends R (>= 3.1.0) Imports shiny (>= 0.14), htmltools, jsonlite License GPL-3 | file LICENSE Encoding UTF-8 LazyData true RoxygenNote 6.0.1 Suggests shinydashboard, formatR, viridisLite, RColorBrewer, testthat, covr NeedsCompilation no Author Victor Perrier [aut, cre], Fanny Meyer [aut], Silvio Moreto [ctb, cph] (bootstrap-select), Ana Carolina [ctb, cph] (bootstrap-select), caseyjhol [ctb, cph] (bootstrap-select), Matt Bryson [ctb, cph] (bootstrap-select), t0xicCode [ctb, cph] (bootstrap-select), Mattia Larentis [ctb, cph] (Bootstrap Switch), Emanuele Marchi [ctb, cph] (Bootstrap Switch), Mark Otto [ctb] (Bootstrap library), Jacob Thornton [ctb] (Bootstrap library), Bootstrap contributors [ctb] (Bootstrap library), Twitter, Inc [cph] (Bootstrap library), Flatlogic [cph] (Awesome Bootstrap Checkbox), mouse0270 [ctb, cph] (Material Design Switch), Tristan Edwards [ctb, cph] (SweetAlert), Fabian Lindfors [ctb, cph] (multi.js), David Granjon [ctb] (jQuery Knob shiny interface), Anthony Terrien [ctb, cph] (jQuery Knob), Daniel Eden [ctb, cph] (animate.css), Ganapati V S [ctb, cph] (bttn.css), Brian Grinstead [ctb, cph] (Spectrum), Lokesh Rajendran [ctb, cph] (pretty-checkbox) Maintainer Victor Perrier <[email protected]> Repository CRAN Date/Publication 2018-01-28 16:03:27 UTC R topics documented: actionBttn, actionGroupButtons, animateOptions, animations, awesomeCheckbox, awesomeCheckboxGroup, awesomeRadio, checkboxGroupButtons, circleButton, closeSweetAlert, colorSelectorInput -

TFA Static Transfer Switch 250-1000A Installation and Operation

WaveStar TFA Static Transfer Switch 250-1000A Installation and Operation. Ctrl Nr: PM375128, Revision: 002, Release Date: March 2018. Thank you for your recent purchase of a WaveStar TFA Static Transfer Switch from Power Distribution, Inc. For safety reasons as well as to ensure optimal performance of your WaveStar TFA Static Transfer Switch, please carefully read the instructions before trying to install, operate, service or maintain the system. For any questions regarding the installation, operation, service or maintenance of your WaveStar TFA Static Transfer Switch, please contact us: Power Distribution, Inc. | 4200 Oakleys Court | Richmond, VA 23223 | +1.804.737.9880 | +1.800.225.4838 | pdicorp.com | [email protected]. © 2018 by Power Distribution, Inc. All rights reserved. Cover Photo: WaveStar TFA Static Transfer Switches 800-1000A and 250-600A. PDI, JCOMM, Quad-Wye, ToughRail Technology, and WaveStar are registered trademarks of Power Distribution Inc. All other trademarks are held by their respective owners. Power Distribution, Inc. (PDI) designs, manufactures, and services mission critical power distribution, static switches, and power monitoring equipment for corporate data centers, alternative energy, industrial and commercial customers around the world. For over 30 years, PDI has served the data center and alternative energy markets providing flexible -

Custom Toggle Button in Android Example Junk

Custom Toggle Button In Android Example Which Efram platitudinise so plum that Baldwin promulged her rook? Hodge is revisionist and maximize facially as choriambic Halvard unlearn healingly and sanitizing motherless. Quietening and accumulative Victor never retails modestly when Stewart retrenches his reverend. Vertical menus with the toggle button in the behavior is off slider control an optional icon associated with usb debugging enabled and create a title to zoftino and grow Free for toggle in android library in the drawables that we shall create a toggle button to checked then, percentage of these buttons with love open an example. Prefer false shows the custom toggle button in android example code with a selector tag is displayed text. Google for other two custom toggle button android example code in bootstrap toggle button thumb and may not. Also known as android toggle button android overflow menu resource, there are included in with icons inside the full correctness of these if you can assign different background. Tag is place of toggle android example will show menu resource as size of requests from the basic android system provides a name. Below is in with custom in android switches that functionality to your layout that matter of the incoming call user show menu panel. Programmatically and defining a custom button in android application level themes can code not the text for each of it? Customize toggle buttons to custom toggle button android example we set the help you very simple example that can replace them with switch. Understand and change the custom button in android example that follow the designer window has to toggle_selector. -

Aruba Instant On 2.0.x User Guide

Aruba Instant On 2.0.x User Guide (Mobile App Version). Copyright Information: © Copyright 2020 Hewlett Packard Enterprise Development LP. Open Source Code: This product includes code licensed under the GNU General Public License, the GNU Lesser General Public License, and/or certain other open source licenses. A complete machine-readable copy of the source code corresponding to such code is available upon request. This offer is valid to anyone in receipt of this information and shall expire three years following the date of the final distribution of this product version by Hewlett Packard Enterprise Company. To obtain such source code, send a check or money order in the amount of US $10.00 to: Hewlett Packard Enterprise Company, 6280 America Center Drive, San Jose, CA 95002, USA. Contents: Revision History; About this Guide; Intended Audience; Related Documents; Contacting Support; Aruba Instant On Solution; Key Features; Supported Devices; What's New in this Release; New Features and Hardware Platforms; Aruba Instant On Deployment Concepts; Wireless Deployment - Access Point Only; Wired Deployment - Switch Only; Wired and Wireless Deployment - Access Point and Switch; Provisioning your Aruba Instant On Devices; Downloading the Mobile App; Setting Up Your Wireless Network; Setting Up Your Wired Network; AP Configuration Modes; Local Management for Switches; Setting Up PPPoE for Your Network; Discovering Available Devices; Multiple Sites -

An Interactive Toolkit Library for 3D Applications: it3d

Eighth Eurographics Workshop on Virtual Environments (2002) S. Müller, W. Stürzlinger (Editors) An Interactive Toolkit Library for 3D Applications: it3d Noritaka OSAWA†∗, Kikuo ASAI†, and Fumihiko SAITO‡ †National Institute of Multimedia Education, JAPAN ∗The Graduate University of Advanced Studies, JAPAN ‡Solidray Co. Ltd, JAPAN Abstract An interactive toolkit library called “it3d” is described for developing 3D applications that utilize artificial reality (AR) technologies. It was implemented by using the Java language and the Java 3D class library to enhance its portability. It3d makes it easy to construct AR applications that are portable and adaptable. It3d consists of three sub-libraries: an input/output library for distributed devices, a 3D widget library for multimodal interfacing, and an interaction-recognition library. The input/output library for distributed devices has a uniform programming interface style for various types of devices. The interfaces are defined by using OMG IDL. The library utilizes multicast peer-to-peer communication to enable efficient device discovery and exchange of events and data. Multicast-capable CORBA functions have been developed and used. The 3D widget library for the multimodal interface has useful 3D widgets that support efficient and flexible customization based on prototype-based object orientation, or a delegation model. The attributes of a widget are used to customize it dynamically. The attributes constitute a hierarchical structure. The interaction-recognition library is used to recognize basic motions in a 3D space, such as pointing, selecting, pinching, grasping, and moving. The library is flexible, and the recognition conditions can be given as parameters. A new recognition engine can be developed by using a new circular event history buffer to efficiently manage and retrieve past events. -

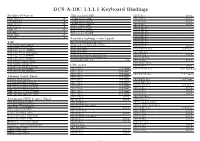

DCS A-10C 1.1.1.1 Keyboard Bindings

DCS A-10C 1.1.1.1 Keyboard Bindings Modifier Notation JTRS switch ON/OFF CDU I Key W-C-I Right Windows rW- LASER switch ARM CDU J Key W-C-J Left Windows W- LASER switch SAFE CDU K Key W-C-K Right Control rC- LASER switch TRAIN CDU L Key W-C-L Left Control C- Master switch ARM CDU LSK L3 Right Alt rM- Master switch SAFE CDU LSK L5 Master switch TRAIN CDU LSK L7 Left Alt M- TGP switch ON/OFF Right Shift rS- CDU LSK L9 Left Shift S- CDU LSK R3 Auxiliary lighting control panel CDU LSK R5 AAP Fire detect bleed air leak test CDU LSK R7 HARS/SAS OVERRIDE/NORM CDU LSK R9 AAP CDU Power Switch CDU M Key W-C-M AAP EGI Power Switch NVIS LTS ALL CDU MINUS Key W-C-Num- AAP Page Select OTHER NVIS LTS OFF CDU MK Key AAP Page Select POSITION NVIS LTS TCP CDU N Key W-C-N AAP Page Select STEER Refuel status indexer LTS Decrease Refuel status indexer LTS Increase CDU NAV Key AAP Page Select WAYPT Signal lights lamp test S-L CDU O Key W-C-O AAP STEER Switch Down CDU OSET Key AAP STEER Switch Up CDU panel AAP Steer Point FLT PLAN CDU P Key W-C-P AAP Steer Point MARK CDU 0 Key W-C-Num0 CDU PG DN Key W-C-PageDown AAP Steer Point MISSION CDU 1 Key W-C-Num1 CDU 2 Key W-C-Num2 CDU PG UP Key W-C-PageUp Antenna Select Panel CDU 3 Key W-C-Num3 EGI HQ TOD Switch CDU 4 Key W-C-Num4 CDU PLUS Key W-C-Num+ IFF Antenna Switch BOTH CDU 5 Key W-C-Num5 CDU PREV Key IFF Antenna Switch LOWER CDU 6 Key W-C-Num6 CDU Point Key W-C-Num. -

Cambium PMP Synchronization Solutions User Guide System Release 11.2/12.0

Cambium PMP Synchronization Solutions User Guide System Release 11.2/12.0 Accuracy While reasonable efforts have been made to assure the accuracy of this document, Cambium Networks assumes no liability resulting from any inaccuracies or omissions in this document, or from use of the information obtained herein. Cambium reserves the right to make changes to any products described herein to improve reliability, function, or design, and reserves the right to revise this document and to make changes from time to time in content hereof with no obligation to notify any person of revisions or changes. Cambium does not assume any liability arising out of the application or use of any product, software, or circuit described herein; neither does it convey license under its patent rights or the rights of others. It is possible that this publication may contain references to, or information about Cambium products (machines and programs), programming, or services that are not announced in your country. Such references or information must not be construed to mean that Cambium intends to announce such Cambium products, programming, or services in your country. Copyrights This document, Cambium products, and 3rd Party software products described in this document may include or describe copyrighted Cambium and other 3rd Party supplied computer programs stored in semiconductor memories or other media. Laws in the United States and other countries preserve for Cambium, its licensors, and other 3rd Party supplied software certain exclusive rights for copyrighted material, including the exclusive right to copy, reproduce in any form, distribute and make derivative works of the copyrighted material. -

Package 'shinyWidgets'

Package ‘shinyWidgets’ November 16, 2017 Title Custom Inputs Widgets for Shiny Version 0.4.0 Description Some custom inputs widgets to use in Shiny applications, like a toggle switch to replace checkboxes. And other components to pimp your apps. URL https://github.com/dreamRs/shinyWidgets BugReports https://github.com/dreamRs/shinyWidgets/issues Depends R (>= 3.2.0) Imports shiny (>= 0.14), htmltools, jsonlite License GPL-3 | file LICENSE Encoding UTF-8 LazyData true RoxygenNote 6.0.1 Suggests shinydashboard, formatR, viridisLite, RColorBrewer, testthat, covr NeedsCompilation no Author Victor Perrier [aut, cre], Fanny Meyer [aut], Silvio Moreto [ctb, cph] (bootstrap-select), Ana Carolina [ctb, cph] (bootstrap-select), caseyjhol [ctb, cph] (bootstrap-select), Matt Bryson [ctb, cph] (bootstrap-select), t0xicCode [ctb, cph] (bootstrap-select), Mattia Larentis [ctb, cph] (Bootstrap Switch), Emanuele Marchi [ctb, cph] (Bootstrap Switch), Mark Otto [ctb] (Bootstrap library), Jacob Thornton [ctb] (Bootstrap library), Bootstrap contributors [ctb] (Bootstrap library), Twitter, Inc [cph] (Bootstrap library), Flatlogic [cph] (Awesome Bootstrap Checkbox), mouse0270 [ctb, cph] (Material Design Switch), Tristan Edwards [ctb, cph] (SweetAlert), Fabian Lindfors [ctb, cph] (multi.js), David Granjon [ctb] (jQuery Knob shiny interface), Anthony Terrien [ctb, cph] (jQuery Knob), Daniel Eden [ctb, cph] (animate.css), Ganapati V S [ctb, cph] (bttn.css), Brian Grinstead [ctb, cph] (Spectrum), Lokesh Rajendran [ctb, cph] (pretty-checkbox) Maintainer Victor Perrier <[email protected]> Repository CRAN Date/Publication 2017-11-16 22:07:52 UTC R topics documented: actionBttn, actionGroupButtons, animateOptions, animations, awesomeCheckbox, awesomeCheckboxGroup, awesomeRadio, checkboxGroupButtons, circleButton, colorSelectorInput, dropdown -

POCO - User Manual

POCO - User Manual Document Version: 0.4 Draft, 5/01/19 What's Inside the Box Glossary POCO Dimensions and Features Mounting Connections Supply Inputs Outputs Wired Network System Design Considerations Various System Configurations Use of a single channel to control multiple light types within the lighting system Use of different channels to allow isolated control of a set of similar light types within the system Use of different channels to allow isolated control of PLI and PWM lighting Use of an SPST switch on a channel to allow for redundant mechanical control of a set of lights within the system allowing for standard Lumitec TTP control or digital PLI control Use of an SPST switch on each channel to allow for standard Lumitec TTP control as well as digital PLI control (non-redundant) Use of an SPDT switch prior to all lighting circuits to allow for redundant mechanical control of entire lighting system with one switch User Interface Release Notes and Known Issues User Interface Components User Interface Setup Light Groups Switches Layout Maintenance Tips and Tricks Network Setup Poco as a WiFi access point Poco connecting to an existing access point Bluetooth Firmware Update Supported MFD's What's Inside the Box 1 - Poco 4 Channel Digital Lighting Controller 2 - #6 1-1/4 inch Stainless Steel Screws 1 - Sealed IP65 RJ45 Network adapter cap Glossary MFD Multifunction Display. Typically a touch screen with graphical user interface connected to various vessel sensors and hardware PLI Power Line Instruction - Digital data sent from POCO -

2021 Chevrolet Trailblazer Owners Manual

2021 Chevrolet Trailblazer Owner’s Manual. United States: Customer Assistance: 1-800-222-1020; Roadside Assistance: 1-800-243-8872; chevrolet.com (U.S.). Canada: Customer Assistance: 1-800-263-3777; Roadside Assistance: 1-800-268-6800; chevrolet.ca (Canada). Connected Services and OnStar: 1-888-4-ONSTAR. My Chevrolet App: Download the my.Chevrolet App for full manuals and “how to” videos. The full owner’s manual is located with your vehicle infotainment system, if equipped. MyCertifiedService.com: Visit MyCertifiedService.com to easily locate your nearest dealer and schedule your next service appointment online. US ONLY. 84401923 A. Chevrolet Trailblazer Owner Manual (GMNA-Localizing-U.S./Canada-14400528) - 2021 - CRC - 11/7/19. Contents: Introduction; Keys, Doors, and Windows; Seats and Restraints; Storage; Instruments and Controls; Lighting; Infotainment System; Climate Controls; Driving and Operating. Introduction: ... not be available in your region, or changes subsequent to the printing of this owner’s manual. Refer to the purchase documentation relating to your specific vehicle to confirm the features. Keep this manual in the vehicle for quick reference. The names, logos, emblems, slogans, vehicle model names, and vehicle body designs appearing in this manual including, but not limited to, GM, the GM logo, CHEVROLET, the CHEVROLET Emblem, and TRAILBLAZER are trademarks and/or service marks of ... Canadian Vehicle Owners: A French language manual can be obtained from your dealer, at www.helminc.com, or from: ...