Computing Matrix Functions in Arbitrary Precision Arithmetic

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Recent and Future Developments of GNU MPFR

Recent and future developments of GNU MPFR Paul Zimmermann iRRAM/MPFR/MPC workshop, Dagstuhl, April 18, 2018 The GNU MPFR library • a software implementation of binary IEEE-754 • variable/arbitrary precision (up to the limits of your computer) • each variable has its own precision: mpfr_init2 (a, 35) • global user-defined exponent range (might be huge): mpfr_set_emin (-123456789) • mixed-precision operations: a b − c where a has 35 bits, b has 42 bits, c has 17 bits • correctly rounded mathematical functions (exp; log; sin; cos; :::) as in Section 9 of IEEE 754-2008 2 History I 2000: first public version; I 2008: MPFR is used by GCC 4.3.0 for constant folding: double x = sin (3.14); I 2009: MPFR becomes GNU MPFR; I 2016: 4th developer meeting in Toulouse. I Dec 2017: release 4.0.0 I mpfr.org/pub.html mentions 2 books, 27 PhD theses, 63 papers citing MPFR I Apr 2018: iRRAM/MPFR/MPC developer meeting in Dagstuhl 3 MPFR is used by SageMath SageMath version 8.1, Release Date: 2017-12-07 Type "notebook()" for the browser-based notebook interface. Type "help()" for help. sage: x=1/7; a=10^-8; b=2^24 sage: RealIntervalField(24)(x+a*sin(b*x)) [0.142857119 .. 0.142857150] 4 Representation of MPFR numbers (mpfr_t) I precision p ≥ 1 (in bits); I sign (−1 or +1); I exponent (between Emin and Emax), also used to represent special numbers (NaN, ±∞, ±0); I significand (array of dp=64e limbs), defined only for regular numbers (neither NaN, nor ±∞ and ±0, which are singular values). -

GNU MPFR the Multiple Precision Floating-Point Reliable Library Edition 4.1.0 July 2020

GNU MPFR The Multiple Precision Floating-Point Reliable Library Edition 4.1.0 July 2020 The MPFR team [email protected] This manual documents how to install and use the Multiple Precision Floating-Point Reliable Library, version 4.1.0. Copyright 1991, 1993-2020 Free Software Foundation, Inc. Permission is granted to copy, distribute and/or modify this document under the terms of the GNU Free Documentation License, Version 1.2 or any later version published by the Free Software Foundation; with no Invariant Sections, with no Front-Cover Texts, and with no Back- Cover Texts. A copy of the license is included in Appendix A [GNU Free Documentation License], page 59. i Table of Contents MPFR Copying Conditions ::::::::::::::::::::::::::::::::::::::: 1 1 Introduction to MPFR :::::::::::::::::::::::::::::::::::::::: 2 1.1 How to Use This Manual::::::::::::::::::::::::::::::::::::::::::::::::::::::::::: 2 2 Installing MPFR ::::::::::::::::::::::::::::::::::::::::::::::: 3 2.1 How to Install ::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::: 3 2.2 Other `make' Targets :::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::: 4 2.3 Build Problems :::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::: 4 2.4 Getting the Latest Version of MPFR ::::::::::::::::::::::::::::::::::::::::::::::: 4 3 Reporting Bugs::::::::::::::::::::::::::::::::::::::::::::::::: 5 4 MPFR Basics ::::::::::::::::::::::::::::::::::::::::::::::::::: 6 4.1 Headers and Libraries :::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::: 6 -

This PDF Is Provided for Academic Purposes

This PDF is provided for academic purposes. If you want this document for commercial purposes, please get this article from IEEE. FLiT: Cross-Platform Floating-Point Result-Consistency Tester and Workload Geof Sawaya∗, Michael Bentley∗, Ian Briggs∗, Ganesh Gopalakrishnan∗, Dong H. Ahny ∗University of Utah, yLaurence Livermore National Laboratory Abstract—Understanding the extent to which computational much performance gain to pass up, and so one must embrace results can change across platforms, compilers, and compiler flags result-changes in practice. However, exploiting such compiler can go a long way toward supporting reproducible experiments. flags is fraught with many dangers. A scientist publishing In this work, we offer the first automated testing aid called FLiT (Floating-point Litmus Tester) that can show how much these a piece of code with these flags used in the build may not results can vary for any user-given collection of computational really understand the extent to which the results would change kernels. Our approach is to take a collection of these kernels, across inputs, platforms, and other compilers. For example, the disperse them across a collection of compute nodes (each with optimization flag -O3 does not hold equal meanings across a different architecture), have them compiled and run, and compilers. Also, some flags are exclusive to certain compilers; bring the results to a central SQL database for deeper analysis. Properly conducting these activities requires a careful selection in those cases, a user who is forced to use a different compiler (or design) of these kernels, input generation methods for them, does not know which substitute flags to use. -

Effectiveness of Floating-Point Precision on the Numerical Approximation by Spectral Methods

Mathematical and Computational Applications Article Effectiveness of Floating-Point Precision on the Numerical Approximation by Spectral Methods José A. O. Matos 1,2,† and Paulo B. Vasconcelos 1,2,∗,† 1 Center of Mathematics, University of Porto, R. Dr. Roberto Frias, 4200-464 Porto, Portugal; [email protected] 2 Faculty of Economics, University of Porto, R. Dr. Roberto Frias, 4200-464 Porto, Portugal * Correspondence: [email protected] † These authors contributed equally to this work. Abstract: With the fast advances in computational sciences, there is a need for more accurate compu- tations, especially in large-scale solutions of differential problems and long-term simulations. Amid the many numerical approaches to solving differential problems, including both local and global methods, spectral methods can offer greater accuracy. The downside is that spectral methods often require high-order polynomial approximations, which brings numerical instability issues to the prob- lem resolution. In particular, large condition numbers associated with the large operational matrices, prevent stable algorithms from working within machine precision. Software-based solutions that implement arbitrary precision arithmetic are available and should be explored to obtain higher accu- racy when needed, even with the higher computing time cost associated. In this work, experimental results on the computation of approximate solutions of differential problems via spectral methods are detailed with recourse to quadruple precision arithmetic. Variable precision arithmetic was used in Tau Toolbox, a mathematical software package to solve integro-differential problems via the spectral Tau method. Citation: Matos, J.A.O.; Vasconcelos, Keywords: floating-point arithmetic; variable precision arithmetic; IEEE 754-2008 standard; quadru- P.B. -

Linux from Scratch Version 7.7-Systemd

Linux From Scratch Version 7.7-systemd 创建者:Gerard Beekmans 编辑者:Matthew Burgess 和 Armin K. 翻译团队:LCTT 译者/校对:wxy, ictlyh, dongfengweixiao, zpl1025, H-mudcup, Yuking-net, kevinSJ Copyright © 1999-2015 Gerard Beekmans 目录 序章 前言 致读者 LFS 的目标架构 LFS 和标准 本书中的软件包逻辑 前置需求 宿主系统需求 排版约定 本书结构 勘误表 第一部分 介绍 第一章 介绍 如何构建 LFS 系统 上次发布以来的更新 更新日志 资源 帮助 第二部分 准备构建 第二章 准备新分区 简介 创建新分区 在分区上创建文件系统 挂载新分区 设置 $LFS 变量 第三章 软件包与补丁 简介 所有软件包 所需补丁 第四章 最后的准备 简介 创建 $LFS/tools 目录 添加 LFS 用户 设置环境 关于 SBU 关于测试套件 第五章 构建临时文件系统 简介 工具链技术备注 通用编译指南 Binutils-2.25 - 第一遍 GCC-4.9.2 - 第一遍 Linux-3.19 API 头文件 Glibc-2.21 Libstdc++-4.9.2 Binutils-2.25 - 第二遍 GCC-4.9.2 - 第二遍 Tcl-8.6.3 Expect-5.45 DejaGNU-1.5.2 Check-0.9.14 Ncurses-5.9 Bash-4.3.30 Bzip2-1.0.6 Coreutils-8.23 Diffutils-3.3 File-5.22 Findutils-4.4.2 Gawk-4.1.1 Gettext-0.19.4 Grep-2.21 Gzip-1.6 M4-1.4.17 Make-4.1 Patch-2.7.4 Perl-5.20.2 Sed-4.2.2 Tar-1.28 Texinfo-5.2 Util-linux-2.26 Xz-5.2.0 清理无用内容 改变属主 第三部分 构建 LFS 系统 第六章 安装基本的系统软件 简介 准备虚拟内核文件系统 软件包管理 进入 chroot 环境 创建目录 创建必需的文件和符号链接 Linux-3.19 API Headers Man-pages-3.79 Glibc-2.21 调整工具链 Zlib-1.2.8 File-5.22 Binutils-2.25 GMP-6.0.0a MPFR-3.1.2 MPC-1.0.2 GCC-4.9.2 Bzip2-1.0.6 Pkg-config-0.28 Ncurses-5.9 Attr-2.4.47 Acl-2.2.52 Libcap-2.24 Sed-4.2.2 Shadow-4.2.1 Psmisc-22.21 Procps-ng-3.3.10 E2fsprogs-1.42.12 Coreutils-8.23 Iana-Etc-2.30 M4-1.4.17 Flex-2.5.39 Bison-3.0.4 Grep-2.21 Readline-6.3 Bash-4.3.30 Bc-1.06.95 Libtool-2.4.6 GDBM-1.11 Expat-2.1.0 Inetutils-1.9.2 Perl-5.20.2 XML::Parser-2.44 Autoconf-2.69 Automake-1.15 Diffutils-3.3 Gawk-4.1.1 Findutils-4.4.2 Gettext-0.19.4 Intltool-0.50.2 Gperf-3.0.4 Groff-1.22.3 Xz-5.2.0 GRUB-2.02~beta2 Less-458 Gzip-1.6 IPRoute2-3.19.0 Kbd-2.0.2 Kmod-19 Libpipeline-1.4.0 Make-4.1 Patch-2.7.4 Systemd-219 D-Bus-1.8.16 Util-linux-2.26 Man-DB-2.7.1 Tar-1.28 Texinfo-5.2 Vim-7.4 关于调试符号 再次清理无用内容 清理 第七章 基本系统配置 简介 通用网络配置 LFS 系统中的设备和模块控制 定制设备的符号链接 配置系统时钟 配置 Linux 主控台 配置系统本地化 创建 /etc/inputrc 文件 创建 /etc/shells 文件 使用及配置 Systemd 第八章 让 LFS 系统可引导 简介 创建 /etc/fstab 文件 Linux-3.19 用 GRUB 设置引导过程 第九章 尾声 最后的最后 为 LFS 用户数添砖加瓦 重启系统 接下来做什么呢? 第四部分 附录 附录 A. -

Fast Fourier Transform Using Compiler Auto-Vectorization Master’S Thesis in Complex Adaptive Systems

DF Fast Fourier Transform using compiler auto-vectorization Master’s thesis in Complex Adaptive Systems DUNDAR GOC¨ Department of Mathematical Sciences CHALMERS UNIVERSITY OF TECHNOLOGY Gothenburg, Sweden 2019 Master’s thesis 2019 Fast Fourier Transform using compiler auto-vectorization DUNDAR GOC¨ DF Department of Mathematical Sciences Chalmers University of Technology Gothenburg, Sweden 2019 Fast Fourier Transform using compiler auto-vectorization DUNDAR GOC¨ c DUNDAR GOC,¨ 2019. Supervisor: Martin Raum, Department of Mathematical Sciences at Chalmers University of Technology Examiner: Martin Raum, Department of Mathematical Sciences at Chalmers University of Technology Master’s Thesis 2019 Department of Mathematical Sciences Chalmers University of Technology SE-412 96 Gothenburg Telephone +46 31 772 1000 Cover: An illustration of the FFT algorithm structure. Typeset in LATEX Digital Gothenburg, Sweden 2019 iv Fast Fourier Transform using compiler auto-vectorization DUNDAR GOC¨ Department of Mathematical Sciences Chalmers University of Technology Abstract The purpose of this thesis is to develop a fast Fourier transformation algorithm written in C with the use of GCC (GNU Compiler Collection) auto-vectorization in the multiplicative group of integers modulo n, where n is a word sized odd integer. The specific Fourier transform algorithm employed is the cache-friendly version of the Truncated Fourier Transform. The algorithm was implemented by modifying an existing library for modular arithmetic written in C called zn poly. The majority of the thesis work consisted of changing the code in a way to make integration into FLINT possible. FLINT is a versatile library, written in C, aimed at fast arithmetic of different kinds. The results show that auto-vectorization is possible with a potential speedup factor of 3. -

Introduction to the GNU MPFR Library

Introduction to the GNU MPFR Library Vincent LEFÈVRE AriC, INRIA Grenoble – Rhône-Alpes / LIP, ENS-Lyon GdT AriC, 2014-04-22 [gdt201404.tex 68929 2014-04-22 10:17:19Z vinc17/xvii] Outline Introduction Presentation of GNU MPFR, History Why MPFR? MPFR Basics Output Functions Test of MPFR (make check) Applications Timings The Future – Work in Progress Conclusion [gdt201404.tex 68929 2014-04-22 10:17:19Z vinc17/xvii] Vincent LEFÈVRE (INRIA / LIP, ENS-Lyon) Introduction to the GNU MPFR Library GdT AriC, 2014-04-22 2 / 56 Introduction: Arbitrary Precision Arbitrary precision: the ability to perform calculations on numbers whose “size” can be arbitrarily large, with environmental limits (available memory, size of basic data types, etc.). [gdt201404.tex 68929 2014-04-22 10:17:19Z vinc17/xvii] Vincent LEFÈVRE (INRIA / LIP, ENS-Lyon) Introduction to the GNU MPFR Library GdT AriC, 2014-04-22 3 / 56 Introduction: Arbitrary Precision Arbitrary precision: the ability to perform calculations on numbers whose “size” can be arbitrarily large, with environmental limits (available memory, size of basic data types, etc.). Different kinds of (arbitrary-precision) arithmetics: integer (e.g., GMP/mpz), rational (e.g., GMP/mpq), fixed-point, floating-point, etc. [gdt201404.tex 68929 2014-04-22 10:17:19Z vinc17/xvii] Vincent LEFÈVRE (INRIA / LIP, ENS-Lyon) Introduction to the GNU MPFR Library GdT AriC, 2014-04-22 3 / 56 Introduction: Arbitrary Precision Arbitrary precision: the ability to perform calculations on numbers whose “size” can be arbitrarily large, with environmental limits (available memory, size of basic data types, etc.). Different kinds of (arbitrary-precision) arithmetics: integer (e.g., GMP/mpz), rational (e.g., GMP/mpq), fixed-point, floating-point, etc. -

Introduction to the GNU MPFR Library

Introduction to the GNU MPFR Library Vincent LEFÈVRE INRIA Grenoble – Rhône-Alpes / LIP, ENS-Lyon GNU Hackers Meeting, Paris, 2011-08-28 [ghm2011.tex 45903 2011-08-27 21:28:35Z vinc17/xvii] Outline Presentation, History MPFR Basics Output Functions Test of MPFR (make check) Applications Timings Conclusion [ghm2011.tex 45903 2011-08-27 21:28:35Z vinc17/xvii] Vincent LEFÈVRE (INRIA / LIP, ENS-Lyon) Introduction to the GNU MPFR Library GNU Hackers Meeting 2011, Paris 2 / 40 GNU MPFR in a Few Words GNU MPFR is an efficient multiple-precision floating-point library with well-defined semantics (copying the good ideas from the IEEE-754 standard), in particular correct rounding. 80 mathematical functions, in addition to utility functions (assignments, conversions. ). Special data (Not a Number, infinities, signed zeros). Originally developed at LORIA, INRIA Nancy – Grand Est. Since the end of 2006, a joint project between the Arénaire (LIP, ENS-Lyon) and CACAO (now Caramel) INRIA project-teams. Written in C (ISO + optional extensions); based on GMP (mpn/mpz). Licence: LGPL (version 3 or later, for GNU MPFR 3). [ghm2011.tex 45903 2011-08-27 21:28:35Z vinc17/xvii] Vincent LEFÈVRE (INRIA / LIP, ENS-Lyon) Introduction to the GNU MPFR Library GNU Hackers Meeting 2011, Paris 3 / 40 MPFR History 1998–2000 ARC INRIA Fiable. November 1998 Foundation text (Guillaume Hanrot, Jean-Michel Muller, Joris van der Hoeven, Paul Zimmermann). Early 1999 First lines of code (G. Hanrot, P. Zimmermann). 9 June 1999 First commit into CVS (later, SVN). June-July 1999 Sylvie Boldo (AGM, log). 2000–2002 ARC AOC (Arithmétique des Ordinateurs Certifiée). -

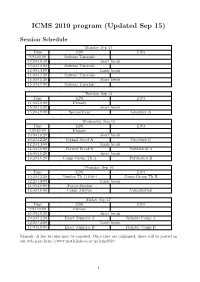

ICMS 2010 Program (Updated Sep 15)

ICMS 2010 program (Updated Sep 15) Session Schedule Monday, Sep 13 Time Z201 Z103 9:00-10:20 Sofware Turorials 10:20-10:40 short break 10:40-12:00 Sofware Turorials 12:00-14:00 lunch break 14:00-15:20 Sofware Turorials 15:20-15:40 short break 15:40-17:00 Sofware Turorials Tuesday, Sep 14 Time Z201 Z103 14:00-15:00 Plenary 15:00-15:20 short break 15:20-18:00 Special Func Groebner A Wednesday, Sep 15 Time Z201 Z103 9:00-10:00 Plenary 10:00-10:20 short break 10:20-12:20 Formal Proof A Groebner B 12:20-14:00 lunch break 14:00-16:00 Formal Proof B Polyhedral A 16:00-16:20 short break 16:20-18:20 Comp Group Th A Polyhedral B Thursday, Sep 16 Time Z201 Z103 10:20-12:20 Number Th (11:00-) Comp Group Th B 12:20-14:00 lunch break 14:00-16:00 Poster Session 16:00-18:00 Comp Algebra Visualization Friday, Sep 17 Time Z201 Z103 9:00-10:00 Plenary 10:00-10:20 short break 10:20-12:20 Exact Numeric A Reliable Comp A 12:20-14:00 lunch break 14:00-16:00 Exact Numeric B Reliable Comp B Remark: A few lectures may be canceled. Once they are con¯rmed, these will be posted on our web page http://www.math.kobe-u.ac.jp/icms2010. 1 Monday, September 13 Software Turorials (Z201; 9:00-17:00) 9:00-9:40 Hamada, Tatsuyoshi: ICMS-DVD 9:40-10:20 Takayama, Nobuki: Risa/Asir 10:20-10:40 short break 10:40-11:20 Witty, Carl: The Sage mathematics software system 11:20-12:00 Tanaka, Satoru: NZMATH | a practical number theoretic tool 12:00-14:00 lunch break 14:00-14:40 van der Hoeven, Joris: GNU TEXmacs 14:40-15:20 Pilarczyk, Pawel: CHomP - Computational Homology Project 15:20-15:40 -

Optimized Binary64 and Binary128 Arithmetic with GNU MPFR Vincent Lefèvre, Paul Zimmermann

Optimized Binary64 and Binary128 Arithmetic with GNU MPFR Vincent Lefèvre, Paul Zimmermann To cite this version: Vincent Lefèvre, Paul Zimmermann. Optimized Binary64 and Binary128 Arithmetic with GNU MPFR. 24th IEEE Symposium on Computer Arithmetic (ARITH 24), Jul 2017, London, United Kingdom. pp.18-26, 10.1109/ARITH.2017.28. hal-01502326 HAL Id: hal-01502326 https://hal.inria.fr/hal-01502326 Submitted on 5 Apr 2017 HAL is a multi-disciplinary open access L’archive ouverte pluridisciplinaire HAL, est archive for the deposit and dissemination of sci- destinée au dépôt et à la diffusion de documents entific research documents, whether they are pub- scientifiques de niveau recherche, publiés ou non, lished or not. The documents may come from émanant des établissements d’enseignement et de teaching and research institutions in France or recherche français ou étrangers, des laboratoires abroad, or from public or private research centers. publics ou privés. 1 Optimized Binary64 and Binary128 Arithmetic with GNU MPFR Vincent Lefèvre and Paul Zimmermann Abstract—We describe algorithms used to optimize the GNU then denote β the radix, assumed to be a power of two. We call MPFR library when the operands fit into one or two words. left shift (resp. right shift) a shift towards the most (resp. least) On modern processors, a correctly rounded addition of two significant bits. We use a few shorthands: HIGHBIT denotes quadruple precision numbers is now performed in 22 cycles, a 63 subtraction in 24 cycles, a multiplication in 32 cycles, a division in the limb 2 ; ONE denotes the limb 1; GMP_NUMB_BITS, 64 cycles, and a square root in 69 cycles. -

CAMPARY Cuda Multiple Precision Arithmetic Library

CAMPARY CudA Multiple Precision ARithmetic librarY Valentina Popescu Joined work with: Mioara Joldes, Jean-Michel Muller March 2015 AM AR CudA Multiple PrecisionP ARithmetic librarY in 2014 the video Psy - Gangnam Style touched the limit update to 64-bit with a limit of 9; 223; 372; 036; 854; 775; 808 (more than 9 quintillion) ∗ statement: We never thought a video would be watched in numbers greater than a 32-bit integer... but that was before we met Psy. When do we need more precision? Youtube view counter: 32-bit integer register (limit 2; 147; 483; 647) ∗courtesy of http://www.reddit.com/r/ProgrammerHumor/ 1 / 21 update to 64-bit with a limit of 9; 223; 372; 036; 854; 775; 808 (more than 9 quintillion) statement: We never thought a video would be watched in numbers greater than a 32-bit integer... but that was before we met Psy. When do we need more precision? Youtube view counter: 32-bit integer register (limit 2; 147; 483; 647) in 2014 the video Psy - Gangnam Style touched the limit ∗ ∗courtesy of http://www.reddit.com/r/ProgrammerHumor/ 1 / 21 When do we need more precision? Youtube view counter: 32-bit integer register (limit 2; 147; 483; 647) in 2014 the video Psy - Gangnam Style touched the limit update to 64-bit with a limit of 9; 223; 372; 036; 854; 775; 808 (more than 9 quintillion) ∗ statement: We never thought a video would be watched in numbers greater than a 32-bit integer... but that was before we met Psy. ∗courtesy of http://www.reddit.com/r/ProgrammerHumor/ 1 / 21 Need more precision {few hundred bits{ than standard available Need massive parallel computations: {high performance computing (HPC){ More serious things! Dynamical systems: 0.4 bifurcation analysis, 0.2 0 compute periodic orbits (finding sinks x2 in the H´enonmap, iterating the -0.2 Lorenz attractor), -0.4 long term stability of the solar system. -

Reliable Computing with GNU MPFR

Reliable computing with GNU MPFR Paul Zimmermann LORIA/INRIA Nancy-Grand Est, Equipe´ CARAMEL - b^atiment A, 615 rue du jardin botanique, F-54603 Villers-l`es-NancyCedex Abstract. This article presents a few applications where reliable com- putations are obtained using the GNU MPFR library. Keywords: reliable computing, correct rounding, IEEE 754, GNU MPFR The overview of the 3rd International Workshop on Symbolic-Numeric Com- putation (SNC 2009), held in Kyoto in August 2009, says: Algorithms that com- bine ideas from symbolic and numeric computation have been of increasing inter- est over the past decade. The growing demand for speed, accuracy and reliability in mathematical computing has accelerated the process of blurring the distinc- tion between two areas of research that were previously quite separate. [...] Using numeric computations certainly speeds up several symbolic algorithms, however to ensure the correctness of the final result, one should be able to deduce some reliable facts from those numeric computations. Unfortunately, most numerical software tools do not provide any accuracy guarantee to the user. Let us demon- strate this fact on a few examples. Can we deduce the sign of sin(2100) from the following computation with Maple version 13? > evalf(sin(2^100)); 0.4491999480 This problem concerns otherp computer algebra systems, for example Sage 4.4.2, where the constant C = eπ 163 − 262537412640768744 evaluated to different precisions yields different signs: sage: f=exp(pi*sqrt(163))-262537412640768744 sage: numerical_approx(f, digits=15) 448.000000000000 sage: numerical_approx(f, digits=30) -5.96855898038484156131744384766e-13 or Mathematica 6.0, where the same constant C ≈ −0:75·10−12, integrated from 0 to 1, yields a result too large by several orders of magnitude: In[1]:= NIntegrate[Exp[Pi*Sqrt[163]]-262537412640768744,{x,0,1}] Out[1]= -480.