Digital Technical Journal Volume 6, Number 3 VTX Version of the OSF Paper

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Solaris 2.5 Software Developer Kit Introduction

Solaris 2.5 Software Developer Kit Introduction 2550 Garcia Avenue Mountain View, CA 94043 U.S.A. A Sun Microsystems, Inc. Business 1995 Sun Microsystems, Inc. 2550 Garcia Avenue, Mountain View, California 94043-1100 U.S.A. All rights reserved. This product or document is protected by copyright and distributed under licenses restricting its use, copying, distribution and decompilation. No part of this product or document may be reproduced in any form by any means without prior written authorization of Sun and its licensors, if any. Portions of this product may be derived from the UNIX® system, licensed from UNIX Systems Laboratories, Inc., a wholly owned subsidiary of Novell, Inc., and from the Berkeley 4.3 BSD system, licensed from the University of California. Third-party software, including font technology in this product, is protected by copyright and licensed from Sun’s Suppliers. RESTRICTED RIGHTS LEGEND: Use, duplication, or disclosure by the government is subject to restrictions as set forth in subparagraph (c)(1)(ii) of the Rights in Technical Data and Computer Software clause at DFARS 252.227-7013 and FAR 52.227-19. The product described in this manual may be protected by one or more U.S. patents, foreign patents, or pending applications. TRADEMARKS Sun, Sun Microsystems, the Sun logo, SunSoft, the SunSoft logo, Solaris, SunOS, OpenWindows, DeskSet, ONC, ONC+, NFS, SunExpress, ProCompiler, XView, ToolTalk, XGL, XIL, Solaris VISUAL, Solaris PEX, and AnswerBook are trademarks or registered trademarks of Sun Microsystems, Inc. in the United States and other countries. CatalystSM is a service mark of Sun Microsystems, Inc. -

Installing Mac OS X Developer Preview 2

LL0113.Book Page 1 Tuesday, October 12, 1999 1:09 PM Installing Mac OS X Developer Preview 2 LL0113.Book Page 2 Tuesday, October 12, 1999 1:09 PM K Apple Computer, Inc. © 1999 Apple Computer, Inc. All rights reserved. Under the copyright laws, this manual may not be copied, in whole or in part, without the written consent of Apple. Your rights to the software are governed by the accompanying software license agreement. The Apple logo is a trademark of Apple Computer, Inc., registered in the U.S. and other countries. Use of the “keyboard” Apple logo (Option-Shift-K) for commercial purposes without the prior written consent of Apple may constitute trademark infringement and unfair competition in violation of federal and state laws. Every effort has been made to ensure that the information in this manual is accurate. Apple is not responsible for printing or clerical errors. Apple Computer, Inc. 1 Infinite Loop Cupertino, CA 95014-2084 408-996-1010 http://www.apple.com Apple, the Apple logo, AppleTalk, AppleVision, FireWire, Mac, Macintosh, PowerBook, and Power Macintosh are trademarks of Apple Computer, Inc., registered in the U.S. and other countries. Disk First Aid, Finder, iMac, and Power Mac are trademarks of Apple Computer, Inc. PostScript is a trademark of Adobe Systems, Inc. OpenGL is a registered trademark of Silicon Graphics, Inc. Other company and product names mentioned herein are trademarks of their respective companies. Mention of third-party products is for informational purposes only and constitutes neither an endorsement nor a recommendation. Apple assumes no responsibility with regard to the performance or use of these products. -

Object Oriented Perspective on Software System Testing in A

ObjectĆOriented Perspective on Software System Testing in a Distributed Environment A flexible object-oriented test system was developed to deal with the testing challenges imposed by software systems that run in distributed client/server environments. by Mark C. Campbell, David K. Hinds, Ana V. Kapetanakis, David S. Levin, Stephen J. McFarland, David J. Miller, and J. Scott Southworth In recent years software technology has evolved fromexecution, singleĆ results gathering, and report generation (see machine applications to multimachine applications (theFig. 1). Unfortunately, the test harnesses created in these realm of the client and server). Also, objectĆorientedenvironments proĆ were not easily reusable, and when the next gramming techniques have been gaining ground onproject proceĆ reached the test planning stage, the test harness had dural programming languages and practices. Recently,to test be reworked. engineers have focused on techniques for testing objects. The advent of standardized test environments such as TET However, the design and implementation of the test tools (Test Environment Toolkit)* helped to reduce this costly reĆ and code have remained largely procedural in nature. tooling by providing a standard API (application program This paper will describe the object testing framework,interface) which and tool base that test developers can adopt and is a software testing framework designed to meetuse theto testing write standardized tests. However, the difficulty is to needs of new software technologies and take advantageprovide of a standard test harness that is complete but flexible objectĆoriented techniques to maximize flexibility andenough tool to keep pace with changing software technology reuse. and remain viable for the long term. During the development and testing of the initial release of System Software Testing HP ORB Plus, which is an object request broker based on The levels of software testing include unit, integration, and the Object Management Group's CORBA specification (see system testing. -

Unix System Software

Unix system software Unix is a family of multitasking, multiuser computer operating systems that derive from the . modifiable source code for all of these components, in addition to the kernel of an operating system, Unix was a self-contained software n in: C and assembly language. This sets Unix apart from proprietary operating systems like Microsoft Windows. system Solaris 10 integrates the most popular open source software and. UNIX is a multitasking operating system developed at Bell Labs in the early s. It was designed to be a small, flexible system used by programmers. APIs are changing more than just software architectures. From planning through. Today, without UNIX systems, the Internet would come to a screeching halt. Their new organization was called the Open Software Foundation (OSF). By operating system, we mean the suite of programs which make the computer UNIX systems also have a graphical user interface (GUI) similar to Microsoft. Unix also was developed as a self-contained software system, comprising the operating system, development environment, utilities. An operating system is the program that controls all the other parts of a computer system, both the hardware and the software. It allocates the computer's. Unix. In , Kenneth Thompson, Dennis Ritchie, and others at AT&T Bell Labs began developing a small operating system on a little-used PDP By the most simple definition, UNIX is a computer operating system - the base software that controls a computer system and its peripherals. The Difference Between UNIX and LINUX Operating Systems With Examples You may have Unix is a proprietary software operating system. -

Operating RISC: UNIX Standards in the 1990S

Operating RISC: UNIX Standards in the 1990s This case was written by Will Mitchell and Paul Kritikos at the University of Michigan. The case is based on public sources. Some figures are based on case-writers' estimates. We appreciate comments from David Girouard, Robert E. Thomas and Michael Wolff. The note "Product Standards and Competitive Advantage" (Mitchell 1992) supplements this case. The latest International Computerquest Corporation analysis of the market for UNIX- based computers landed on three desks on the same morning. Noel Sharp, founder, chief executive officer, chief engineer and chief bottle washer for the Superbly Quick Architecture Workstation Company (SQAWC) in Mountain View, California hoped to see strong growth predicted for the market for systems designed to help architects improve their designs. In New York, Bo Thomas, senior strategist for the UNIX systems division of A Big Computer Company (ABCC), hoped that general commercial markets for UNIX-based computer systems would show strong growth, but feared that the company's traditional mainframe and mini-computer sales would suffer as a result. Airborne in the middle of the Atlantic, Jean-Helmut Morini-Stokes, senior engineer for the UNIX division of European Electronic National Industry (EENI), immediately looked to see if European companies would finally have an impact on the American market for UNIX-based systems. After looking for analysis concerning their own companies, all three managers checked the outlook for the alliances competing to establish a UNIX operating system standard. Although their companies were alike only in being fictional, the three managers faced the same product standards issues. How could they hasten the adoption of a UNIX standard? The market simply would not grow until computer buyers and application software developers could count on operating system stability. -

The Development of Unix

The development of Unix ∗ By Kasper Edwards Departmnent of Technology and Social Sciences, Technical University of Denmark Building 303 East, room 150, 2800 Lyngby, Denmark. (email: [email protected]) ABSTRACT This paper tells the story of the development of the Unix time sharing system. The development at AT&T and the MULTICS roots are uncovered. The events are presented in chronological order from 1969 to 1995. The Berkeley Software Distribution (BSD) are presented as well as the Free Software Foundation and other. Note: This is a working paper. Short sections of text, no more than two paragraphs may be quoted without permission provided that full credit is given to the source. Copyright © 2000-2001 by Kasper Edwards, all rights reserved. Comments are welcome to [email protected]. ∗ I would like to thank Keld Jørn Simmonsen, Ass. Prof. Jørgen Lindgaard Pedersen of the Technical University of Denmark and Ass. Prof. Jørgen Steensgaard for helpful comments and suggestions on earlier drafts on this paper. I assume full responsibility for any remaining vulnerabilities. Page 1 of 31 1.1 Introduction This thesis about Linux, however Linux is called a Unix clone in the sense that it looks like, and are designed on the same principles as Unix. Both Unix and Linux are POSIX (Portable Operating System Interface) compliant (described in paragraph 3.29). In short POSIX describes the Unix user interface, i.e. commands and their syntax. Some Unix’es are certified POSIX compliant but no one have yet been willing to pay a third party company to test the POSIX compliance of Linux. -

Mac OS X Server

Mac OS X Server Version 10.4 Technology Overview August 2006 Technology Overview 2 Mac OS X Server Contents Page 3 Introduction Page 5 New in Version 10.4 Page 7 Operating System Fundamentals UNIX-Based Foundation 64-Bit Computing Advanced BSD Networking Architecture Robust Security Directory Integration High Availability Page 10 Integrated Management Tools Server Admin Workgroup Manager Page 14 Service Deployment and Administration Open Directory Server File and Print Services Mail Services Web Hosting Enterprise Applications Media Streaming iChat Server Software Update Server NetBoot and NetInstall Networking and VPN Distributed Computing Page 29 Product Details Page 31 Open Source Projects Page 35 Additional Resources Technology Overview 3 Mac OS X Server Introduction Mac OS X Server version 10.4 Tiger gives you everything you need to manage servers in a mixed-platform environment and to con gure, deploy, and manage powerful network services. Featuring the renowned Mac OS X interface, Mac OS X Server streamlines your management tasks with applications and utilities that are robust yet easy to use. Apple’s award-winning server software brings people and data together in innovative ways. Whether you want to empower users with instant messaging and blogging, gain greater control over email, reduce the cost and hassle of updating software, or build your own distributed supercomputer, Mac OS X Server v10.4 has the tools you need. The Universal release of Mac OS X Server runs on both Intel- and PowerPC-based The power and simplicity of Mac OS X Server are a re ection of Apple’s operating sys- Mac desktop and Xserve systems. -

UNIX History Page 1 Tuesday, December 10, 2002 7:02 PM

UNIX History Page 1 Tuesday, December 10, 2002 7:02 PM CHAPTER 1 UNIX Evolution and Standardization This chapter introduces UNIX from a historical perspective, showing how the various UNIX versions have evolved over the years since the very first implementation in 1969 to the present day. The chapter also traces the history of the different attempts at standardization that have produced widely adopted standards such as POSIX and the Single UNIX Specification. The material presented here is not intended to document all of the UNIX variants, but rather describes the early UNIX implementations along with those companies and bodies that have had a major impact on the direction and evolution of UNIX. A Brief Walk through Time There are numerous events in the computer industry that have occurred since UNIX started life as a small project in Bell Labs in 1969. UNIX history has been largely influenced by Bell Labs’ Research Editions of UNIX, AT&T’s System V UNIX, Berkeley’s Software Distribution (BSD), and Sun Microsystems’ SunOS and Solaris operating systems. The following list shows the major events that have happened throughout the history of UNIX. Later sections describe some of these events in more detail. 1 UNIX History Page 2 Tuesday, December 10, 2002 7:02 PM 2 UNIX Filesystems—Evolution, Design, and Implementation 1969. Development on UNIX starts in AT&T’s Bell Labs. 1971. 1st Edition UNIX is released. 1973. 4th Edition UNIX is released. This is the first version of UNIX that had the kernel written in C. 1974. Ken Thompson and Dennis Ritchie publish their classic paper, “The UNIX Timesharing System” [RITC74]. -

History of UNIX 1969 Created at AT&T Bell Labs, Massachusetts Institute of Technology Researchers: Ken Thompson, Dennis Ritchie, M

History of UNIX 1969 Created at AT&T Bell Labs, Massachusetts Institute of Technology Researchers: Ken Thompson, Dennis Ritchie, M. D. Mcllroy, Joe Ossana Based on experimental remote-access, time-shared operating system, Multics 1970s Unix was rewritten in the C programming language in 1972. First Unix development support group formed in Bell Labs in 1973. UNIX philosophy emerges. AT&T began licensing Unix to universities, commercial companies and the US government. Bill Joy produces first Berkeley Software Distribution(BSD) of Unix in 1978 1980s In 1983, AT&T began to commercialize Unix. BSD Unix became popular as alternative to AT&T’s Unix System V. Standardization of Unix attempts begins with the X/Open consortium. Start of the “UNIX Wars”. Open Software Foundation and UNIX International founded in 1988. 1990s In 1993, “Unix Wars” end with merger of Unix International and Open Software Foundation. Novell buys UNIX System Laboratories from AT&T Also in 1993, Novell transfers Unix trademark and rights to X/Open Consortium X/Open Consortium merges with Open Software Foundation to form the Open Group in 1996. 1990s (cont.) Novell sold admin and support business to Santa Cruz Operation along with development rights in 1995. Apple Computers acquired BSD based operating system, NEXTSTEP in 1997. Renamed Darwin. 2000s Dot Com bubble(2001-2003) burst triggered significant consolidation of versions of Unix. Santa Cruz Operations started legal action against Linux in 2003 resulting in SCO vs Novell lawsuit. On August 10, 2007, Novell won majority of lawsuit. SCO appealed. Modern Uses of Unix Unix trademark defined by the Single UNIX Specification. -

Open Source Used in Asyncos 11.1 for Cisco Email Security Appliances

Open Source Used In AsyncOS 11.1 for Cisco Email Security Appliances Cisco Systems, Inc. www.cisco.com Cisco has more than 200 offices worldwide. Addresses, phone numbers, and fax numbers are listed on the Cisco website at www.cisco.com/go/offices. Text Part Number: 78EE117C99-161543810 Open Source Used In AsyncOS 11.1 for Cisco Email Security Appliances 1 This document contains licenses and notices for open source software used in this product. With respect to the free/open source software listed in this document, if you have any questions or wish to receive a copy of any source code to which you may be entitled under the applicable free/open source license(s) (such as the GNU Lesser/General Public License), please contact us at [email protected]. In your requests please include the following reference number 78EE117C99-161543810 Contents 1.1 AnyEvent 5.34 1.1.1 Available under license 1.2 Apache Commons Lang 2.0 1.2.1 Notifications 1.2.2 Available under license 1.3 Apache Commons: Logging 1.0.3 1.3.1 Notifications 1.3.2 Available under license 1.4 Aquarium 2.2.1 1.4.1 Available under license 1.5 Archive::Zip 1.30 1.5.1 Available under license 1.6 B::Deobfuscate 0.20 1.6.1 Available under license 1.7 B::Deobfuscate 0.10 1.7.1 Available under license 1.8 B::Keywords 0.06 1.8.1 Available under license 1.9 B::Keywords 1.10 1.9.1 Available under license 1.10 berkeleydb 1.85/1.86 1.11 BerkeleyDB-perl 0.49 1.11.1 Available under license 1.12 binutils 2.20.51.0.2 :5.44.el6 1.12.1 Available under license 1.13 Boost 1.41 -

Mac OS X for UNIX Users the Power of UNIX and the Simplicity of Macintosh: with Mac OS X, You Can Have the Best of Both Worlds

Mac OS X for UNIX Users The power of UNIX and the simplicity of Macintosh: With Mac OS X, you can have the best of both worlds. Key Features Apple’s Mac OS X operating system combines a robust and open FreeBSD foundation with the richness and usability of a Macintosh interface, bringing UNIX technology to Standards-based UNIX foundation the mass market for the first time. There are over 5 million Mac OS X users, including •Based on FreeBSD 4.4 and Mach 3.0 • Support for POSIX and System V APIs scientists, animators, developers, and system administrators, making Apple the largest • High-performance math libraries including vendor of UNIX-based systems. Mac OS X is the first UNIX-based environment to run vector/DSP support Microsoft Office, Adobe Photoshop, and thousands of other consumer applications •X11 window server beta release available natively—all side by side with traditional command-line and X11 applications. It is for UNIX GUIs also the best UNIX-based environment for portable computers, with full power man- •Core operating system available as Open agement and mobility support for Apple’s award-winning PowerBook G4. Source via the Darwin project Standards-based networking •TCP/IP-based networking architecture •Interoperability with NFS, AFP, and Windows (SMB/CIFS) file servers •Powerful web server (Apache) •Open Directory, an LDAP-compatible directory services architecture •Kerberos, OpenSSH, personal firewall (ipfw), and other network security technologies Familiar UNIX environment •Terminal emulation with full xterm function- -

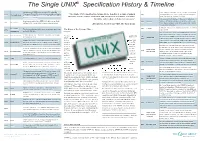

The Single UNIX® Ingle UNIX Specification History & Timeline

The Single UNIX® Specifi cationcation HistoryHistory && TTimelineimeline The history of UNIX starts back in 1969, when Ken UNIX System Laboratories (USL) becomes a company Thompson, Dennis Ritchie and others started working on 1969 The Beginning “The Single UNIX Specifi cation brings all the benefi ts of a single standard 1991 - majority-owned by AT&T. Linus Torvalds commences the “little-used PDP-7 in a corner” at Bell Labs and what operating system, namely application and information portability, scalability, Linux development. Solaris 1.0 debuts. was to become UNIX. fl exibility and freedom of choice for customers” USL releases UNIX System V Release 4.2 (Destiny). It had an assembler for a PDP-11/20, fi le system, fork(), October - XPG4 Brand launched by X/Open. December 1992 SVR4.2 1971 First Edition roff and ed. It was used for text processing of patent Allen Brown, President and CEO, The Open Group 22nd - Novell announces intent to acquire USL. Solaris documents. 2.0 and HP-UX 9.0 ship. 4.4BSD the fi nal release from Berkeley. June 16 - Novell First UNIX The fi rst installations had 3 users, no memory protection, 1993 4.4BSD 1972 The Story of the License Plate... acquires USL Installations and a 500 KB disk. Novell decides to get out of the UNIX business. Rather It was rewritten in C. This made it portable and changed than sell the business as a single entity, Novell transfers 1973 Fourth Edition In 1983 Digital Equipment Corporation the middle of it, Late the rights to the UNIX trademark and the specifi cation the history of OS’s.