Structural Credit Risk Models in Banking with Applications to the Financial Crisis

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Smart Financing: the Value of Venture Debt Explained

TRINITY CAPITAL INVESTMENT SMART FINANCING: THE VALUE OF VENTURE DEBT EXPLAINED Alex Erhart, David Erhart and Vibhor Garg ABSTRACT This paper conveys the value of venture debt to startup companies and their venture capital investors. Venture debt is shown to be a smart financing option that complements venture capital and provides significant value to both common and preferred shareholders in a startup company. The paper utilizes mathematical models based on industry benchmarks for the cash burn J-curve and milestone-based valuation to illustrate the financing needs of a startup company and the impact of equity dilution. The value of venture debt is further explained in three primary examples that demonstrate the ideal situations and timing for debt financing. The paper concludes with two examples that quantify the value of venture debt by calculating the percentage of ownership saved for both entrepreneurs and investors by combining venture debt with venture capital. INTRODUCTION TO VENTURE DEBT Venture debt, also known as venture lending or venture leasing, is a type of debt financing provided to venture capital-backed companies. Unlike traditional bank lending, venture debt is available to startup companies without positive cash flow or significant assets to use as collateral.[1] There are three primary types of venture debt: 2. Accounts receivable financing allows revenue-generating startup companies to borrow against their accounts receivable items (typically 80-85%). 3. Equipment financing is typically structured as a lease and is used for the purchase of equipment Venture debt is a subset of the venture capital industry and is utilized worldwide.[2] It is generally accepted that for every four to seven venture equity dollars invested in a company, one dollar is (or could be) financed in venture debt.[3, 4] Therefore, a startup company should be able to access roughly 14%-25% of their invested capital in venture debt. -

SCORE Visa Financial Management Workbook

Welcome to Financial Management for Small Business EVERY BUSINESS DECISION IS A FINANCIAL DECISION Do you wish that your business had booming sales, substantial customer demand and rapid growth? Be careful what you wish for. Many small business owners are unprepared for success. If you fail to forecast and prepare for growth, you may be unable to bridge the ever-widening financial gap between the money coming in and the money going out. In other words, if you fail to manage your cash flow, good news often turns to bad. At the same time, your business may be suddenly pummeled by a change in the economic climate, or by a string of bad luck. If you’re not prepared with a “what if” financial plan for emergencies, even a temporary downturn can become a business-ending tailspin. YOU CAN DO IT! That’s why every decision you make in your business, whether it’s creating a website, investing in classified ads, or hiring an employee, has a financial impact. And each business decision affects your cash flow: the amount of money that comes in and goes out of your business. We’ll help you with your decision-making with this online primer. Throughout this guide, you’ll find case studies, examples, and expert guidance on every aspect of small business finances. You’ll see that our goal is the same as yours: to make your business financially successful. FOUR KEYS TO SUCCESS Maximize your income and the speed with which you get paid. Throughout this site, we offer tips and checklists for maximizing income. -

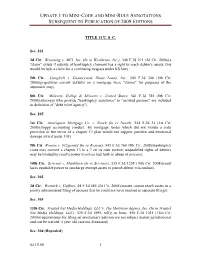

Update 1 to Mini-Code and Mini-Rule Annotations Subsequent to Publication of 2009 Editions

UPDATE 1 TO MINI-CODE AND MINI-RULE ANNOTATIONS SUBSEQUENT TO PUBLICATION OF 2009 EDITIONS TITLE 11 U.S.C. Sec. 101 2d Cir. Browning v. MCI, Inc. (In re Worldcom, Inc.), 546 F.3d 211 (2d Cir. 2008) (a "claim" exists if outside of bankruptcy claimant has a right to reach debtor's assets; this would include a claim for a continuing trespass under KS law). 5th Cir. Campbell v. Countrywide Home Loans, Inc., 545 F.3d 348 (5th Cir. 2008) (prepetition escrow defaults on a mortgage were "claims" for purposes of the automatic stay). 8th Cir. Milavetz, Gallop & Milavetz v. United States, 541 F.3d 785 (8th Cir. 2008) (attorneys who provide "bankruptcy assistance" to "assisted persons" are included in definition of "debt relief agency"). Sec. 105 1st Cir. Ameriquest Mortgage Co. v. Nosek (In re Nosek), 544 F.3d 34 (1st Cir. 2008) (sloppy accounting conduct by mortgage lender which did not violate a code provision or the terms of a chapter 13 plan would not support punitive and emotional damage award under 105). 9th Cir. Rosson v. Fitzgerald (In re Rosson), 545 F.3d 764 (9th Cir. 2008) (bankruptcy court may convert a chapter 13 to a 7 on its own motion; unqualified rights of debtors may be limited by court's power to police bad faith or abuse of process). 10th Cir. Scrivner v. Mashburn (In re Scrivner), 535 F.3d 1258 (10th Cir. 2008) (court lacks equitable power to surcharge exempt assets to punish debtor misconduct). Sec. 302 2d Cir. Wornick v. Gaffney, 44 F.3d 486 (2d Cir. -

Financial Management (203)

MBA (Business Economics) II Semester Paper: Financial Management (203) UNIT II Topic: Over-Capitalisation and Under-Capitalisation Meaning of Over-Capitalisation Over-capitalisation occurs when a company has issued more debt and equity than its assets are worth. The market value of the company is less than the total capitalized value of the company. An over-capitalised company might be paying more in interest and dividend payments than it can sustain in the long term. The heavy debt burden and associated interest payments might be a strain on profits and reduce the amount of retained funds the company has to invest in research and development or other projects. To escape the situation, the company may need to reduce its debt load or buy back shares to reduce the company's dividend payments. Restructuring the company's capital is a solution to this problem. The phrase ‘over-capitalisation’ is often confused with an abundance of capital. In actual practice, over-capitalised concerns have been found short of funds. Strictly speaking, over-capitalisation is a relative term used to denote that the firm in question is not earning a reasonable income on its funds. According to Bonneville, Dewey and Kelly, “When a business is unable to earn a fair rate of return on its outstanding securities, it is over-capitalized.” Likewise, Gerstenberg opines that “a corporation is over-capitalized when its earnings are not large enough to yield a fair return on the amount of stocks and bonds that have been issued.” Thus, over-capitalisation refers to that state of affairs where earnings of the corporation do not justify the amount of capital invested in the business. -

Private Equity Value Creation in Finance: Evidence from Life Insurance

University of Pennsylvania Carey Law School Penn Law: Legal Scholarship Repository Faculty Scholarship at Penn Law 2-14-2020 Private Equity Value Creation in Finance: Evidence from Life Insurance Divya Kirti International Monetary Fund Natasha Sarin University of Pennsylvania Carey Law School Follow this and additional works at: https://scholarship.law.upenn.edu/faculty_scholarship Part of the Banking and Finance Law Commons, Corporate Finance Commons, Finance Commons, Finance and Financial Management Commons, Insurance Commons, Insurance Law Commons, and the Law and Economics Commons Repository Citation Kirti, Divya and Sarin, Natasha, "Private Equity Value Creation in Finance: Evidence from Life Insurance" (2020). Faculty Scholarship at Penn Law. 2154. https://scholarship.law.upenn.edu/faculty_scholarship/2154 This Article is brought to you for free and open access by Penn Law: Legal Scholarship Repository. It has been accepted for inclusion in Faculty Scholarship at Penn Law by an authorized administrator of Penn Law: Legal Scholarship Repository. For more information, please contact [email protected]. Private Equity Value Creation in Finance: Evidence from Life Insurance Divya Kirti (International Monetary Fund) and Natasha Sarin (University of Pennsylvania Law School and Wharton School of Business) January 13, 2020 Abstract This paper studies how private equity buyouts create value in the insurance industry, where decentralized regulation creates opportunities for aggressive tax and capital management. Using novel data on 57 large private equity deals in the insurance industry, we show that buyouts create value by decreasing insurers' tax liabilities; and by reaching-for-yield: PE firms tilt their subsidiaries' bond portfolios toward junk bonds while avoiding corresponding capital charges. -

In the United States Bankruptcy Court for the District of Delaware

Case 19-10844 Doc 3 Filed 04/15/19 Page 1 of 69 IN THE UNITED STATES BANKRUPTCY COURT FOR THE DISTRICT OF DELAWARE In re Chapter 11 Achaogen, Inc., Case No. 19-10844 (__) Debtor.1 DECLARATION OF BLAKE WISE IN SUPPORT OF FIRST DAY RELIEF I, Blake Wise, hereby declare under penalty of perjury, pursuant to section 1746 of title 28 of the United States Code, as follows: 1. I have served as the Chief Executive Officer of Achaogen, Inc. (the “Debtor” or “Achaogen”) since January 2018. Prior to serving as CEO, I was the Chief Operating Officer of Achaogen from October 2015 to December 2017 and was also President of Achaogen from February 2017 to December 2017. In such capacities, I am familiar with the Debtor’s day-to-day operations, business, and financial affairs. Previously, I spent 12 years at Genentech beginning in marketing and sales and spent the last 2 years as the Vice President of BioOncology. I also currently serve as a member of the Board of Directors of Calithera Biosciences, a publicly traded bioscience company focused on discovering and developing small molecule drugs that slow tumor growth. I hold a Bachelor’s Degree in Business Economics from the University of California, Santa Barbara and a Master of Business Administration from the University of California, Berkeley – Walter A. Haas School of Business. 1 The last four digits of the Debtor’s federal tax identification number are 3693. The Debtor’s mailing address for purposes of this Chapter 11 Case is 1 Tower Place, Suite 400, South San Francisco, CA 94080. -

Getting Beyond Breakeven a Review of Capitalization Needs and Challenges of Philadelphia-Area Arts and Culture Organizations

Getting Beyond Breakeven A Review of Capitalization Needs and Challenges of Philadelphia-Area Arts and Culture Organizations Commissioned by The Pew Charitable Trusts and the William Penn Foundation Author Susan Nelson, Principal, TDC Contributors Allison Crump, Senior Associate, TDC Juliana Koo, Senior Associate, TDC This report was made possible by The Pew Charitable Trusts and the William Penn Foundation. It may be downloaded at www.tdcorp.org/pubs. The Pew Charitable Trusts is driven by the power of knowledge to solve today’s most challenging problems. Pew applies a rigorous, analytical approach to improve public policy, inform the public, and stimulate civic life. We partner with a diverse range of donors, public and private organizations, and concerned citizens who share our commitment to fact-based solutions and goal-driven investments to improve society. Learn more about Pew at www.pewtrusts.org. The William Penn Foundation, founded in 1945 by Otto and Phoebe Haas, is dedicated to improving the quality of life in the Greater Philadelphia region through efforts that foster rich cultural expression, strengthen children’s futures, and deepen connections to nature and community. In partnership with others, the Foundation works to advance a vital, just, and caring community. Learn more about the Foundation online at www.williampennfoundation.org. TDC is one of the nation’s oldest and largest providers of management consulting services to the nonprofit sector. For over 40 years, TDC has worked exclusively with nonprofit, governmental, educational and philanthropic organizations, providing them with the business and management tools critical to carrying out their missions effectively. Learn more about TDC at www.tdcorp.org. -

Taking the Risk out of Systemic Risk Measurement by Levent Guntay and Paul Kupiec, August 2014

Taking the risk out of systemic risk measurement by Levent Guntay and Paul Kupiec, August 2014 ABSTRACT Conditional value at risk (CoVaR) and marginal expected shortfall (MES) have been proposed as measures of systemic risk. Some argue these statistics should be used to impose a “systemic risk tax” on financial institutions. These recommendations are premature because CoVaR and MES are ad hoc measures that: (1) eschew statistical inference; (2) confound systemic and systematic risk; and, (3) poorly measure asymptotic tail dependence in stock returns. We introduce a null hypothesis to separate systemic from systematic risk and construct hypothesis tests. These tests are applied to daily stock returns data for over 3500 firms during 2006-2007. CoVaR (MES) tests identify almost 500 (1000) firms as systemically important. Both tests identify many more real-side firms than financial firms, and they often disagree about which firms are systemic. Analysis of hypotheses tests’ performance for nested alternative distributions finds: (1) skewness in returns can cause false test rejections; (2) even when asymptotic tail dependence is very strong, CoVaR and MES may not detect systemic risk. Our overall conclusion is that CoVaR and MES statistics are unreliable measures of systemic risk. Key Words: systemic risk, conditional value at risk, CoVaR, marginal expected shortfall, MES, systemically important financial institutions, SIFIs. The authors are, respectively, Senior Financial Economist, Federal Deposit Insurance Corporation and Resident Scholar, The American Enterprise Institute. The views in this paper are those of the authors alone. They do not represent the official views of the American Enterprise Institute or the Federal Deposit Insurance Corporation. -

Entered Tawana C

U.S. BANKRUPTCY COURT NORTHERN DISTRICT OF TEXAS ENTERED TAWANA C. MARSHALL, CLERK THE DATE OF ENTRY IS ON THE COURT'S DOCKET The following constitutes the ruling of the court and has the force and effect therein described. United States Bankruptcy Judge Signed March 28, 2013 IN THE UNITED STATES BANKRUPTCY COURT FOR THE NORTHERN DISTRICT OF TEXAS DALLAS DIVISION IN RE: EQUIPMENT EQUITY HOLDINGS, INC., Debtor. CASE NO. 09-38306-sgj-7 (Chapter 7) HAROLD GERNSBACHER, ANDREW SCRUGGS, JAMES SCRUGGS, LEE SCRUGGS, WILLIAM SCRUGGS, ROBERT ZINTGRAFF, DAVID CAMPBELL, REED JACKSON, CYNTHIA JACKSON, LYNDA CAMPBELL, JEFF GRANDY, JEFFREY VREELAND, WALTER ESKURI, ROGER VANG, REUBEN PALM, JAMES PALM, RICHARD PALM, MARK PALM, MICHAEL PALM, THOMAS PALM, SHANNON PALM, SUSAN PALM, MAUREEN PALM, PAMELA PALM, KRISTEN PALM, GENE LEE, STEPHEN HOWZE and STEPHEN REYNOLDS, Plaintiffs, v. DAVID CAMPBELL, S. REED JACKSON, ROBERT N. ZINTGRAFF, ANDREW SCRUGGS, WALTER ESKURI, HAROLD GERNSBACHER, GLENCOE GROWTH CLOSELY-HELD BUSINESS FUND, L.P., STOCKWELL FUND, L.P., MASSACHUSETTS MUTUAL LIFE INSURANCE COMPANY, MASSMUTUAL HIGH YIELD PARTNERS II LLC, GLENCOE CAPITAL PARTNERS II L.P., THOMAS M. GARVIN, GLENCOE CAPITAL PARTNERS II, THOMAS L. BINDLEY REVOCABLE TRUST, KEVIN BRUCE, ED POORE, and BILL AISENBERG, Defendants. Adversary No. 11-03362-sgj MEMORANDUM OPINION IN SUPPORT OF JUDGMENT: -

Distressed Mergers and Acquisitions

Wachtell, Lipton, Rosen & Katz DISTRESSED MERGERS AND ACQUISITIONS 2013 Summary of Contents
Introduction
I. Out-of-Court Workouts of Troubled Companies
A. Initial Responses to Distress
B. Out-of-Court Transactions
II. Prepackaged and Pre-Negotiated Bankruptcy Plans
A. Prepackaged Plans
B. Pre-Negotiated Plans
C. Pre-Negotiated Section 363 Sales
III. Acquisitions Through Bankruptcy
A. Acquisitions Through a Section 363 Auction
B. Acquisitions Through the Conventional Plan Process
IV. Acquisition and Trading in Claims of Distressed Companies
A. What Claims Should an Investor Seeking Control Buy?
B. What Rights Does the Claim Purchaser Obtain?
C. Acquisition of Claims Confers Standing to Be Heard in a Chapter 11 Case -

Piercing the Corporate Veil—The Undercapitalization Factor

Chicago-Kent Law Review Volume 59 Issue 1 Article 2 December 1982 Piercing the Corporate Veil—The Undercapitalization Factor Harvey Gelb University of Wyoming College of Law Follow this and additional works at: https://scholarship.kentlaw.iit.edu/cklawreview Part of the Business Organizations Law Commons, and the Legal Remedies Commons Recommended Citation Harvey Gelb, Piercing the Corporate Veil—The Undercapitalization Factor, 59 Chi.-Kent L. Rev. 1 (1982). Available at: https://scholarship.kentlaw.iit.edu/cklawreview/vol59/iss1/2 This Article is brought to you for free and open access by Scholarly Commons @ IIT Chicago-Kent College of Law. It has been accepted for inclusion in Chicago-Kent Law Review by an authorized editor of Scholarly Commons @ IIT Chicago-Kent College of Law. For more information, please contact [email protected], [email protected]. PIERCING THE CORPORATE VEIL-THE UNDERCAPITALIZATION FACTOR HARVEY GELB Limited liability is regarded commonly as a corporate attribute, indeed an advantage of doing business in the corporate form. Its significance to shareholders may be diminished to the extent that creditors obtain personal guarantees from them or insurance covers certain liabilities. However, neither guarantees nor insurance are always present or adequate and when corporate funds are otherwise insufficient to cover judgments, frustrated plaintiffs may sue shareholder-defendants in defiance of the limited liability principle. The recognition of a corporation as an entity separate from its shareholders is well established and furnishes a theoretical basis for the idea that the liabilities of the corporation, whether tort or contract, are its liabilities and not those of its shareholders. Moreover, limited shareholder liability serves an important public policy-the policy of encouraging investment by limiting risks. -

The Three Real Justifications for Piercing the Corporate Veil

FINDING ORDER IN THE MORASS: THE THREE REAL JUSTIFICATIONS FOR PIERCING THE CORPORATE VEIL Jonathan Macey & Joshua Mitts Few doctrines are more shrouded in mystery or litigated more often than piercing the corporate veil. We develop a new theoretical framework that posits that veil piercing is done to achieve three discrete public policy goals, each of which is consistent with economic efficiency: (1) achieving the purpose of an existing statute or regulation; (2) preventing shareholders from obtaining credit by misrepresentation; and (3) promoting the bankruptcy values of achieving the orderly, efficient resolution of a bankrupt's estate. We analyze the facts of veil-piercing cases to show how the outcomes are explained by our taxonomy. We demonstrate that a supposed justification for veil piercing, undercapitalization, in fact rarely, if ever, provides an independent basis for piercing the corporate veil. Finally, we employ modern quantitative machine learning methods never before utilized in legal scholarship to analyze the full text of 9,380 judicial opinions. We demonstrate that our theories systematically predict veil-piercing outcomes, that the widely invoked rationale of "undercapitalization" of the business poorly explains these cases, and that our theories most closely reflect the actual textual structure of the opinions.
INTRODUCTION
I. THE CURRENT MORASS
A. Limited Liability and Veil-Piercing Doctrine
B. Scholarly Attempts to Chart a Path Forward
II. A NEW TAXONOMY
A. Achieving the Purpose of a Regulatory or Statutory Scheme
1. Environmental Law
2. ERISA
B. Avoiding Misrepresentation by Shareholders
1. Contract Creditors
2. Tort Creditors