A Neurophysiologically-Inspired Statistical Language Model

Recommended publications

Improving Neural Language Models with A

Published as a conference paper at ICLR 2017. IMPROVING NEURAL LANGUAGE MODELS WITH A CONTINUOUS CACHE. Edouard Grave, Armand Joulin, Nicolas Usunier, Facebook AI Research, {egrave,ajoulin,usunier}@fb.com. ABSTRACT: We propose an extension to neural network language models to adapt their prediction to the recent history. Our model is a simplified version of memory augmented networks, which stores past hidden activations as memory and accesses them through a dot product with the current hidden activation. This mechanism is very efficient and scales to very large memory sizes. We also draw a link between the use of external memory in neural networks and cache models used with count based language models. We demonstrate on several language model datasets that our approach performs significantly better than recent memory augmented networks. 1 INTRODUCTION: Language models, which are probability distributions over sequences of words, have many applications such as machine translation (Brown et al., 1993), speech recognition (Bahl et al., 1983) or dialogue agents (Stolcke et al., 2000). While traditional neural network language models have obtained state-of-the-art performance in this domain (Jozefowicz et al., 2016; Mikolov et al., 2010), they lack the capacity to adapt to their recent history, limiting their application to dynamic environments (Dodge et al., 2015). A recent approach to solve this problem is to augment these networks with an external memory (Graves et al., 2014; Grefenstette et al., 2015; Joulin & Mikolov, 2015; Sukhbaatar et al., 2015). These models can potentially use their external memory to store new information and adapt to a changing environment.

Formatting Instructions for NIPS -17

System Combination for Machine Translation Using N-Gram Posterior Probabilities. Yong Zhao (Georgia Institute of Technology, Atlanta, Georgia 30332) and Xiaodong He (Microsoft Research, 1 Microsoft Way, Redmond, WA 98052). Abstract: This paper proposes using n-gram posterior probabilities, which are estimated over translation hypotheses from multiple machine translation (MT) systems, to improve the performance of system combination. Two ways of using n-gram posteriors in confusion network decoding are presented. The first is based on an n-gram posterior language model per source sentence, and the second, called n-gram segment voting, boosts word posterior probabilities with n-gram occurrence frequencies. The two n-gram posterior methods are incorporated in the confusion network as individual features of a log-linear combination model. Experiments on the Chinese-to-English MT task show that both methods yield significant improvements in translation performance, and that a combination of these two features produces the best translation performance. 1 Introduction: In the past several years, the system combination approach, which aims at finding a consensus from a set of alternative hypotheses, has shown substantial improvements in various machine translation (MT) tasks. An example of the combination technique was ROVER [1], which was proposed by Fiscus to combine multiple speech recognition outputs. The success of ROVER and other voting techniques gave rise to a widely used combination scheme referred to as confusion network decoding [2][3][4][5]. Given hypothesis outputs of multiple systems, a confusion network is built by aligning all these hypotheses. The resulting network comprises a sequence of correspondence sets, each of which contains the alternative words that are aligned with each other.

Membrane Properties Underlying the Firing of Neurons in the Avian Cochlear Nucleus

The Journal of Neuroscience, September 1994, 14(9): 5352-5364. Membrane Properties Underlying the Firing of Neurons in the Avian Cochlear Nucleus. A. D. Reyes (1, 2), E. W. Rubel (2), and W. J. Spain (2, 3, 4). (1) Department of Medical Genetics, (2) Virginia Merrill Bloedel Hearing Research Center, and (3) Department of Medicine (Division of Neurology), University of Washington School of Medicine, Seattle, Washington 98195, and (4) Seattle VA Medical Center, Seattle, Washington 98108. Abstract: Neurons of the avian nucleus magnocellularis (NM) relay auditory information from the VIIIth nerve to other parts of the auditory system. To examine the cellular properties that permit NM neurons to transmit reliably the temporal characteristics of the acoustic stimulus, we performed whole-cell recordings in neurons of the chick NM using an in vitro thin slice preparation. NM neurons exhibited strong outward rectification near resting potential; the voltage responses to depolarizing current steps were substantially smaller than to equivalent hyperpolarizing steps. Suprathreshold current steps evoked only a single action potential at the start of … Introduction: The avian nucleus magnocellularis (NM) contains the second-order auditory neurons that serve as a relay in the pathway responsible for sound localization in the azimuth. NM neurons are innervated by VIIIth nerve fibers and project bilaterally to neurons of the nucleus laminaris (NL, Parks and Rubel, 1975; Rubel and Parks, 1975; Young and Rubel, 1983; Carr and Konishi, 1990). Differences in the times of arrival of sound to the two ears, which vary with location of sound, translate to differences in the arrival times of impulses to NL from the ipsilateral and contralateral NM (Overholt et al., 1992; Joseph and Hyson, 1993).

Review in the Extended Research Context: ESC 2016

Repositorium für die Medienwissenschaft (Repository for Media Studies). Christoph Oliver Mayer: Rezension im erweiterten Forschungskontext: ESC. 2016. https://doi.org/10.17192/ep2016.1.4446. Published version; review. Suggested citation: Mayer, Christoph Oliver: Rezension im erweiterten Forschungskontext: ESC. In: MEDIENwissenschaft: Rezensionen | Reviews, Jg. 33 (2016), Nr. 1. DOI: https://doi.org/10.17192/ep2016.1.4446. Terms of use: This document is made available under a Creative Commons Attribution 3.0 License. For more information see: https://creativecommons.org/licenses/by/3.0/. Radio and Television, p. 99. Review in the extended research context: ESC. Christine Ehardt, Georg Vogt, Florian Wagner (eds.): Eurovision Song Contest: Eine kleine Geschichte zwischen Körper, Geschlecht und Nation. Vienna: Zaglossus 2015, 344 pp., ISBN 9783902902320, EUR 19.95. Conchita Wurst's victory at the Eurovision Song Contest (ESC) 2014 and the Europe-wide discussions about tolerance and equality that followed have prompted a whole range of publications on the most important popular music competition (e.g. Wolther/Lackner 2014; Lackner/Rau 2015; Vogel et al. 2015; Kennedy O'Connor 2015; Vignoles/O'Brien 2015). Only a few scholarly monographs (e.g. … Triebel 2011; Goldstein/Taylor 2013) as well as by individual scholars from various disciplines (e.g. Sieg 2013; Mayer 2015) were initiated. What all the more recent approaches share is a heightened interest in the staging of bodies, gender roles and nation-building, topics also taken up by the edited volume, subtitled a "small history", by the Viennese media scholars (e.g.

Karaoke Rental System Song List

Karaoke rental system song list. A karaoke system by Showtronic Solutions AG in cooperation with Karafun. Karaoke catalog, updated: 13/10/2020. Sing online at www.karafun.de. Complete catalog. TOP 50: Shallow - A Star is Born; Take Me Home, Country Roads - John Denver; Skandal im Sperrbezirk - Spider Murphy Gang; Griechischer Wein - Udo Jürgens; Verdammt, Ich Lieb' Dich - Matthias Reim; Dancing Queen - ABBA; Dance Monkey - Tones and I; Breaking Free - High School Musical; In The Ghetto - Elvis Presley; Angels - Robbie Williams; Hulapalu - Andreas Gabalier; Someone Like You - Adele; 99 Luftballons - Nena; Tage wie diese - Die Toten Hosen; Ring of Fire - Johnny Cash; Lemon Tree - Fool's Garden; Ohne Dich (schlaf' ich heut' nacht nicht ein) - Münchener Freiheit; You Are the Reason - Calum Scott; Perfect - Ed Sheeran; Stand by Me - Ben E. King; Im Wagen Vor Mir - Henry Valentino And Uschi; Let It Go - Idina Menzel; Can You Feel The Love Tonight - The Lion King; Atemlos durch die Nacht - Helene Fischer; Roller - Apache 207; Someone You Loved - Lewis Capaldi; I Want It That Way - Backstreet Boys; Über Sieben Brücken Musst Du Gehn - Peter Maffay; Summer Of '69 - Bryan Adams; Cordula grün - Die Draufgänger; Tequila - The Champs; ...Baby One More Time - Britney Spears; All of Me - John Legend; Barbie Girl - Aqua; Chasing Cars - Snow Patrol; My Way - Frank Sinatra; Hallelujah - Alexandra Burke; Aber Bitte Mit Sahne - Udo Jürgens; Bohemian Rhapsody - Queen; Wannabe - Spice Girls; Schrei nach Liebe - Die Ärzte; Can't Help Falling In Love - Elvis Presley; Country Roads - Hermes House Band; Westerland - Die Ärzte; Warum hast du nicht nein gesagt - Roland Kaiser; Ich war noch niemals in New York - Ich War Noch Niemals In New York; Marmor, Stein Und Eisen Bricht - Drafi Deutscher; Zombie - The Cranberries; Ich wollte nie erwachsen sein (Nessajas Lied) - …; Don't Stop Believing - Journey. EXPLICIT: may contain lyrics that are not suitable for children and adolescents.

Eurovision Song Contest: Lena Meyer-Landrut Misses the Top of the Charts with "Taken by a Stranger"

09.03.2011 http://www.derwesten.de/nachrichten/Lena-Meyer-Landrut-verpasst-mit-Taken-By-A-Stranger-die-Charts-Spitze-id4397104.html Eurovision Song Contest: Lena Meyer-Landrut misses the top of the charts with "Taken By A Stranger". News, 09.03.2011, Ingmar Kreienbrink. Lena Meyer-Landrut will take on the title defense for Germany at the Eurovision Song Contest with the song "Taken By A Stranger". (Photo: © Sandra Ludewig / Universal Music 2011) Essen. At first glance the result is impressive: Lena Meyer-Landrut entered the German charts with five singles from her new album at the same time. But "Taken by a Stranger", her song for the Eurovision Song Contest, lands "only" at number 2, behind "Grenade" by Bruno Mars. Given the preceding media hype around the ARD/ProSieben co-production "Unser Song für Deutschland", that is a small surprise. After all, her winning song "Satellite" jumped from zero to number 1 a year ago, as did numerous other songs from other casting shows such as "Deutschland sucht den Superstar" (DSDS). "A medium-sized chart fiasco for record label Universal": Fans are now discussing the consequences and the public impact of the "setback". After all, "Taken by a Stranger" is "Our Song for Germany" for the Eurovision Song Contest. One blog speaks, among other things, of a medium-sized chart fiasco for the record label Universal. But the reasons for the result appear to be considerably more complex. In the launch week there was apparently a sales block on the title at the important download shops of Media Markt, Saturn, MedionMusic and Jamba. "Download sales make up around 85 percent of the official German singles charts," explains Hans Schmucker of media control in response to an inquiry from DerWesten.

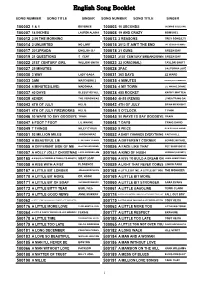

English Song Booklet

English Song Booklet. Columns: SONG NUMBER, SONG TITLE, SINGER. 100002 1 & 1 BEYONCE; 100003 10 SECONDS JAZMINE SULLIVAN; 100007 18 INCHES LAUREN ALAINA; 100008 19 AND CRAZY BOMSHEL; 100012 2 IN THE MORNING; 100013 2 REASONS TREY SONGZ,TI; 100014 2 UNLIMITED NO LIMIT; 100015 2012 IT AIN'T THE END JAY SEAN,NICKI MINAJ; 100017 2012PRADA ENGLISH DJ; 100018 21 GUNS GREEN DAY; 100019 21 QUESTIONS 5 CENT; 100021 21ST CENTURY BREAKDOWN GREEN DAY; 100022 21ST CENTURY GIRL WILLOW SMITH; 100023 22 (ORIGINAL) TAYLOR SWIFT; 100027 25 MINUTES; 100028 2PAC CALIFORNIA LOVE; 100030 3 WAY LADY GAGA; 100031 365 DAYS ZZ WARD; 100033 3AM MATCHBOX 2; 100035 4 MINUTES MADONNA,JUSTIN TIMBERLAKE; 100034 4 MINUTES(LIVE) MADONNA; 100036 4 MY TOWN LIL WAYNE,DRAKE; 100037 40 DAYS BLESSTHEFALL; 100038 455 ROCKET KATHY MATTEA; 100039 4EVER THE VERONICAS; 100040 4H55 (REMIX) LYNDA TRANG DAI; 100043 4TH OF JULY KELIS; 100042 4TH OF JULY BRIAN MCKNIGHT; 100041 4TH OF JULY FIREWORKS KELIS; 100044 5 O'CLOCK T PAIN; 100046 50 WAYS TO SAY GOODBYE TRAIN; 100045 50 WAYS TO SAY GOODBYE TRAIN; 100047 6 FOOT 7 FOOT LIL WAYNE; 100048 7 DAYS CRAIG DAVID; 100049 7 THINGS MILEY CYRUS; 100050 9 PIECE RICK ROSS,LIL WAYNE; 100051 93 MILLION MILES JASON MRAZ; 100052 A BABY CHANGES EVERYTHING FAITH HILL; 100053 A BEAUTIFUL LIE 3 SECONDS TO MARS; 100054 A DIFFERENT CORNER GEORGE MICHAEL; 100055 A DIFFERENT SIDE OF ME ALLSTAR WEEKEND; 100056 A FACE LIKE THAT PET SHOP BOYS; 100057 A HOLLY JOLLY CHRISTMAS LADY ANTEBELLUM; 500164 A KIND OF HUSH HERMAN'S HERMITS; 500165 A KISS IS A TERRIBLE THING (TO WASTE) MEAT LOAF; 500166 A KISS TO BUILD A DREAM ON LOUIS ARMSTRONG; 100058 A KISS WITH A FIST FLORENCE; 100059 A LIGHT THAT NEVER COMES LINKIN PARK; 500167 A LITTLE BIT LONGER JONAS BROTHERS; 500168 A LITTLE BIT ME, A LITTLE BIT YOU THE MONKEES; 500170 A LITTLE BIT MORE DR.

Identity, Spectacle and Representation: Israeli Entries at the Eurovision

Identity, spectacle and representation: Israeli entries at the Eurovision Song Contest. Identidad, espectáculo y representación: las candidaturas de Israel en el Festival de la Canción de Eurovisión. José Luis Panea holds a Degree in Fine Arts (University of Salamanca, 2013) and completed exchange stays at the University of Lisbon and the University of Barcelona. He holds a Master's degree in Art and Visual Practices Research from the University of Castilla-La Mancha, with the End of Studies Special Prize (2014), and a pre-PhD contract in the research project ARES (www.aresvisuals.net). He is editor, with Ana Martínez-Collado, of the volume Secuencias de la experiencia, estadios de lo visible. Aproximaciones al videoarte español (2017). He teaches Aesthetics of Modernity and writes for several journals, especially on his research line 'Identity politics at the Eurovision Song Contest'. Universidad de Castilla-La Mancha, España. ORCID: 0000-0002-8989-9547. Received: 01/08/2018 - Accepted: 14/11/2018. ISSN: 1696-019X / e-ISSN: 2386-3978. Abstract: Through a sophisticated investment, both capital and symbolic, the Eurovision Song Contest annually generates a unique audiovisual spectacle, debating concepts such as community, Europeanness or cultural identity. Following recent research from the Anglo-Saxon sphere, we will examine different editions of the show. Resumen (translated from Spanish): Through a sophisticated investment, both capital and symbolic, the Eurovision festival annually generates an audiovisual spectacle on public television that problematizes concepts such as "community", "Europeanness" and "cultural identity". Following recent research in the Anglo-Saxon sphere, we will survey its different editions

A Study on Neural Network Language Modeling

A Study on Neural Network Language Modeling. Dengliang Shi, Shanghai, Shanghai, China. Abstract: An exhaustive study on neural network language modeling (NNLM) is performed in this paper. Different architectures of basic neural network language models are described and examined. A number of different improvements over basic neural network language models, including importance sampling, word classes, caching and bidirectional recurrent neural network (BiRNN), are studied separately, and the advantages and disadvantages of every technique are evaluated. Then, the limits of neural network language modeling are explored from the aspects of model architecture and knowledge representation. Part of the statistical information from a word sequence is lost when it is processed word by word in a certain order, and the mechanism of training a neural network by updating weight matrices and vectors imposes severe restrictions on any significant enhancement of NNLM. For knowledge representation, the knowledge represented by neural network language models is the approximate probabilistic distribution of word sequences from a certain training data set rather than the knowledge of a language itself or the information conveyed by word sequences in a natural language. Finally, some directions for further improving neural network language modeling are discussed. Keywords: neural network language modeling, optimization techniques, limits, improvement scheme. 1. Introduction: Generally, a well-designed language model makes a critical difference in various natural language processing (NLP) tasks, like speech recognition (Hinton et al., 2012; Graves et al., 2013a), machine translation (Cho et al., 2014a; Wu et al., 2016), semantic extraction (Collobert and Weston, 2007, 2008), etc.

Eusipco 2013 1569741253

EUSIPCO 2013 1569741253. PLSA ENHANCED WITH A LONG-DISTANCE BIGRAM LANGUAGE MODEL FOR SPEECH RECOGNITION. Md. Akmal Haidar and Douglas O'Shaughnessy, INRS-EMT, 6900-800 de la Gauchetiere Ouest, Montreal (Quebec), H5A 1K6, Canada. ABSTRACT: We propose a language modeling (LM) approach using background n-grams and interpolated distanced n-grams for speech recognition using an enhanced probabilistic latent semantic analysis (EPLSA) derivation. PLSA is a bag-of-words model that exploits the topic information at the document level, which is inconsistent for the language modeling in speech recognition. In this paper, we consider the word sequence in modeling the EPLSA model. Here, the predicted word of an n-gram event is drawn from a topic that is chosen from the topic distribution of the (n-1) history words. … sition to extract the semantic information for different words and documents. In PLSA and LDA, semantic properties of words and documents can be shown in probabilistic topics. The PLSA latent topic parameters are trained by maximizing the likelihood of the training data using an expectation maximization (EM) procedure and have been successfully used for speech recognition [3, 5]. The LDA model has been used successfully in recent research work for LM adaptation [6, 7]. A bigram LDA topic model, where the word probabilities are conditioned on their preceding context and the topic probabilities are conditioned on the documents, has been recently investigated [8].

Eurovision Karaoke

Eurovision Karaoke. ALBANIA: ALB 06 Zjarr e ftohtë; ALB 07 Hear My Plea; ALB 10 It's All About You; ALB 12 Suus; ALB 13 Identitet; ALB 14 Hersi - One Night's Anger; ALB 15 I'm Alive; ALB 16 Fairytale. ANDORRA: AND 07 Salvem el món. ARMENIA: ARM 07 Anytime you need; ARM 08 Qele Qele; ARM 09 Nor Par (Jan Jan); ARM 10 Apricot Stone; ARM 11 Boom Boom; ARM 13 Lonely Planet; ARM 14 Aram Mp3 - Not Alone; ARM 15 Face the Shadow; ARM 16 LoveWave. AZERBAIJAN: AZE 08 Day after day; AZE 09 Always; AZE 14 Start The Fire; AZE 15 Hour of the Wolf; AZE 16 Miracle. AUSTRIA: AUT 89 Nur ein Lied; AUT 90 Keine Mauern mehr; AUT 04 Du bist; AUT 07 Get a life - get alive; AUT 11 The Secret Is Love; AUT 12 Woki Mit Deim Popo; AUT 13 Shine; AUT 14 Conchita Wurst - Rise Like a Phoenix; AUT 15 I Am Yours; AUT 16 Loin d'Ici. AUSTRALIA: AUS 15 Tonight Again; AUS 16 Sound of Silence. BELGIUM: BEL 86 J'aime la vie; BEL 87 Soldiers of love; BEL 89 Door de wind; BEL 98 Dis oui; BEL 06 Je t'adore; BEL 12 Would You?; BEL 15 Rhythm Inside; BEL 16 What's the Pressure. BOSNIA AND HERZEGOVINA: BOS 99 Putnici; BOS 06 Lejla; BOS 07 Rijeka bez imena; BOS 08 D Pokušaj DUET VERSION; BOS 08 S Pokušaj; BOS 11 Love In Rewind; BOS 12 Korake Ti Znam; BOS 16 Ljubav Je. BULGARIA: BUL 05 Lorraine; BUL 07 Water; BUL 12 Love Unlimited; BUL 13 Samo Shampioni; BUL 16 If Love Was a Crime. DENMARK: DEN 97 Stemmen i mit liv; DEN 00 Fly on the wings of love; DEN 06 Twist of love; DEN 07 Drama queen; DEN 10 New Tomorrow; DEN 12 Should've Known Better; DEN 13 Only Teardrops. UNITED KINGDOM: UKI 83 I'm never giving up; UKI 96 Ooh aah.. …; UKI 10 That Sounds Good To Me; UKI 11 I Can; UKI 12 Love Will Set You Free; UKI 13 Believe in Me; UKI 14 Molly - Children of the Universe; UKI 15 Still in Love with You; UKI 16 You're Not Alone.

Master's Thesis Preparation Report

Eindhoven University of Technology. MASTER: Improving the usability of the domain-specific language editors using artificial intelligence. Rajasekar, Nandini. Award date: 2021. Link to publication. Disclaimer: This document contains a student thesis (bachelor's or master's), as authored by a student at Eindhoven University of Technology. Student theses are made available in the TU/e repository upon obtaining the required degree. The grade received is not published on the document as presented in the repository. The required complexity or quality of research of student theses may vary by program, and the required minimum study period may vary in duration. General rights: Copyright and moral rights for the publications made accessible in the public portal are retained by the authors and/or other copyright owners and it is a condition of accessing publications that users recognise and abide by the legal requirements associated with these rights. • Users may download and print one copy of any publication from the public portal for the purpose of private study or research. • You may not further distribute the material or use it for any profit-making activity or commercial gain. Department of Mathematics and Computer Science, Department of Electrical Engineering. Improving the usability of the domain-specific language editors using artificial intelligence. Master Thesis Preparation Report. Nandini Rajasekar. Supervisors: Prof. Dr. M.G.J. van den Brand; M. Verano Merino, MSc. 1.0, Eindhoven, July 2020. Contents: List of Figures; 1 Introduction (1.1 Language Oriented Programming; 1.2 Language Workbenches; 1.3 Artificial Intelligence); 2 Motivation; 3 Goal and Approach (3.1 Goal; 3.2 Research questions; 3.3 Approach); 4 Literature Survey; 5 Prototype (5.1 Next steps; …)