Locating Political Power in Internet Infrastructure by Ashwin Jacob

Total Pages: 16

File Type: PDF, Size: 1020 KB


Recommended publications


Analysis of Server-Smartphone Application Communication Patterns

Aalto University School of Science, Degree Programme in Computer Science and Engineering. Péter Somogyi, Analysis of server-smartphone application communication patterns, Master’s Thesis, Budapest, June 15, 2014. Supervisors: Professor Jukka Nurminen, Aalto University; Professor Tamás Kozsik, Eötvös Loránd University. Instructor: Máté Szalay-Bekő, M.Sc. Ph.D. Number of pages: 83. Language: English. Professorship: Data Communication Software. Code: T-110. Abstract: The spread of smartphone devices, Internet of Things technologies and the popularity of web services require real-time and always-on applications. The aim of this thesis is to identify a suitable communication technology for server and smartphone communication which fulfills the main requirements for transferring real-time data to handheld devices. For the analysis I selected 3 popular communication technologies that can be used on mobile devices as well as from commonly used browsers. These are client polling, long polling and HTML5 WebSocket. For the assessment I developed an Android application that receives real-time sensor data from a WildFly application server using the aforementioned technologies. Industry-specific requirements were selected in order to verify the usability of these communication forms. The first one covers the message size, which is relevant because most smartphone users have a limited data plan. -

Performance of VBR Packet Video Communications on an Ethernet LAN: A Trace-Driven Simulation Study

Performance of VBR Packet Video Communications on an Ethernet LAN: A Trace-Driven Simulation Study. Francis Edwards and Mark Schulz, Department of Electrical and Computer Engineering, The University of Queensland, St. Lucia Q 4072, Australia.

Abstract: Provision of multimedia communication services on today’s packet-switched network infrastructure is becoming increasingly feasible. However, there remains a lack of information regarding the performance of multimedia sources operating in bursty data traffic conditions. In this study, a videotelephony system deployed on the Ethernet LAN is simulated, employing high time-resolution LAN traces as the data traffic load. In comparison with Poisson traffic models, the trace-driven cases produce highly variable packet delays, and higher packet loss, thereby degrading video traffic performance. In order to compensate for these effects, a delay control scheme based on a timed packet dropping algorithm is examined.

[...] medium term, we can expect that current LAN technologies will be utilized in the near term for packet transport of video applications [4, 5, 6]. Characterizing the performance of current networks carrying video communications traffic is therefore an important issue. This paper investigates packet transport of real time video communications traffic, characteristic of videotelephony applications, on the popular 10 Mbit/s Ethernet LAN. Previous work has established that Ethernet is capable of supporting video communication traffic in the presence of Poisson data traffic [6, 7]. However, recent studies of high time-resolution LAN traffic have observed highly bursty traffic patterns which sustain high variability over timescales -

The Future of Internet Governance: Should the United States Relinquish Its Authority Over ICANN?

The Future of Internet Governance: Should the United States Relinquish Its Authority over ICANN? Lennard G. Kruger, Specialist in Science and Technology Policy. September 1, 2016. Congressional Research Service, 7-5700, www.crs.gov, R44022. Summary: Currently, the U.S. government retains limited authority over the Internet’s domain name system, primarily through the Internet Assigned Numbers Authority (IANA) functions contract between the National Telecommunications and Information Administration (NTIA) and the Internet Corporation for Assigned Names and Numbers (ICANN). By virtue of the IANA functions contract, the NTIA exerts a legacy authority and stewardship over ICANN, and arguably has more influence over ICANN and the domain name system (DNS) than other national governments. Currently the IANA functions contract with NTIA expires on September 30, 2016. However, NTIA has the flexibility to extend the contract for any period through September 2019. On March 14, 2014, NTIA announced the intention to transition its stewardship role and procedural authority over key Internet domain name functions to the global Internet multistakeholder community. To accomplish this transition, NTIA asked ICANN to convene interested global Internet stakeholders to develop a transition proposal. NTIA stated that it would not accept any transition proposal that would replace the NTIA role with a government-led or an intergovernmental organization solution. For two years, Internet stakeholders were engaged in a process to develop a transition proposal that will meet NTIA’s criteria. On March 10, 2016, the ICANN Board formally accepted the multistakeholder community’s transition plan and transmitted that plan to NTIA for approval. -

Growth of the Internet

Growth of the Internet. K. G. Coffman and A. M. Odlyzko, AT&T Labs - Research. Preliminary version, July 6, 2001. Abstract: The Internet is the main cause of the recent explosion of activity in optical fiber telecommunications. The high growth rates observed on the Internet, and the popular perception that growth rates were even higher, led to an upsurge in research, development, and investment in telecommunications. The telecom crash of 2000 occurred when investors realized that transmission capacity in place and under construction greatly exceeded actual traffic demand. This chapter discusses the growth of the Internet and compares it with that of other communication services. Internet traffic is growing, approximately doubling each year. There are reasonable arguments that it will continue to grow at this rate for the rest of this decade. If this happens, then in a few years, we may have a rough balance between supply and demand. 1. Introduction: Optical fiber communications was initially developed for the voice phone system. The feverish level of activity that we have experienced since the late 1990s, though, was caused primarily by the rapidly rising demand for Internet connectivity. The Internet has been growing at unprecedented rates. Moreover, because it is versatile and penetrates deeply into the economy, it is affecting all of society, and therefore has attracted inordinate amounts of public attention. The aim of this chapter is to summarize the current state of knowledge about the growth rates of the Internet, with special attention paid to the implications for fiber optic transmission. -
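The abstract's claim that traffic is "approximately doubling each year", and that supply and demand may balance "in a few years", is compound growth. A minimal sketch of that arithmetic follows. The function names and the example figures (one unit of traffic against 1000 units of installed capacity) are hypothetical and are not taken from the chapter.

```python
def projected_traffic(initial: float, years: int, annual_factor: float = 2.0) -> float:
    """Traffic after `years` of compound growth (factor 2.0 = doubling each year)."""
    return initial * annual_factor ** years


def years_to_reach(initial: float, capacity: float, annual_factor: float = 2.0) -> int:
    """Whole years of growth until traffic first meets or exceeds a fixed capacity."""
    years = 0
    traffic = initial
    while traffic < capacity:
        traffic *= annual_factor
        years += 1
    return years


if __name__ == "__main__":
    # Hypothetical figures: traffic starts at 1 unit, capacity is fixed at 1000 units.
    print(projected_traffic(1.0, 10))
    print(years_to_reach(1.0, 1000.0))
```

The sketch holds capacity fixed, which is a simplification: the chapter's own argument concerns capacity that was still being built.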

Security in Distributed and Networking Systems (507 Pages)

Security in Distributed and Networking Systems. Series in Computer and Network Security. Series Editors: Yi Pan (Georgia State Univ., USA) and Yang Xiao (Univ. of Alabama, USA). Published: Vol. 1: Security in Distributed and Networking Systems, eds. Xiao Yang et al. Forthcoming: Vol. 2: Trust and Security in Collaborative Computing, by Zou Xukai et al. Editors: Yang Xiao, University of Alabama, USA; Yi Pan, Georgia State University, USA. World Scientific: New Jersey, London, Singapore, Beijing, Shanghai, Hong Kong, Taipei, Chennai. Published by World Scientific Publishing Co. Pte. Ltd., 5 Toh Tuck Link, Singapore 596224. USA office: 27 Warren Street, Suite 401-402, Hackensack, NJ 07601. UK office: 57 Shelton Street, Covent Garden, London WC2H 9HE. British Library Cataloguing-in-Publication Data: A catalogue record for this book is available from the British Library. Security in Distributed and Networking Systems, Series in Computer and Network Security, Vol. 1. Copyright © 2007 by World Scientific Publishing Co. Pte. Ltd. All rights reserved. This book, or parts thereof, may not be reproduced in any form or by any means, electronic or mechanical, including photocopying, recording or any information storage and retrieval system now known or to be invented, without written permission from the Publisher. -

New Zealand's High Speed Research Network

Report prepared for the Ministry of Business, Innovation and Employment. New Zealand’s high speed research network: at a critical juncture. David Moore, Linda Tran, Michael Uddstrom (NIWA) and Dean Yarrall. 05 December 2018.

About Sapere Research Group Limited: Sapere Research Group is one of the largest expert consulting firms in Australasia and a leader in provision of independent economic, forensic accounting and public policy services. Sapere provides independent expert testimony, strategic advisory services, data analytics and other advice to Australasia’s private sector corporate clients, major law firms, government agencies, and regulatory bodies.

Wellington: Level 9, 1 Willeston St, PO Box 587, Wellington 6140. Ph: +64 4 915 7590, Fax: +64 4 915 7596.
Auckland: Level 8, 203 Queen St, PO Box 2475, Auckland 1140. Ph: +64 9 909 5810, Fax: +64 9 909 5828.
Sydney: Suite 18.02, Level 18, 135 King St, Sydney NSW 2000; GPO Box 220, Sydney NSW 2001. Ph: +61 2 9234 0200, Fax: +61 2 9234 0201.
Canberra: GPO Box 252, Canberra City ACT 2601. Ph: +61 2 6267 2700, Fax: +61 2 6267 2710.
Melbourne: Level 8, 90 Collins Street, Melbourne VIC 3000; GPO Box 3179, Melbourne VIC 3001. Ph: +61 3 9005 1454, Fax: +61 2 9234 0201.

For information on this report please contact: David Moore, Telephone: +64 4 915 5355, Mobile: +64 21 518 002.

Contents: Executive summary; 1. Introduction; 2. NRENs are essential to research data exchange; 2.1 A long history of NRENs; 2.1.1 Established prior to adoption of TCP/IP -

Current Affairs 2013- January International

Current Affairs 2013 - January: International. The Fourth Meeting of ASEAN and India Tourism Ministers was held in Vientiane, Lao PDR on 21 January, in conjunction with the ASEAN Tourism Forum 2013. The Meeting was co-chaired by Union Tourism Minister K. Chiranjeevi and Prof. Dr. Bosengkham Vongdara, Minister of Information, Culture and Tourism, Lao PDR. Both Ministers signed the Protocol to amend the Memorandum of Understanding between ASEAN and India on Strengthening Tourism Cooperation, which would further strengthen the tourism collaboration between ASEAN and Indian national tourism organisations. The main objective of this Protocol is to amend the MoU to protect and safeguard the rights and interests of the parties with respect to national security, national and public interest or public order, protection of intellectual property rights, confidentiality and secrecy of documents, information and data. Both Ministers welcomed the adoption of the Vision Statement of the ASEAN-India Commemorative Summit held on 20 December 2012 in New Delhi, India, particularly on enhancing ASEAN Connectivity through supporting the implementation of the Master Plan on ASEAN Connectivity. The Ministers also supported the close collaboration of ASEAN and India to enhance air, sea and land connectivity within ASEAN and between ASEAN and India through the ASEAN-India connectivity project. To further promote tourism exchange between ASEAN and India, the Ministers agreed to launch the ASEAN-India tourism website (www.indiaasean.org) as a platform to jointly promote tourism destinations, sharing basic information about ASEAN Member States and India and a visitor guide. On 20 January, the Russian Navy began its biggest war games on the high seas in decades, which will include manoeuvres off the shores of Syria. -

Distributed Computing Network System, Said to Be "Distributed" When the Computer Programming and the Data to Be Worked on Are Spread out Over More Than One Computer

Praveen Balda et al, International Journal of Computer Science and Mobile Computing, Vol. 4, Issue 4, April 2015, pg. 761-767. Available online at www.ijcsmc.com. A Monthly Journal of Computer Science and Information Technology, ISSN 2320–088X. RESEARCH ARTICLE: Security Enhancement in Distributed Networking. Praveen Balda, Sh. Matish Garg. Distributed Networking is a distributed computing network system, said to be "distributed" when the computer programming and the data to be worked on are spread out over more than one computer. Usually, this is implemented over a network. Prior to the emergence of low-cost desktop computer power, computing was generally centralized to one computer. Although such centers still exist, distribution networking applications and data operate more efficiently over a mix of desktop workstations, local area network servers, regional servers, Web servers, and other servers. One popular trend is client/server computing. This is the principle that a client computer can provide certain capabilities for a user and request others from other computers that provide services for the clients. (The Web's Hypertext Transfer Protocol is an example of this idea.) Enterprises that have grown in scale over the years and those that are continuing to grow are finding it extremely challenging to manage their distributed network in the traditional client/server computing model. The recent developments in the field of cloud computing have opened up new possibilities. Cloud-based networking vendors have started to sprout, offering solutions for enterprise distributed networking needs. -

About the Internet Governance Forum

About the Internet Governance Forum. The Internet Governance Forum (IGF) – convened by the United Nations Secretary-General – is the global multistakeholder forum for dialogue on Internet governance issues.

Contents:
- Background and context: The IGF as an outcome of WSIS; IGF mandate
- The IGF process: IGF annual meetings; Intersessional activities; National, regional and youth IGF initiatives (NRIs)
- Participation in IGF activities: Online participation
- Coordination of IGF work: Multistakeholder Advisory Group; IGF Secretariat; UN DESA
- Funding
- Impact
- Looking ahead: strengthening the IGF: The IGF in the High-level Panel on Digital Cooperation’s report; The IGF in the Secretary-General’s Roadmap for Digital Cooperation

BACKGROUND AND CONTEXT. The IGF as an outcome of WSIS: Internet governance was one of the most controversial issues during the first phase of the World Summit on the Information Society (WSIS-I), held in Geneva in December 2003. It was recognised that understanding Internet governance was essential in achieving the development goals of the Geneva Plan of Action, but defining the term and understanding the roles and responsibilities of the different stakeholders involved proved to be difficult. The UN Secretary-General set up a Working Group on Internet Governance (WGIG) to explore these issues and prepare a report to feed into the second phase of WSIS (WSIS-II), held in Tunis in November 2005. Many elements contained in the WGIG report – developed through an open process and multistakeholder consultations – were endorsed in the Tunis Agenda for the Information Society (one of the main outcomes of WSIS-II). -

History of the Internet-English

The History of the Internet in Thailand, by Sirin Palasri, Steven G. Huter, and Zita Wenzel (Ph.D.). The Network Startup Resource Center (NSRC), University of Oregon. Cover Design: Boonsak Tangkamcharoen. Published by University of Oregon Libraries, 2013, 1299 University of Oregon, Eugene, OR 97403-1299, United States of America. Telephone: (541) 346-3053 / Fax: (541) 346-3485. Second printing, 2013. ISBN: 978-0-9858204-2-8 (pbk). ISBN: 978-0-9858204-6-6 (English PDF), doi:10.7264/N3B56GNC. ISBN: 978-0-9858204-7-3 (Thai PDF), doi:10.7264/N36D5QXN. Originally published in 1999. Copyright © 1999 State of Oregon, by and for the State Board of Higher Education, on behalf of the Network Startup Resource Center at the University of Oregon. This work is licensed under a Creative Commons Attribution-NonCommercial 3.0 Unported License: http://creativecommons.org/licenses/by-nc/3.0/deed.en_US. Requests for permission, beyond the Creative Commons authorized uses, should be addressed to: The Network Startup Resource Center (NSRC), 1299 University of Oregon, Eugene, Oregon 97403-1299 USA. Telephone: +1 541 346-3547, Fax: +1 541-346-4397, http://www.nsrc.org/. This material is based upon work supported by the National Science Foundation under Grant No. NCR-961657. Any opinions, findings, and conclusions or recommendations expressed in this material are those of the author(s) and do not necessarily reflect the views of the National Science Foundation. -

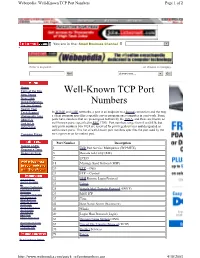

Well-Known TCP Port Numbers

Webopedia: Well-Known TCP Port Numbers. In TCP/IP and UDP networks, a port is an endpoint to a logical connection and the way a client program specifies a specific server program on a computer in a network. Some ports have numbers that are preassigned to them by the IANA, and these are known as well-known ports (specified in RFC 1700). Port numbers range from 0 to 65535, but only port numbers 0 to 1023 are reserved for privileged services and designated as well-known ports. This list of well-known port numbers specifies the port used by the server process as its contact port.

| Port Number | Description |
|---|---|
| 1 | TCP Port Service Multiplexer (TCPMUX) |
| 5 | Remote Job Entry (RJE) |
| 7 | ECHO |
| 18 | Message Send Protocol (MSP) |
| 20 | FTP -- Data |
| 21 | FTP -- Control |
| 22 | SSH Remote Login Protocol |
| 23 | Telnet |
| 25 | Simple Mail Transfer Protocol (SMTP) |
| 29 | MSG ICP |
| 37 | Time |
| 42 | Host Name Server (Nameserv) |
| 43 | WhoIs |
| 49 | Login Host Protocol (Login) |
| 53 | Domain Name System (DNS) |
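As a rough illustration of the port ranges and contact ports described in this excerpt, the sketch below classifies a port number and looks up a few of the listed services. It assumes the standard 16-bit port space (0 to 65535) and the IANA well-known range of 0 to 1023. The dictionary holds only a handful of entries copied from the excerpt, and all names (`WELL_KNOWN_PORTS`, `is_valid_port`, `is_well_known`, `describe`) are hypothetical, not part of any library.

```python
# A few entries copied from the excerpt's list; this is not the full registry.
WELL_KNOWN_PORTS = {
    7: "ECHO",
    20: "FTP -- Data",
    21: "FTP -- Control",
    22: "SSH Remote Login Protocol",
    23: "Telnet",
    25: "Simple Mail Transfer Protocol (SMTP)",
    53: "Domain Name System (DNS)",
}

MAX_PORT = 65535        # largest value that fits in a 16-bit port field
WELL_KNOWN_MAX = 1023   # upper bound of the well-known (privileged) range


def is_valid_port(port: int) -> bool:
    """True if the number fits in the 16-bit port space."""
    return 0 <= port <= MAX_PORT


def is_well_known(port: int) -> bool:
    """True if the port lies in the well-known range 0..1023."""
    return 0 <= port <= WELL_KNOWN_MAX


def describe(port: int) -> str:
    """Return the listed service name, or a note on which range the port is in."""
    if not is_valid_port(port):
        raise ValueError(f"port {port} is outside 0..{MAX_PORT}")
    if port in WELL_KNOWN_PORTS:
        return WELL_KNOWN_PORTS[port]
    if is_well_known(port):
        return "well-known range, not in this sample table"
    return "outside the well-known range"


if __name__ == "__main__":
    for p in (22, 53, 1023, 1024):
        print(p, describe(p))
```

For a system's real service table, Python's `socket.getservbyport` can be used instead of a hand-written dictionary, although its results depend on the local services database.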

The Internet: Based on Slides Originally Published by Thomas J. Cortina

15-292 History of Computing: The Internet

Based on slides originally published by Thomas J. Cortina in 2004 for a course at Stony Brook University. Revised in 2013 by Thomas J. Cortina for a computing history course at Carnegie Mellon University.

A Vision of Connecting the World – the Memex
- Proposed by Vannevar Bush
- First published in the essay "As We May Think" in Atlantic Monthly in 1945 and subsequently in Life Magazine.
- "a device in which an individual stores all his books, records, and communications, and which is mechanized so that it may be consulted with exceeding speed and flexibility"
- Also indicated the idea that would become hypertext
- Bush’s work was influential on all Internet pioneers

The Memex

The Impetus to Act
- 1957 - U.S.S.R. launches Sputnik I into space
- 1958 - U.S. Department of Defense responds by creating ARPA
- Advanced Research Projects Agency
- “mission is to maintain the technological superiority of the U.S. military”
- “sponsoring revolutionary, high-payoff research that bridges the gap between fundamental discoveries and their military use.”
- Name changed to DARPA (Defense) in 1972

ARPANET
- The Advanced Research Projects Agency Network (ARPANET) was the world's first operational packet switching network.
- Project launched in 1968.
- Required development of IMPs (Interface Message Processors) by Bolt, Beranek and Newman (BBN)
- IMPs would connect to each other over leased digital lines
- IMPs would act as the interface to each individual host machine
- Used packet switching concepts published by Leonard Kleinrock, most famous for his subsequent books on queuing theory

Early work: Baran (L) and Davies (R)
- Paul Baran began working at the RAND corporation on secure communications technologies in 1959
- Goal to enable a military communications network to withstand a nuclear attack.