Contents of Lecture 8 on UNIX History

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications

-

UNIX and Computer Science, by Ronda Hauben; Spreading UNIX Around the World: An Interview with John Lions

Winter/Spring 1994 Celebrating 25 Years of UNIX Volume 6 No 1

"I believe all significant software movements start at the grassroots level. UNIX, after all, was not developed by the President of AT&T." Kouichi Kishida, UNIX Review, Feb., 1987

UNIX and Computer Science, by Ronda Hauben

[Editor's Note: This year, 1994, is the 25th anniversary of the invention of UNIX in 1969 at Bell Labs. The following is from a "Work In Progress" introduced at the USENIX Summer 1993 Conference in Cincinnati, Ohio. This article is intended as a contribution to a discussion about the significance of the UNIX breakthrough and the lessons to be learned from it for making the next step forward.]

The Multics collaboration (1964-1968) had been created to "show that general-purpose, multiuser, timesharing systems were viable." Based on the results of research gained at MIT using the MIT Compatible Time-Sharing System (CTSS), AT&T and GE agreed to work with MIT to build a "new hardware, a new operating system, a new file system, and a new user interface." Though the project proceeded slowly and it took years to develop Multics, Doug Comer, a Profes-

Spreading UNIX Around the World: An Interview with John Lions

[Editor's Note: Looking through some magazines in a local university library, I came upon back issues of UNIX Review from the mid 1980's. In these issues were articles by or interviews with several of the pioneers who developed UNIX. As part of my research for a paper about the history and development of the early days of UNIX, I felt it would be helpful to be able to ask some of these pioneers additional questions based on the events and developments described in the UNIX Review Interviews.

Following is an interview conducted via E-mail with John Lions, who wrote A Commentary on the UNIX Operating System describing Version 6 UNIX to accompany the "UNIX Operating System Source Code Level 6" for the students in his operating systems class at the University of New South Wales in Australia. -

Integrating Preemption Threshold Scheduling and Dynamic Voltage Scaling for Energy Efficient Real-Time Systems

Integrating Preemption Threshold Scheduling and Dynamic Voltage Scaling for Energy Efficient Real-Time Systems

Ravindra Jejurikar (1) and Rajesh Gupta (2)
(1) Centre for Embedded Computer Systems, University of California Irvine, Irvine CA 92697, USA, jezz@cecs.uci.edu
(2) Department of Computer Science and Engineering, University of California San Diego, La Jolla, CA 92093, USA, gupta@cs.ucsd.edu

Abstract. Preemption threshold scheduling (PTS) enables designing scalable real-time systems. PTS not only decreases the run-time overhead of the system, but can also be used to decrease the number of threads and the memory requirements of the system. In this paper, we combine preemption threshold scheduling with dynamic voltage scaling to enable energy efficient scheduling in real-time systems. We consider scheduling with task priorities defined by the Earliest Deadline First (EDF) policy. We present an algorithm to compute threshold preemption levels for tasks with given static slowdown factors. The proposed algorithm improves upon known algorithms in terms of time complexity. Experimental results show that preemption threshold scheduling reduces context switches by 90% on average, even in the presence of task slowdown. Further, we describe a dynamic slack reclamation technique that, working in conjunction with PTS, yields on average 10% additional energy savings.

1 Introduction

With increasing mobility and proliferation of embedded systems, low power consumption is an important aspect of embedded systems design. Generally speaking, the processor consumes a significant portion of the total energy, primarily due to increased computational demands. Scaling the processor frequency and voltage based on the performance requirements can lead to considerable energy savings. -

What Is an Operating System III 2.1 Components II An Operating System

What is an Operating System III 2.1 Components II

An operating system (OS) is software that manages computer hardware and software resources and provides common services for computer programs. The operating system is an essential component of the system software in a computer system. Application programs usually require an operating system to function.

Memory management

Among other things, a multiprogramming operating system kernel must be responsible for managing all system memory which is currently in use by programs. This ensures that a program does not interfere with memory already in use by another program. Since programs time share, each program must have independent access to memory. Cooperative memory management, used by many early operating systems, assumes that all programs make voluntary use of the kernel's memory manager, and do not exceed their allocated memory. This system of memory management is almost never seen any more, since programs often contain bugs which can cause them to exceed their allocated memory. If a program fails, it may cause memory used by one or more other programs to be affected or overwritten. Malicious programs or viruses may purposefully alter another program's memory, or may affect the operation of the operating system itself. With cooperative memory management, it takes only one misbehaving program to crash the system. Memory protection enables the kernel to limit a process's access to the computer's memory. Various methods of memory protection exist, including memory segmentation and paging. All methods require some level of hardware support (such as the 80286 MMU), which doesn't exist in all computers. -
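
The isolation described in this excerpt, where one program's writes cannot reach another program's memory, can be observed from user space. The following is a minimal Python sketch, assuming a POSIX system where `os.fork` is available; the function name `child_write_is_private` and the values 1 and 99 are made up for illustration. After the fork, parent and child each have their own address space, so the child's write to `value` is not visible to the parent.

```python
import os


def child_write_is_private():
    """Fork a child that overwrites a variable; return (parent_view, child_view)."""
    value = [1]
    read_fd, write_fd = os.pipe()

    pid = os.fork()
    if pid == 0:
        # Child process: this write lands in the child's own copy of the memory.
        os.close(read_fd)
        value[0] = 99
        os.write(write_fd, str(value[0]).encode())
        os.close(write_fd)
        os._exit(0)  # leave immediately without running parent cleanup code

    # Parent process: read what the child saw, then wait for it to finish.
    os.close(write_fd)
    child_view = int(os.read(read_fd, 16).decode())
    os.close(read_fd)
    os.waitpid(pid, 0)

    # The parent's copy is untouched by the child's write.
    return value[0], child_view


if __name__ == "__main__":
    print(child_write_is_private())
```

The pipe is only there so the child can report what it saw; the point of the sketch is that the parent still reads its original value after the child has changed its own copy.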

Performance Best Practices for VMware Workstation VMware Workstation 7.0

Performance Best Practices for VMware Workstation VMware Workstation 7.0

This document supports the version of each product listed and supports all subsequent versions until the document is replaced by a new edition. To check for more recent editions of this document, see http://www.vmware.com/support/pubs. EN-000294-00

You can find the most up-to-date technical documentation on the VMware Web site at: http://www.vmware.com/support/ The VMware Web site also provides the latest product updates. If you have comments about this documentation, submit your feedback to: [email protected]

Copyright © 2007–2009 VMware, Inc. All rights reserved. This product is protected by U.S. and international copyright and intellectual property laws. VMware products are covered by one or more patents listed at http://www.vmware.com/go/patents. VMware is a registered trademark or trademark of VMware, Inc. in the United States and/or other jurisdictions. All other marks and names mentioned herein may be trademarks of their respective companies. VMware, Inc. 3401 Hillview Ave. Palo Alto, CA 94304 www.vmware.com

Contents
About This Book
Terminology
Intended Audience
Document Feedback
Technical Support and Education Resources
Online and Telephone Support
Support Offerings
VMware Professional Services
1 Hardware for VMware Workstation
CPUs for VMware Workstation
Hyperthreading
Hardware-Assisted Virtualization
Hardware-Assisted CPU Virtualization (Intel VT-x and AMD AMD-V) -

Chapter 1. Origins of Mac OS X

Chapter 1. Origins of Mac OS X

"Most ideas come from previous ideas." Alan Curtis Kay

The Mac OS X operating system represents a rather successful coming together of paradigms, ideologies, and technologies that have often resisted each other in the past. A good example is the cordial relationship that exists between the command-line and graphical interfaces in Mac OS X. The system is a result of the trials and tribulations of Apple and NeXT, as well as their user and developer communities. Mac OS X exemplifies how a capable system can result from the direct or indirect efforts of corporations, academic and research communities, the Open Source and Free Software movements, and, of course, individuals. Apple has been around since 1976, and many accounts of its history have been told. If the story of Apple as a company is fascinating, so is the technical history of Apple's operating systems. In this chapter,[1] we will trace the history of Mac OS X, discussing several technologies whose confluence eventually led to the modern-day Apple operating system.

[1] This book's accompanying web site (www.osxbook.com) provides a more detailed technical history of all of Apple's operating systems.

1.1. Apple's Quest for the[2] Operating System

[2] Whereas the word "the" is used here to designate prominence and desirability, it is an interesting coincidence that "THE" was the name of a multiprogramming system described by Edsger W. Dijkstra in a 1968 paper.

It was March 1988. The Macintosh had been around for four years. -

The Strange Birth and Long Life of Unix - IEEE Spectrum

The Strange Birth and Long Life of Unix - IEEE Spectrum
COMPUTING / SOFTWARE FEATURE
The Strange Birth and Long Life of Unix
The classic operating system turns 40, and its progeny abound
By WARREN TOOMEY / DECEMBER 2011

They say that when one door closes on you, another opens. People generally offer this bit of wisdom just to lend some solace after a misfortune. But sometimes it's actually true. It certainly was for Ken Thompson and the late Dennis Ritchie, two of the greats of 20th-century information technology, when they created the Unix operating system, now considered one of the most inspiring and influential pieces of software ever written.

[Photo: Alcatel-Lucent. KEY FIGURES: Ken Thompson [seated] types as Dennis Ritchie looks on in 1972, shortly after they and their Bell Labs colleagues invented Unix.]

A door had slammed shut for Thompson and Ritchie in March of 1969, when their employer, the American Telephone & Telegraph Co., withdrew from a collaborative project with the Massachusetts Institute of Technology and General Electric to create an interactive time-sharing system called Multics, which stood for "Multiplexed Information and Computing Service." Time-sharing, a technique that lets multiple people use a single computer simultaneously, had been invented only a decade earlier. Multics was to combine time-sharing with other technological advances of the era, allowing users to phone a computer from remote terminals and then read e-mail, edit documents, run calculations, and so forth. It was to be a great leap forward from the way computers were mostly being used, with people tediously preparing and submitting batch jobs on punch cards to be run one by one. -

Practical Session 1, System Calls: A Few Administrative Notes…

Operating Systems, 142
TAs: Vadim Levit, Dan Brownstein, Ehud Barnea, Matan Drory and Yerry Sofer
Practical Session 1, System Calls

A few administrative notes…
• Course homepage: http://www.cs.bgu.ac.il/~os142/
• Contact staff through the dedicated email: [email protected] (format the subject of your email according to the instructions listed in the course homepage)
• Assignments: Extending xv6 (a pedagogical OS). Submission in pairs. Frontal checking: 1. Assume the grader may ask anything. 2. Must register to exactly one checking session.

System Calls
• A system call is an interface between a user application and a service provided by the operating system (or kernel).
• These can be roughly grouped into five major categories: 1. Process control (e.g. create/terminate process) 2. File management (e.g. read, write) 3. Device management (e.g. logically attach a device) 4. Information maintenance (e.g. set time or date) 5. Communications (e.g. send messages)

System Calls - motivation
• A process is not supposed to access the kernel. It can't access the kernel memory or functions.
• This is strictly enforced ('protected mode') for good reasons. Unrestricted access could:
• Jeopardize other running processes.
• Cause physical damage to devices.
• Alter system behavior.
• The system call mechanism provides a safe way to request specific kernel operations.

System Calls - interface
• Calls are usually made with C/C++ library functions:
[Figure: path of a getpid() call. User space: User Application, C Library (load arguments, eax = _NR_getpid, enter kernel mode with int 80). Kernel space: System Call, Sys_Call_table[eax], call sys_getpid(), syscall_exit, resume_userspace, return.]
Remark: Invoking int 0x80 is common, although newer techniques for "faster" control transfer are provided by both AMD's and Intel's architectures. -
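
The library-function interface described in these slides can be tried directly. The following is a small Python sketch, not taken from the course material: on a POSIX system, `os.getpid`, `os.pipe`, `os.write`, `os.read` and `os.close` are thin wrappers over the same-named C library functions, which in turn issue the system calls. The function name `demo_system_calls` and the message text are made up for illustration, and how the C library enters the kernel (int 0x80 or a newer instruction) stays hidden from the caller.

```python
import os


def demo_system_calls():
    """Issue a few system calls through library wrappers and report the results."""
    results = {}

    # getpid() is the call traced in the slide's diagram: the kernel returns
    # the identifier of the calling process.
    results["pid"] = os.getpid()

    # pipe() asks the kernel for a communication channel; write() and read()
    # then move bytes through kernel-managed buffers.
    read_fd, write_fd = os.pipe()
    os.write(write_fd, b"hello kernel")
    os.close(write_fd)
    results["message"] = os.read(read_fd, 64)
    os.close(read_fd)

    return results


if __name__ == "__main__":
    print(demo_system_calls())
```

The process never touches kernel memory here; it only hands arguments to the wrappers and receives results, which is the safe request mechanism the slides describe.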

Zenon Manual Programming Interfaces

zenon manual Programming interfaces v.7.11

©2014 Ing. Punzenberger COPA-DATA GmbH All rights reserved. Distribution and/or reproduction of this document or parts thereof in any form are permitted solely with the written permission of the company COPA-DATA. The technical data contained herein has been provided solely for informational purposes and is not legally binding. Subject to change, technical or otherwise.

Contents
1. Welcome to COPA-DATA help
2. Programming interfaces
3. Process Control Engine (PCE)
3.1 The PCE Editor
3.1.1 The Taskmanager
3.1.2 The editing area
3.1.3 The output window
3.1.4 The menus of the PCE Editor -

Cannibal Code

Kill It with Fire © 2021 by Marianne Bellotti

2 CANNIBAL CODE

If technology advances in cycles, you might assume the best legacy modernization strategy is to wait a decade or two for paradigms to shift back and leapfrog over. If only! For all that mainframes and clouds might have in common in general, they have a number of significant differences in the implementation that block easy transitions. While the architectural philosophy of time-sharing has come back in vogue, other components of technology have been advancing at a different pace. You can divide any single product into an infinite number of elements: hardware, software, interfaces, protocols, and so on. Then you can add specific techniques within those categories. Not all cycles are in sync. The odds of a modern piece of technology perfectly reflecting an older piece of technology are as likely as finding two days where every star in the sky had the exact same position. So, the takeaway from understanding that technology advances in cycles isn't that upgrades are easier the longer you wait, it's that you should avoid upgrading to new technology simply because it's new.

Alignable Differences and User Interfaces

Without alignable differences, consumers can't determine the value of the technology in which they are being asked to invest. Completely innovative technology is not a viable solution, because it has no reference point to help it find its market. We often think of technology as being streamlined and efficient with no unnecessary bits without a clear purpose, but in fact, many forms of technology you depend on have vestigial features either inherited from other older forms of technology or imported later to create the illusion of feature parity. -

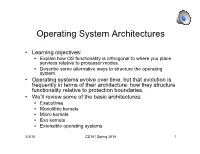

Operating System Architectures

Operating System Architectures
• Learning objectives:
• Explain how OS functionality is orthogonal to where you place services relative to processor modes.
• Describe some alternative ways to structure the operating system.
• Operating systems evolve over time, but that evolution is frequently in terms of their architecture: how they structure functionality relative to protection boundaries.
• We'll review some of the basic architectures:
• Executives
• Monolithic kernels
• Micro kernels
• Exo kernels
• Extensible operating systems
2/3/15 CS161 Spring 2015

OS Executives
• Think MS-DOS: With no hardware protection, the OS is simply a set of services:
• Live in memory
• Applications can invoke them
• Requires a software trap to invoke them.
• Live in same address space as application
[Figure labels: Applications (1-2-3, QBasic, WP), Command, Software Traps, Operating System routines]

Monolithic Operating System
• Traditional architecture
• Applications and operating system run in different address spaces.
• Operating system runs in privileged mode; applications run in user mode.
[Figure labels: Applications; Operating System (file system, processes, networking, device drivers, virtual memory)]

Microkernels (late 80's and on)
• Put as little of OS as possible in privileged mode (the microkernel).
• Implement most core OS services as user-level servers.
• Only microkernel really knows about hardware
• File system, device drivers, virtual memory all implemented in unprivileged servers.
• Must use IPC (interprocess communication) to communicate among different servers.
[Figure labels: Applications, Process management, Virtual memory, file system, networking; Microkernel]

Microkernels: Past and Present
• Much research and debate in late 80's early 90's
• Pioneering effort in Mach (CMU). -

A Hardware Preemptive Multitasking Mechanism Based on Scan-Path Register Structure for FPGA-Based Reconfigurable Systems

A Hardware Preemptive Multitasking Mechanism Based on Scan-path Register Structure for FPGA-based Reconfigurable Systems

S. Jovanovic, C. Tanougast and S. Weber, Université Henri Poincaré, LIEN, 54506 Vandoeuvre lès Nancy, France [email protected]

Abstract

In this paper, we propose a hardware preemptive multitasking mechanism which uses a scan-path register structure and allows identifying the total register size of a task for FPGA-based reconfigurable systems. The main objective of this preemptive mechanism is to suspend a low-priority hardware task, replace it with a high-priority task, and restart it at another time (and/or from another area of the FPGA in FPGA-based designs). The main advantages of the proposed method are that it provides an attractive way to save and restore the context of a hardware task without freezing other tasks during preemption phases, and that it has a small area overhead. We show its feasibility by allowing us to design a simple computing example as well as the one of the [...]

[...] all the registers as well as all memories used in the circuit and to determine the starting point of the restoration process. On the other hand, the goal of the restoration is to restore the task's processing context as it was before its interruption, in order to continue the execution previously stopped. Several techniques allowing hardware preemption are proposed in the literature. The pros and cons of the most used approaches are discussed in the following.

The readback approach for saving and storing the context of an outgoing task is one of the approaches most used for FPGA-based designs. No additional hardware structures, simple implementation, no extra design effort and no extra hardware consumption are the most important advantages of this approach.