Ad Empathy: a Design Fiction

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Advanced Design Practices for Sharing Futures: a Focus on Design Fiction

ADVANCED DESIGN PRACTICES FOR SHARING FUTURES: A FOCUS ON DESIGN FICTION Manuela Celi Dipartimento di Design – Politecnico di Milano, [email protected] Elena Formia Dipartimento di Architettura – Alma Mater Università di Bologna [email protected] ABSTRACT Holding different languages and planning tomorrow products, designers occupy a dialectical space between the world of today and the one that could be. Advanced design practices are oriented to define new innovation paths with a long time perspective that calls for a cooperation with the Future studies’ discipline. Advanced design and Anticipation have frequently parallel objectives, but face them from a different point of view. Design is often limited to the manipulation of visual or tangible aspects of a project, while future studies are seen as an activity oriented to policy that occurs in advance of actual outcomes and that is very distant from a concrete realization. Even with these distinctions they are both involved with future scenarios building and the necessity to make informed decisions about the common future. Taking into account this cultural framework, the main objective of the study is to understand, and improve, the role of Advanced design as facilitator in the dynamics of shaping, but mostly sharing possible visions of the future. In order to explore this value, the paper identifies the specific area of Design fiction as an illustrative field of this approach. Emerged in the last decade as “a discursive space within which new forms of cultural artefact (futures) might emerge”, Design fiction is attracting multi-disciplinary attention for its ability to inform the creation of a fictional world.

Design Fiction Is Not Necessarily About the Future

Design Fiction is Not Necessarily About the Future. Björn Franke, Royal College of Art, bjorn.franke@rca.ac.uk. Swiss Design Network Conference 2010. Discussions about fictional approaches in design are often centred on the Future or the New. These […] and the fictional element seems to be reduced […] without being exploited for future speculation or future studies. In understanding fictional design as poetic design, we may also think of design as a form of philosophical inquiry. Keywords: Design Theory, Aesthetics, Fiction, Philosophy, Epistemology. Design is an activity that is fundamentally concerned with something that does not, but could exist. It is an inventive activity, which deals with imagining alternative worlds rather than investigating the existing one. Therefore, it is concerned with the possible rather than the real. The investigation of the real and the possible are fundamentally different activities of inquiry, a distinction famously made by Aristotle. He distinguishes between the historian, who is concerned with what has happened (the real and particular) and the poet, who is concerned with what could happen (the possible and universal). Because it deals with universals, for Aristotle, poetry is an activity similar to philosophy.1 The distinction between the possible and the real, or fiction and reality, is one of the most common ways to classify something as fictional. Common design activity is not only concerned with possibilities but also with realising these possibilities. In other words, it is concerned with making the possible real. Design as a fictional activity would therefore need to remain in the realm of the possible without entering the realm of the real (although it can, of course, influence the realm of the real as fictional literature does).

Fictionation Idea Generation Tool for Product Design Education Utilizing What-If Scenarios of Design Fiction: a Mixed Method Study

FICTIONATION IDEA GENERATION TOOL FOR PRODUCT DESIGN EDUCATION UTILIZING WHAT-IF SCENARIOS OF DESIGN FICTION: A MIXED METHOD STUDY A THESIS SUBMITTED TO THE GRADUATE SCHOOL OF NATURAL AND APPLIED SCIENCES OF MIDDLE EAST TECHNICAL UNIVERSITY BY ÜMİT BAYIRLI IN PARTIAL FULFILLMENT OF THE REQUIREMENTS FOR THE DEGREE OF DOCTOR OF PHILOSOPHY IN INDUSTRIAL DESIGN SEPTEMBER 2020 Approval of the thesis: FICTIONATION IDEA GENERATION TOOL FOR PRODUCT DESIGN EDUCATION UTILIZING WHAT-IF SCENARIOS OF DESIGN FICTION: A MIXED METHOD STUDY submitted by ÜMİT BAYIRLI in partial fulfillment of the requirements for the degree of Doctor of Philosophy in Department of Industrial Design, Middle East Technical University by, Prof. Dr. Halil Kalıpçılar Dean, Graduate School of Natural and Applied Sciences Prof. Dr. Gülay Hasdoğan Head of the Department, Industrial Design Assoc. Prof. Dr. Naz A.G.Z. Börekçi Supervisor, Department of Industrial Design, METU Examining Committee Members: Assoc. Prof. Dr. Pınar Kaygan Department of Industrial Design, METU Assoc. Prof. Dr. Naz A.G.Z. Börekçi Department of Industrial Design, METU Prof. Dr. Hanife Akar Department of Educational Sciences, METU Assoc. Prof. Dr. Dilek Akbulut Department of Industrial Design, Gazi University Assist. Prof. Dr. Canan E. Ünlü Department of Industrial Design, TED University Date: 18.09.2020 I hereby declare that all information in this document has been obtained and presented in accordance with academic rules and ethical conduct. I also declare that, as required by these rules and conduct, I have fully cited and referenced all material and results that are not original to this work. Name, Last name: Ümit Bayırlı Signature: iv ABSTRACT FICTIONATION IDEA GENERATION TOOL FOR PRODUCT DESIGN EDUCATION UTILIZING WHAT-IF SCENARIOS OF DESIGN FICTION: A MIXED METHOD STUDY Bayırlı, Ümit Doctor of Philosophy, Department of Industrial Design Supervisor: Assoc. -

Twelfth International Conference on Design Principles & Practices

Twelfth International Conference on Design Principles & Practices “No Boundaries Design” ELISAVA Barcelona School of Design and Engineering | Barcelona, Spain | 5–7 March 2018 www.designprinciplesandpractices.com www.facebook.com/DesignPrinciplesAndPractices @designpap | #DPP18 Twelfth International Conference on Design Principles & Practices www.designprinciplesandpractices.com First published in 2018 in Champaign, Illinois, USA by Common Ground Research Networks, NFP www.cgnetworks.org © 2018 Common Ground Research Networks All rights reserved. Apart from fair dealing for the purpose of study, research, criticism, or review as permitted under the applicable copyright legislation, no part of this work may be reproduced by any process without written permission from the publisher. For permissions and other inquiries, please contact [email protected]. Common Ground Research Networks may at times take pictures of plenary sessions, presentation rooms, and conference activities which may be used on Common Ground’s various social media sites or websites. By attending this conference, you consent and hereby grant permission to Common Ground to use pictures which may contain your appearance at this event. Dear Conference Attendees, Welcome to Barcelona! We hope you will enjoy the coming three days of debate, presentations, and plenaries that will bring together academics, professionals, researchers, and practitioners to explore the present and the future of design around the world. With this, the Twelfth Conference on Design Principles and Practices, we wanted to prompt some reflection on the traditional boundaries collapsing between people, things, ideas, and places in the face of new forces of technological, political, social, and cultural evolution. Often it seems that we are losing our awareness of what could be our future and our role and responsibility to participate in its building. -

Design Fiction a Short Essay on Design, Science, Fact and Fiction

Design Fiction: A short essay on design, science, fact and fiction. Julian Bleecker, March 2009. “Fiction is evolutionarily valuable because it allows low-cost experimentation compared to trying things for real.” Dennis Dutton, overheard on Twitter http://cli.gs/VvrmvQ Design allows you to use your imagination and creativity explicitly. Think as a designer thinks. Be different and think different. Make new, unexpected things come to life. Tell new stories. Reveal new experiences, new social practices, or that reflect upon today to contemplate innovative, new, habitable futures. Toss out the bland, routine, “proprietary” processes. Take some new assumptions for a walk. Try on a different set of specifications, goals and principles. (My hunch is that if design continues to be applied like bad fashion to more areas of human practice, it will become blanched of its meaning over time, much as the application of e- or i- or interactive- or digital- to anything and everything quickly becomes another “and also” type of redundancy.) When something is “designed” it suggests that there is some thoughtful exploration going on. Assuming design is about linking the imagination to its material form, when design is attached to something, like business or finance, we can take that to mean that there is some ambition to move beyond the existing ways of doing things, toward something that adheres to different principles and practices. Things get done differently somehow, or with a spirit that means to transcend merely following pre-defined steps. Design seems to be a notice that says there is some purposeful reflection and consideration going on expressed as the thoughtful, imaginative and material craft work activities of a designer.

Bizarre Bio-Citizens and the Future of Medicine. the Works of Alexandra

PRZEGLĄD KULTUROZNAWCZY NR 1 (43) 2020, s. 55–69 doi:10.4467/20843860PK.20.004.11932 www.ejournals.eu/Przeglad-Kulturoznawczy/ W KRĘGU IDEI http://orcid.org/0000-0003-4867-1105 Joanna Jeśman BIZARRE BIO-CITIZENS AND THE FUTURE OF MEDICINE. THE WORKS OF ALEXANDRA DAISY GINSBERG AND AGI HAINES IN THE CONTEXT OF SPECULATIVE DESIGN AND BIOETHICS Abstract: The article concerns speculative design in the context of bioethics. The author analyzes and interprets projects by designers Alexandra Daisy Ginsberg and Agi Haines in relation to modern biotechnologies, future scenarios of medicine and machine ethics. Keywords: speculative design, bioethics, bio art, biotechnology One of the key features of the Speculative Turn is precisely that the move toward realism is not a move toward the stuffy limitations of common sense, but quite often a turn toward the downright bizarre.1 Speculative design, critical design, discursive design: these are just some of the names used to describe designing practices that very strongly resemble art & science. The three terms used here are not synonymous and will be defined further in the text but they do have certain things in common. The projects are mostly exhibited in art galleries and museums, their main purpose is not commercial, they refer to the future rather than the present and they are strongly embedded in science and technology. When we think about mainstream design, such as industrial, fashion, software, interface, graphic or communication design, these fields share some features: they have to be useful, and that means practical, functional, utilitarian, pragmatic and applicable. Whereas the projects analyzed in this text are the exact opposite of practical, func-

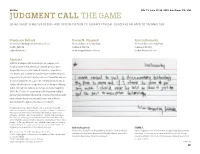

Judgment Call the Game

Abilities DIS ’19, June 23–28, 2019, San Diego, CA, USA JUDGMENT CALL THE GAME USING VALUE SENSITIVE DESIGN AND DESIGN FICTION TO SURFACE ETHICAL CONCERNS RELATED TO TECHNOLOGY Stephanie Ballard Karen M. Chappell Kristin Kennedy University of Washington Information School Microsoft Ethics & Society Team Microsoft Ethics & Society Team Seattle, WA USA Redmond, WA USA Redmond, WA USA [email protected] [email protected] [email protected] Abstract Artificial intelligence (AI) technologies are complex sociotechnical systems that, while holding much promise, have frequently caused societal harm. In response, corporations, non-profits, and academic researchers have mobilized to build responsible AI, yet how to do this is unclear. Toward this aim, we designed Judgment Call, a game for industry product teams to surface ethical concerns using value sensitive design and design fiction. Through two industry workshops, we found Judgment Call to be effective for considering technology from multiple perspectives and identifying ethical concerns. This work extends value sensitive design and design fiction to ethical AI and demonstrates the game’s effective use in industry. Permission to make digital or hard copies of all or part of this work for personal or classroom use is granted without fee provided that copies are not made or distributed for profit or commercial advantage and that copies bear this notice and the full citation on the first page. Copyrights for components of this work owned by others than the author(s) must be honored. Abstracting with credit is permitted. To copy otherwise, or republish, to post on servers or to redistribute to lists, requires prior specific permission and/or a fee.

A Pragmatics Framework for Design Fiction

A PRAGMATICS FRAMEWORK FOR DESIGN FICTION Joseph Lindley HighWire Centre for Doctoral Training [email protected] (Lancaster University) ABSTRACT Design fiction is often defined as the “deliberate use of diegetic prototypes to suspend disbelief about change” (Sterling 2012). Practically speaking, design fictions can be seen as “a conflation of design, science fact, and science fiction” (Bleecker 2009, p. 6) where fiction is employed as a medium “not to show how things will be but to open up a space for discussion” (Dunne & Raby 2013, p.51). The concept has gained traction in recent years, with a marked increase in published research on both the meta-theory of the practice itself and also studies of using it in a variety of contexts. However, the field is in a formative period. Hales talks about the term 'design fiction' as being “enticing and provocative, yet it still remains elusive” (2013, p.1) whereas Markussen & Knutz sum up this epoch in the history of design fiction by saying “It is obvious from the growing literature that design fiction is open to several different interpretations, ideologies and aims.” (2013, p.231) It seems, therefore, that design fiction currently occupies a liminal space between the excitement of possibility and the challenges of divergence. In this paper I will highlight sites of ambiguity and describe the disparate nature of design fiction theory and practice in order to illuminate the inherent complexities. Alongside I suggest elements of a ‘pragmatics framework’ for design fiction in order to strengthen foundations by facilitating a reduction in ambiguity, whilst being careful not to over specify and therefore constrain the ability to grow and adapt.

Tom Bieling Fact and Fiction – Design As a Search for Reality on The

FLUSSER STUDIES 29 Tom Bieling Fact and Fiction – Design as a Search for Reality on the Circuit of Lies With the approach of "Speculative Design"1, a new variety was introduced into Design and related fields of Research a few years ago, which has been continuously discussed ever since. Speculative Design is a design practice aiming at exploring and criticising possible futures by creating speculative, often provocative, scenarios narrated through designed artifacts.2 Little attention3 has been paid here so far (although there are numerous overlaps) to Vilém Flusser's different approaches to the fictional, the fabulative, the speculative.4 What is Flusser’s relevance as a philosopher, media and communication theorist, as well as essayist and writer today, nearly thirty years after his death? And how can his reflections be opened up to the discourses and practices around Speculative Design? Design as an Epistemic Practice of the Speculative The epistemic potentials of Speculative Design are increasingly recognized within the wider academic sphere, not least in the area of design research.5 Generally speaking Design wishes to develop (counter) narratives to demonstrate (alternative) proposals for the future and to furthermore provide impetus for their implementation.6 This raises attention to the discursive, critical and fictional practices of design, as its tools and strategies often come along with a narrative, speculative and interrogative design methodology. In recent years, a growing number of research and knowledge fields from within and beyond design research have been devoting themselves to “questioning” 1 This also refers to equivalent terms listed below. 2 “This form of design thrives on imagination and aims to open up new perspectives on what are sometimes called wicked problems, to create spaces for discussion and debate about alternative ways of being, and to inspire and encourage people’s imaginations to flow freely.

Immersive Design Fiction for Experiential Futures in the Classroom

Immersive Design Fiction for Experiential Futures in the Classroom. Joshua McVeigh-Schultz, San Francisco State University, San Francisco, CA 94132, USA, [email protected] Abstract: Immersive design fictions (IDFs) extend methods of VR prototyping by placing speculative interfaces and experiences within a virtual world. In particular, IDFs position participants as characters in a fictional storyworld with interactive elements. With this approach, researchers and practitioners can reach beyond the diegetic object to explore a richer palette of experiential phenomena, such as embodied interactions with objects, environments, and other agents. In this way, IDFs enable participants and creators to think speculatively with the body. Likewise, IDFs are particularly fruitful as vehicles for critical discussion in the design classroom, unlocking new kinds of inferential activity and new pathways for unpacking the social implications of a design fiction scenario. These points are demonstrated through a range of examples from recent student work, including: climate futures, COVID-19 sanitization rituals, speculative automation services, familial relationships with robots, and modular housing technologies. Author Keywords: design fiction; immersive design fiction; virtual reality; speculative design; experiential futures. Introduction: Julian Bleecker’s canonical essay argued that any story designers tell about a new technology or interface is also a story about the interaction rituals1 (the protocols, routines, and social meanings) that we imagine accompanying and evolving alongside an emerging technology or novel interface [2]. Immersive design fictions (IDFs) use virtual reality (VR) to place speculative interaction rituals within an immersive storyworld [17]. By situating design fiction within VR, IDFs present a “slice of life” in a fictional world and offer a more embodied lens for grappling with the implications of design fiction scenarios.

Research Fiction and Thought Experiments in Design

Full text available at: http://dx.doi.org/10.1561/1100000070 Research Fiction and Thought Experiments in Design Other titles in Foundations and Trends® in Human-Computer Interaction HCI’s Making Agendas Jeffrey Bardzell, Shaowen Bardzell, Cindy Lin, Silvia Lindtner and Austin Toombs ISBN: 978-1-68083-372-0 A Survey of Value Sensitive Design Methods Batya Friedman, David G. Hendry and Alan Borning ISBN: 978-1-68083-290-7 Communicating Personal Genomic Information to Non-experts: A New Frontier for Human-Computer Interaction Orit Shaer, Oded Nov, Lauren Westendorf and Madeleine Ball ISBN: 978-1-68083-254-9 Personal Fabrication Patrick Baudisch and Stefanie Mueller ISBN: 978-1-68083-258-7 Canine-Centered Computing Larry Freil, Ceara Byrne, Giancarlo Valentin, Clint Zeagler, David Roberts, Thad Starner and Melody Jackson ISBN: 978-1-68083-244-0 Exertion Games Florian Mueller, Rohit Ashok Khot, Kathrin Gerling and Regan Mandryk ISBN: 978-1-68083-202-0 Research Fiction and Thought Experiments in Design Mark Blythe School of Design, Northumbria University, UK, [email protected] Enrique Encinas School of Design, Northumbria University, UK, [email protected] Boston — Delft Foundations and Trends® in Human-Computer Interaction Published, sold and distributed by: now Publishers Inc. PO Box 1024 Hanover, MA 02339 United States Tel. +1-781-985-4510 www.nowpublishers.com [email protected] Outside North America: now Publishers Inc.

The Role of Fiction in Experiments Within Design, Art & Architecture

The Role of Fiction in Experiments within Design, Art & Architecture EVA KNUTZ, KOLDING SCHOOL OF DESIGN [email protected] THOMAS MARKUSSEN, KOLDING SCHOOL OF DESIGN [email protected] POUL RIND CHRISTENSEN, KOLDING SCHOOL OF DESIGN [email protected] ABSTRACT This paper offers a typology for understanding design fiction as a new approach in design research. The typology allows design researchers to explain design fictions according to 5 criteria: (1) “What if scenarios” as the basic construal principle of design fiction; (2) the manifestation of critique; (3) design aims; (4) materializations and forms; and (5) the aesthetic of design fictions. The typology is premised on the idea that fiction may integrate with reality in many different ways in design experiments. The explanatory power of the typology is exemplified through the analyses of 6 […] […] airliners transporting people across the Atlantic. The ability to use design fictions for speculating about alternative presences or possible futures is at the core of design practice. What is new is that it is now claimed also to be a viable road for producing valid knowledge in design research (Grand & Wiedmer, 2010). In this paper, we argue that in order to establish design fiction as a promising new approach to design research, there is a need to develop a more detailed understanding of the role of fiction in design experiments. Some attempts have already been made. DiSalvo (2012) thus accounts for two forms of design fiction in terms of what he calls ‘spectacle’ and ‘trope’. While DiSalvo makes a valuable contribution, his treatment is too limited for understanding other forms of design fiction.