News@UK: the Newsletter of UKUUG, the UK’s Unix and Open Systems Users Group Published Electronically At

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Download This Issue

The Open Source Business Resource, March 2010
Contents: Editorial (Dru Lavigne, Thomas Kunz, François Lefebvre); Open is the New Closed: How the Mobile Industry uses Open Source to Further Commercial Agendas (Andreas Constantinou); Establishing and Engaging an Active Open Source Ecosystem with the BeagleBoard (Jason Kridner); Low Cost Cellular Networks with OpenBTS (David Burgess); CRC Mobile Broadcasting F/LOSS Projects (François Lefebvre); Experiences From the OSSIE Open Source Software Defined Radio Project (Carl B. Dietrich, Jeffrey H. Reed, Stephen H. Edwards, Frank E. Kragh); The Open Source Mobile Cloud: Delivering Next-Gen Mobile Apps and Systems (Hal Steger); The State of Free Software in Mobile Devices Startups (Bradley M. Kuhn); Recent Reports; Upcoming Events; Contribute.
Editorial: Dru Lavigne, Thomas Kunz, and François Lefebvre discuss the editorial theme of Mobile.
Open is the New Closed: How the Mobile Industry uses Open Source to Further Commercial Agendas: Andreas Constantinou, Research Director at VisionMobile, examines the many forms that governance models can take and how they are used in the mobile industry to tightly control the roadmap and application of open source projects.
Establishing and Engaging an Active Open Source Ecosystem with the BeagleBoard: Jason Kridner, open platforms principal architect at Texas Instruments Inc., introduces the BeagleBoard open source community.
Low Cost Cellular Networks with OpenBTS: David Burgess, Co-Founder of The OpenBTS Project, describes how an open source release may have saved the project.
PUBLISHER: The Open Source Business Resource is a monthly publication of the Talent First Network. Archives are available at the website: http://www.osbr.ca
EDITOR: Dru Lavigne [email protected]

Annual Report 2006

Annual Report 2006
Table of contents
Foreword: Letter from the Chairman, Dave Neary
A year in review: 2006, a year in GNOME; Distributions in 2006
Events and community initiatives: GUADEC, The GNOME Conference; GNOME hackers descend on MIT Media Center; GNOME User Groups; The www.gnome.org revamp; GNOME platform; GNOME Foundation Administrator
Foundation development: The Women’s Summer Outreach Program; The GNOME Mobile and Embedded Initiative; The GNOME Advisory Board
PHOTO: The GNOME Foundation Board and Advisory Board members, by David Zeuthen (continued on the inside back cover)
Dear Friends, All traditions need a starting point, they say. What you now hold in your hands is the first annual report of the GNOME Foundation, at the end of what has been an eventful year for us. Each year brings its challenges and rewards for the members of this global project. This year, many of our biggest challenges are in the legal arena. European countries have been passing laws to conform with the European Union Copyright Directive, and some, including France, have brought into law provisions which we as software developers find it hard to understand, but which appear to make much of what we do illegal. We have found ourselves in the center of patent wars as bigger companies jockey for position with offerings based on our hard work. And we are scratching our heads trying to figure out how to deal with the constraints of DRM and patents in multimedia, while still offering our users access to their media files.

Praise for the Official Ubuntu Book

Praise for The Official Ubuntu Book
“The Official Ubuntu Book is a great way to get you started with Ubuntu, giving you enough information to be productive without overloading you.” - John Stevenson, DZone Book Reviewer
“OUB is one of the best books I’ve seen for beginners.” - Bill Blinn, TechByter Worldwide
“This book is the perfect companion for users new to Linux and Ubuntu. It covers the basics in a concise and well-organized manner. General use is covered separately from troubleshooting and error-handling, making the book well-suited both for the beginner as well as the user that needs extended help.” - Thomas Petrucha, Austria Ubuntu User Group
“I have recommended this book to several users who I instruct regularly on the use of Ubuntu. All of them have been satisfied with their purchase and have even been able to use it to help them in their journey along the way.” - Chris Crisafulli, Ubuntu LoCo Council, Florida Local Community Team
“This text demystifies a very powerful Linux operating system ... in just a few weeks of having it, I’ve used it as a quick reference a half dozen times, which saved me the time I would have spent scouring the Ubuntu forums online.” - Darren Frey, Member, Houston Local User Group
The Official Ubuntu Book, Sixth Edition
Benjamin Mako Hill, Matthew Helmke, Amber Graner, Corey Burger. With Jonathan Jesse, Kyle Rankin, and Jono Bacon
Upper Saddle River, NJ • Boston • Indianapolis • San Francisco • New York • Toronto • Montreal • London • Munich • Paris • Madrid • Capetown • Sydney • Tokyo • Singapore • Mexico City
Many of the designations used by manufacturers and sellers to distinguish their products are claimed as trademarks.

Debian

Debian
Part of the Unix-like family
Screenshot: Debian 7.0 (Wheezy) with GNOME 3
Company / developer: Debian Project
Working state: Current
Source model: Open-source
Initial release: September 15, 1993 [1]
Latest release: 7.5 (Wheezy) (April 26, 2014) [2]
Latest preview: 8.0 (Jessie) (perpetual beta)
Available in: 73 languages
Update method: APT (several front-ends available)
Package manager: dpkg
Supported platforms: IA-32, x86-64, PowerPC, SPARC, ARM, MIPS, S390
Kernel type: Monolithic: Linux, kFreeBSD; Micro: Hurd (unofficial)
Userland: GNU
Default user interface: GNOME
License: Free software (mainly GPL). Proprietary software in a non-default area. [3]
Official website: www.debian.org
Debian (/ˈdɛbiən/) is an operating system composed of free software mostly carrying the GNU General Public License, and developed by an Internet collaboration of volunteers aligned with the Debian Project. It is one of the most popular Linux distributions for personal computers and network servers, and has been used as a base for other Linux distributions.
Debian was announced in 1993 by Ian Murdock, and the first stable release was made in 1996. The development is carried out by a team of volunteers guided by a project leader and three foundational documents. New distributions are updated continually and the next candidate is released after a time-based freeze. As one of the earliest distributions in Linux's history, Debian was envisioned to be developed openly in the spirit of Linux and GNU. This vision drew the attention and support of the Free Software Foundation, who sponsored the project for the first part of its life.

Event Information

SPEAKERS AND TALKS
Mats Petersson – AMD: How Hardware Supported Virtualization in Xen works
Michael Meeks – Novell: OpenOffice.org beyond 2.0...
Neil McGovern – Fotopic.net: 30 million and counting: An insight into a enterprise level open source systems
Paul Sladen: TBC
Phil Hands: TBC
Ruediger Berlich – Karlsruhe: ROOT and PROOF
Steve Coast – OpenStreetMap: OpenStreetMap: The First Year
Stuart Yeates - OSS Watch: The State of OS in Higher and Further Education
Mark Leith: MySQL Roadmap
Ted Haeger – Novell: SUSE Linux Enterprise Desktop: Under the Hood; Desktop Linux Innovation at Novell
Speaker TBC: What are all these distributions and how should I choose one?; Securing Linux; Getting started with MySQL (TBC)
TUTORIALS
For Thursday 29th June we are pleased to be able to offer three tutorials. Descriptions and tutor biographies are on the web site.
Tutorial T1. - Thursday 29th June full day tutorial 'Building and Maintaining RPM Packages'. Jos Vos, X/OS Experts in Open Systems BV
Overview: Attendees will learn how to create, modify and use RPM packages. The RPM Package Management system (RPM) is used for package management on most Linux distributions. It can also be used for package management on other UNIX systems and for packaging non-free (binary) software. The tutorial will focus on creating RPM packages for Fedora and Red Hat Enterprise Linux systems, but the theory will also apply to package software for other distributions.
Tutorial T2. - Thursday 29th June half day tutorial (a.m.) 'Optimising MySQL'. Mark Leith, MySQL
Overview: This tutorial examines the many aspects involved when optimising a MySQL application, the MySQL server, and its environment.

Curriculum Vitae ( PDF )

Simon Pascal Klein
Last updated: Tuesday, 6 May 2014
http://klepas.org · [email protected] · +61 420 9797 38
Nationality: German (Australian permanent resident)
Current residence: Canberra, Australia
ABN: 94 483 395 962
I’m Pascal, a front-end designer & writer, with a penchant for accessibility and typography. tl;dr? Both in form & content, I build beautiful and usable interfaces.
Skills overview
writing, research, policy advice: Rooted in the open source & web community. Accessibility, documentation, teaching, open source, gender, …
front-end web development & accessibility: Semantic, standards-based, accessible, and responsive web development. HTML, CSS, SVG; WCAG2; Drupal, Jekyll, WordPress, …; terminal, git, …
design & media curation: Content & media curation; design direction; podcasting, pedantic copy editing. The GIMP, Photoshop; Inkscape, Illustrator; audio editing, Markdown, …
Experience
2013: Senior Policy Advisor & web specialist, ACT Government. Reporting to the CTO, I worked for the Chief Minister and Treasury Directorate in a policy and research role as a web technologies and accessibility specialist. Work also included web development on internal prototyping projects that underpinned Open Government and Open Data mandates. I also launched the first Australian government GitHub account, for the ACT.
2011: Managing Editor, podcast host & producer, SitePoint. Lead editor & manager for SitePoint’s then new DesignFestival.com. I launched the site’s podcast, producing 15 episodes (hitting 30,000 downloads before leaving this position). Additionally I authored original articles for SitePoint. I also freelanced during this time.
2007–09: Concept Designer, Looking Glass Solutions. Graphic & interface designer, embedded within the development team. LGS was a former web development studio that serviced primarily the Australian Government and Engineers Australia.

The Official Ubuntu Book

Praise for Previous Editions of The Official Ubuntu Book
“The Official Ubuntu Book is a great way to get you started with Ubuntu, giving you enough information to be productive without overloading you.” - John Stevenson, DZone book reviewer
“OUB is one of the best books I’ve seen for beginners.” - Bill Blinn, TechByter Worldwide
“This book is the perfect companion for users new to Linux and Ubuntu. It covers the basics in a concise and well-organized manner. General use is covered separately from troubleshooting and error-handling, making the book well-suited both for the beginner as well as the user that needs extended help.” - Thomas Petrucha, Austria Ubuntu User Group
“I have recommended this book to several users who I instruct regularly on the use of Ubuntu. All of them have been satisfied with their purchase and have even been able to use it to help them in their journey along the way.” - Chris Crisafulli, Ubuntu LoCo Council, Florida Local Community Team
“This text demystifies a very powerful Linux operating system ... In just a few weeks of having it, I’ve used it as a quick reference a half-dozen times, which saved me the time I would have spent scouring the Ubuntu forums online.” - Darren Frey, Member, Houston Local User Group
The Official Ubuntu Book, Seventh Edition
Matthew Helmke, Amber Graner. With Kyle Rankin, Benjamin Mako Hill, and Jono Bacon
Upper Saddle River, NJ • Boston • Indianapolis • San Francisco • New York • Toronto • Montreal • London • Munich • Paris • Madrid • Capetown • Sydney • Tokyo • Singapore • Mexico City
Many of the designations used by manufacturers and sellers to distinguish their products are claimed as trademarks.

The Open Pitt What's Cooking in Linux and Open Source in Western Pennsylvania Issue 12 May 2005

The Open Pitt: What's cooking in Linux and Open Source in Western Pennsylvania. Issue 12, May 2005. www.wplug.org
Book Review: Linux Desktop Garage, by Bobbie Lynn Eicher
Author: Susan Matteson. Publisher: Prentice Hall PTR. ISBN: 0131494198. $29.99, 384 pages, 2005
The goal of Prentice Hall's new Garage Series of books is to provide readers with a practical and entertaining guide to technical topics that are often intimidating to an ordinary reader. As one of the latest releases offered under this banner, the Linux Desktop Garage does this much well. It's not recommended, however, for users who are looking for a guide to more advanced topics and aren't content with just the basic user software available in the open source world.
[…] the author deserves praise is that she put the extra effort in to provide explanations that can be used by both Windows users and those who have experience with Macs.
Most valuable with this book is its structure. Once Linux has been introduced, each chapter that follows is devoted to a type of task that users might want such as word processing, messaging, and games. For someone who's already used to computers but doesn't know anything about Linux equivalents for their usual software, that makes Linux Desktop Garage an excellent tool to get straight to the information they need without getting […]
[…] comes much less valuable. The author, Susan Matteson, may consider herself to be an avid Linux user, but professionally she's a web developer and writer and that bias shows in a way that many in the Linux community won't appreciate. Experienced users are likely to be startled, to say the least, by statements like the following from the preface: “There are command-line programs to do those things, but why be so limited?” The more zealous followers of Richard Stallman are likely to be irritated by her quick dismissal of the claim that the entire operating system shouldn't be called Linux, though perhaps […]

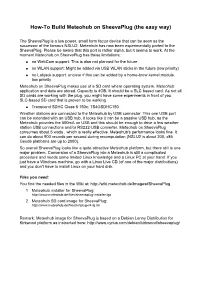

How-To Build Meteohub on Sheevaplug (The Easy Way)

How-To Build Meteohub on SheevaPlug (the easy way)
The SheevaPlug is a low-power, small-form-factor device that can be seen as the successor of the famous NSLU2. Meteohub has now been experimentally ported to the SheevaPlug. Please be aware that this port is still at the alpha stage, but it seems to work. At the moment Meteohub on SheevaPlug has these limitations:
● no WebCam support: this is also not planned for the future
● no WLAN support: might be added via USB WLAN sticks in the future (low priority)
● no Labjack support: unclear if this can be added by a home-brew kernel module (low priority)
Meteohub on SheevaPlug makes use of an SD card on which the operating system, the Meteohub application and the data are stored. Capacity is 4GB. It should be an SLC-based card. As not all SD cards work with the plug, you may need to experiment. SLC-based SD card that is proven to work:
● Transcend SDHC Class 6 150x: TS4GSDHC150
Weather stations are connected to the Meteohub by USB connector. This one USB port can be extended with a USB hub. It looks like it can be a passive USB hub, as the Meteohub provides 500mA on USB and this should be enough to drive a few weather station USB connections and/or RS232-USB converters. Meteohub on SheevaPlug consumes about 5 watts, which is very efficient. Meteohub's performance looks fine. It can do about 900 records per second during recomputation (NSLU2 is about 200, x86 Geode platforms are up to 2000).

A Monitoring System for Intensive Agriculture Based on Mesh Networks and the Android System

Computers and Electronics in Agriculture 99 (2013) 14–20. Contents lists available at ScienceDirect. Journal homepage: www.elsevier.com/locate/compag
A monitoring system for intensive agriculture based on mesh networks and the android system
Francisco G. Montoya a,*, Julio Gómez a, Alejandro Cama a, Antonio Zapata-Sierra a, Felipe Martínez a, José Luis De La Cruz b, Francisco Manzano-Agugliaro a
a Department of Engineering, Universidad de Almería, 04120 Almería, Spain
b Department of Applied Physics, Universidad de Córdoba, Córdoba, Spain
Article history: Received 12 April 2013; received in revised form 12 July 2013; accepted 31 August 2013
Keywords: Wireless sensor network; TinyOS; 6LoWPAN; TinyRPL; Android
Abstract: One of the most important changes in the southeast Spanish lands is the switch from traditional agriculture to agriculture based on the exploitation of intensive farmlands. For this type of farming, it is important to use techniques that improve plantation performance. Web applications, databases and advanced mobile systems facilitate real-time data acquisition for effective monitoring. Moreover, open-source systems save money and facilitate a greater degree of integration and better application development based on the system’s robustness and widespread utility for several engineering fields. This paper presents an application for Android tablets that interacts with an advanced control system based on Linux, Apache, MySQL, PHP, Perl or Python (LAMP) to collect and monitor variables applied in precision agriculture. © 2013 Elsevier B.V. All rights reserved.
1. Introduction
[…] real-time applications that operate using large datasets processed by the device or a cloud server.

Pen Testing with Pwn Plug

toolsmith, ISSA Journal | March 2012
Pen Testing with Pwn Plug
By Russ McRee – ISSA Senior Member, Puget Sound (Seattle), USA Chapter
Prerequisites: Sheevaplug; 4GB SD card (needed for installation)
Dedicated to the memory of Tareq Saade 1983-2012:
This flesh and bone
Is just the way that we are tied in
But there’s no one home
I grieve for you
–Peter Gabriel
As you likely know by now given toolsmith’s position at the back of the ISSA Journal, March’s theme is Advanced Threat Concepts and Cyberwarfare. Well, dear reader, for your pwntastic reading pleasure I have just the topic for you. The Pwn Plug can be considered an advanced threat and useful in tactics that certainly resemble cyberwarfare methodology.
[…] one who owns a Sheevaplug: “Pwn Plug Community Edition does not include the web-based Plug UI, 3G/GSM support, NAC/802.1x bypass.”
For those of you interested in a review of the remaining features exclusive to commercial versions, I’ll post it to my blog on the heels of this column’s publishing.
Dave provided me with a few insights including the Pwn Plug’s most common use cases:
• Remote, low-cost pen testing: penetration test customers save on travel expenses; service providers save on travel time.
• Penetration tests with a focus on physical security and social engineering.
• Data leakage/exfiltration testing: using a variety of covert channels, the Pwn Plug is able to tunnel through many IDS/IPS solutions and application-aware firewalls undetected.
• Information security training: the Pwn Plug touches […]

Plugcomputer

Faculty of Computer Science, Institute of Computer Engineering, Chair of VLSI Design Systems, Diagnostics and Architecture
PLUGCOMPUTER: Old Idea, New Approach
Thomas Gärtner, Dresden, 14.7.2010
Overview: Motivation; Old idea: NSLU2; New approach: PlugComputer; Sources
(TU Dresden, 14.7.2010, PlugComputer, slide 2 of 24)
Motivation: problem statement
How can I make my personal data and files available everywhere and at all times, as efficiently as possible, for myself and for selected people?
• Social networks
• Hosting services such as Flickr, Google Suite, YouTube ...
• Renting a server
Problems:
• Data is handed over to third parties
• The services are attractive to attackers because of their size
Motivation: proposed solution
Idea: your own server in your own secure network, which by now is usually permanently connected to the Internet anyway.
Advantages:
• Restricted physical access for third parties
• Fully customizable
Disadvantages:
• Configuration effort
• Energy consumption
• Noise
• Responsibility for your own data
Old idea: NSLU2, general
With the Network Storage Link from Linksys you can quickly and easily expand the storage capacity of your network by many gigabytes. This small network device connects USB 2.0 hard drives directly to your Ethernet network. [Lina]
Old idea: NSLU2, outside
• 130 mm x 21 mm x 91 mm
• 1x 10/100 RJ-45 Ethernet port
• 2x USB 2.0 port
• 1x power connector
Old idea: NSLU2, inside
• Intel IXP420 (ARMv5TE)
• 133 MHz, later 266 MHz
• 8 MB flash
• 32 MB SDRAM
Old idea: NSLU2, modifications
• The NSLU2 firmware is based on Linux!
• Replaceable by Debian, OpenWrt, SlugOS .